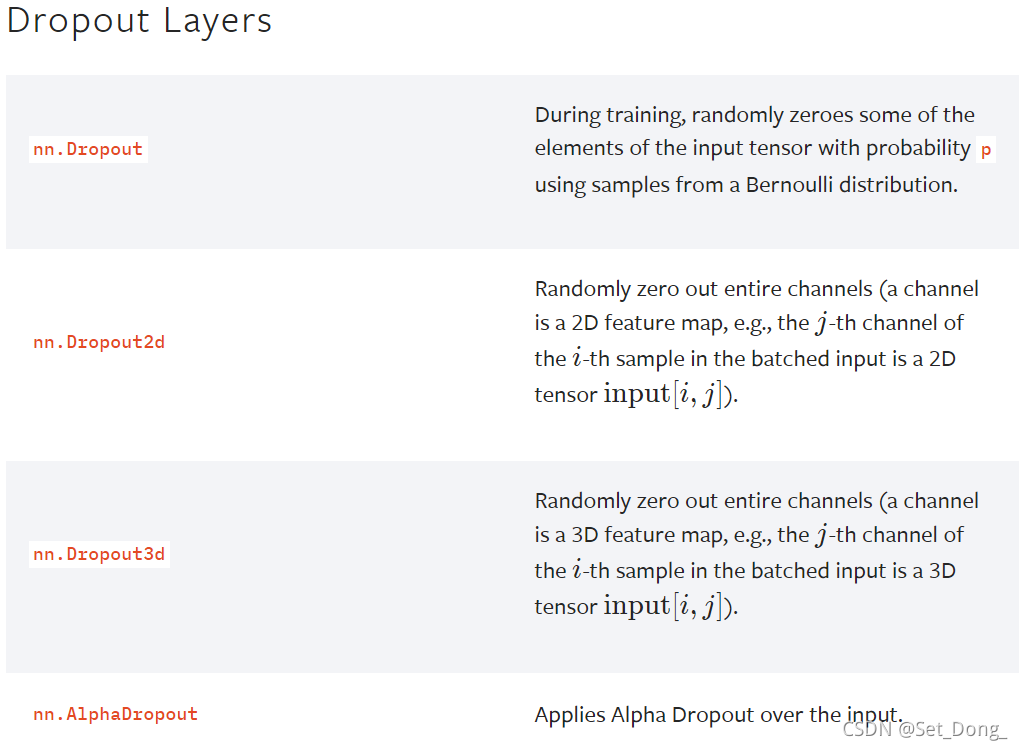

torch.nn provides the following dropout methods:

Take Dropout2d as an example (Dropout2d — PyTorch 1.9.1 documentation).
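As a rough illustration of what Dropout2d does on the 4-D input it is designed for, the sketch below feeds it a tensor of ones and sums each channel. The input shape, the seed, and the variable names are assumptions chosen for the demo and do not come from the original post.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed, only for repeatability

drop2d = nn.Dropout2d(p=0.5)
drop2d.train()  # dropout is only active in training mode

# Hypothetical input: batch of 2 samples, 8 channels, 4x4 feature maps.
x = torch.ones(2, 8, 4, 4)
y = drop2d(x)

# Sum over the spatial dimensions. Each channel is either dropped as a whole
# (sum 0) or kept as a whole and rescaled by 1 / (1 - p) = 2 (sum 4 * 4 * 2 = 32).
channel_sums = y.sum(dim=(2, 3))
print(channel_sums)
```

Every entry of channel_sums is either 0 or 32, which shows that Dropout2d zeroes whole feature maps rather than individual elements.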

An MLP model with a dropout layer added before fc2:

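    import torch.nn as nn
    import torch.nn.functional as F


    class Net_MLP_drop(nn.Module):
        def __init__(self):
            super(Net_MLP_drop, self).__init__()
            self.fc1 = nn.Linear(500, 120)
            # Dropout layer placed before fc2. fc1 outputs a 2-D (N, 120) tensor,
            # for which nn.Dropout is the usual choice; recent PyTorch releases
            # warn about 2-D input to Dropout2d.
            self.drop = nn.Dropout2d(p=0.5)
            self.fc2 = nn.Linear(120, 120)
            self.fc3 = nn.Linear(120, 10)

        def forward(self, x):
            x = x.view(-1, 500)  # flatten each sample to 500 features
            x = F.relu(self.fc1(x))
            x = self.drop(x)  # active only in training mode
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            return x

Note how the module's forward method is defined: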

    def forward(self, input: Tensor) -> Tensor:
        return F.dropout2d(input, self.p, self.training, self.inplace)

From: torch.nn.modules.dropout — PyTorch 1.9.1 documentation

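nn.Dropout only takes effect in training mode and does nothing in test mode, because forward passes self.training through to the functional call. This matches the principle and purpose of dropout.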

If dropout is still active in test mode, accuracy drops considerably.
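The difference between the two modes can be checked directly on a standalone layer. This is a minimal sketch that assumes a plain nn.Dropout with p=0.5 and an all-ones input; both are illustrative choices, not taken from the original post.

```python
import torch
import torch.nn as nn

drop = nn.Dropout(p=0.5)
x = torch.ones(1000)  # hypothetical input, all ones so the effect is easy to see

# Training mode: each element is zeroed with probability p,
# and the survivors are scaled by 1 / (1 - p) = 2.
drop.train()
y_train = drop(x)

# Evaluation mode: the layer passes its input through unchanged.
drop.eval()
y_eval = drop(x)

print(sorted(set(y_train.tolist())))  # values drawn from {0.0, 2.0}
print(torch.equal(y_eval, x))
```

In training mode the output is a random mask of zeros and rescaled values, so predictions made in that mode are noisy. In evaluation mode the output equals the input.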

Switch modes as follows, where net is your model:

Before training the model, call: net.train()

Before predicting with the model, call: net.eval()
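A sketch of where these two calls typically sit in a training and prediction step follows. The model, the random data, the loss, and the optimizer settings are hypothetical stand-ins that only make the example runnable. The torch.no_grad() context is an addition that is common practice during prediction but is not part of the original post.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in model and random data, only to make the sketch runnable.
net = nn.Sequential(
    nn.Linear(500, 120), nn.ReLU(), nn.Dropout(p=0.5), nn.Linear(120, 10)
)
inputs = torch.randn(32, 500)
labels = torch.randint(0, 10, (32,))
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(net.parameters(), lr=0.01)

# Training step: enable dropout before the forward pass.
net.train()
optimizer.zero_grad()
loss = criterion(net(inputs), labels)
loss.backward()
optimizer.step()

# Prediction: disable dropout. torch.no_grad() additionally skips gradient tracking.
net.eval()
with torch.no_grad():
    pred_a = net(inputs).argmax(dim=1)
    pred_b = net(inputs).argmax(dim=1)

# With dropout disabled, two forward passes on the same input agree.
print(torch.equal(pred_a, pred_b))
```

Calling net.train() or net.eval() on the top-level model sets the training flag on every submodule, so a single call covers all dropout layers in the network.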