TensorFlow Lite

https://github.com/tensorflow/tensorflow/tree/master/tensorflow/lite/examples/python/

TensorFlow Lite Python image classification demo

# Get photo

curl https://raw.githubusercontent.com/tensorflow/tensorflow/master/tensorflow/lite/examples/label_image/testdata/grace_hopper.bmp > /tmp/grace_hopper.bmp

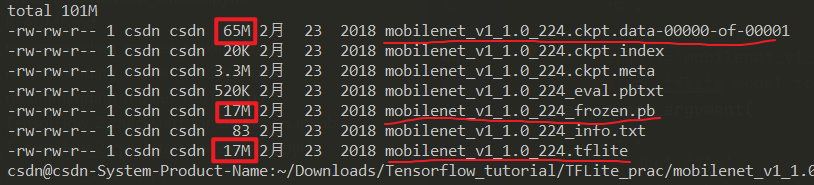

# Get model

curl https://storage.googleapis.com/download.tensorflow.org/models/mobilenet_v1_2018_02_22/mobilenet_v1_1.0_224.tgz | tar xzv -C /tmp

# Get labels

curl https://storage.googleapis.com/download.tensorflow.org/models/mobilenet_v1_1.0_224_frozen.tgz | tar xzv -C /tmp mobilenet_v1_1.0_224/labels.txt

mv /tmp/mobilenet_v1_1.0_224/labels.txt /tmp/

First, download the image, model, and labels above to the local machine.

Then run the following command:

python3 label_image.py --model_file mobilenet_v1_1.0_224/mobilenet_v1_1.0_224.tflite --label_file mobilenet_v1_1.0_224_frozen/mobilenet_v1_1.0_224/labels.txt --image grace_hopper.bmp
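For orientation, the core steps of `label_image.py` (load the model, resize and normalize the image, invoke, read the scores) can be sketched roughly as below. This is a condensed approximation, not the script itself: `normalize` and `classify` are hypothetical helper names, the mean/std of 127.5 are assumed to match the script's default `--input_mean`/`--input_std` for a float model, and `classify` needs NumPy, Pillow, and `tensorflow` installed.

```python
import time


def normalize(pixel, mean=127.5, std=127.5):
    """Map a raw 0-255 pixel value into the [-1, 1] range a float MobileNet expects."""
    return (pixel - mean) / std


def classify(model_file, image_file, mean=127.5, std=127.5):
    """Run one image through a .tflite model; return (scores, elapsed_ms).

    Heavy dependencies are imported lazily so normalize() works on its own.
    """
    import numpy as np
    import tensorflow as tf
    from PIL import Image

    interpreter = tf.lite.Interpreter(model_path=model_file)
    interpreter.allocate_tensors()
    input_details = interpreter.get_input_details()
    output_details = interpreter.get_output_details()

    # The input tensor shape is [1, height, width, 3]; resize the image to match.
    _, height, width, _ = input_details[0]["shape"]
    img = Image.open(image_file).convert("RGB").resize((int(width), int(height)))
    input_data = np.expand_dims(np.asarray(img), axis=0)

    # Float models take normalized input; quantized (uint8) models take raw pixels.
    if input_details[0]["dtype"] == np.float32:
        input_data = (input_data.astype(np.float32) - mean) / std

    interpreter.set_tensor(input_details[0]["index"], input_data)
    start = time.perf_counter()
    interpreter.invoke()  # only the inference call itself is timed
    elapsed_ms = (time.perf_counter() - start) * 1000
    scores = np.squeeze(interpreter.get_tensor(output_details[0]["index"]))
    return scores, elapsed_ms
```

Timing only `invoke()` keeps model loading and image decoding out of the reported number.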

The predicted results are as follows:

0.919720: 653:military uniform

0.017762: 907:Windsor tie

0.007507: 668:mortarboard

0.005419: 466:bulletproof vest

0.003828: 458:bow tie, bow-tie, bowtie

time: 9.754ms
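Each result line is a score followed by the matching line of `labels.txt`; since the printed labels read like `653:military uniform`, the label file evidently stores the class index in each line. A pure-Python sketch of the top-5 selection and formatting, assuming the `{:08.6f}` score format and `{:.3f}ms` time format that the output above appears to use (`top_k`, `format_results`, and `format_time` are hypothetical names):

```python
def top_k(scores, k=5):
    """Return the indices of the k highest scores, best first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def format_results(scores, labels, k=5):
    """Format the top-k predictions as '<score>: <label line>' strings."""
    return ["{:08.6f}: {}".format(float(scores[i]), labels[i]) for i in top_k(scores, k)]


def format_time(elapsed_ms):
    """Format an inference time the same way as the 'time:' line above."""
    return "time: {:.3f}ms".format(elapsed_ms)
```

For example, `format_results(scores, labels)` with the 1001 MobileNet scores and the lines of `labels.txt` yields five strings shaped like the output above.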

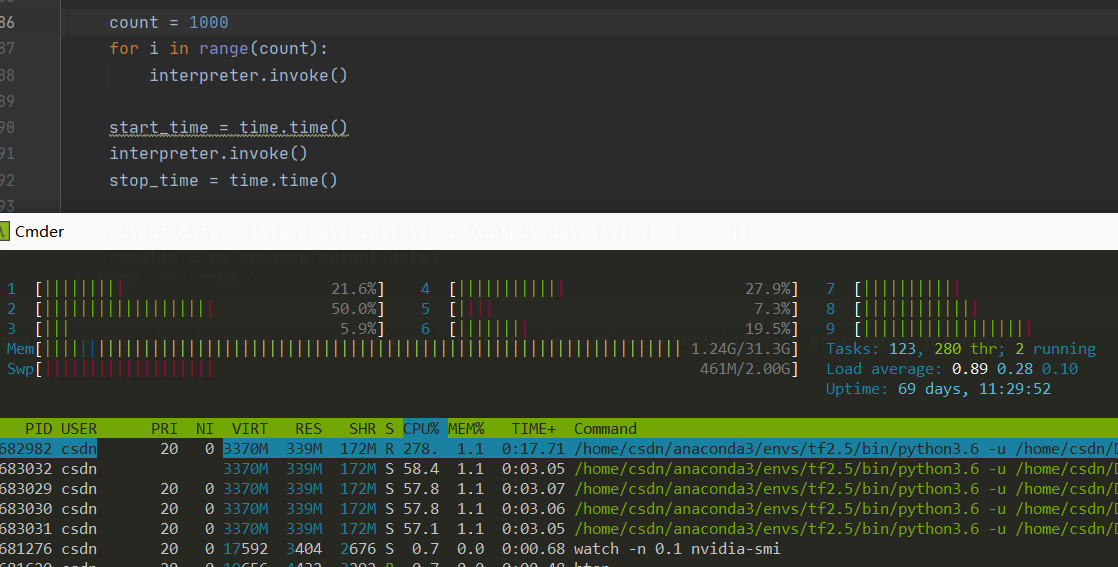

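Here the model is first invoked 1000 times before timing. In real use that many calls is not needed; it is done only so that the model-loading time is excluded from the measurement.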
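The warm-up idea can be sketched as a small timing helper: call the model a number of times untimed, then time further calls. This is an illustration under assumptions, not the code used for the numbers above; `time_after_warmup` is a hypothetical name, the default of 1000 warm-up calls simply mirrors the text, and averaging over several timed `runs` is an added choice to reduce noise.

```python
import time


def time_after_warmup(run_once, warmup=1000, runs=1):
    """Call run_once `warmup` times untimed, then return mean ms over `runs` timed calls."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    # Warm-up calls: any one-time setup cost is paid here and not measured.
    for _ in range(warmup):
        run_once()
    start = time.perf_counter()
    for _ in range(runs):
        run_once()
    return (time.perf_counter() - start) * 1000 / runs
```

With an interpreter whose input tensor is already set, usage would be `time_after_warmup(interpreter.invoke, warmup=1000, runs=100)`.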

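Next, part of the code was changed to use the standalone tflite_runtime package instead of full TensorFlow:

`import tensorflow as tf` becomes `import tflite_runtime.interpreter as tflite`

`interpreter = tf.lite.Interpreter(model_path=args.model_file)` becomes `interpreter = tflite.Interpreter(model_path=args.model_file)`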
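If one script should run whether or not full TensorFlow is installed, the two imports can be combined with a fallback. The sketch below is a common pattern rather than anything from `label_image.py`; `get_interpreter_class` is a hypothetical helper, and returning `None` when neither package is present is an assumed convention.

```python
def get_interpreter_class():
    """Return an Interpreter class, preferring the lightweight tflite_runtime package."""
    try:
        import tflite_runtime.interpreter as tflite
        return tflite.Interpreter
    except ImportError:
        pass
    try:
        import tensorflow as tf
        return tf.lite.Interpreter
    except ImportError:
        return None  # neither package is installed
```

Both classes take `model_path`, so the rest of the script stays the same: `interpreter = get_interpreter_class()(model_path=args.model_file)`.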

With this change, the same command prints:

0.919721: 653:military uniform

0.017762: 907:Windsor tie

0.007507: 668:mortarboard

0.005419: 466:bulletproof vest

0.003828: 458:bow tie, bow-tie, bowtie

time: 14.443ms

Compared with the 9.754ms above, this run seems slower. What is the cause?

It also consumes more CPU. Why?
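One way to put numbers on both observations is to record wall-clock time and process CPU time around the same calls. `time.process_time()` counts CPU time across all threads of the process, so a CPU/wall ratio well above 1 suggests the work is spread over several threads. This is only a measurement sketch, not an answer to the questions; `measure_wall_and_cpu` is a hypothetical helper. In recent releases both `tf.lite.Interpreter` and `tflite_runtime.interpreter.Interpreter` accept a `num_threads` argument, which is one variable worth holding fixed when comparing the two.

```python
import time


def measure_wall_and_cpu(run_once, runs=100):
    """Return (mean wall-clock ms, mean process CPU ms) per call over `runs` calls."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # CPU time of the whole process, all threads
    for _ in range(runs):
        run_once()
    cpu_ms = (time.process_time() - cpu_start) * 1000 / runs
    wall_ms = (time.perf_counter() - wall_start) * 1000 / runs
    return wall_ms, cpu_ms
```

Running `measure_wall_and_cpu(interpreter.invoke)` once with each interpreter, after the same warm-up, gives directly comparable wall and CPU figures per invocation.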