OpenMMLab: pretrained models fail to load

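For the past couple of days I have been debugging mmsegmentation and mmdetection. Possibly because of something in my own setup, the pretrained weights simply would not load: the framework could not resolve the location of the pretrained model. In the end I got the weights to load, and training to run, by downgrading to older versions. The steps are as follows: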

Solution

- Install PyTorch and torchvision.

  I have a 30-series GPU, so the CUDA version must be 11 or later.

  conda install pytorch==1.9.0 torchvision==0.10.0 cudatoolkit=11.1 -c pytorch -c conda-forge

- Install mmcv-full.

  pip install mmcv-full==1.3.10 -f https://download.openmmlab.com/mmcv/dist/cu111/torch1.9.0/index.html

- Install apex.

  git clone https://github.com/NVIDIA/apex
  cd apex
  pip install -v --disable-pip-version-check --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" ./
  cd ..

- Install mmdetection. I used the SwinTransformer/Swin-Transformer-Object-Detection fork.

  git clone https://github.com/SwinTransformer/Swin-Transformer-Object-Detection.git
  cd Swin-Transformer-Object-Detection
  pip install -v -e .

- Install mmpycocotools.

  pip uninstall pycocotools
  pip install mmpycocotools
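Since the whole fix comes down to getting a matching set of versions, it can help to check what actually ended up in the environment. The sketch below is only an illustration: the helper names `mmcv_index_url` and `installed_versions` are made up here, and the URL pattern is generalized from the single mmcv-full link above, so other CUDA/PyTorch combinations may not have prebuilt wheels at the resulting address.

```python
from importlib import metadata


def mmcv_index_url(cuda: str, torch: str) -> str:
    """Build the find-links URL for prebuilt mmcv-full wheels.

    Assumption: the URL follows the same pattern as the one used in this post,
    e.g. cuda="11.1", torch="1.9.0" -> .../cu111/torch1.9.0/index.html
    """
    cu_tag = "cu" + cuda.replace(".", "")
    return f"https://download.openmmlab.com/mmcv/dist/{cu_tag}/torch{torch}/index.html"


def installed_versions(packages):
    """Return {package: version or None} without importing the packages."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    print(mmcv_index_url("11.1", "1.9.0"))
    wanted = ["torch", "torchvision", "mmcv-full", "mmdet"]
    for name, version in installed_versions(wanted).items():
        print(f"{name}: {version or 'not installed'}")
```

If the printed versions differ from the ones pinned above (pytorch 1.9.0, torchvision 0.10.0, mmcv-full 1.3.10), that mismatch is the first thing I would look at.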

The test code is below. At the time I mainly wanted to see how mmdetection performs on the DOTA dataset:

import os.path as osp
import warnings

import mmcv
import numpy as np
from mmcv import Config
from mmdet.apis import set_random_seed, train_detector
from mmdet.datasets import build_dataset
from mmdet.datasets.builder import DATASETS
from mmdet.datasets.custom import CustomDataset
from mmdet.models import build_detector

# warnings.filterwarnings('ignore')

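# Current options: either write a custom dataset class or convert everything to COCO format.
cfg = Config.fromfile('./configs/fcos/fcos_r50_caffe_fpn_gn-head_mstrain_640-800_2x_coco.py')

# TODO 1. Define the dataset.
# The data still has serious problems: it first has to be converted to COCO format,
# and then I need an API with visualization so it is easy to inspect (what a pain).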

cfg.dataset_type = 'CocoDataset'  # TODO: dataset format
cfg.classes = ('plane', 'baseball-diamond', 'bridge', 'ground-track-field',
               'small-vehicle', 'large-vehicle', 'ship', 'tennis-court',
               'basketball-court', 'storage-tank', 'soccer-ball-field',
               'roundabout', 'harbor', 'swimming-pool', 'helicopter',
               'container-crane')  # TODO: class names
data_images = '/home/lyc/data/scm/remote/dota1.5hbb/PNGImages/images/'  # TODO: image root path
cfg.data.train.ann_file = '/home/lyc/data/scm/remote/dota1.5hbb/dota_train.json'  # TODO: training-set JSON path
cfg.data.val.ann_file = '/home/lyc/data/scm/remote/dota1.5hbb/dota_val.json'  # TODO: validation-set JSON path
cfg.data.test.ann_file = '/home/lyc/data/scm/remote/dota1.5hbb/dota_val.json'  # TODO: test-set JSON path (reuses the validation set)

cfg.data.train.type = cfg.dataset_type
cfg.data.val.type = cfg.dataset_type
cfg.data.test.type = cfg.dataset_type

cfg.data.train.classes = cfg.classes
cfg.data.val.classes = cfg.classes
cfg.data.test.classes = cfg.classes

cfg.data.train.img_prefix = data_images
cfg.data.val.img_prefix = data_images
cfg.data.test.img_prefix = data_images

cfg.data.samples_per_gpu = 4  # batch size per GPU (the default is 8x2)
cfg.data.workers_per_gpu = 1  # workers that pre-fetch data for each GPU

# *************** transform **************
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(
        type='Resize',
        img_scale=(1024, 1024),
        # multiscale_mode='value',
        keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(
        type='Normalize',
        mean=[102.9801, 115.9465, 122.7717],
        std=[1.0, 1.0, 1.0],
        to_rgb=False),
    dict(type='Pad', size_divisor=32),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels'])
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(1024, 1024),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(
                type='Normalize',
                mean=[102.9801, 115.9465, 122.7717],
                std=[1.0, 1.0, 1.0],
                to_rgb=False),
            dict(type='Pad', size_divisor=32),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img'])
        ])
]
# Note: cfg.train_pipeline / cfg.test_pipeline are the pipelines from the config
# file, not the lists defined above, so assign the local lists directly.
cfg.data.train.pipeline = train_pipeline
cfg.data.val.pipeline = test_pipeline
cfg.data.test.pipeline = test_pipeline

# Modify the number of classes in the bbox head.
cfg.model.bbox_head.num_classes = len(cfg.classes)
# cfg.load_from = '../checkpoints/resnet50_caffe-788b5fa3.pth'
cfg.work_dir = '../tutorial_exps/2-dota_fcos_1024_backbone'

# The original learning rate (LR) is set for 8-GPU training.
# We divide it by 8 since we only use one GPU.
cfg.optimizer.lr = 0.02 / 8
cfg.lr_config.warmup = None
cfg.log_config.interval = 10

# Change the evaluation metric since we use a customized dataset.
# cfg.evaluation.metric = 'mAP'
cfg.evaluation.metric = 'bbox'
cfg.evaluation.save_best = 'bbox_mAP'
# We can set the evaluation interval to reduce the evaluation times.
cfg.evaluation.interval = 1
# We can set the checkpoint saving interval to reduce the storage cost.
cfg.checkpoint_config.interval = 12

# Set the seed so the results are more reproducible.
cfg.seed = 0
set_random_seed(0, deterministic=False)
# cfg.gpu_ids = range(1)
cfg.gpu_ids = (0,)

# Have a look at the final config used for training.
print(f'Config:\n{cfg.pretty_text}')

# Save the full config (don't forget this step!).
# Create work_dir first so that dump() has a directory to write into.
mmcv.mkdir_or_exist(osp.abspath(cfg.work_dir))
cfg.dump(f'{cfg.work_dir}/customformat_fcos.py')

# Main training procedure
# Build the dataset
datasets = [build_dataset(cfg.data.train)]
print(cfg.data.train)
print(datasets[0])
print(datasets[0].CLASSES)

# Build the detector
model = build_detector(
    cfg.model, train_cfg=cfg.get('train_cfg'), test_cfg=cfg.get('test_cfg'))
print('Dataset loaded!')

# Add an attribute for visualization convenience
model.CLASSES = datasets[0].CLASSES

# Create work_dir
mmcv.mkdir_or_exist(osp.abspath(cfg.work_dir))
train_detector(model, datasets, cfg, distributed=False, validate=True)
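Because the script points `CocoDataset` at custom JSON files and a custom `classes` tuple, a mismatch between the two is an easy way to end up with near-zero AP. The sketch below checks a COCO-style annotation dict against the class names. It relies only on the standard COCO keys (`images`, `annotations`, `categories`); the function names are made up for illustration and are not part of mmdetection.

```python
import json


def check_coco_annotations(coco, classes):
    """Return a list of problems found; an empty list means nothing obvious is wrong."""
    problems = []
    categories = coco.get("categories", [])
    names = [cat["name"] for cat in categories]

    # Class names in the config must match category names in the JSON.
    missing = [name for name in classes if name not in names]
    if missing:
        problems.append(f"classes missing from categories: {missing}")
    extra = [name for name in names if name not in classes]
    if extra:
        problems.append(f"categories not listed in classes: {extra}")

    # Every annotation should point at a known category and a known image.
    category_ids = {cat["id"] for cat in categories}
    image_ids = {img["id"] for img in coco.get("images", [])}
    annotations = coco.get("annotations", [])
    bad_category = sum(1 for ann in annotations if ann["category_id"] not in category_ids)
    bad_image = sum(1 for ann in annotations if ann["image_id"] not in image_ids)
    if bad_category:
        problems.append(f"{bad_category} annotations use an unknown category_id")
    if bad_image:
        problems.append(f"{bad_image} annotations use an unknown image_id")
    return problems


def check_coco_file(path, classes):
    """Load a COCO-format JSON file and run the checks above."""
    with open(path, encoding="utf-8") as f:
        return check_coco_annotations(json.load(f), classes)
```

With the script above, this would be called as `check_coco_file(cfg.data.train.ann_file, cfg.classes)` before `build_dataset`.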

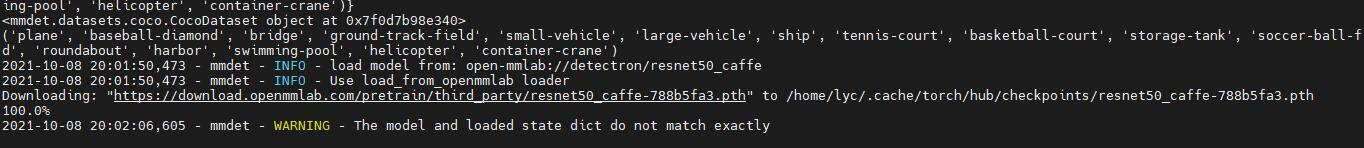

The weights file finally downloaded successfully!
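A successful download does not by itself prove that the weights ended up in the model, so it is worth comparing parameter names as well. The sketch below works on plain lists of key names and mimics the missing/unexpected report that PyTorch gives for `load_state_dict(strict=False)`. The function name `compare_keys`, the `strip_prefix` parameter, and the toy key names are assumptions for illustration only.

```python
def compare_keys(model_keys, checkpoint_keys, strip_prefix=""):
    """Compare parameter names of a model and a checkpoint.

    strip_prefix is removed from checkpoint keys that start with it,
    for checkpoints that store the backbone under a name prefix.
    """
    def strip(key):
        if strip_prefix and key.startswith(strip_prefix):
            return key[len(strip_prefix):]
        return key

    model = set(model_keys)
    checkpoint = {strip(key) for key in checkpoint_keys}
    return {
        "matched": sorted(model & checkpoint),
        "missing": sorted(model - checkpoint),      # in the model, not in the checkpoint
        "unexpected": sorted(checkpoint - model),   # in the checkpoint, not in the model
    }


# Toy example with made-up key names.
report = compare_keys(
    model_keys=["conv1.weight", "bn1.weight", "layer1.0.conv1.weight"],
    checkpoint_keys=["backbone.conv1.weight", "backbone.bn1.weight", "fc.weight"],
    strip_prefix="backbone.",
)
for group, keys in report.items():
    print(group, keys)
```

In a real run the two lists would come from `model.backbone.state_dict().keys()` and from the keys of the checkpoint loaded with `torch.load(path, map_location='cpu')`; some checkpoints nest the weights under a `state_dict` entry. If almost everything lands in "missing", the pretrained weights were not applied.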

Here are the results after the first epoch, before and after the change. They no longer look wildly off.

Before the change:

DONE (t=8.48s).
Average Precision  (AP) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.005
Average Precision  (AP) @[ IoU=0.50      | area=   all | maxDets=1000 ] = 0.020
Average Precision  (AP) @[ IoU=0.75      | area=   all | maxDets=1000 ] = 0.001
Average Precision  (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.001
Average Precision  (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = 0.005
Average Precision  (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = 0.009
Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.035
Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=300 ] = 0.035
Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=1000 ] = 0.035
Average Recall     (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.010
Average Recall     (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = 0.030

After the change:

Average Precision  (AP) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.076
Average Precision  (AP) @[ IoU=0.50      | area=   all | maxDets=1000 ] = 0.187
Average Precision  (AP) @[ IoU=0.75      | area=   all | maxDets=1000 ] = 0.049
Average Precision  (AP) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.008
Average Precision  (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = 0.092
Average Precision  (AP) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = 0.101
Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.167
Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=300 ] = 0.167
Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=1000 ] = 0.167
Average Recall     (AR) @[ IoU=0.50:0.95 | area= small | maxDets=1000 ] = 0.032
Average Recall     (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=1000 ] = 0.182
Average Recall     (AR) @[ IoU=0.50:0.95 | area= large | maxDets=1000 ] = 0.246
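To compare two such logs without reading them line by line, the summary lines can be parsed into a small table. This is just a sketch: `parse_coco_summary` is a made-up helper, and the regular expression is written against the line format shown above, so it may need adjusting for other log formats.

```python
import re

# Matches lines like:
# Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.005
SUMMARY_RE = re.compile(
    r"Average (?:Precision|Recall)\s+\((AP|AR)\) @\[ IoU=([\d.:]+)\s*"
    r"\| area=\s*(\w+)\s*\| maxDets=\s*(\d+)\s*\] = (-?[\d.]+)"
)


def parse_coco_summary(text):
    """Return {(metric, iou, area, max_dets): value} for each summary line in text."""
    results = {}
    for match in SUMMARY_RE.finditer(text):
        metric, iou, area, max_dets, value = match.groups()
        results[(metric, iou, area, int(max_dets))] = float(value)
    return results


# Two lines copied from the logs above.
before = parse_coco_summary(
    "Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.005"
)
after = parse_coco_summary(
    "Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.076"
)
for key in sorted(before.keys() & after.keys()):
    print(key, before[key], "->", after[key])
```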

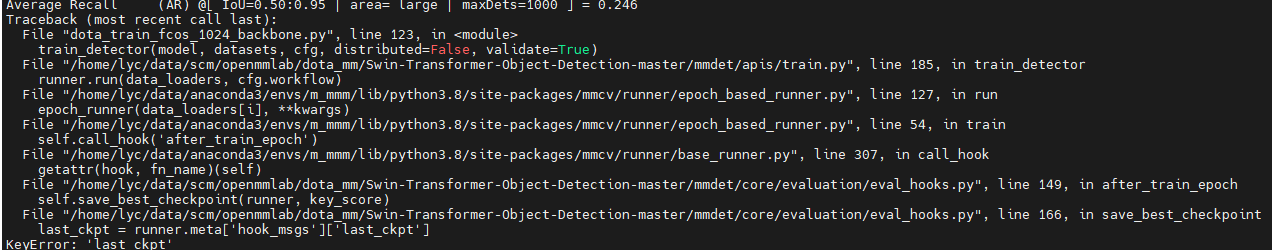

There is a new bug, though, which I will fix later. It is probably a config-file problem.

Appendix

Swin Transformer is quite impressive; give it a try yourself.

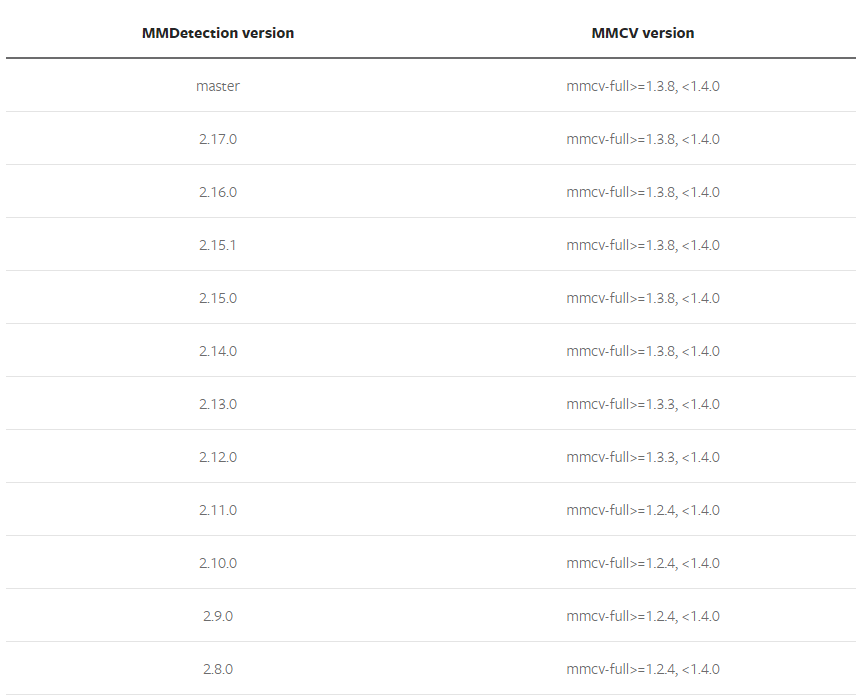

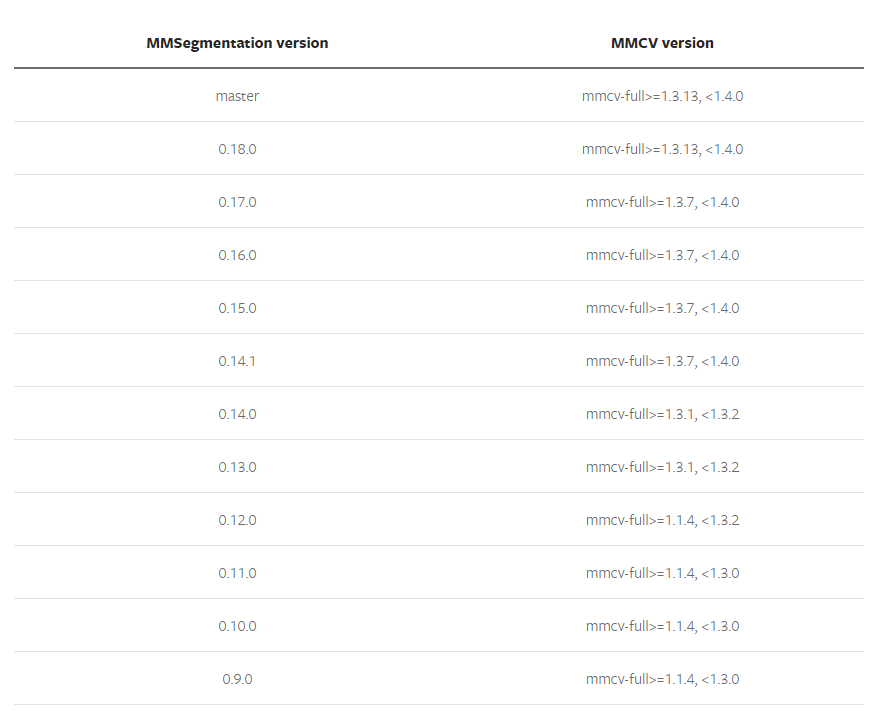

Finally, here are the version reference tables for mmdetection and mmsegmentation.