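```python
# -*- coding: utf-8 -*-

import re
import collections

# Extract every word from the corpus: lowercase the text and split it on any
# character that is not a letter (so "don't" becomes "don" and "t").
def words(text):
    return re.findall('[a-z]+', text.lower())

# Count how often each word occurs. Every count starts at 1 (add-one smoothing),
# so a word that never appears in the corpus is not given a count of zero.
def train(features):
    model = collections.defaultdict(lambda: 1)
    for f in features:
        model[f] += 1
    return model
```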
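To see what `words` and `train` produce without loading big.txt, here is a minimal sketch that runs the same two helpers on a short made-up sentence (the sample text is an assumption for illustration only).

```python
import collections
import re

def words(text):
    # Lowercase, then keep maximal runs of a-z; anything else acts as a separator.
    return re.findall('[a-z]+', text.lower())

def train(features):
    # Counts start at 1, so a word seen k times ends up with k + 1.
    model = collections.defaultdict(lambda: 1)
    for f in features:
        model[f] += 1
    return model

tokens = words("The cat doesn't like the hat. THE END!")
print(tokens)  # ['the', 'cat', 'doesn', 't', 'like', 'the', 'hat', 'the', 'end']

model = train(tokens)
# 'dog' never occurs; the defaultdict returns 1 for it (and inserts it as a side effect).
print(model['the'], model['cat'], model['dog'])  # 4 2 1
```

Note that the apostrophe splits "doesn't" into two tokens, which is a side effect of the `[a-z]+` pattern rather than a deliberate design choice.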

```python
# Build the word-frequency model from the corpus file big.txt.
with open(r'C:\Users\86156\Desktop\nlp\big.txt') as f:
    NWORDS = train(words(f.read()))

alphabet = 'abcdefghijklmnopqrstuvwxyz'
```

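```python
# Return the set of all strings at edit distance 1 from `word`:
# deletions, adjacent transpositions, replacements and insertions.
def edits1(word):
    n = len(word)
    return set([word[0:i] + word[i+1:] for i in range(n)] +                          # deletion
               [word[0:i] + word[i+1] + word[i] + word[i+2:] for i in range(n-1)] +  # transposition
               [word[0:i] + c + word[i+1:] for i in range(n) for c in alphabet] +    # replacement
               [word[0:i] + c + word[i:] for i in range(n+1) for c in alphabet])     # insertion
```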
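For a word of length n, the four comprehensions in `edits1` generate n deletions, n - 1 transpositions, 26n replacements and 26(n + 1) insertions, i.e. 54n + 25 strings before de-duplication. The set is smaller because some edits coincide: replacing a letter with itself gives back the original word, and inserting a copy of a letter just before or just after it gives the same string. The sketch below checks this for `'likr'` by building the four lists separately (the helper name `edit_lists` is hypothetical).

```python
import string

alphabet = string.ascii_lowercase  # 'abcdefghijklmnopqrstuvwxyz'

def edit_lists(word):
    """Same comprehensions as edits1, kept as four separate lists."""
    n = len(word)
    deletes = [word[:i] + word[i+1:] for i in range(n)]
    transposes = [word[:i] + word[i+1] + word[i] + word[i+2:] for i in range(n - 1)]
    replaces = [word[:i] + c + word[i+1:] for i in range(n) for c in alphabet]
    inserts = [word[:i] + c + word[i:] for i in range(n + 1) for c in alphabet]
    return deletes, transposes, replaces, inserts

deletes, transposes, replaces, inserts = edit_lists('likr')
raw = len(deletes) + len(transposes) + len(replaces) + len(inserts)
unique = len(set(deletes + transposes + replaces + inserts))
print(raw, 54 * 4 + 25, unique)  # 241 241 234
```

For a word whose letters are all distinct, the duplicates are exactly n from replacement (n copies collapse to one original word, losing n - 1) and n from insertion, which gives 52n + 26 unique strings.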

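```python
# Return the words reachable from `word` by two edits. Among all these strings
# within edit distance 2, only those that are known (correctly spelled) words,
# i.e. present in NWORDS, are kept as candidates.
def known_edits2(word):
    return set(e2 for e1 in edits1(word) for e2 in edits1(e1) if e2 in NWORDS)

# Keep only the words that appear in the corpus model.
def known(words):
    return set(w for w in words if w in NWORDS)
```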
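A case where the distance-2 step matters: `'kile'` differs from `'like'` by swapping two letters that are not adjacent, which no single edit can do. The sketch below assumes a hypothetical two-word vocabulary in place of NWORDS and passes it in explicitly as `vocab`, a parameter the original functions do not have.

```python
import string

alphabet = string.ascii_lowercase

def edits1(word):
    # All strings one deletion, transposition, replacement or insertion away.
    n = len(word)
    return set([word[:i] + word[i+1:] for i in range(n)] +
               [word[:i] + word[i+1] + word[i] + word[i+2:] for i in range(n - 1)] +
               [word[:i] + c + word[i+1:] for i in range(n) for c in alphabet] +
               [word[:i] + c + word[i:] for i in range(n + 1) for c in alphabet])

def known(words, vocab):
    # Keep only candidates that are real words in the (hypothetical) vocabulary.
    return set(w for w in words if w in vocab)

def known_edits2(word, vocab):
    # Apply edits1 twice and filter immediately, so the huge raw set is never stored.
    return set(e2 for e1 in edits1(word) for e2 in edits1(e1) if e2 in vocab)

vocab = {'like', 'lake'}  # hypothetical toy vocabulary standing in for NWORDS
print(known(edits1('kile'), vocab))  # set()
print(known_edits2('kile', vocab))   # {'like'}
```

`'lake'` is not returned because it differs from `'kile'` in three positions, which two edits cannot cover.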

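```python
# `or` short-circuits: as soon as one known(...) set is non-empty, candidates
# takes that set and the later, more expensive expressions are not evaluated.
# Priority: the word itself, then known words at distance 1, then at distance 2,
# and finally the original word unchanged.
def correct(word):
    candidates = known([word]) or known(edits1(word)) or known_edits2(word) or [word]
    # Return the most frequent candidate. NWORDS.get(w, 1) gives the same count as
    # NWORDS[w] but does not insert an unknown word into the defaultdict.
    return max(candidates, key=lambda w: NWORDS.get(w, 1))
```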
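The priority chain means edit distance beats frequency: a rare word one edit away wins over a very common word two edits away, and frequency only breaks ties within the same tier. This minimal sketch assumes a hypothetical `counts` dict passed as a parameter instead of the global NWORDS; the numbers are made up for illustration.

```python
import string

alphabet = string.ascii_lowercase

def edits1(word):
    # All strings one deletion, transposition, replacement or insertion away.
    n = len(word)
    return set([word[:i] + word[i+1:] for i in range(n)] +
               [word[:i] + word[i+1] + word[i] + word[i+2:] for i in range(n - 1)] +
               [word[:i] + c + word[i+1:] for i in range(n) for c in alphabet] +
               [word[:i] + c + word[i:] for i in range(n + 1) for c in alphabet])

def correct(word, counts):
    """Same priority chain as correct() above, with `counts` in place of NWORDS."""
    def known(ws):
        return set(w for w in ws if w in counts)

    def known_edits2(w):
        return set(e2 for e1 in edits1(w) for e2 in edits1(e1) if e2 in counts)

    candidates = known([word]) or known(edits1(word)) or known_edits2(word) or [word]
    return max(candidates, key=lambda w: counts.get(w, 1))

counts = {'like': 1000, 'kilt': 3}  # hypothetical frequencies
print(correct('like', counts))   # like   (already a known word)
print(correct('kile', counts))   # kilt   (distance 1 wins despite the lower count)
print(correct('qqqqq', counts))  # qqqqq  (no known word within distance 2)
```

A scoring scheme that combined edit distance and frequency into one probability would behave differently here; the code above follows the strict tiered order of the original.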

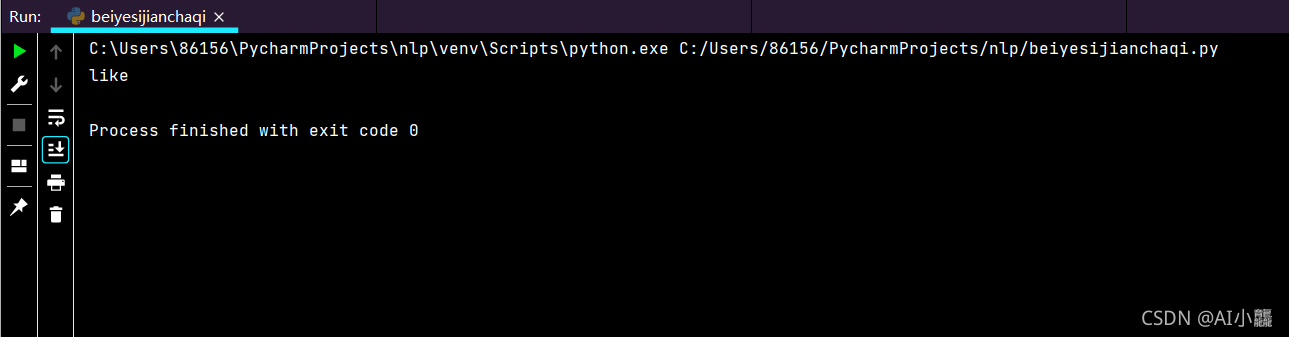

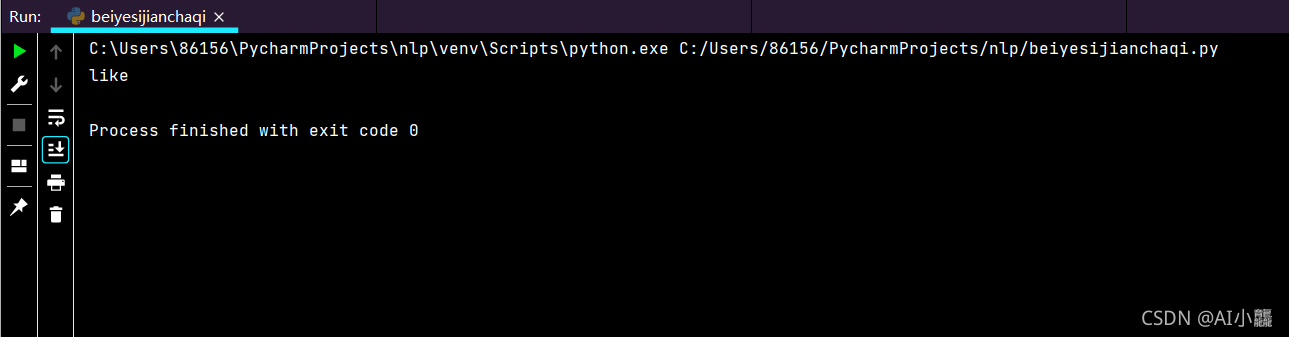

```python
print(correct('likr'))
```
