以求二元函数

的极小值为例,程序如下:

# coding: utf-8

import numpy as np

import matplotlib.pylab as plt

def _numerical_gradient_no_batch(f, x):

h = 1e-4 # 0.0001

grad = np.zeros_like(x)

for idx in range(x.size):

tmp_val = x[idx]

x[idx] = float(tmp_val) + h

fxh1 = f(x) # f(x+h)

x[idx] = tmp_val - h

fxh2 = f(x) # f(x-h)

grad[idx] = (fxh1 - fxh2) / (2*h)

x[idx] = tmp_val # 还原值

return grad

def numerical_gradient(f, X):

if X.ndim == 1:

return _numerical_gradient_no_batch(f, X)

else:

grad = np.zeros_like(X)

for idx, x in enumerate(X):

grad[idx] = _numerical_gradient_no_batch(f, x)

return grad

def gradient_descent(f, init_x, lr=0.01, step_num=100):

x = init_x

x_history = []

for i in range(step_num):

x_history.append( x.copy() )

grad = numerical_gradient(f, x)

x -= lr * grad

return x, np.array(x_history)

def function_2(x):

return x[0]**2 + x[1]**2

init_x = np.array([-3.0, 4.0])

lr = 0.2

step_num = 80

x, x_history = gradient_descent(function_2, init_x, lr=lr, step_num=step_num)

print(x)

plt.plot( [-5, 5], [0,0], '--b')

plt.plot( [0,0], [-5, 5], '--b')

# x[:,0]就是取矩阵x的所有行的第0列的元素,x[:,1] 就是取所有行的第1列的元素.

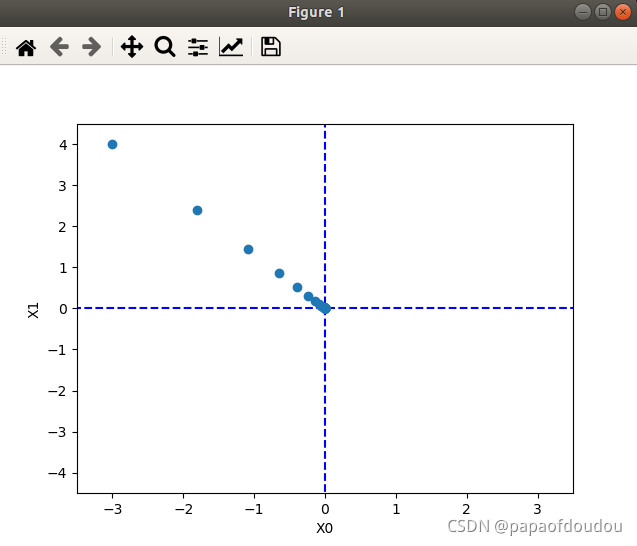

plt.plot(x_history[:,0], x_history[:,1], 'o')

plt.xlim(-3.5, 3.5)

plt.ylim(-4.5, 4.5)

plt.xlabel("X0")

plt.ylabel("X1")

plt.show()运行程序,结果如下:

运行结果显示,在学习率为0.2,迭代学习80次后,得到的绩值点坐标为

[-5.36392878e-18 ?7.14657579e-18]

这已经非常接近于实际的极小值点[0,0]了。