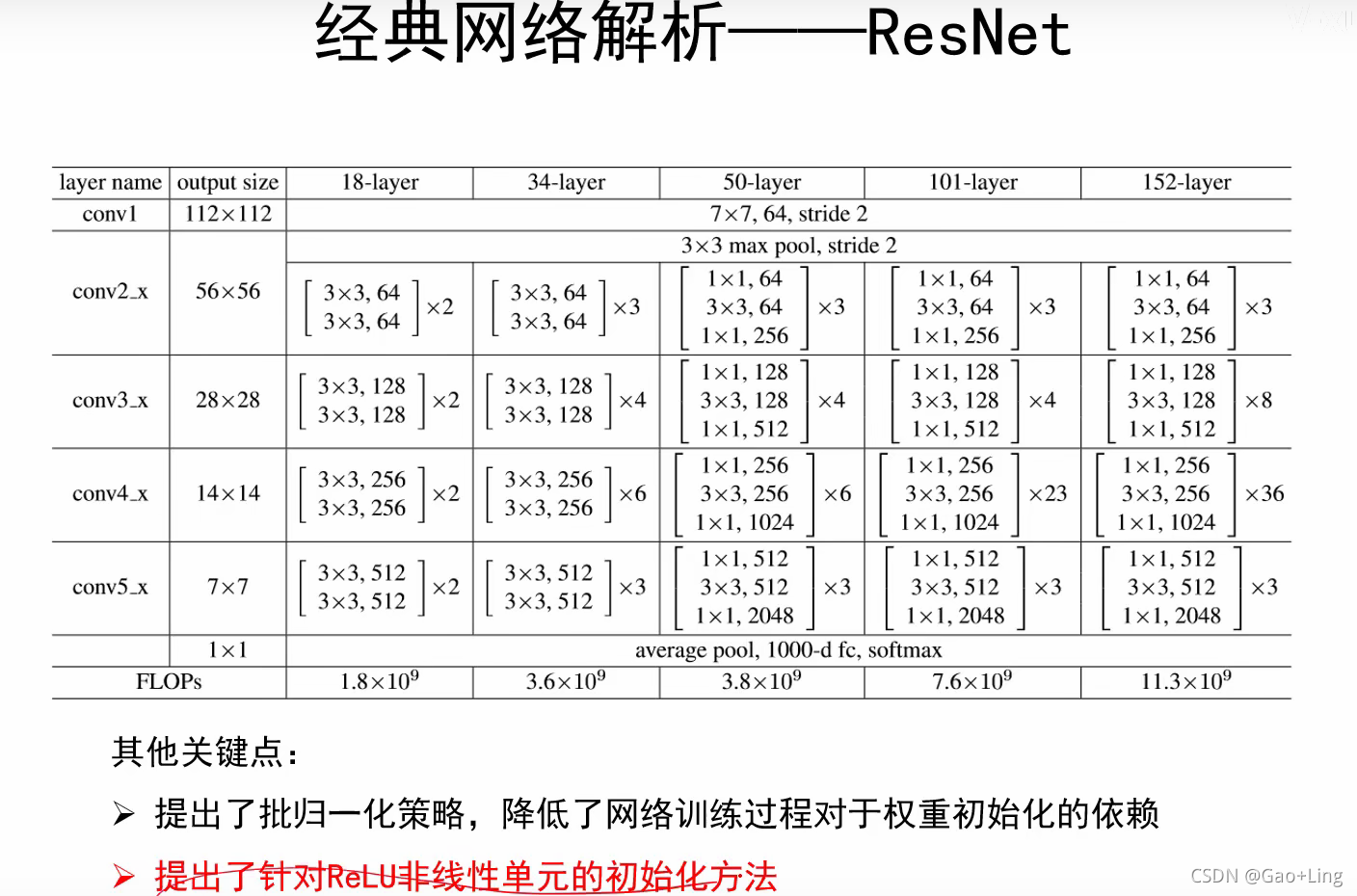

ResNet

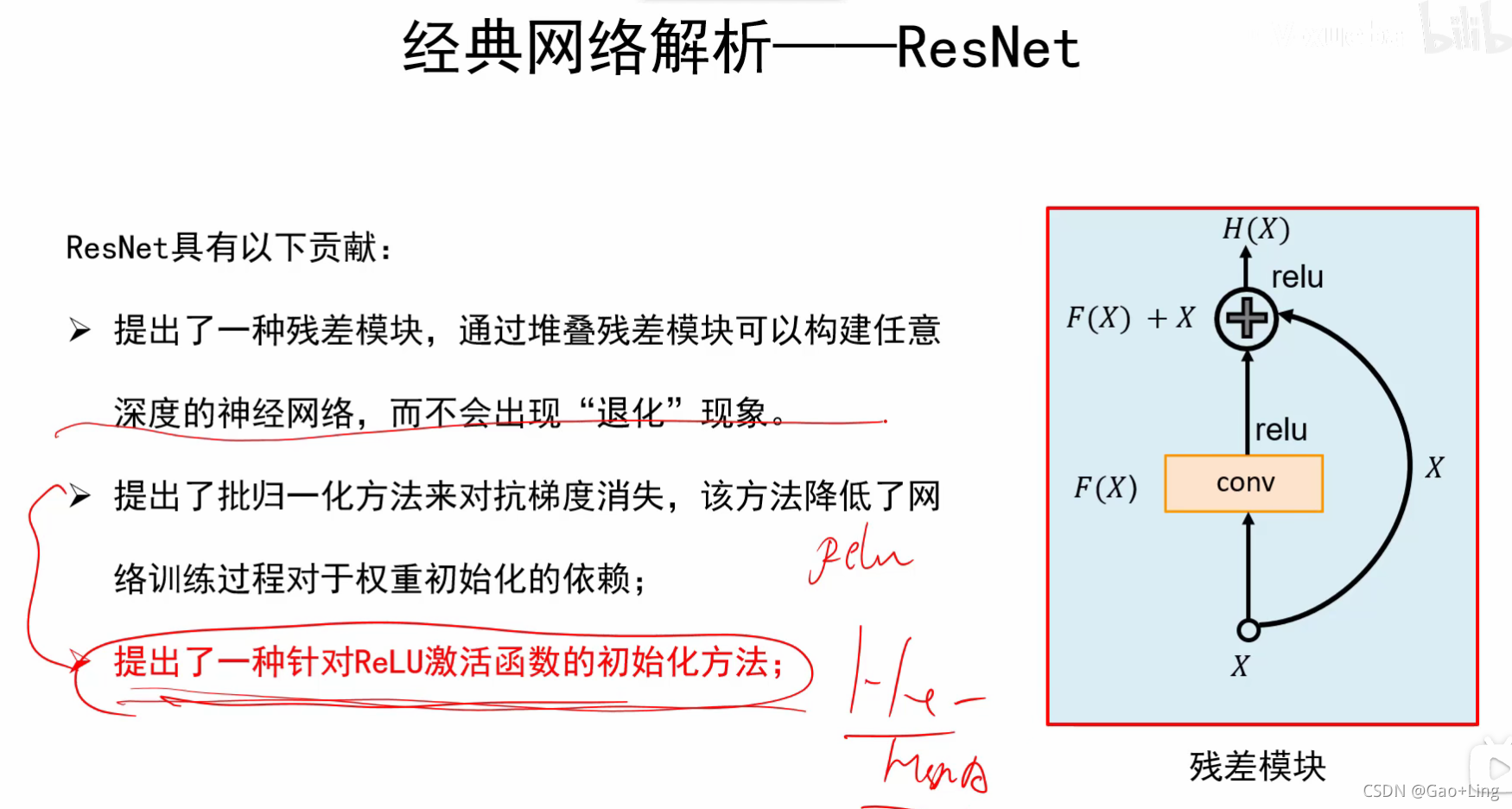

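ResNet, short for residual neural network, adds the idea of residual learning to a conventional convolutional neural network. This addresses both vanishing gradients and the drop in training-set accuracy seen in deep networks, so networks can be made much deeper while keeping accuracy high and computational cost under control.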

Background

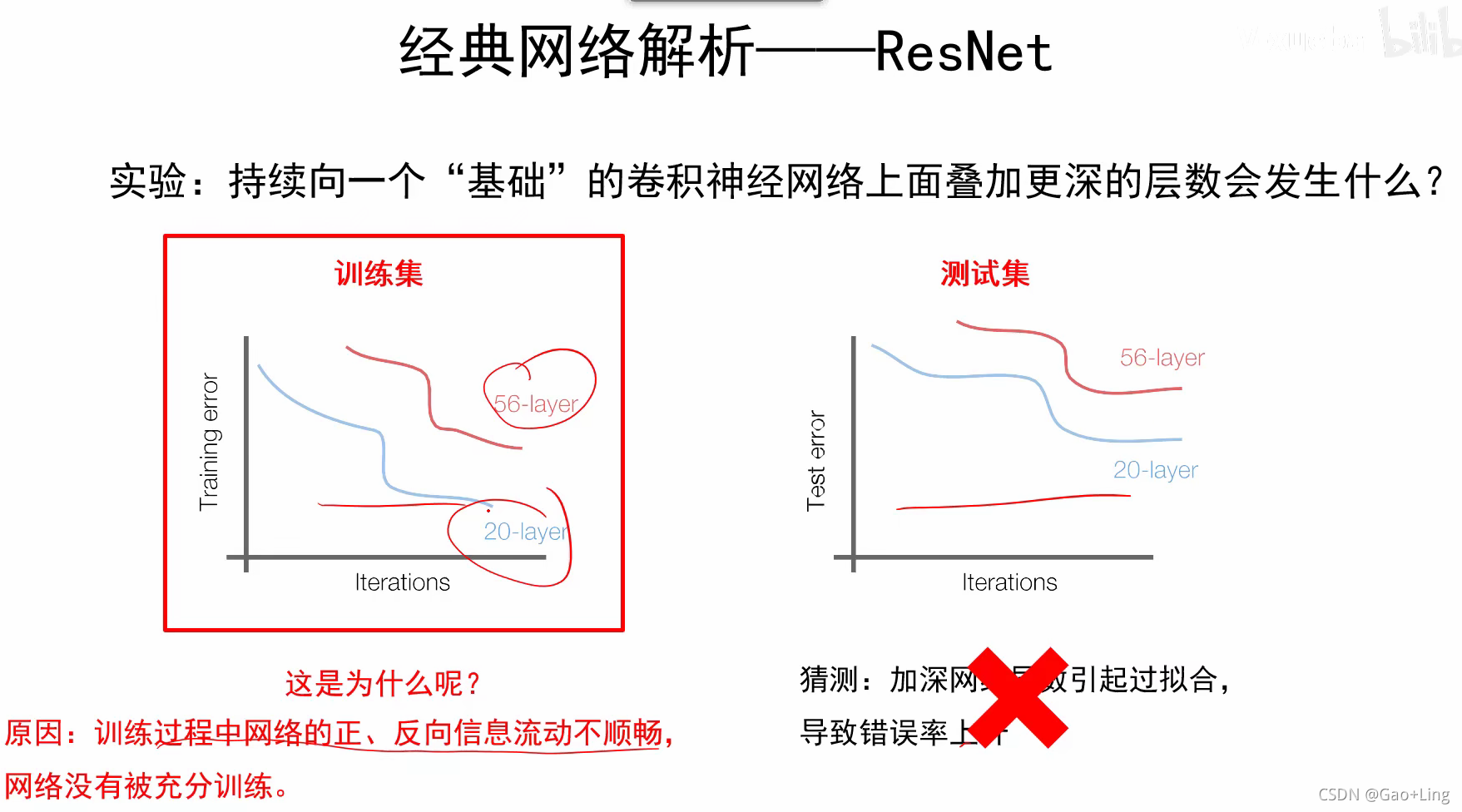

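As a network gets deeper, the vanishing-gradient problem becomes more severe, and the network becomes hard or even impossible to train to convergence. Many remedies for vanishing gradients now exist, including normalized weight initialization, input data normalization, and normalization of intermediate layers (Batch Normalization). Deepening the network brings a second problem, however: training-set accuracy drops as depth increases, as the figure shows.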

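This is not caused by overfitting. Overfitting usually means the model performs well on the training set but poorly on the test set, whereas the figure shows poor results on both the training set and the test set. Residual learning was proposed to address this problem.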
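One way to see why a shortcut helps: a residual unit computes H(x) = x + F(x), so when the residual branch F outputs zero the unit reduces to an identity mapping, and extra units need not make the training result worse. The snippet below is a minimal pure-Python sketch of that point. The names `residual_unit` and `plain_unit`, the two-layer form of F, and the toy 3-element vectors are illustrative assumptions, not taken from the original post.

```python
def relu(values):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, v) for v in values]


def linear(weights, values):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, values)) for row in weights]


def plain_unit(x, w1, w2):
    """Two stacked layers without a shortcut: H(x) = w2 * relu(w1 * x)."""
    return linear(w2, relu(linear(w1, x)))


def residual_unit(x, w1, w2):
    """Same two layers with a shortcut: H(x) = x + F(x)."""
    fx = plain_unit(x, w1, w2)  # F(x), the residual branch
    return [xi + fi for xi, fi in zip(x, fx)]


x = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

# With all-zero weights the residual unit passes x through unchanged,
# while the plain unit loses the input entirely.
identity_out = residual_unit(x, zeros, zeros)
plain_out = plain_unit(x, zeros, zeros)
print(identity_out)  # [1.0, -2.0, 3.0]
print(plain_out)
```

In this toy setting the residual unit only has to learn a correction on top of the input, whereas the plain unit has to learn the whole mapping, including the identity part.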

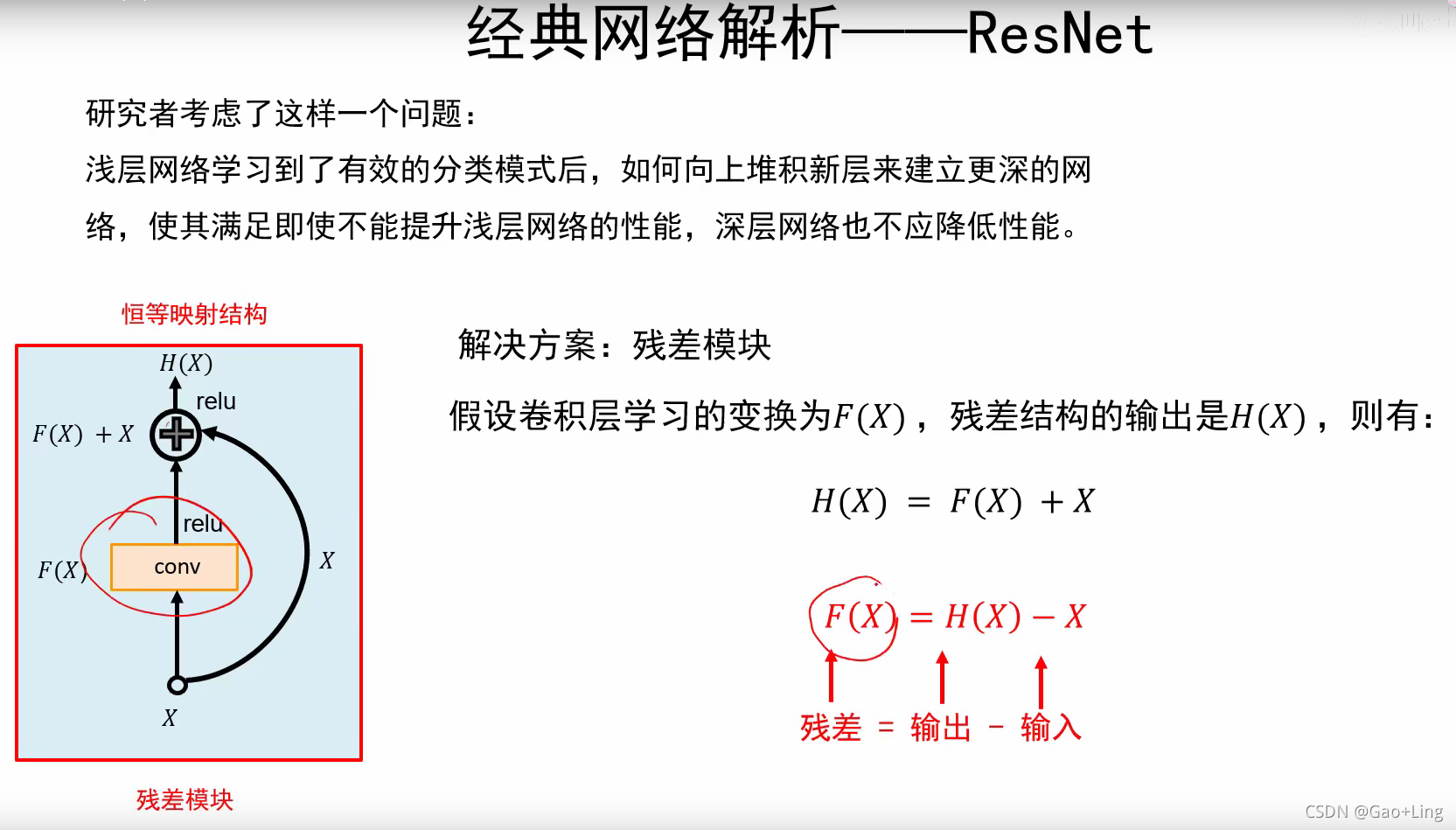

Residual learning

Structure

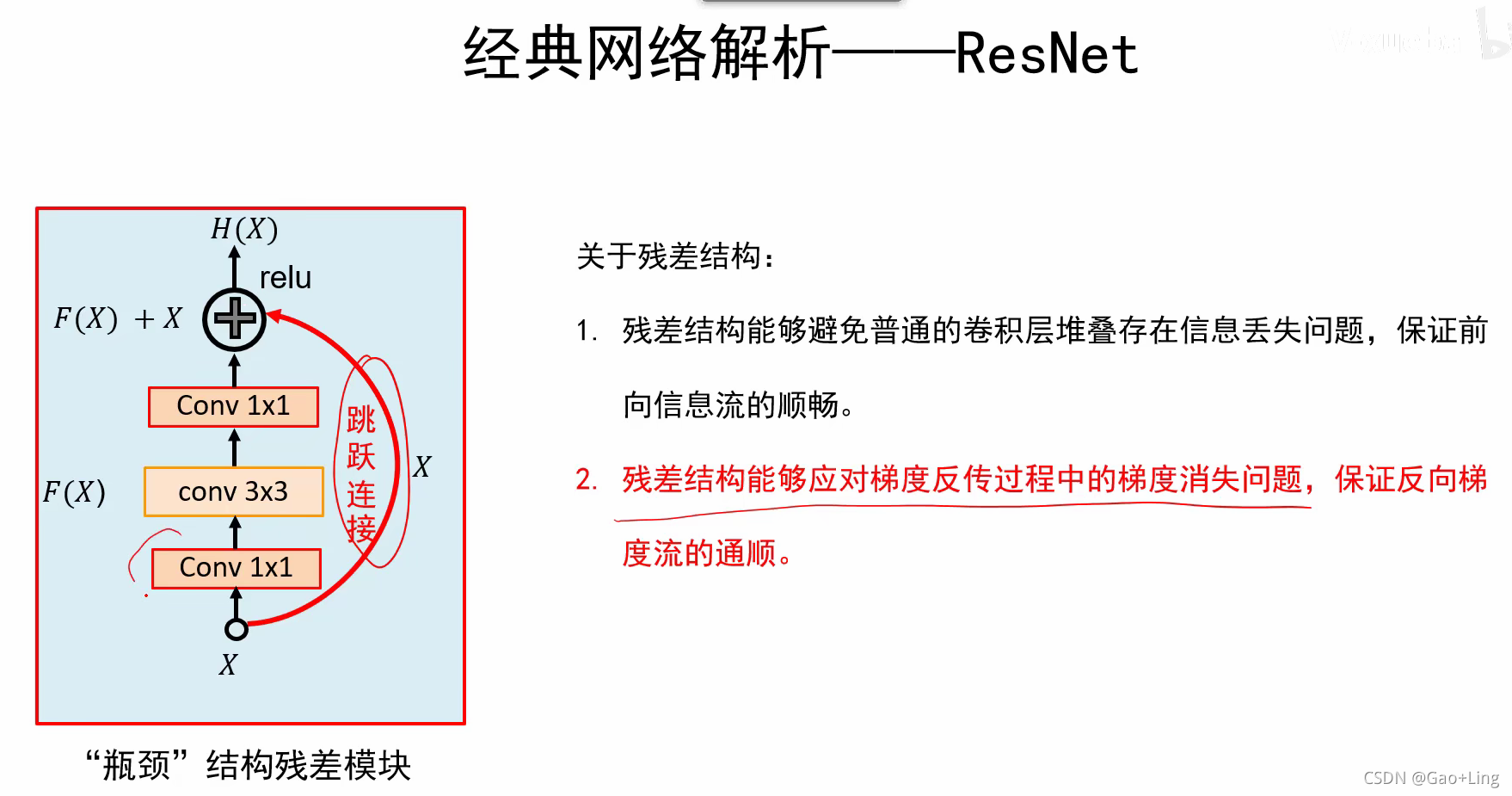

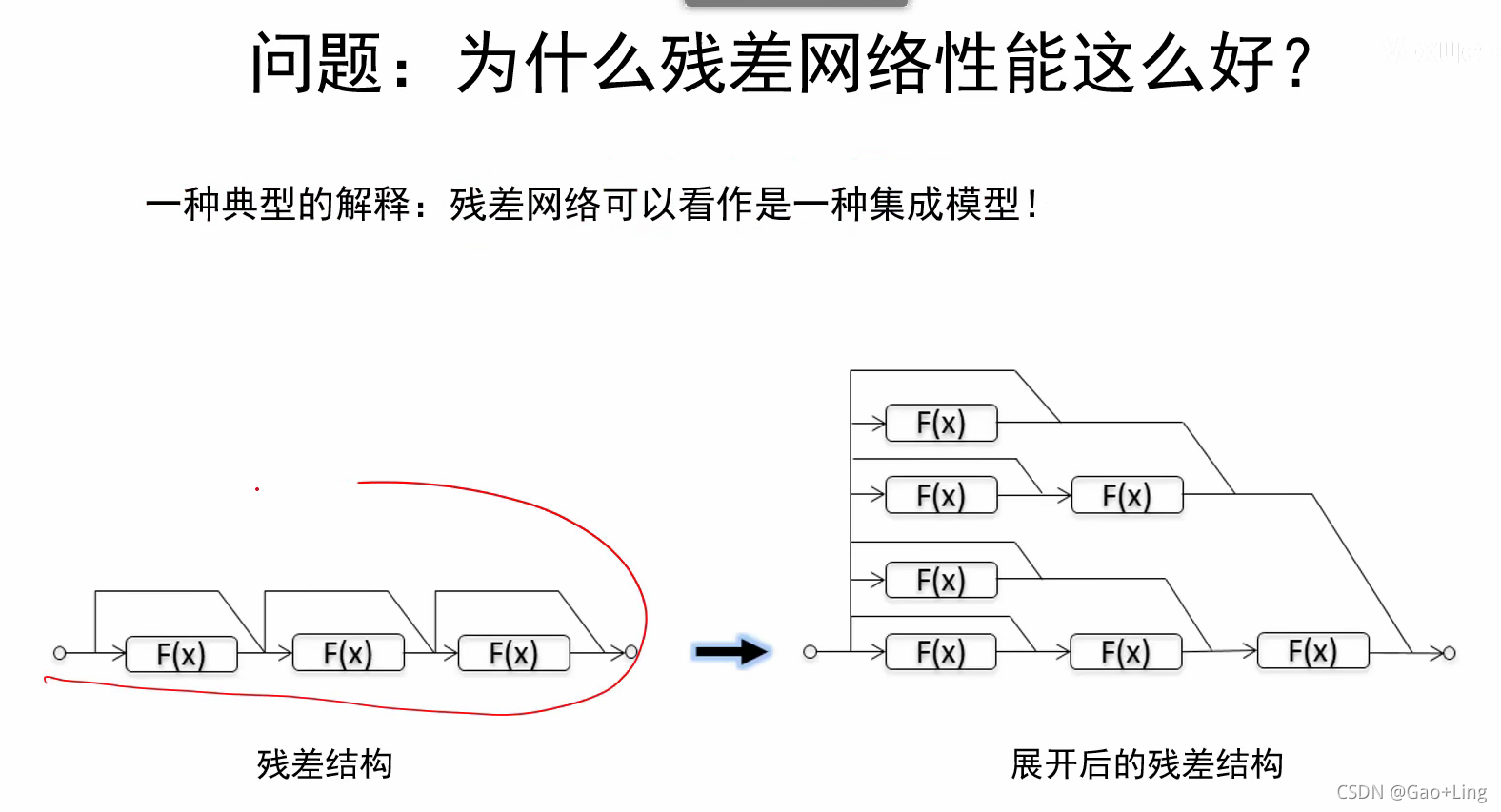

Why ResNet performs well

Code implementation

1) bottleneck: the residual unit

```python
import tensorflow as tf

slim = tf.contrib.slim  # TF 1.x only; tf.contrib is not available in TF 2.x

# subsample and conv2d_same are helper functions defined elsewhere (not shown).


@slim.add_arg_scope  # needed because resnet_v2 passes bottleneck to slim.arg_scope
def bottleneck(inputs, depth, depth_bottleneck, stride,
               outputs_collections=None, scope=None):
    with tf.variable_scope(scope, 'bottleneck_v2', [inputs]) as sc:
        # slim.utils.last_dimension returns the last dimension of the input,
        # i.e. the number of input channels.
        depth_in = slim.utils.last_dimension(inputs.get_shape(), min_rank=4)
        # Pre-activation: batch normalization followed by ReLU.
        preact = slim.batch_norm(inputs, activation_fn=tf.nn.relu, scope='preact')

        if depth == depth_in:
            # Channel counts match: reuse the input, subsampled by stride.
            shortcut = subsample(inputs, stride, 'shortcut')
        else:
            # Channel counts differ: project to depth channels with a 1x1 conv.
            shortcut = slim.conv2d(preact, depth, [1, 1], stride=stride,
                                   normalizer_fn=None, activation_fn=None,
                                   scope='shortcut')

        # The residual branch has three layers: a 1x1 conv (stride 1),
        # a 3x3 conv (configurable stride) and a final 1x1 conv (stride 1).
        # The first two output depth_bottleneck channels, the last outputs depth.
        residual = slim.conv2d(preact, depth_bottleneck, [1, 1], stride=1,
                               scope='conv1')
        residual = conv2d_same(residual, depth_bottleneck, 3, stride,
                               scope='conv2')
        residual = slim.conv2d(residual, depth, [1, 1], stride=1,
                               normalizer_fn=None, activation_fn=None,
                               scope='conv3')

        # Add shortcut (the input x) and residual (F(x)) to get output (H(x)).
        output = shortcut + residual

        # collect_named_outputs adds the result to the collection and
        # returns output as the function result.
        return slim.utils.collect_named_outputs(outputs_collections, sc.name, output)
```

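Parameters: inputs is the input tensor, depth is the number of output channels, depth_bottleneck is the number of output channels of the first and second residual layers, and stride is the stride.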
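To make the role of depth_bottleneck concrete, the arithmetic below counts the convolution weights in the residual branch of one bottleneck unit, using the (256, 64) pair from block1. It is only a sketch: the helper names `conv_weights` and `bottleneck_weights` are made up for illustration, biases and batch-norm parameters are ignored, and the comparison against two full-width 3x3 convolutions is a hypothetical baseline, not something the original code builds.

```python
def conv_weights(kernel, in_channels, out_channels):
    """Weights in a kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels


def bottleneck_weights(depth_in, depth, depth_bottleneck):
    """Weights in the three residual-branch convolutions of one unit."""
    return (conv_weights(1, depth_in, depth_bottleneck)             # conv1: 1x1
            + conv_weights(3, depth_bottleneck, depth_bottleneck)   # conv2: 3x3
            + conv_weights(1, depth_bottleneck, depth))             # conv3: 1x1


# One block1 unit whose input already has 256 channels.
bottleneck_total = bottleneck_weights(256, 256, 64)

# Hypothetical baseline: two 3x3 convolutions kept at 256 channels.
plain_total = 2 * conv_weights(3, 256, 256)

print(bottleneck_total, plain_total)  # 69632 1179648
```

Squeezing the 3x3 convolution down to 64 channels is what keeps the unit cheap: under these assumptions the bottleneck branch uses roughly one seventeenth of the weights of the full-width baseline.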

2) The 152-layer ResNet

```python
def resnet_v2_152(inputs, num_classes=None, global_pool=True, reuse=None,
                  scope='resnet_v2_152'):
    # Four Blocks, each containing a different number of residual units.
    # Each tuple such as (256, 64, 1) stands for
    # (depth, depth_bottleneck, stride) of one unit.
    blocks = [
        Block('block1', bottleneck, [(256, 64, 1)] * 2 + [(256, 64, 2)]),
        Block('block2', bottleneck, [(512, 128, 1)] * 7 + [(512, 128, 2)]),
        Block('block3', bottleneck, [(1024, 256, 1)] * 35 + [(1024, 256, 2)]),
        Block('block4', bottleneck, [(2048, 512, 1)] * 3),
    ]
    return resnet_v2(inputs, blocks, num_classes, global_pool,
                     include_root_block=True, reuse=reuse, scope=scope)
```
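The block list above can be checked against the name "152-layer" with a few lines of plain Python. This is a sketch under stated assumptions: `Block` here is a hypothetical namedtuple stand-in for the container used in the post (whose definition is not shown), `unit_fn` is left as `None`, and the count follows the common convention of three convolutions per unit plus the root convolution and the final logits layer, with shortcut projections not counted.

```python
from collections import namedtuple

# Hypothetical stand-in for the Block container; the real definition is not shown.
Block = namedtuple('Block', ['scope', 'unit_fn', 'args'])

blocks = [
    Block('block1', None, [(256, 64, 1)] * 2 + [(256, 64, 2)]),
    Block('block2', None, [(512, 128, 1)] * 7 + [(512, 128, 2)]),
    Block('block3', None, [(1024, 256, 1)] * 35 + [(1024, 256, 2)]),
    Block('block4', None, [(2048, 512, 1)] * 3),
]

# Number of residual units in each block.
units_per_block = [len(block.args) for block in blocks]

# Three convolutions per unit, plus the root conv1 and the logits layer.
conv_layers = 3 * sum(units_per_block) + 2

print(units_per_block)  # [3, 8, 36, 3]
print(conv_layers)      # 152
```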

3) Main function

```python
def resnet_v2(inputs, blocks, num_classes=None, global_pool=True,
              include_root_block=True, reuse=None, scope=None):
    with tf.variable_scope(scope, 'resnet_v2', [inputs], reuse=reuse) as sc:
        end_points_collection = sc.original_name_scope + '_end_points'
        with slim.arg_scope([slim.conv2d, bottleneck, stack_blocks_dense],
                            outputs_collections=end_points_collection):
            net = inputs
            if include_root_block:
                # Convolve and pool the raw input image, reducing each spatial
                # dimension to 1/4 of the original before the residual units.
                with slim.arg_scope([slim.conv2d],
                                    activation_fn=None, normalizer_fn=None):
                    net = conv2d_same(net, 64, 7, stride=2, scope='conv1')
                net = slim.max_pool2d(net, [3, 3], stride=2, scope='pool1')

            # stack_blocks_dense is a custom helper (not shown) that builds
            # all residual units of the network from the block list.
            net = stack_blocks_dense(net, blocks)
            net = slim.batch_norm(net, activation_fn=tf.nn.relu, scope='postnorm')

            if global_pool:
                # Global average pooling via reduce_mean, which the post notes
                # is more efficient than calling avg_pool directly.
                # keep_dims is deprecated in later TF 1.x in favor of keepdims.
                net = tf.reduce_mean(net, [1, 2], name='pool5', keep_dims=True)

            # If a class count is given, add a 1x1 convolution as the logits
            # layer (no activation, no normalizer) and a softmax classifier.
            if num_classes is not None:
                net = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                                  normalizer_fn=None, scope='logits')

            end_points = slim.utils.convert_collection_to_dict(end_points_collection)
            if num_classes is not None:
                end_points['predictions'] = slim.softmax(net, scope='predictions')
            return net, end_points
```
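The data flow of the main function can be followed without TensorFlow by tracing only the spatial size and channel count of `net` through each stage. The sketch below is an approximation under explicit assumptions: `trace_shapes`, `same_out` and the namedtuple `Block` are hypothetical names introduced here, every strided layer (including `pool1`) is assumed to behave like SAME padding so that the output size is ceil(size / stride), and the input is assumed to be a square 224x224 image.

```python
import math
from collections import namedtuple

# Hypothetical stand-in for the Block container; the real definition is not shown.
Block = namedtuple('Block', ['scope', 'unit_fn', 'args'])


def same_out(size, stride):
    """Output size of a strided layer, assuming SAME-style padding."""
    return math.ceil(size / stride)


def trace_shapes(size, blocks, num_classes=None, global_pool=True):
    """Return (stage, spatial_size, channels) after each stage of resnet_v2."""
    shapes = []
    size = same_out(size, 2)           # conv1: 7x7 convolution, stride 2
    shapes.append(('conv1', size, 64))
    size = same_out(size, 2)           # pool1: 3x3 max pooling, stride 2
    shapes.append(('pool1', size, 64))
    channels = 64
    for block in blocks:               # what stack_blocks_dense iterates over
        for depth, _depth_bottleneck, stride in block.args:
            size = same_out(size, stride)
            channels = depth
        shapes.append((block.scope, size, channels))
    if global_pool:                    # reduce_mean over height and width
        size = 1
        shapes.append(('pool5', size, channels))
    if num_classes is not None:        # 1x1 convolution producing the logits
        shapes.append(('logits', size, num_classes))
    return shapes


blocks = [
    Block('block1', None, [(256, 64, 1)] * 2 + [(256, 64, 2)]),
    Block('block2', None, [(512, 128, 1)] * 7 + [(512, 128, 2)]),
    Block('block3', None, [(1024, 256, 1)] * 35 + [(1024, 256, 2)]),
    Block('block4', None, [(2048, 512, 1)] * 3),
]

shapes = trace_shapes(224, blocks, num_classes=1000)
for stage, size, channels in shapes:
    print(stage, size, channels)
```

Under these assumptions the root block takes 224 down to 56, the stride-2 unit at the end of block1, block2 and block3 halves it each time down to 7, and global pooling leaves a 1x1 map with 2048 channels that the logits layer maps to the class scores.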