Layer 1 (l0): input layer, 3 nodes

Layer 2 (l1): hidden layer, 4 nodes

Layer 3 (l2): output layer, 2 nodes

The network is fully connected: every node is connected to every node in the previous layer.

We number the three input nodes 1, 2, 3; the four hidden nodes 4, 5, 6, 7; and the two output nodes 8 and 9.
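With this numbering, the weights fit into two matrices shaped like the `w0` (4×3) and `w1` (2×4) used in the code later on. In each matrix, rows index the destination node and columns index the source node. The sketch below maps a connection to its matrix entry. The helper `weight_index` is hypothetical, written only to illustrate this layout.

```python
# Node numbering from the text: inputs 1-3, hidden 4-7, outputs 8-9.
INPUT_NODES = [1, 2, 3]
HIDDEN_NODES = [4, 5, 6, 7]
OUTPUT_NODES = [8, 9]


def weight_index(src: int, dst: int):
    """Hypothetical helper: locate weight w_{dst,src} as (matrix, row, col).

    Rows index the destination node, columns the source node, matching
    the shapes w0: (4, 3) and w1: (2, 4).
    """
    if src in INPUT_NODES and dst in HIDDEN_NODES:
        return "w0", HIDDEN_NODES.index(dst), INPUT_NODES.index(src)
    if src in HIDDEN_NODES and dst in OUTPUT_NODES:
        return "w1", OUTPUT_NODES.index(dst), HIDDEN_NODES.index(src)
    raise ValueError(f"no connection from node {src} to node {dst}")


# Fully connected: every pair of nodes in adjacent layers has one weight.
n_weights = len(INPUT_NODES) * len(HIDDEN_NODES) + len(HIDDEN_NODES) * len(OUTPUT_NODES)
print(n_weights)           # 20
print(weight_index(2, 4))  # ('w0', 0, 1)
print(weight_index(7, 9))  # ('w1', 1, 3)
```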

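The forward pass computes how each node affects the nodes in the next layer. In other words, it runs through the network once in the forward direction: input layer → hidden layer → output layer.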
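The layer-by-layer walk can be written as a short loop, where each layer's output vector becomes the next layer's input. This is a minimal sketch: the function name `forward_all` and the random weights are illustrative assumptions, not taken from the post. It assumes the sigmoid activation used in the code below.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward_all(x, weights):
    """Hypothetical helper: return the output vector of every layer, in order.

    weights[k] has shape (nodes in layer k+1, nodes in layer k).
    """
    outputs = [x]  # input layer: its output is the input vector itself
    for w in weights:
        # next layer: sigmoid of the weighted sum of the previous layer's outputs
        outputs.append(sigmoid(w @ outputs[-1]))
    return outputs


rng = np.random.default_rng(0)
weights = [rng.random((4, 3)), rng.random((2, 4))]  # input->hidden, hidden->output
outs = forward_all(np.array([0.0, 1.0, 1.0]), weights)
print([o.shape for o in outs])  # [(3,), (4,), (2,)]
```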

Nodes 1, 2 and 3 are input-layer nodes, so their outputs are simply the components of the input vector.

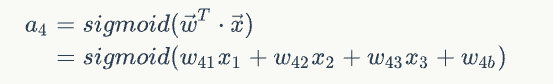

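Each hidden node takes a weighted sum of the input-layer outputs and passes it through the sigmoid $\sigma(z) = 1/(1+e^{-z})$. For node 4, for example: $a_4 = \sigma(w_{41}x_1 + w_{42}x_2 + w_{43}x_3)$. Nodes 5, 6 and 7 are computed the same way with their own weights.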

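Likewise, the inputs to the output layer are the outputs of the hidden layer. For node 8: $y_8 = \sigma(w_{84}a_4 + w_{85}a_5 + w_{86}a_6 + w_{87}a_7)$, and node 9 is computed the same way.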
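The two formulas above can be checked directly by writing $a_4$ and $y_8$ out term by term and comparing them with the matrix form used in the code. This sketch uses random placeholder weights, not the post's trained ones. It assumes that row 0 of `w0` holds $(w_{41}, w_{42}, w_{43})$ and that row 0 of `w1` holds $(w_{84}, \dots, w_{87})$.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(1)
w0 = rng.random((4, 3))        # placeholder weights: row 0 is (w_41, w_42, w_43)
w1 = rng.random((2, 4))        # placeholder weights: row 0 is (w_84, w_85, w_86, w_87)
x = np.array([0.0, 1.0, 1.0])  # outputs of input nodes 1, 2, 3

# Node 4, written out term by term
w41, w42, w43 = w0[0]
a4 = sigmoid(w41 * x[0] + w42 * x[1] + w43 * x[2])

# All hidden nodes 4-7 at once; entry 0 is node 4
a = sigmoid(w0 @ x)

# Node 8: weighted sum over the outputs of hidden nodes 4-7
y8 = sigmoid(sum(w1[0, k] * a[k] for k in range(4)))
y = sigmoid(w1 @ a)

print(np.isclose(a4, a[0]), np.isclose(y8, y[0]))  # True True
```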

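```python
import numpy as np
from numpy import random, dot, exp, array


# Forward pass: input sample -> hidden layer (nodes 4-7) -> output layer (nodes 8-9)
def forward(l0):
    l1_out = 1 / (1 + exp(-dot(w0, l0)))      # l1_out.shape = (4,)
    l2_out = 1 / (1 + exp(-dot(w1, l1_out)))  # l2_out.shape = (2,)
    return l1_out, l2_out


# Backward pass: each delta is dE/d(net) for the squared error E = 0.5 * sum((y - o)^2)
def backward(l1_out, l2_out, y1):
    # (l2_out - y1) makes l2_delta = dE/d(net), so "w - alpha * gradient" below descends E
    l2_delta = l2_out * (1 - l2_out) * (l2_out - y1)        # l2_delta.shape = (2,)
    l1_delta = l1_out * (1 - l1_out) * (l2_delta.dot(w1))  # l1_delta.shape = (4,)
    return l1_delta, l2_delta


# Targets are XOR of the first two inputs; the constant 1 in column 3 acts as a bias input
x = array([[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]])
y = array([[0, 0], [1, 1], [1, 1], [0, 0]])

random.seed(20211016)
w0 = random.random((4, 3))  # input -> hidden weights
w1 = random.random((2, 4))  # hidden -> output weights
b0 = random.random((4, 1))  # initialized but not used by forward()/backward()
b1 = random.random((2, 1))  # initialized but not used by forward()/backward()
alpha = 0.01                # learning rate

# Stochastic gradient descent, cycling through the 4 samples in order
for i in range(100000):
    j = i % len(x)
    l0 = x[j, :]
    l1_out, l2_out = forward(l0)
    l1_delta, l2_delta = backward(l1_out, l2_out, y[j, :])

    # Weight gradients are outer products: (4,1)x(1,3) for w0, (2,1)x(1,4) for w1
    w0 = w0 - alpha * dot(l1_delta.reshape(4, 1), l0.reshape(1, 3))
    w1 = w1 - alpha * dot(l2_delta.reshape(2, 1), l1_out.reshape(1, 4))

print(l1_delta.sum(), l2_delta.sum())
```

The original run printed `[-0.00029686] [-0.00061398]`. That run used `(y1 - l2_out)` in `backward`. Combined with the `w - alpha * ...` updates, this moves the weights up the error gradient rather than down. With the sign as written here, the printed values will differ.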
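The delta formulas can be checked numerically. This is a sketch under the assumption that the loss is $E = \frac{1}{2}\sum_k (y_k - o_k)^2$, which the post does not state explicitly. With $\delta = o(1-o)(o-y) = \partial E/\partial\,\mathrm{net}$, each weight gradient is the outer product of a layer's delta with that layer's input. Under that convention, `w - alpha * gradient` is gradient descent. The code compares these analytic gradients with central finite differences and then takes one small descent step. The helper names `loss`, `analytic_grads` and `numeric_grad` are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def loss(w0, w1, x, y):
    """Assumed loss for one sample: E = 0.5 * sum((y - o)^2)."""
    o = sigmoid(w1 @ sigmoid(w0 @ x))
    return 0.5 * np.sum((y - o) ** 2)


def analytic_grads(w0, w1, x, y):
    """Gradients via the delta rule with delta = dE/d(net) = o(1-o)(o-y)."""
    h = sigmoid(w0 @ x)
    o = sigmoid(w1 @ h)
    l2_delta = o * (1 - o) * (o - y)            # (2,)
    l1_delta = h * (1 - h) * (w1.T @ l2_delta)  # (4,), same as l2_delta.dot(w1)
    return np.outer(l1_delta, x), np.outer(l2_delta, h)


def numeric_grad(f, w, eps=1e-6):
    """Central finite differences of f() w.r.t. each entry of w (modified in place, then restored)."""
    g = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        old = w[idx]
        w[idx] = old + eps
        f_plus = f()
        w[idx] = old - eps
        f_minus = f()
        w[idx] = old
        g[idx] = (f_plus - f_minus) / (2 * eps)
    return g


rng = np.random.default_rng(20211016)
w0 = rng.random((4, 3))
w1 = rng.random((2, 4))
x = np.array([0.0, 1.0, 1.0])
y = np.array([1.0, 1.0])

g0, g1 = analytic_grads(w0, w1, x, y)
n0 = numeric_grad(lambda: loss(w0, w1, x, y), w0)
n1 = numeric_grad(lambda: loss(w0, w1, x, y), w1)
print(np.allclose(g0, n0, atol=1e-8), np.allclose(g1, n1, atol=1e-8))  # True True

# A small step against the gradient lowers the loss
e_before = loss(w0, w1, x, y)
e_after = loss(w0 - 0.01 * g0, w1 - 0.01 * g1, x, y)
print(e_after < e_before)  # True
```

If the delta used `(y - o)` instead, `analytic_grads` would return the negated gradient, and the same `w - 0.01 * g` step would increase the loss.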