Contents

I. Environment Setup

II. Creating a New Local Project

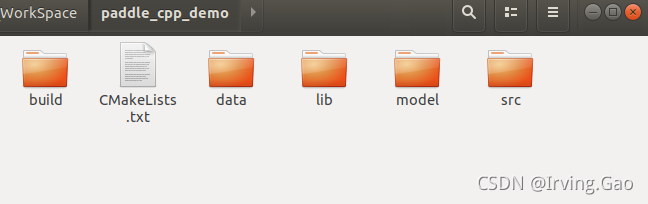

1. Create the project

mkdir paddle_cpp_demo

cd paddle_cpp_demo

touch CMakeLists.txt

mkdir src # source code

mkdir model # model files

mkdir data # test images and data

mkdir build # build output

mkdir lib # library files
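The same layout can also be created in a single, repeatable step. The following is a minimal sketch, not part of the original project: the `create_layout` helper is hypothetical, and it is exercised in a throwaway directory created with `mktemp` so that nothing in your real workspace is touched.

```shell
# Hypothetical helper: create the demo layout under a given root directory.
create_layout() {
    layout_root="$1"
    # -p makes the call idempotent: existing directories are not an error.
    for dir in src model data build lib; do
        mkdir -p "${layout_root}/${dir}"
    done
    touch "${layout_root}/CMakeLists.txt"
}

# Try it out in a temporary location (assumption: mktemp is available).
demo_root="$(mktemp -d)/paddle_cpp_demo"
create_layout "${demo_root}"
ls "${demo_root}"
```

Because `mkdir -p` is used, running the helper a second time on the same directory is harmless.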

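2. Run the sample program

Extract the archive.

3. Configure the Paddle Inference library

(1) Download the Paddle Inference library

Choose the build that matches your machine's configuration: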

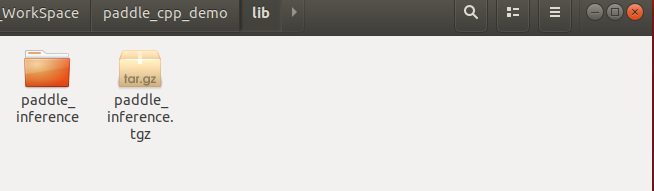

(2) Place the Paddle Inference library

Put the Linux inference library downloaded from the official website into the lib directory and extract it there.
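The extraction step can be sketched as follows. This is only an illustration under stated assumptions: the `install_paddle_lib` helper and the archive name `paddle_inference.tgz` are hypothetical, and a tiny stand-in archive is built locally so the sketch runs without downloading anything. The only detail taken from this article is that the build script later expects a `lib/paddle_inference` directory.

```shell
# Hypothetical helper: unpack an inference-library archive into <project>/lib
# and confirm that the paddle_inference directory appears.
install_paddle_lib() {
    lib_archive="$1"
    project_root="$2"
    mkdir -p "${project_root}/lib"
    tar -xzf "${lib_archive}" -C "${project_root}/lib"
    if [ ! -d "${project_root}/lib/paddle_inference" ]; then
        echo "paddle_inference not found after extraction" >&2
        return 1
    fi
}

# Build a stand-in archive (assumption: the real one unpacks to paddle_inference/).
work="$(mktemp -d)"
mkdir -p "${work}/stage/paddle_inference/paddle/lib"
tar -czf "${work}/paddle_inference.tgz" -C "${work}/stage" paddle_inference

install_paddle_lib "${work}/paddle_inference.tgz" "${work}/paddle_cpp_demo"
ls "${work}/paddle_cpp_demo/lib"
```

With a real download, replace the stand-in archive path with the file you fetched and the project root with your own `paddle_cpp_demo` directory.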

(3) Write the CMakeLists.txt file

CMakeLists.txt

cmake_minimum_required(VERSION 3.10)

project(cpp_inference_demo CXX C)

if(COMMAND cmake_policy)

cmake_policy(SET CMP0003 NEW)

endif(COMMAND cmake_policy)

option(WITH_MKL "Compile demo with MKL/OpenBlas support, default use MKL." ON)

option(WITH_GPU "Compile demo with GPU/CPU, default use CPU." OFF)

option(WITH_STATIC_LIB "Compile demo with static/shared library, default use static." ON)

option(USE_TENSORRT "Compile demo with TensorRT." OFF)

macro(safe_set_static_flag)

foreach(flag_var

CMAKE_CXX_FLAGS CMAKE_CXX_FLAGS_DEBUG CMAKE_CXX_FLAGS_RELEASE

CMAKE_CXX_FLAGS_MINSIZEREL CMAKE_CXX_FLAGS_RELWITHDEBINFO)

if(${flag_var} MATCHES "/MD")

string(REGEX REPLACE "/MD" "/MT" ${flag_var} "${${flag_var}}")

endif(${flag_var} MATCHES "/MD")

endforeach(flag_var)

endmacro()

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -g")

set(CMAKE_STATIC_LIBRARY_PREFIX "")

message("flags" ${CMAKE_CXX_FLAGS})

if(NOT DEFINED PADDLE_LIB)

message(FATAL_ERROR "please set PADDLE_LIB with -DPADDLE_LIB=/path/paddle/lib")

endif()

if(NOT DEFINED DEMO_NAME)

message(FATAL_ERROR "please set DEMO_NAME with -DDEMO_NAME=demo_name")

endif()

include_directories("${PADDLE_LIB}")

include_directories("${PADDLE_LIB}/third_party/install/protobuf/include")

include_directories("${PADDLE_LIB}/third_party/install/glog/include")

include_directories("${PADDLE_LIB}/third_party/install/gflags/include")

include_directories("${PADDLE_LIB}/third_party/install/xxhash/include")

include_directories("${PADDLE_LIB}/third_party/install/zlib/include")

include_directories("${PADDLE_LIB}/third_party/boost")

include_directories("${PADDLE_LIB}/third_party/eigen3")

link_directories("${PADDLE_LIB}/third_party/install/zlib/lib")

link_directories("${PADDLE_LIB}/third_party/install/protobuf/lib")

link_directories("${PADDLE_LIB}/third_party/install/glog/lib")

link_directories("${PADDLE_LIB}/third_party/install/gflags/lib")

link_directories("${PADDLE_LIB}/third_party/install/xxhash/lib")

link_directories("${PADDLE_LIB}/paddle/lib")

add_executable(${DEMO_NAME} src/${DEMO_NAME}.cc)

if(WITH_MKL)

include_directories("${PADDLE_LIB}/third_party/install/mklml/include")

set(MATH_LIB ${PADDLE_LIB}/third_party/install/mklml/lib/libmklml_intel${CMAKE_SHARED_LIBRARY_SUFFIX}

${PADDLE_LIB}/third_party/install/mklml/lib/libiomp5${CMAKE_SHARED_LIBRARY_SUFFIX})

set(MKLDNN_PATH "${PADDLE_LIB}/third_party/install/mkldnn")

if(EXISTS ${MKLDNN_PATH})

include_directories("${MKLDNN_PATH}/include")

set(MKLDNN_LIB ${MKLDNN_PATH}/lib/libmkldnn.so.0)

endif()

else()

set(MATH_LIB ${PADDLE_LIB}/third_party/install/openblas/lib/libopenblas${CMAKE_STATIC_LIBRARY_SUFFIX})

endif()

# Note: libpaddle_inference_api.so/a must be placed before libpaddle_fluid.so/a

if(WITH_STATIC_LIB)

set(DEPS

${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_STATIC_LIBRARY_SUFFIX})

else()

set(DEPS

${PADDLE_LIB}/paddle/lib/libpaddle_inference${CMAKE_SHARED_LIBRARY_SUFFIX})

endif()

set(EXTERNAL_LIB "-lrt -ldl -lpthread")

set(DEPS ${DEPS}

${MATH_LIB} ${MKLDNN_LIB}

glog gflags protobuf xxhash

${EXTERNAL_LIB})

if(WITH_GPU)

if (USE_TENSORRT)

# compile.sh passes -DTENSORRT_ROOT, so the libraries are resolved under ${TENSORRT_ROOT}/lib

set(DEPS ${DEPS} ${TENSORRT_ROOT}/lib/libnvinfer${CMAKE_SHARED_LIBRARY_SUFFIX})

set(DEPS ${DEPS} ${TENSORRT_ROOT}/lib/libnvinfer_plugin${CMAKE_SHARED_LIBRARY_SUFFIX})

endif()

set(DEPS ${DEPS} ${CUDA_LIB}/libcudart${CMAKE_SHARED_LIBRARY_SUFFIX})

endif()

target_link_libraries(${DEMO_NAME} ${DEPS})
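Before configuring, it can help to confirm that the directories this CMakeLists.txt links against actually exist under `PADDLE_LIB`. The sketch below is an assumption-laden illustration: `check_paddle_lib` is a hypothetical helper, it only checks the subset of paths whose libraries end up in `DEPS`, and it is run against a fake directory tree in which the xxhash directory is deliberately left out.

```shell
# Hypothetical helper: report which directories referenced by the
# CMakeLists.txt above are missing under PADDLE_LIB.
check_paddle_lib() {
    paddle_lib="$1"
    missing=0
    for sub in paddle/lib \
               third_party/install/protobuf/lib \
               third_party/install/glog/lib \
               third_party/install/gflags/lib \
               third_party/install/xxhash/lib; do
        if [ ! -d "${paddle_lib}/${sub}" ]; then
            echo "missing: ${paddle_lib}/${sub}" >&2
            missing=1
        fi
    done
    return "${missing}"
}

# Fake layout for demonstration; xxhash is intentionally omitted.
fake_lib="$(mktemp -d)/paddle_inference"
for present in paddle/lib \
               third_party/install/protobuf/lib \
               third_party/install/glog/lib \
               third_party/install/gflags/lib; do
    mkdir -p "${fake_lib}/${present}"
done

if check_paddle_lib "${fake_lib}"; then
    layout_ok=yes
else
    layout_ok=no
fi
echo "layout complete: ${layout_ok}"
```

A check like this fails early with a readable message instead of surfacing later as a linker error.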

(4) Write the build script compile.sh

compile.sh

#!/bin/bash

set +x

set -e

work_path=$(dirname "$(readlink -f "$0")")

# 1. check paddle_inference exists

if [ ! -d "${work_path}/lib/paddle_inference" ]; then

echo "Please download the paddle_inference lib and move it into your_project/lib"

exit 1

fi

# 2. compile

mkdir -p "${work_path}/build"

cd "${work_path}/build"

rm -rf ./*

# Configurable parameters

DEMO_NAME=yolov3_test

WITH_MKL=ON

WITH_GPU=ON

USE_TENSORRT=OFF

# Change these to your own paths

LIB_DIR=${work_path}/lib/paddle_inference

CUDNN_LIB=/usr/lib/x86_64-linux-gnu/

CUDA_LIB=/usr/local/cuda/lib64

TENSORRT_ROOT=/home/pc/Softwares/cuda/tensorRT7/TensorRT-7.2.3.4

cmake .. -DPADDLE_LIB=${LIB_DIR} \

-DWITH_MKL=${WITH_MKL} \

-DDEMO_NAME=${DEMO_NAME} \

-DWITH_GPU=${WITH_GPU} \

-DWITH_STATIC_LIB=OFF \

-DUSE_TENSORRT=${USE_TENSORRT} \

-DCUDNN_LIB=${CUDNN_LIB} \

-DCUDA_LIB=${CUDA_LIB} \

-DTENSORRT_ROOT=${TENSORRT_ROOT}

make -j4
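If you switch between CPU and GPU builds often, editing the script each time gets tedious. One option, shown here only as a sketch, is to let environment variables override the defaults with the shell's `${VAR:-default}` expansion. The `build_flags` helper is hypothetical; the default values are the ones used in compile.sh above.

```shell
# Hypothetical helper: print the CMake options, taking each value from the
# caller's environment when it is set and from the script default otherwise.
build_flags() {
    demo_name="${DEMO_NAME:-yolov3_test}"
    with_mkl="${WITH_MKL:-ON}"
    with_gpu="${WITH_GPU:-ON}"
    use_tensorrt="${USE_TENSORRT:-OFF}"
    echo "-DDEMO_NAME=${demo_name} -DWITH_MKL=${with_mkl} -DWITH_GPU=${with_gpu} -DUSE_TENSORRT=${use_tensorrt}"
}

# Defaults (unless the variables are already set in your environment):
build_flags

# A CPU-only configuration, overriding a single setting inside a subshell:
( WITH_GPU=OFF; build_flags )
```

The printed string could then be passed to `cmake ..` alongside the path options, leaving the script itself unchanged between builds.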

Prepare the model files

cd model

wget https://paddle-inference-dist.bj.bcebos.com/Paddle-Inference-Demo/yolov3_r50vd_dcn_270e_coco.tgz

tar xzf yolov3_r50vd_dcn_270e_coco.tgz

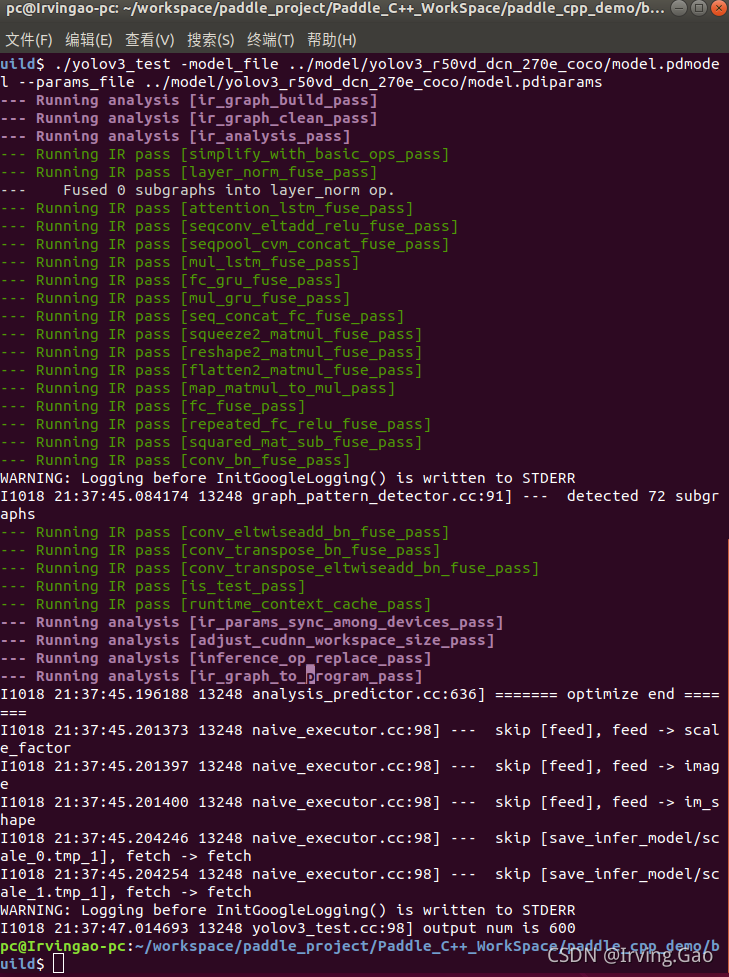
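The run command later in this article expects `model.pdmodel` and `model.pdiparams` inside the extracted model directory. A quick check like the one below can catch an incomplete download before the demo is launched. It is a minimal sketch: `check_model_dir` is a hypothetical helper, and it is demonstrated on a stand-in directory containing empty placeholder files rather than the real model.

```shell
# Hypothetical helper: verify that both files named on the demo's command
# line are present in the given model directory.
check_model_dir() {
    model_dir="$1"
    for model_file in model.pdmodel model.pdiparams; do
        if [ ! -f "${model_dir}/${model_file}" ]; then
            echo "missing: ${model_dir}/${model_file}" >&2
            return 1
        fi
    done
}

# Stand-in directory with empty placeholder files (not a real model).
sample_model="$(mktemp -d)/yolov3_r50vd_dcn_270e_coco"
mkdir -p "${sample_model}"
touch "${sample_model}/model.pdmodel" "${sample_model}/model.pdiparams"

if check_model_dir "${sample_model}"; then
    echo "model files present"
fi
```

In the real project, the argument would be `model/yolov3_r50vd_dcn_270e_coco` relative to the project root.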

Run

sudo ./compile.sh

cd build

./yolov3_test --model_file ../model/yolov3_r50vd_dcn_270e_coco/model.pdmodel --params_file ../model/yolov3_r50vd_dcn_270e_coco/model.pdiparams

From here, you can adapt the code to your own project.