0.Abstract

0.1逐句翻译

The recent breakthrough achieved by contrastive learning accelerates the pace for deploying unsupervised training on real-world data applications.

最近通过对比学习取得的突破加快了在真实数据应用中部署无监督训练的步伐。

However, unlabeled data in reality is commonly imbalanced and shows a long-tail distribution, and it is unclear how robustly the latest contrastive learning methods could perform in the practical scenario.

然而,现实中未标记的数据通常是不平衡的,呈长尾分布,目前尚不清楚最新的对比学习方法在实际场景中的表现如何。

This paper proposes to explicitly tackle this challenge, via a principled framework called Self-Damaging Contrastive Learning (SDCLR),to automatically balance the representation learning without knowing the classes.

本文提出了一个名为“自损对比学习”(SDCLR)的原则框架来明确解决这一挑战,在不知道类别的情况下自动平衡表示学习。

Our main inspiration is drawn from the recent finding that deep models have difficult-to-memorize samples, and those may be exposed through network pruning (Hooker et al., 2020).

我们的主要灵感来自最近的发现,深层模型有难以记忆的样本,这些样本可能通过网络修剪暴露出来(Hooker et al., 2020)。

It is further natural to hypothesize that long-tail samples are also tougher for the model to learn well due to insufficient examples.

更自然的假设是,由于样本不足,长尾样本对模型来说也更难以学习。

Hence, the key innovation in SDCLR is to create a dynamic self-competitor model to contrast with the target model, which is a pruned version of the latter.

因此,SDCLR的关键创新在于建立一个动态self-competitor模型来与目标模型进行对比,动态self-competitor模型是后者的精简版。(self-competitor model是这个文章的核心一个点,他是通过原来模型在线剪枝获得的,之后和原来的模型进行对比,获得更多的信息)

During training, contrasting the two models will lead to adaptive online mining of the most easily forgotten samples for the current target model, and implicitly emphasize them more in the contrastive loss.

在训练过程中,对比两种模型会导致自适应在线挖掘当前目标模型中最容易被遗忘的样本,并在对比损失中隐含地更加强调它们。

Extensive experiments across multiple datasets and imbalance settings show that SDCLR significantly improves not only overall accuracies but also balancedness, in terms of linear evaluation on the full-shot and fewshot settings.

在多个数据集和不平衡设置上的广泛实验表明,SDCLR不仅显著提高了整体精度,而且在全镜头和少镜头设置的线性评价方面也显著提高了平衡。(说明效果很好)

Our code is available at https://github.com/VITA-Group/SDCLR.

0.2总结

大约就是使用对比学习的方法来解决长尾的问题。

1. Introduction

1.1. Background and Research Gaps

1.1.1逐句翻译

第一段(引出对比学习是否会受到长尾问题影响的疑问)

Contrastive learning (Chen et al., 2020a; He et al., 2020; Grill et al., 2020; Jiang et al., 2020; You et al., 2020) recently prevails for deep neural networks (DNNs) to learnpowerful visual representations from unlabeled data.

对比学习最近在深度神经网络(DNNs)从未标记数据学习强大的视觉表征方面占据上风。(就是对比学习最近取得了很好的实验效果)

The state-of-the-art contrastive learning frameworks consistently benefit from using bigger models and training on more task agnostic unlabeled data (Chen et al., 2020b).

最先进的对比学习框架一直受益于使用更大的模型和训练更多的任务不可知的未标记的数据。

The predominant promise implied by those successes is to leverage contrastive learning techniques to pre-train strong and transferable representations from internet-scale sources of unlabeled data.

这些成功所暗示的主要前景是,利用对比学习技术,预先训练来自互联网规模的未标记数据来源的强大和可转移的表征。(对比学习的前景就是使用互联网时代的大量数据进行预训练以此来达到很好的运行效果)

However, going from the controlled benchmark data to uncontrolled real-world data will run into several gaps.

然而,从受控制的基准数据到不受控制的真实数据将会遇到几个缺口。

For example, most natural image and language data exhibit a Zipf long-tail distribution where various feature attributes have very different occurrence frequencies (Zhu et al., 2014; Feldman, 2020).

例如,大多数自然图像和语言数据显示Zipf长尾分布,其中各种特性属性的出现频率非常不同。()

Broadly speaking, such imbalance is not only limited to the standard single-label classification with majority versus minority class (Liu et al., 2019), but also can extend to multi-label problems along many attribute dimensions (Sarafianos et al., 2018).

广义上说,这种不平衡不仅局限于多数与少数群体的标准单标签分类(Liu et al., 2019),还可以延伸到多个属性维度的多标签问题(Sarafianos et al., 2018)。

That naturally questions whether contrastive learning can still generalize well in those long-tail scenarios.

这自然会引出对比学习是否仍能在长尾情况下很好地推广的疑问。

第二段(在监督学习当中的传统方法这里不能使用了)

We are not the first to ask this important question.

我们不是第一个提出这个重要问题的人。

Earlier works (Yang & Xu, 2020; Kang et al., 2021) pointed out that when the data is imbalanced by class, contrastive learning can learn more balanced feature space than its supervised counterpart.

更早的工作(杨&徐,2020;Kang et al., 2021)指出,当数据按类别不均衡时,对比学习比有监督的对应学习更均衡的特征空间。

Despite those preliminary successes, we find that the state-of-the-art contrastive learning methods remain certain vulnerability to the long-tailed data (even indeed improving over vanilla supervised learning), after digging into more experiments and imbalance settings (see Sec 4).

尽管有了这些初步的成功,我们发现,在深入研究了更多的实验和不平衡设置(见第4节)后,最先进的对比学习方法仍然存在一定的弱点,容易受到长尾数据的影响(甚至确实比普通的监督学习受到更多的影响)。

(就是说对比学习在均衡方面仍然有一定的弱点)

Such vulnerability is reflected on the linear separability of pretrained features (the instance-rich classes has much more separable features than instance-scarce classes), and affects downstream tuning or transfer performance.

这种漏洞反映在预先训练的特征的线性可分离性上(实例丰富的类比实例匮乏的类具有更多的可分离特征),并影响下游的调优或传输性能。

这种漏洞影响了提取出来的特征的 the linear separability of pretrained features,可能就是有的特征没有拉开,因而影响了下游的任务。

To conquer this challenge further, the main hurdle lies in the absence of class information;

为了进一步克服这一挑战,主要障碍在于分类信息的缺乏;

therefore, existing approaches for supervised learning, such as re-sampling the data distribution (Shen et al., 2016; Mahajan et al., 2018) or re-balancing the loss for each class (Khan et al., 2017; Cui et al., 2019; Cao et al., 2019), cannot be straightforwardly made to work here.

因此,现有的监督学习方法,如重新抽样数据分布(Shen et al., 2016;Mahajan等人,2018年)或重新平衡每个类别的损失(Khan等人,2017年;Cui et al., 2019;曹等人,2019),不能直接在这里工作。

(因为我们没有类别的信息,所以我们没法使用传统的两种方法1.重新抽样数据分布和2.重新调整loss的权重)

1.1.2总结

一切的大背景是对比学习大发展

- 1.传统深度学习当中就存在这个问题。

- 2.虽然之前的工作指出,对比学习受到长尾问题影响比较小;但是作者实验发现长尾问题可能对对比学习影响更大。

- 3.因为我们在无监督学习当中没有有效的分类标签,所以传统的解决长尾问题的方法可能并不奏效。(大约隐含的就是还是得需要我们来解决这个问题)

1.2. Rationale and Contributions

1.2.1 逐句翻译

第一段(模型更容易忘记出现少的类别,所以可以利用这个来找出出现少的类别)

Our overall goal is to find a bold push to extend the loss re-balancing and cost-sensitive learning ideas (Khan et al., 2017; Cui et al., 2019; Cao et al., 2019) into an unsupervised setting.

我们的总体目标是寻找一个广泛使用的方法:这个方法可以扩大损失函数的平衡性,并且开销比较小。

The initial hypothesis arises from the recent observations that DNNs tend to prioritize learning simple patterns (Zhang et al., 2016; Arpit et al., 2017; Liu et al., 2020; Yao et al., 2020; Han et al., 2020; Xia et al., 2021).

最初的假设来自于最近的观察,即深度神经网络(DNN)倾向于优先学习简单模式。(也就是深度网络更倾向学习出简单的模型)

More precisely, the DNN optimization is content-aware, taking advantage of patterns shared by more training examples, and therefore inclined towards memorizing the majority samples.

更准确地说,DNN优化是内容感知的,利用了更多训练示例共享的模式,因此倾向于记忆大多数样本(主要的样本)。

(也就是出现比较多的内容确实更容易被记住)

Since long-tail samples are underrepresented in the training set, they will tend to be poorly memorized, or more “easily forgotten” by the model - a characteristic that one can potentially leverage to spot long-tail samples from unlabeled data in a model-aware yet class-agnostic way.

由于长尾样本在训练集中的表现不足,它们往往记忆力差,或者更容易被模型“遗忘”——这是一种可以利用的特征,以一种模型感知但类不可知的方式从未标记的数据中发现长尾样本。

(因为出现频率比较低得样本会提前被神经网络遗忘,所以我们可以利用这个特点识别出这些长尾样本)

第二段(使用低频率样本会被逐渐遗忘的特点寻找这些样本)

However, it is in general tedious, if ever feasible, to measure how well each individual training sample is memorized in a given DNN (Carlini et al., 2019).

然而,如果可行的话,衡量在给定的DNN中每个单独的训练样本的记忆程度通常是乏味的。(也就是我们不能挨个进行普查这个样子)

One blessing comes from the recent empirical finding (Hooker et al., 2020) in the context of image classification.

最近在图像分类方面的经验发现(Hooker et al., 2020)带来了一个好消息。

The authors observed that, network pruning, which usually removes the smallest magnitude weights in a trained DNN, does not affect all learned classes or samples equally.

作者观察到,网络修剪通常去除训练有素的DNN中最小的幅度权值(数量最小的权重),但对所有学习到的类或样本的影响并不相同。

Rather, it tends to disproportionally hamper the DNN memorization and generalization on the long-tailed and most difficult images from the training set.

相反,它倾向于不成比例地妨碍DNN记忆和泛化训练集中的长尾和最困难的图像。

In other words, long-tail images are not “memorized well” and may be easily “forgotten” by pruning the model, making network pruning a practical tool to spot the samples not yet well learned or represented by the DNN.

换句话说,长尾图像不能“很好地记忆”,而且通过修剪模型很容易“忘记”,使得网络修剪成为一种实用的工具,可以发现尚未被DNN很好地学习或表示的样本。

第三段(详细介绍文章的实现原理)

Inspired by the aforementioned, we present a principled framework called Self-Damaging Contrastive Learning (SDCLR), to automatically balance the representation learning without knowing the classes.

受上述启发,我们提出了一个叫做自损对比学习(SDCLR)的原则性框架,可以在不知道类别的情况下自动平衡表征学习。

The workflow of SDCLR is illustrated in Fig. 1.

SDCLR工作流程如图1所示。(大约就是基于优化速度进行剪枝,然后那些不经常出现的就会因为优化的不明显,而被减去。这样我们就得到了剪枝之后的网络,这个只能识别出常见的类别。之后用这个和原来的对比,也就知道了不常出现的内容。)

In addition to creating strong contrastive views by input data augmentation, SDCLR intro duces another new level of contrasting via“model augmentation, by perturbing the target model’s structure and/or current weights.

除了通过输入数据增强来创建强烈的对比视图,SDCLR还通过“模型增强,通过扰乱目标模型的结构和/或当前权重”引入了另一个新的对比级别。

In particular, the key innovation in SDCLR is to create a dynamic self-competitor model by pruning the target model online, and contrast the pruned model’s features with the target model’s.

具体来说,SDCLR的关键创新在于通过在线剪枝目标模型建立动态自竞争模型,并将剪枝模型与目标模型的特征进行对比。

(self-competitor model是通过在线剪枝获得)

Based on the observation (Hooker et al., 2020) that pruning impairs model ability to predict accurately on rare and atypical instances, those samples in practice will also have the largest prediction differences before then pruned and non-pruned models.

根据观察(Hooker et al., 2020),修剪会削弱模型对罕见和非典型实例的准确预测能力,在实际应用中,这些样本在剪枝模型和非剪枝模型之前的预测差异也最大。

(也就是剪枝对非典型样本的预测影响很大)

That effectively boosts their weights in the contrastive loss and leads to implicit loss re-balancing.

这有效地增加了它们在对比损失中的权重,并导致隐性损失的重新平衡。(直接将这两者对比,直接增加了这两者在对比损失当中的权重)

Moreover, since the self-competitor is always obtained from the updated target model, the two models will co-evolve, which allows the target model to spot diverse memorization failures at different training stages and to progressively learn more balanced representations.

此外,由于自竞争对手总是从更新后的目标模型中获得,因此两种模型将共同进化,这使得目标模型能够在不同的训练阶段发现不同的记忆失败,并逐步学习更均衡的表征。

(这使得目标模型能够在不同的训练阶段发现不同的记忆失败,也就是在某个时间段当中某个预测很少,那么这个就会被对比损失抓出来,所以在不同的时间段,发现不同的失败。)

1.2.1 总结

因为本文是研究长尾效应的问题,所以我们先得分析这些很少出现的种类具有什么特点。

- 1.因为出现的少,所以对这些低概率类别比较重要的分支优化的比较慢,所以会被依据更新速度进行剪枝的剪枝方法减去。

- 2.所以就导致了剪枝之后的模型,缺少了预测长尾样本的能力(预测低频率样本的能力)。

本文提出的模式与传统的异同:

先来看看传统的方法

- 1.传统模型当中的正例(通过孪生网络的例子)是来自于两个不同的数据增强。

- 2.传统模型当中的孪生网络都是来自于动量优化(Moco)或是停止梯度传播(BYLO、simSiam)。

本文提出的模型: - 1.传统模型当中的正例(通过孪生网络的例子)是来自于两个不同的数据增强。

- 2.这里的不同网络是来自于剪枝。

1.3contributions

1.3.1逐句翻译

Below we outline our main contributions:

以下是我们的主要贡献:

? Seeing that unsupervised contrastive learning is not immune to the imbalance data distribution, we design a Self-Damaging Contrastive Learning (SDCLR) framework to address this new challenge.

鉴于无监督对比学习不能避免不平衡的数据分布,我们设计了一个自我损害对比学习(SDCLR)框架来解决这个新的挑战。

? SDCLR innovates to leverage the latest advances in understanding DNN memorization. By creating and updating a self-competitor online by pruning the target model during training, SDCLR provides an adaptive online mining process to always focus on the most easily forgotten (long tailed) samples throughout training.

:SDCLR创新地利用了理解DNN记忆的最新进展。SDCLR通过在训练过程中修剪目标模型,在线创建和更新一个自竞争对手,提供了一个自适应的在线挖掘过程,在整个训练过程中始终关注最容易被遗忘的(长尾)样本。

? Extensive experiments across multiple datasets and imbalance settings show that SDCLR can significantly improve not only the balancedness of the learned representation.

在多个数据集和不平衡设置的广泛实验表明,SDCLR不仅可以显著改善学习表示的平衡性。

2. Related works

暂时跳过

3. Method

3.1. Preliminaries(初期内容)

第一段(Contrastive Learning. 其实就是又简单介绍了一下simCLR)

Contrastive learning learns visual representation via enforcing similarity of the positive pairs(vi, vi+) and enlarging the distance of negative pairs (vi, vi?).

对比学习通过加强正负对(vi, vi+)的相似性和扩大负数对(vi, vi?)的距离来学习视觉表征。

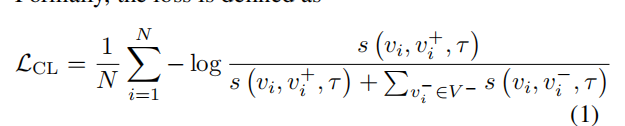

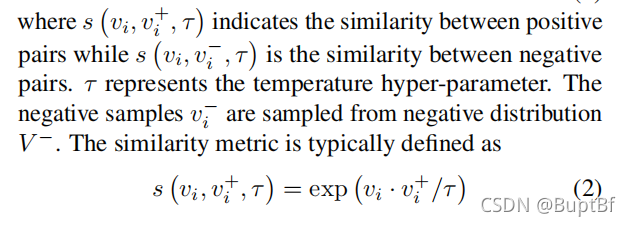

Formally, the loss is defined as

在形式上,损失被定义为(就是传统的对比损失嘛)

SimCLR (Chen et al., 2020a) is one of the state-of-theart contrastive learning frameworks.

SimCLR (Chen et al., 2020a)是目前最先进的对比学习框架之一。

For an input image SimCLR would augment it twice with two different augmentations, and then process them with two branches that share the same architecture and weights.

对于一个输入图像,SimCLR会用两种不同的增强方法对其进行两次增强,然后用共享相同架构和权值的两个分支对其进行处理。

Two different versions of the same image are set as positive pairs, and the negative image is sampled from the rest images in the same batch.

将同一幅图像的两个不同版本设置为正负对,从同一批的其余图像中抽取负图像。

第二段(Pruning Identified Exemplars. 剪枝之后的原型,剪枝经常剪去长尾的信息)

(Hooker et al., 2020) systematically investigates the model output changes introduced by pruning and finds that certain examples are particularly sensitive to sparsity.

(Hooker et al., 2020)系统研究了剪枝引入的模型输出变化,发现某些例子对稀疏性特别敏感。

These images most impacted after pruning are termed as Pruning Identified Exemplars (PIEs), representing the difficult-to-memorize samples in training.

这些剪枝后受影响最大的图像被称为剪枝识别样本(pruning Identified Exemplars, pie),代表训练中难以记忆的样本。

Pruning Identified Exemplars (PIEs),被剪枝识别的样本,识别的其实就是剪掉的那些。

Moreover, the authors also demonstrate that PIEs often show up at the long-tail of a distribution.

此外,作者还证明了PIEs经常出现在分布的长尾。

(也就是剪枝经常剪去那些长尾例子)

第三段(明确本文的贡献,将PIEs的识别从有监督扩展到无监督)

We extend (Hooker et al., 2020)’s PIE hypothesis from supervised classification to the unsupervised setting for the first time.

我们首次将(Hooker et al., 2020)的PIE假设从监督分类扩展到无监督设置。

Moreover, instead of pruning a trained model and expose its PIEs once, we are now integrating pruning into the training process as an online step.

此外,我们现在将修剪作为一个在线步骤集成到训练过程中,而不是修剪一个训练模型并一次暴露它的PIEs。

With PIEs dynamically generated by pruning a target model under training, we expect them to expose different long-tail examples during training, as the model continues to be trained.

使用通过修剪训练中的目标模型而动态生成的PIEs,我们希望它们在训练过程中暴露不同的长尾示例,因为模型仍在继续训练。

Our experiments show that PIEs answer well to those new challenges.

我们的实验表明,PIEs能够很好地应对这些新挑战。

3.1.2总结

大约就是说

- 1.simCLR的结构

- 2.剪枝很容易剪掉不经常出现的长尾数据

- 3.我们通过剪枝获得不同的模型,这个模型忘记了长尾数据,之后使用对比损失函数让他和原来的网络拉近一点。这个拉近的过程其实就无形的强化了长尾数据。

3.2. Self-Damaging Contrastive Learning

第一段()

Observation: Contrastive learning is NOT immune to imbalance. Long-tail distribution fails many supervised approaches build on balanced benchmarks (Kang et al., 2019).

Observation:对比学习也不能避免不平衡。长尾分布使许多建立在平衡基准上的监督方法失效。(Kang et al., 2019).

Even contrastive learning does not rely on class labels, it still learns the transformation invariances in a data-driven manner, and will be affected by dataset bias (Purushwalkam & Gupta, 2020).

使对比学习不依赖类标签,它仍然以数据驱动的方式学习转换不变性,并且会受到数据集偏差的影响

Particularly for long-tail data, one would naturally hypothesize that the instance-rich head classes may dominate the invariance learning procedure and leaves the tail classes under-learned.

特别是对于长尾数据,人们自然会假设实例丰富的头类可能主导不变学习过程,而尾类学习不足。