Introduction

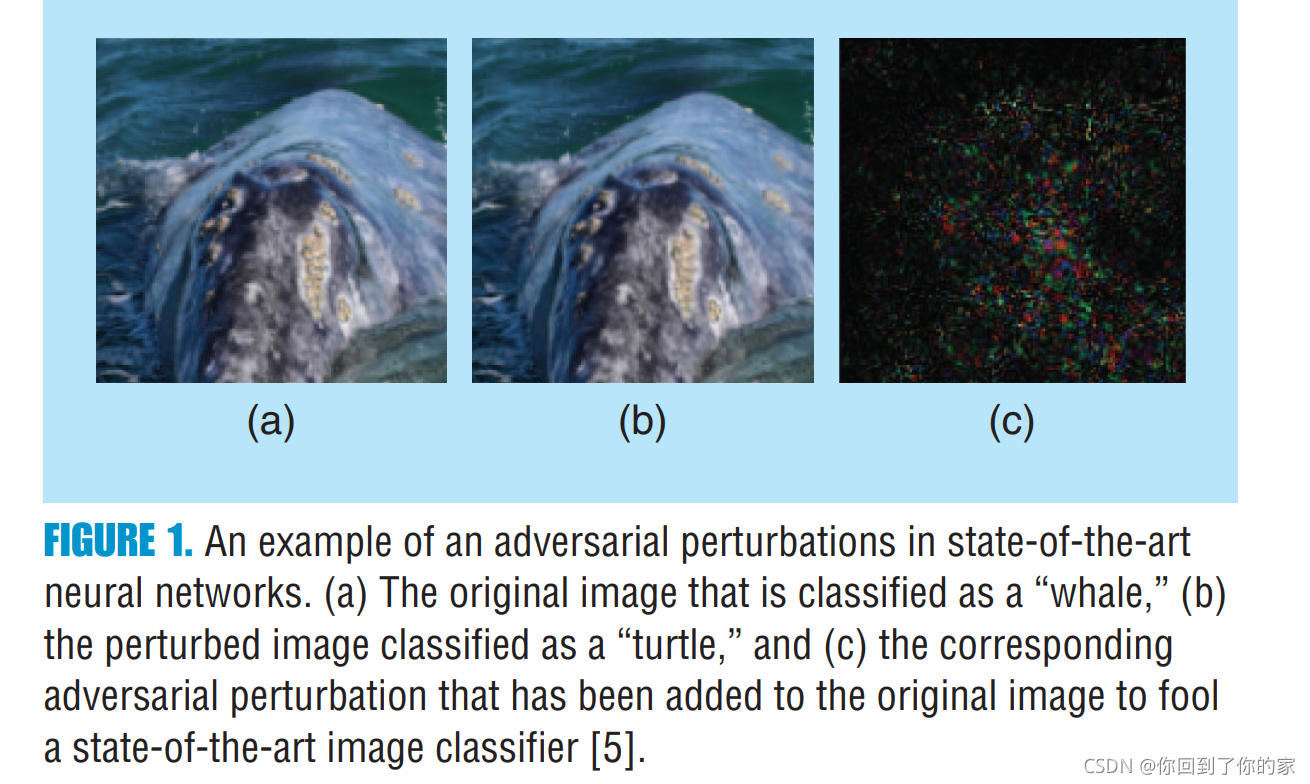

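Neural networks have been applied in many settings in recent years, yet several of their fundamental properties are still not understood, and these have been a focus of recent research. More specifically, the robustness of neural networks to various types of perturbations has received a great deal of attention. Figure 1 illustrates the vulnerability of deep neural networks to small additive perturbations.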

A dual phenomenon was observed in [3], where images that are unrecognizable to the human eye are classified by deep neural networks with high confidence.

The fundamental challenges raised by the robustness of deep networks to perturbations have given rise to a large body of important work. These works study, both empirically and theoretically, the robustness of deep networks to different kinds of perturbations, including adversarial perturbations, additive random noise, structured transformations, and even universal perturbations. The robustness is usually measured as the sensitivity of the discrete classification function (i.e., the function that assigns a label to each image) to such perturbations. Although the analysis of robustness is not a new problem, we provide an overview of the recent works that propose to assess the vulnerability of deep network architectures. Beyond quantifying the robustness of networks to various perturbations, the analysis of robustness has further contributed to developing important insights on the geometry of the complex decision boundary of such classifiers, which remains poorly understood due to the very high dimensionality of the problems that they address. Indeed, the robustness properties of a classifier are strongly tied to the geometry of its decision boundary. For example, the high instability of deep neural networks to adversarial perturbations shows that data points reside extremely close to the classifier's decision boundary. The study of robustness therefore not only helps us understand the stability of these systems from a practical standpoint, but also lets us understand the geometric features of classification regions and derive insights toward the improvement of current architectures.

This article has several goals. First, it provides an accessible review of the recent works in the analysis of the robustness of deep neural network classifiers to different forms of perturbations, with a particular emphasis on image analysis and visual understanding applications. Second, it presents connections between the robustness of deep networks and the geometry of the decision boundaries of such classifiers. Third, the article discusses ways to improve the robustness of deep network architectures and finally highlights some of the important open problems.

Robustness of classifiers

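In most classification settings, the proportion of misclassified samples in the test set is the main performance metric used to evaluate classifiers. The empirical test error provides an estimate of the risk of the classifier, defined as the probability of misclassification when considering samples from the data distribution. Formally, let $\mu$ denote a distribution defined over images. The risk of a classifier $f$ is equal to: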

$$R(f)=\mathbb{P}_{x\sim\mu}\big(f(x)\ne y(x)\big) \tag{1}$$

Here, $x$ and $y(x)$ correspond, respectively, to the image and its label. While the risk captures the error of $f$ on the data distribution $\mu$, it does not capture the robustness to small arbitrary perturbations of data points. In visual classification tasks, it is becoming pressing to learn classifiers that achieve robustness to small perturbations of the images.
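As a concrete illustration of (1), the risk can be estimated by the fraction of misclassified samples in a held-out test set. The following is a minimal sketch: the toy one-dimensional data, the threshold classifier `f`, and the helper name `empirical_risk` are illustrative assumptions and do not come from the article.

```python
import numpy as np

def empirical_risk(f, X, y):
    """Fraction of samples in (X, y) that the classifier f mislabels."""
    predictions = np.array([f(x) for x in X])
    return float(np.mean(predictions != y))

# Hypothetical toy setup: 1-D inputs whose true label is 1 when x > 0.
rng = np.random.default_rng(0)
X_test = rng.normal(size=(1000, 1))
y_test = (X_test[:, 0] > 0).astype(int)

# A deliberately imperfect classifier whose threshold is shifted to 0.5.
f = lambda x: int(x[0] > 0.5)

risk = empirical_risk(f, X_test, y_test)
print(f"estimated risk: {risk:.3f}")
```

The estimate only reflects errors on samples drawn from the data distribution; it says nothing about how far each sample sits from the decision boundary.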

We now define some notation. Let $\mathcal{X}$ denote the ambient space where images live, and let $\mathcal{R}$ denote the set of admissible perturbations. For example, when considering geometric perturbations, $\mathcal{R}$ is set to be the group of geometric (i.e., affine) transformations under study. If we instead wish to measure robustness to arbitrary additive perturbations, we set $\mathcal{R}=\mathcal{X}$. For $r\in\mathcal{R}$, we define $T_r:\mathcal{X}\to\mathcal{X}$ to be the perturbation operator by $r$; that is, for a data point $x\in\mathcal{X}$, $T_r(x)$ denotes the image $x$ perturbed by $r$. With this notation, we define the minimal perturbation that changes the label assigned by the classifier to the image $x$ as:

$$r^*(x)=\operatorname*{arg\,min}_{r\in\mathcal{R}}\Vert r\Vert_{\mathcal{R}}\quad\text{subject to}\quad f(T_r(x))\ne f(x) \tag{2}$$

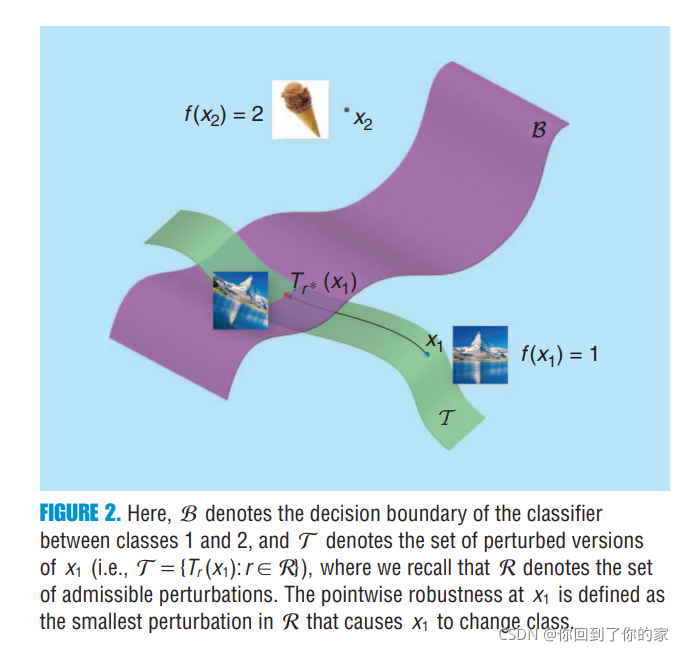

Here, $\Vert\cdot\Vert_{\mathcal{R}}$ is a metric on $\mathcal{R}$. For notational simplicity, we omit the dependence of $r^*(x)$ on $f$, $\mathcal{R}$, $\delta$, and the operator $T$. Moreover, when the image $x$ is clear from the context, we use $r^*$ to refer to $r^*(x)$. Figure 2 illustrates the perturbation process.

The pointwise robustness of $f$ at $x$ is therefore measured by $\Vert r^*(x)\Vert_{\mathcal{R}}$. Note that a larger value of $\Vert r^*(x)\Vert_{\mathcal{R}}$ indicates a higher robustness at $x$. While this definition of robustness considers the smallest perturbation $r^*(x)$ that changes the label of the classifier $f$, other works have adopted slightly different definitions, where a "sufficiently small" perturbation is sought (instead of the minimal one) [7-9]. To measure the global robustness of a classifier $f$, one can compute the expectation of $\Vert r^*(x)\Vert_{\mathcal{R}}$ over the data distribution. That is, the global robustness $\rho(f)$ can be defined as:

$$\rho(f)=\mathbb{E}_{x\sim\mu}\big(\Vert r^*(x)\Vert_{\mathcal{R}}\big) \tag{3}$$
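For intuition, definitions (2) and (3) admit a closed form in one special case that is not discussed in the text: an affine binary classifier $f(x)=\mathrm{sign}(w^\top x+b)$ under additive perturbations measured in the $\ell_2$ norm. There, the minimal perturbation is the projection of $x$ onto the hyperplane $w^\top x+b=0$, with norm $|w^\top x+b|/\Vert w\Vert_2$. The sketch below assumes this setting; the weights, the Gaussian samples standing in for $\mu$, and the function names are all hypothetical. Strictly speaking, the projected point lies on the boundary, so the label flips for any slightly larger step.

```python
import numpy as np

def minimal_perturbation(w, b, x):
    """l2-smallest r that moves x onto the hyperplane w.x + b = 0."""
    return -(w @ x + b) / (w @ w) * w

def global_robustness(w, b, X):
    """Sample average of ||r*(x)||_2 over the rows of X, as in (3)."""
    return float(np.mean(np.abs(X @ w + b) / np.linalg.norm(w)))

# Hypothetical affine classifier and a single data point.
w = np.array([3.0, 4.0])
b = -5.0
x = np.array([3.0, 4.0])

r_star = minimal_perturbation(w, b, x)
pointwise = np.linalg.norm(r_star)   # pointwise robustness at x

# Gaussian samples used as a stand-in for draws from mu.
rng = np.random.default_rng(0)
X = rng.normal(size=(5000, 2))
rho = global_robustness(w, b, X)

print(f"pointwise robustness: {pointwise:.3f}, global robustness: {rho:.3f}")
```

For deep networks no such closed form exists, which is why the approximation algorithms described later in the article are needed.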

An important point is that, in our robustness setting, the perturbed point $T_r(x)$ need not belong to the support of the data distribution. Hence, while the focus of the risk in (1) is the accuracy on typical images (sampled from $\mu$), the focus of the robustness computed from (2) is instead on the distance to the "closest" image (potentially outside the support of $\mu$) that changes the label of the classifier. The risk and robustness hence capture two fundamentally different properties of the classifier, as illustrated below:

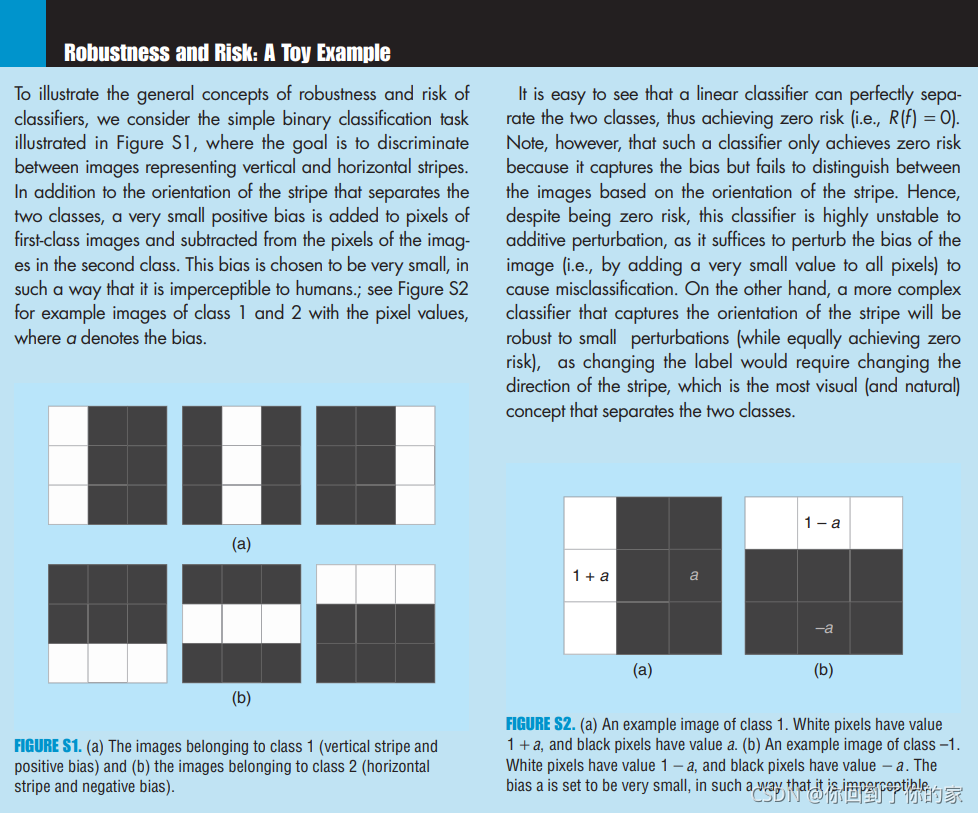

Observe that classification robustness is strongly related to support vector machine (SVM) classifiers, whose goal is to maximize the robustness, defined as the margin between support vectors. Importantly, however, the max-margin classifier in a given family of classifiers might still not achieve robustness (in the sense of high $\rho(f)$). An illustration is provided in “Robustness and Risk: A Toy Example,” where no zero-risk linear classifier (in particular, the max-margin classifier) achieves robustness to perturbations. Our focus in this article is on assessing the robustness of the family of deep neural network classifiers that are used in many visual recognition tasks.

Perturbation forms

Robustness to additive perturbations

We first consider the case where the perturbation operator is simply additive, i.e., $T_r(x)=x+r$. In this case, the magnitude of the perturbation can be measured with the $\ell_p$ norm of the minimal perturbation that is necessary to change the label of a classifier. According to (2), the robustness to additive perturbations of a data point $x$ is defined as:

$$\min_{r\in\mathcal{R}}\Vert r\Vert_p\quad\text{subject to}\quad f(x+r)\ne f(x) \tag{4}$$

Depending on the conditions that one sets on the set $\mathcal{R}$ that supports the perturbations, the additive model leads to different forms of robustness.

Adversarial perturbations

We first consider the case where the additive perturbations are unconstrained, i.e., $\mathcal{R}=\mathcal{X}$. The perturbation obtained by solving (4) is often referred to as an adversarial perturbation, as it corresponds to the perturbation that an adversary (having full knowledge of the model) would apply to change the label of the classifier, while causing minimal changes to the original image.

The optimization problem in (4) is nonconvex, as the constraint involves the (potentially highly complex) classification function $f$. Different techniques exist to approximate adversarial perturbations. In the following, we briefly mention some of the existing algorithms for computing adversarial perturbations:

Regularized variant [1]: This method obtains an adversarial perturbation by solving a regularized variant of the problem in (4), which can be written as:

$$\min_{r}\; c\Vert r\Vert_p+J(x+r,\tilde{y},\theta) \tag{5}$$

Here, $\tilde{y}$ is the target label of the perturbed sample, $J$ is a loss function, $c$ is a regularization parameter, and $\theta$ denotes the model parameters. In the original formulation in [1], an additional constraint is added to ensure that $x+r\in[0,1]$; it is omitted from (5) for simplicity. To solve the optimization problem in (5), a line search is performed over $c$ to find the maximum $c>0$ for which the minimizer of (5) satisfies $f(x+r)=\tilde{y}$. While this approach can yield very accurate estimates, it can be costly to compute on high-dimensional and large-scale datasets. Moreover, it computes a targeted adversarial perturbation, where the target label is known.

Fast gradient sign (FGS) [11]: This method estimates an untargeted adversarial perturbation by going in the direction of the sign of the gradient of the loss function, which gives the perturbation:

$$\epsilon\,\mathrm{sign}\big(\nabla_x J(x,y(x),\theta)\big)$$

Here, $J$ is the loss function used to train the neural network, and $\theta$ denotes the model parameters. While very efficient, this one-step algorithm provides only a coarse approximation to the solution of the optimization problem in (4) for $p=\infty$.

DeepFool [5]: This algorithm minimizes (4) through an iterative procedure, where each iteration involves the linearization of the constraint. The linearized (constrained) problem is solved in closed form at each iteration, and the current estimate is updated; the optimization procedure terminates when the current estimate of the perturbation fools the classifier. In practice, DeepFool provides a tradeoff between the accuracy and efficiency of the two previous approaches.
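The FGS and DeepFool procedures described above can be sketched on a toy problem. This is an illustration under stated assumptions, not the reference implementation of either method: the two-dimensional quadratic score `score`, its hand-written gradient, the logistic loss, the step size `eps`, and the `overshoot` factor (a small multiplier commonly used to push the point just across the boundary) are all hypothetical choices made for this example. DeepFool is written here for the binary case with the $\ell_2$ norm.

```python
import numpy as np

# Hypothetical toy binary classifier: label 1 where score(x) > 0, else 0.
def score(x):
    return x[0] ** 2 + x[1] - 1.0

def score_grad(x):
    return np.array([2.0 * x[0], 1.0])

def label(x):
    return int(score(x) > 0)

def fgs(x, y, eps):
    """One-step FGS perturbation: eps times the sign of the loss gradient in x."""
    p = 1.0 / (1.0 + np.exp(-score(x)))   # predicted probability of class 1
    loss_grad = (p - y) * score_grad(x)   # gradient of the logistic loss w.r.t. x
    return eps * np.sign(loss_grad)

def deepfool_binary(x, overshoot=0.02, max_iter=50):
    """Repeatedly project onto the linearized boundary until the label flips."""
    original = label(x)
    r_total = np.zeros_like(x)
    for _ in range(max_iter):
        x_adv = x + (1.0 + overshoot) * r_total
        if label(x_adv) != original:
            break
        grad = score_grad(x_adv)
        # Closed-form solution of the linearized problem at the current point.
        r_total = r_total - score(x_adv) / (grad @ grad) * grad
    return (1.0 + overshoot) * r_total

x = np.array([1.0, 2.0])
r_fgs = fgs(x, y=label(x), eps=1.2)
r_df = deepfool_binary(x)

print("FGS flips label:", label(x + r_fgs) != label(x),
      "| l2 norm:", np.linalg.norm(r_fgs))
print("DeepFool flips label:", label(x + r_df) != label(x),
      "| l2 norm:", np.linalg.norm(r_df))
```

FGS needs a single gradient evaluation but requires choosing `eps` in advance, whereas the iterative scheme stops as soon as the label changes and therefore tends to return a smaller perturbation on this toy problem.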

In addition to the aforementioned optimization methods, several other approaches have recently been proposed to compute adversarial perturbations, see, e.g., [9], [12], and [13]. Different from the previously mentioned gradient-based techniques, the recent work in [14] learns a network (the adversarial transformation network) to efficiently generate a set of perturbations with a large diversity, without requiring the computation of the gradients.

Using the techniques mentioned above, one can compute the robustness of classifiers to additive adversarial perturbations. Quite surprisingly, deep networks are extremely vulnerable to such additive perturbations; i.e., small and even imperceptible adversarial perturbations can be computed to fool them with high probability. For example, the average perturbations required to fool the CaffeNet [15] and GoogleNet [16] architectures on the ILSVRC 2012 task [17] are 100 times smaller than the typical norm of natural images [5] when using the $\ell_2$ norm. The high instability of deep neural networks to adversarial perturbations, which was first highlighted in [1], shows that these networks rely heavily on proxy concepts to classify objects, as opposed to strong visual concepts typically used by humans to distinguish between objects.

To illustrate this idea, we consider once again the toy classification example (see “Robustness and Risk: A Toy Example”), where the goal is to classify images based on the orientation of the stripe. In this example, linear classifiers could achieve a perfect recognition rate by exploiting the imperceptibly small bias that separates the two classes. While this proxy concept achieves zero risk, it is not robust to perturbations: one could design an additive perturbation that is as simple as a minor variation of the bias, which is sufficient to induce data misclassification. On the same line of thought, the high instability of classifiers to additive perturbations observed in [1] suggests that deep neural networks potentially capture one of the proxy concepts that separate the different classes. Through a quantitative analysis of polynomial classifiers, [10] suggests that higher-degree classifiers tend to be more robust to perturbations, as they capture the “stronger” (and more visual) concept that separates the classes (e.g., the orientation of the stripe in Figure S1 in “Robustness and Risk: A Toy Example”). For neural networks, however, the relation between the flexibility of the architecture (e.g., depth and breadth) and adversarial robustness is not well understood and remains an open problem.

Random noise

To be added.

Geometric insights from robustness

The study of robustness allows us to gain insights about classifiers and, more precisely, about the geometry of the classification function acting on the high-dimensional input space. Let $f:\mathcal{X}\to\{1,\dots,C\}$ denote our $C$-class classifier, and let $g_1,\dots,g_C$ denote the probabilities that the classifier assigns to each of the $C$ classes. Specifically, for a given $x\in\mathcal{X}$, $f(x)$ is assigned to the class having a maximal score, i.e., $f(x)=\arg\max_i\{g_i(x)\}$. For deep neural networks, the functions $g_i$ represent the outputs of the last layer in the network (generally the softmax layer). Note that the classifier $f$ can be seen as a mapping that partitions the input space $\mathcal{X}$ into classification regions, each of which has a constant estimated label (i.e., $f(x)$ is constant for each such region). The decision boundary $\mathcal{B}$ of the classifier is defined as the union of the boundaries of such classification regions (see Figure 2).
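The relation between the class scores $g_i$ and the label $f(x)$ can be made concrete with a small sketch. The three-class linear model below (weights `W`, bias `b`) is a hypothetical stand-in for the last layers of a network; only the softmax-then-argmax structure reflects the text.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D vector of logits."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

# Hypothetical model: C = 3 classes on a 2-D input space.
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [-1.0, -1.0]])
b = np.zeros(3)

def g(x):
    """Class probabilities g_1(x), ..., g_C(x)."""
    return softmax(W @ x + b)

def f(x):
    """Label in {1, ..., C}: the class with the maximal score."""
    return int(np.argmax(g(x))) + 1

# Label a coarse grid of inputs; each constant-label patch is a classification region.
grid = np.linspace(-2.0, 2.0, 9)
regions = np.array([[f(np.array([u, v])) for u in grid] for v in grid])
print(regions)
```

Neighboring grid cells with different labels straddle the decision boundary $\mathcal{B}$; refining the grid localizes it more precisely.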

Adversarial perturbations

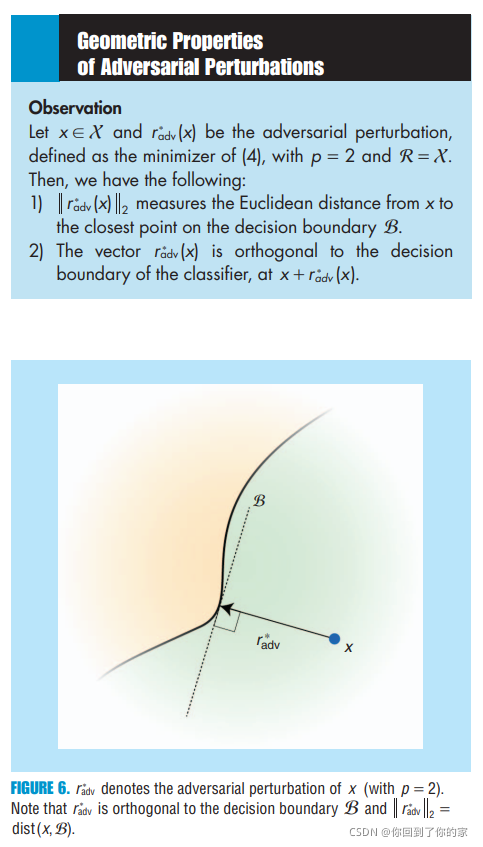

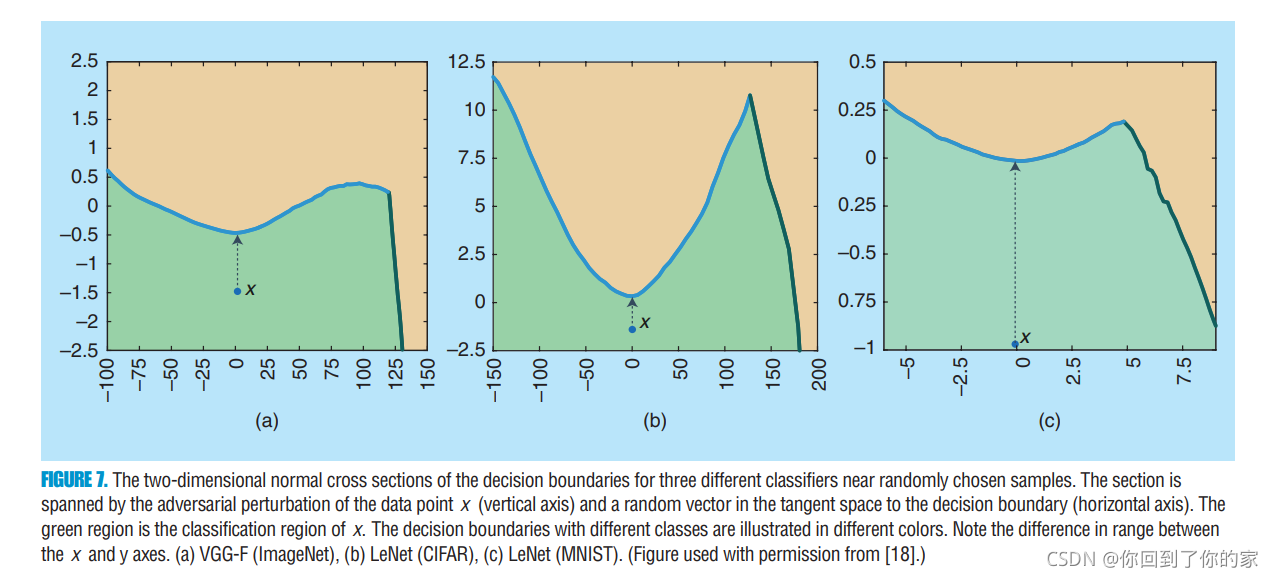

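We first focus on adversarial perturbations and highlight the relation between these perturbations and the geometry of the decision boundary. This relation relies on the simple observation shown in “Geometric Properties of Adversarial Perturbations.” Both geometric properties are illustrated in Figure 6.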

Note that these geometric properties are specific to the $\ell_2$ norm. The high instability of classifiers to adversarial perturbations, which we highlighted in the previous section, shows that natural images lie very close to the decision boundary. While this result is key to understanding the geometry of the data points with regard to the classifier's decision boundary, it does not provide any insight into the shape of the decision boundary. A local geometric description of the decision boundary (in the vicinity of $x$) is rather captured by the direction of $r^*_{\mathrm{adv}}(x)$, due to the orthogonality property of adversarial perturbations. In [18] and [25], these geometric properties of adversarial perturbations are leveraged to visualize typical cross sections of the decision boundary in the vicinity of data points. Specifically, a two-dimensional normal section of the decision boundary is illustrated, where the sectioning plane is spanned by the adversarial perturbation (normal to the decision boundary) and a random vector in the tangent space. Examples of normal sections of decision boundaries are illustrated in Figure 7.
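The construction of a two-dimensional normal section can be sketched as follows. To keep the example verifiable, it assumes an affine classifier, for which the minimal $\ell_2$ perturbation has a closed form and the section of the boundary is an exactly straight line; for a deep network, the adversarial direction would instead come from one of the approximation algorithms. The dimension `d`, the weights, and the grid ranges are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 20
w = rng.normal(size=d)   # hypothetical affine classifier: label 1 where w.z + b > 0
b = 0.5
x = rng.normal(size=d)   # data point around which the section is taken

score = lambda z: z @ w + b
label = lambda z: int(score(z) > 0)

# Closed-form minimal l2 perturbation for an affine classifier.
r_adv = -score(x) / (w @ w) * w
n = r_adv / np.linalg.norm(r_adv)   # unit vector normal to the boundary

# Random direction projected onto the tangent space (orthogonal to n).
v = rng.normal(size=d)
v -= (v @ n) * n
v /= np.linalg.norm(v)

# Sample labels on the plane {x + a*n + t*v}: columns vary a, rows vary t.
a_vals = np.linspace(0.0, 2.0 * np.linalg.norm(r_adv), 41)
t_vals = np.linspace(-1.0, 1.0, 21)
section = np.array([[label(x + a * n + t * v) for a in a_vals] for t in t_vals])
print(section)
```

In every row the label changes at the same column, the one where `a` reaches the norm of `r_adv`, which is the flat-boundary picture; a curved boundary would show the transition column shifting with `t`.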

我们可以观察到SOTA深度神经网络的决策边界on these two-dimensional cross sections有一个非常低的curvature (注意x轴和y轴的区别n)。换句话说,这些图表揭示了

x

x

x附近位置的决策边界can be locally well approximated by a hyperplane passing through

x

+

r

a

d

v

?

(

x

)

x+r^*_{adv}(x)

x+radv??(x) with the normal vector

r

a

d

v

?

(

x

)

r^*_{adv}(x)

radv??(x)。 In [11], it is hypothesized that state-of-the-art classifiers are “too linear,” 导致了决策边界with very small curvature并进一步解释了 the high instability of such classifiers to adversarial perturbations. To motivate the linearity hypothesis of deep networks, the success of the FGS method (which is exact for linear classifiers) in finding adversarial perturbations is invoked. 然而,最近的一些工作对线性假设做出了挑战,例如[26], the authors show that there exist adversarial perturbations that cannot be explained with this hypothesis, and, in [27], the authors provide a new explanation based on the tilting of the decision boundary with respect to the data manifold. We stress here that the low curvature of the decision boundary does not, in general, imply that the function learned

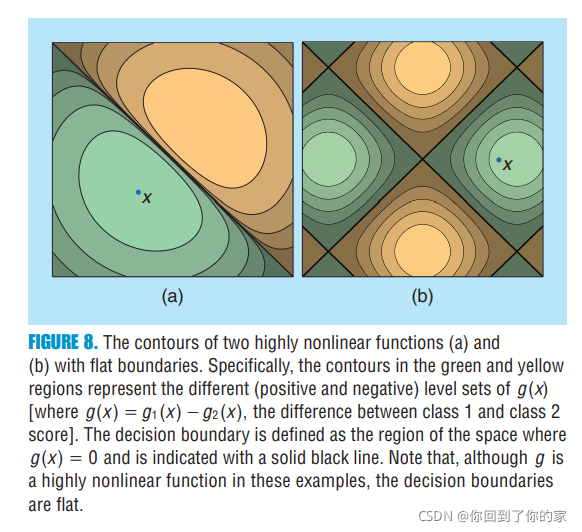

by the deep neural network (as a function of the input image) is linear, or even approximately linear. Figure 8 shows illustrative examples of highly nonlinear functions resulting in flat decision boundaries.:

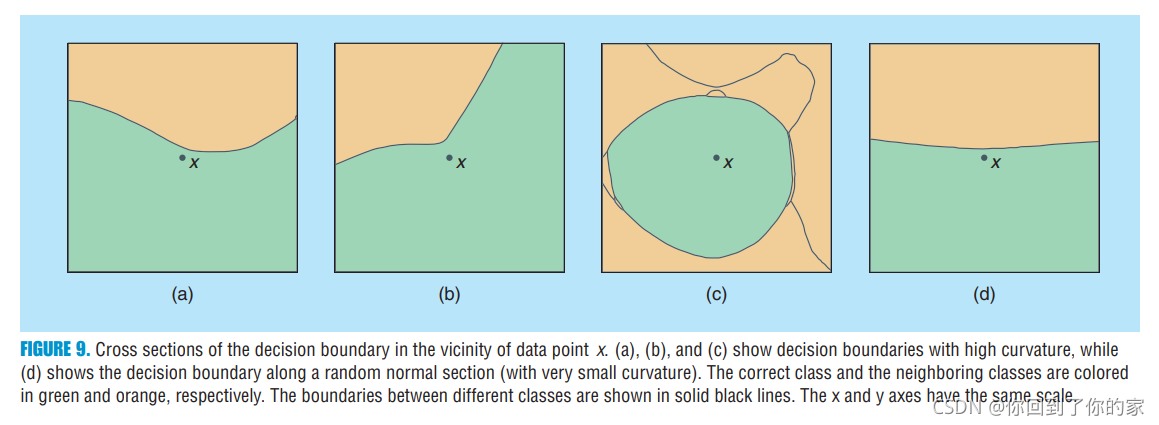
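A small numerical check makes the distinction between a flat decision boundary and a linear function concrete. The function `g` below, a hyperbolic sine applied to an affine map, is a hypothetical example chosen for this sketch and is not one of the functions plotted in the figure: it is strongly nonlinear in its input, yet its zero level set is exactly a hyperplane.

```python
import numpy as np

w = np.array([1.0, -2.0])
b = 0.5

def affine(X):
    return X @ w + b

def g(X):
    """Highly nonlinear in x, yet its zero level set is the hyperplane w.x + b = 0."""
    return np.sinh(3.0 * affine(X))

# The nonlinear and affine functions induce identical labels on random inputs.
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 2))
same_labels = np.array_equal(g(X) > 0, affine(X) > 0)

# Midpoint check: an affine function would give a gap of zero.
x1, x2 = np.array([0.0, 0.0]), np.array([2.0, 0.0])
midpoint_gap = abs(g((x1 + x2) / 2) - (g(x1) + g(x2)) / 2)

print("same labels:", same_labels, "| midpoint gap:", midpoint_gap)
```

The labels agree everywhere because `sinh` preserves sign, while the large midpoint gap shows that `g` itself is far from linear.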

Moreover, it should be noted that, while the decision boundary of deep networks is very flat on random two-dimensional cross sections, these boundaries are not flat on all cross sections. That is, there exist directions in which the boundary is very curved. Figure 9 provides some illustrations of such cross sections, where the decision boundary has large curvature and therefore significantly departs from the first-order linear approximation, suggested by the flatness of the decision boundary on random sections in Figure 7.

Hence, these visualizations of the decision boundary strongly suggest that the curvature along a small set of directions can be very large and that the curvature is relatively small along random directions in the input space. Using a numerical computation of the curvature, the sparsity of the curvature profile is empirically verified in [28] for deep neural networks, and the directions where the decision boundary is curved are further shown to play a major role in explaining the robustness properties of classifiers. In [29], the authors provide a complementary analysis on the curvature of the decision boundaries induced by deep networks and show that the first principal curvatures increase exponentially with the depth of a random neural network. The analyses of [28] and [29] hence suggest that the curvature profile of deep networks is highly sparse (i.e., the decision boundaries are almost flat along most directions) but can have a very large curvature along a few directions.

Universal perturbations

To be added.