文章目录

前言

本文从数据预处理开始,基于LeNet搭建一个最简单的3D的CNN,计算医学图像分类常用指标AUC,ACC,Sep,Sen,并用5折交叉验证来提升预测指标,来实现3D的MRI图像二分类

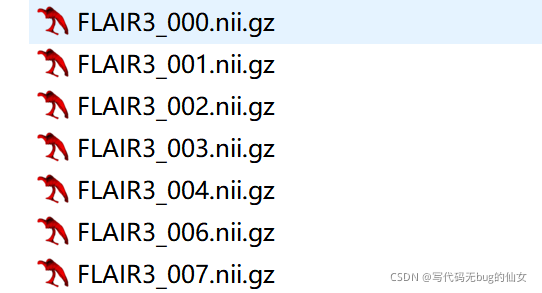

一、将nii图像数据转成npy格式

首先将nii图像数据转成npy格式,方便输入网络

import nibabel as nib

import os

import numpy as np

from skimage.transform import resize

import pandas as pd

def mkdir(path):

if not os.path.exists(path):

os.makedirs(path)

img_path = 'E:\TSC\deep_learing_need\data for paper\FLAIR3' #nii文件

save_path = 'E:\TSC\deep_learing_need\data for paper\FLAIR3_npy' #npy文件

mkdir(save_path)

#FLAIR3_000.nii.gz 文件类型命名举例

label_pd = pd.read_excel('E:\TSC\deep_learing_need\clinical_features.xlsx',sheet_name = 'label')#label excel 存放每一个数据的pid和对应的label,如:pid:100,label:0

for img_name in os.listdir(img_path):

net_data = []

pid = img_name.split('_')[1].split('.')[0]

print(pid)

print(img_name)

label = label_pd[label_pd['pid'] == int(pid) ]['label']

print(os.path.join(img_path,img_name))

img_data = nib.load(os.path.join(img_path,img_name))

img = img_data.get_fdata()

img = resize(img, (128,128,128), order=0)#将图像大小进行统一缩放,方便输入网络,分别为(h,w,c),可根据自己的数据集来更改

# img = nib.load(os.path.join(img_path,img_name).get_fdata() #载入

img = np.array(img)

#nomalization

if np.min(img) < np.max(img):

img = img - np.min(img)

img = img / np.max(img)

if np.unique(label==1):

label_data = 1

net_data.append([img,label_data])

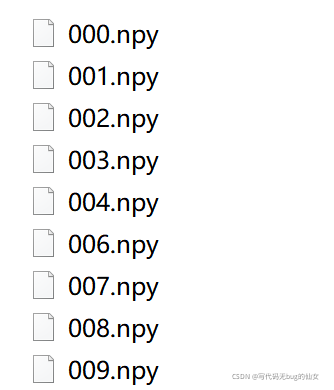

np.save(os.path.join(save_path,pid), net_data) #保存

if np.unique(label==0):

label_data = 0

net_data.append([img,label_data])

np.save(os.path.join(save_path,pid), net_data) #保存

print('Done!')

二、加载数据

1.加载数据,Dataset.py:

import torch

# 定义GetLoader类,继承Dataset方法,并重写__getitem__()和__len__()方法

class GetLoader(torch.utils.data.Dataset):

# 初始化函数,得到数据

def __init__(self, data_root, data_label):

self.data = data_root

self.label = data_label

# index是根据batchsize划分数据后得到的索引,最后将data和对应的labels进行一起返回

def __getitem__(self, index):

data = self.data[index]

labels = self.label[index]

return torch.tensor(data).float(), torch.tensor(labels).float()

# 该函数返回数据大小长度,目的是DataLoader方便划分,如果不知道大小,DataLoader会一脸懵逼

def __len__(self):

return len(self.data)

1.一些其他函数,utils.py:

from skimage import transform,exposure

from sklearn import model_selection, preprocessing, metrics, feature_selection

import os

import numpy as np

import random

import torch

#加载npy数据和label

def load_npy_data(data_dir,split):

datanp=[] #images

truenp=[] #labels

for file in os.listdir(data_dir):

data=np.load(os.path.join(data_dir,file),allow_pickle=True)

# data[0][0] = resize(data[0][0], (224,224,224))

if(split =='train'):

data_sug= transform.rotate(data[0][0], 60) #旋转60度,不改变大小

data_sug2 = exposure.exposure.adjust_gamma(data[0][0], gamma=0.5)#变亮

datanp.append(data_sug)

truenp.append(data[0][1])

datanp.append(data_sug2)

truenp.append(data[0][1])

datanp.append(data[0][0])

truenp.append(data[0][1])

datanp = np.array(datanp)

#numpy.array可使用 shape。list不能使用shape。可以使用np.array(list A)进行转换。

#不能随意加维度

datanp=np.expand_dims(datanp,axis=4) #加维度,from(1256,256,128)to(256,256,128,1),according the cnn tabel.png

datanp = datanp.transpose(0,4,1,2,3)

truenp = np.array(truenp)

print(datanp.shape, truenp.shape)

print(np.min(datanp), np.max(datanp), np.mean(datanp), np.median(datanp))

return datanp,truenp

#定义随机种子

def set_seed(seed):

random.seed(seed)

os.environ["PYTHONHASHSEED"] = str(seed)

np.random.seed(seed)

torch.manual_seed(seed)

if torch.cuda.is_available():

torch.cuda.manual_seed_all(seed)

torch.backends.cudnn.deterministic = True

def _init_fn(worker_id):

np.random.seed(int(12) + worker_id)

#计算分类的各项指标

def calculate(score, label, th):

score = np.array(score)

label = np.array(label)

pred = np.zeros_like(label)

pred[score >= th] = 1

pred[score < th] = 0

TP = len(pred[(pred > 0.5) & (label > 0.5)])

FN = len(pred[(pred < 0.5) & (label > 0.5)])

TN = len(pred[(pred < 0.5) & (label < 0.5)])

FP = len(pred[(pred > 0.5) & (label < 0.5)])

AUC = metrics.roc_auc_score(label, score)

result = {'AUC': AUC, 'acc': (TP + TN) / (TP + TN + FP + FN), 'sen': (TP) / (TP + FN + 0.0001),

'spe': (TN) / (TN + FP + 0.0001)}

# print('acc',(TP+TN),(TP+TN+FP+FN),'spe',(TN),(TN+FP),'sen',(TP),(TP+FN))

return result

def mkdir(path):

if not os.path.exists(path):

os.makedirs(path)

二、建模 model.py

import torch.nn as nn

import torch.nn.functional as F

class LeNet(nn.Module):

def __init__(self):

super(LeNet, self).__init__()

self.conv1 = nn.Conv3d(1, 16, kernel_size=3, stride=2, padding=1)

self.pool1 = nn.MaxPool3d(2, 2)

self.conv2 = nn.Conv3d(16, 32, kernel_size=3, stride=2, padding=1)

self.pool2 = nn.MaxPool3d(2, 2)

self.fc1 = nn.Linear(32*8*8*8, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84,1)

def forward(self, x):

x = F.relu(self.conv1(x)) # input(1, 128, 128,128) output(16, 65, 65,65)

x = self.pool1(x) # output(16, 32, 32,32)

x = F.relu(self.conv2(x)) # output(32, 16, 16)

x = self.pool2(x) # output(32, 8, 8)

x = x.view(-1, 32*8*8*8) # output(32*8*8*8)

x = F.relu(self.fc1(x)) # output(120)

x = F.relu(self.fc2(x)) # output(84)

x = self.fc3(x) # output(10)

return x

二、训练 train.py

接下来就是训练了

from model import LeNet

from skimage import transform,exposure

from sklearn import model_selection, preprocessing, metrics, feature_selection

import os

import time

import numpy as np

import pandas as pd

import torch

from torch.utils import data as torch_data

from torch.nn import functional as torch_functional

from Dataset import GetLoader

from sklearn.model_selection import StratifiedKFold

from sklearn.metrics import roc_auc_score

from utils import mkdir,load_npy_data,calculate,_init_fn,set_seed

set_seed(12)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

train_path = '/home/mist/cloud/T1_sag_npy/train'

model_path = 'cloud/model_save_t1_sag'

model_floder = 'model_t1_sag_10.26_lenet'

save_path = os.path.join(model_path, model_floder)

mkdir(save_path)

datanp_train,truenp_train = load_npy_data(train_path,'1')

# 通过GetLoader将数据进行加载,返回Dataset对象,包含data和labels

train_data_retriever2 = GetLoader(datanp_train, truenp_train)

class Trainer:

def __init__(

self,

model,

device,

optimizer,

criterion

):

self.model = model

self.device = device

self.optimizer = optimizer

self.criterion = criterion

self.best_valid_score = 0 # np.inf

self.n_patience = 0

self.lastmodel = None

def fit(self, epochs, train_loader, valid_loader, modility, save_path, patience, fold):

best_auc = 0

for n_epoch in range(1, epochs + 1):

self.info_message("EPOCH: {}", n_epoch)

train_loss, train_auc, train_time, rst_train = self.train_epoch(train_loader)

valid_loss, valid_auc, valid_time, rst_val = self.valid_epoch(valid_loader)

self.info_message(

"[Epoch Train: {}] loss: {:.4f}, auc: {:.4f},time: {:.2f} s ",

n_epoch, train_loss, train_auc, train_time

)

self.info_message(

"[Epoch Valid: {}] loss: {:.4f}, auc: {:.4f}, time: {:.2f} s",

n_epoch, valid_loss, valid_auc, valid_time

)

# if True:

# if self.best_valid_score > valid_loss:

if self.best_valid_score < valid_auc and n_epoch > 20:

# if self.best_valid_score > valid_loss:

self.save_model(n_epoch, modility, save_path, valid_loss, valid_auc, fold)

self.info_message(

"loss decrease from {:.4f} to {:.4f}. Saved model to '{}'",

self.best_valid_score, valid_auc, self.lastmodel

)

self.best_valid_score = valid_auc

self.n_patience = 0

final_rst_train = rst_train

final_rst_val = rst_val

else:

self.n_patience += 1

if self.n_patience >= patience:

self.info_message("\nValid auc didn't improve last {} epochs.", patience)

break

all_rst = [final_rst_train, final_rst_val]

rst = pd.concat(all_rst, axis=1)

print(rst)

print('fold ' + str(fold) + ' finished!')

return rst

def train_epoch(self, train_loader):

self.model.train()

t = time.time()

sum_loss = 0

y_all = []

outputs_all = []

for step, batch in enumerate(train_loader, 1):

X = batch[0].to(self.device)

targets = batch[1].to(self.device)

self.optimizer.zero_grad()

outputs = self.model(X).squeeze(1)

loss = self.criterion(outputs, targets)

loss.backward()

sum_loss += loss.detach().item()

y_all.extend(batch[1].tolist())

outputs_all.extend(outputs.tolist())

self.optimizer.step()

message = 'Train Step {}/{}, train_loss: {:.4f}'

self.info_message(message, step, len(train_loader), sum_loss / step, end="\r")

y_all = [1 if x > 0.5 else 0 for x in y_all]

auc = roc_auc_score(y_all, outputs_all)

fpr_micro, tpr_micro, th = metrics.roc_curve(y_all, outputs_all)

max_th = -1

max_yd = -1

for i in range(len(th)):

yd = tpr_micro[i] - fpr_micro[i]

if yd > max_yd:

max_yd = yd

max_th = th[i]

rst_train = pd.DataFrame([calculate(outputs_all, y_all, max_th)])

return sum_loss / len(train_loader), auc, int(time.time() - t), rst_train

def valid_epoch(self, valid_loader):

self.model.eval()

t = time.time()

sum_loss = 0

y_all = []

outputs_all = []

for step, batch in enumerate(valid_loader, 1):

with torch.no_grad():

X = batch[0].to(self.device)

targets = batch[1].to(self.device)

outputs = self.model(X).squeeze(1)

loss = self.criterion(outputs, targets)

sum_loss += loss.detach().item()

y_all.extend(batch[1].tolist())

outputs_all.extend(outputs.tolist())

message = 'Valid Step {}/{}, valid_loss: {:.4f}'

self.info_message(message, step, len(valid_loader), sum_loss / step, end="\r")

y_all = [1 if x > 0.5 else 0 for x in y_all]

auc = roc_auc_score(y_all, outputs_all)

fpr_micro, tpr_micro, th = metrics.roc_curve(y_all, outputs_all)

max_th = -1

max_yd = -1

for i in range(len(th)):

yd = tpr_micro[i] - fpr_micro[i]

if yd > max_yd:

max_yd = yd

max_th = th[i]

rst_val = pd.DataFrame([calculate(outputs_all, y_all, max_th)])

return sum_loss / len(valid_loader), auc, int(time.time() - t), rst_val

def save_model(self, n_epoch, modility, save_path, loss, auc, fold):

os.makedirs(save_path, exist_ok=True)

self.lastmodel = os.path.join(save_path, f"{modility}-fold{fold}.pth")

# self.lastmodel = f"{save_path}-e{n_epoch}-loss{loss:.3f}-auc{auc:.3f}.pth"

torch.save(

{

"model_state_dict": self.model.state_dict(),

"optimizer_state_dict": self.optimizer.state_dict(),

"best_valid_score": self.best_valid_score,

"n_epoch": n_epoch,

},

self.lastmodel,

)

@staticmethod

def info_message(message, *args, end="\n"):

print(message.format(*args), end=end)

def train_mri_type(mri_type):

#5折交叉验证,每一折保存一个模型

skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

rst_dfs = []

for fold, (train_idx, val_idx) in enumerate(skf.split(datanp_train, truenp_train)):

print(f'Training for fold {fold} ...')

train_x, train_y, val_x, val_y = datanp_train[train_idx], truenp_train[train_idx], datanp_train[val_idx], \

truenp_train[val_idx]

train_data_retriever = GetLoader(train_x, train_y)

valid_data_retriever = GetLoader(val_x, val_y)

train_loader = torch_data.DataLoader(

train_data_retriever,

batch_size=4,

shuffle=True,

num_workers=8,

pin_memory=False,

worker_init_fn=_init_fn

)

valid_loader = torch_data.DataLoader(

valid_data_retriever,

batch_size=4,

shuffle=False,

num_workers=8,

pin_memory=False,

worker_init_fn=_init_fn

)

model = LeNet()

model.to(device)

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

criterion = torch_functional.binary_cross_entropy_with_logits

# criterion = nn.BCELoss()

trainer = Trainer(

model,

device,

optimizer,

criterion

)

rst = trainer.fit(

50,

train_loader,

valid_loader,

f"{mri_type}",

save_path,

30,

fold,

)

rst_dfs.append(rst)

rst_dfs = pd.concat(rst_dfs)

print(rst_dfs)

rst_dfs = pd.DataFrame(rst_dfs)

rst_dfs.to_csv(os.path.join(save_path, 'train_val_res_pf.csv'))#保存每一折的指标

return trainer.lastmodel, rst_dfs

modelfiles = None

#开始训练

if not modelfiles:

modelfiles, rst_dfs = train_mri_type('t1_sag')

print(modelfiles)

二、预测 predict.py

用5折交叉验证保存的5个模型,分别进行预测,预测之后计算两种指标:1,对5个模型预测指标直接取平均,2,对5个模型预测的分数取平均,用平均分数计算最后的指标

from model import LeNet

from skimage import transform,exposure

from sklearn import model_selection, preprocessing, metrics, feature_selection

import os

import numpy as np

import pandas as pd

import torch

from torch.utils import data as torch_data

from sklearn.metrics import roc_auc_score

from utils import mkdir,load_npy_data,calculate,_init_fn,set_seed

from Dataset import GetLoader

test_path = '/home/mist/cloud/T1_sag_npy/test'

model_path = 'cloud/model_save_t1_sag'

model_floder = 'model_t1_sag_10.26_lenet' # model_TSC3:reset the train and test as machine learning

save_path = os.path.join(model_path, model_floder)

mkdir(save_path)

datanp_test, truenp_test = load_npy_data(test_path,'1')

# 通过GetLoader将数据进行加载,返回Dataset对象,包含data和labels

test_data_retriever = GetLoader(datanp_test, truenp_test)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

def predict(modelfile, mri_type, split):

print(modelfile)

data_retriever = test_data_retriever

data_loader = torch_data.DataLoader(

data_retriever,

batch_size=4,

shuffle=False,

num_workers=8,

)

model = LeNet()

model.to(device)

checkpoint = torch.load(modelfile)

model.load_state_dict(checkpoint["model_state_dict"])

model.eval()

y_pred = []

ids = []

y_all = []

for e, batch in enumerate(data_loader, 1):

print(f"{e}/{len(data_loader)}", end="\r")

with torch.no_grad():

tmp_pred = torch.sigmoid(model(batch[0].to(device))).cpu().numpy().squeeze()

# tmp_pred = model(batch[0].to(device)).cpu().numpy().squeeze()

targets = batch[1].to(device)

if tmp_pred.size == 1:

y_pred.append(tmp_pred)

else:

y_pred.extend(tmp_pred.tolist())

y_all.extend(batch[1].tolist())

y_all = [1 if x > 0.5 else 0 for x in y_all]

auc = roc_auc_score(y_all, y_pred)

fpr_micro, tpr_micro, th = metrics.roc_curve(y_all, y_pred)

max_th = -1

max_yd = -1

for i in range(len(th)):

yd = tpr_micro[i] - fpr_micro[i]

if yd > max_yd:

max_yd = yd

max_th = th[i]

rst_val = pd.DataFrame([calculate(y_pred, y_all, max_th)])

preddf = pd.DataFrame({"label": y_all, "y_pred": y_pred})

return preddf, rst_val#返回每一个病人的预测分数的表格和预测指标AUC,ACC,Sep,sen

save_path = os.path.join(model_path,model_floder)

#加载保存的5个模型

modelfiles = []

for model_name in os.listdir(save_path):

if model_name.endswith('.pth'):

model_path_final = os.path.join(save_path,model_name)

modelfiles.append(model_path_final)

#1,用5个模型对测试集数据进行预测,并取平均值

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

rst_test_all = []

scores = []

df_test = {}

for m in modelfiles:

mtype = m.split('/')[-1].split('-')[1].split('.')[0]

preddf,rst_test = predict(m,'T2', 'test')

rst_test_all.append(rst_test)

df_test[mtype] = preddf["y_pred"]

rst_test_all = pd.concat(rst_test_all)

rst_test_all = pd.DataFrame(rst_test_all)

df_test['label'] = preddf["label"]

df_test = pd.DataFrame(df_test)

rst_test_all.loc['mean'] = rst_test_all.mean(axis = 0)

rst_test_all.to_csv(os.path.join(save_path, 'test_res_pf.csv'))

print('测试集5折5个模型预测,取平均指标:',rst_test_all)

#2,对5折预测的分数取平均值,并计算指标

df_test = pd.DataFrame(df_test)

df_test["Average"] = 0

# for mtype in mri_types:

for i in range(0,5):

df_test["Average"] += df_test.iloc[:,i]

df_test["Average"] /= 5

df_test.to_csv(os.path.join(save_path, 'test_score.csv'))

auc = roc_auc_score(df_test["label"], df_test["Average"])

print(f"test ensemble AUC: {auc:.4f}")

fpr_micro, tpr_micro, th = metrics.roc_curve(df_test["label"], df_test["Average"])

max_th = -1

max_yd = -1

for i in range(len(th)):

yd = tpr_micro[i]-fpr_micro[i]

if yd > max_yd:

max_yd = yd

max_th = th[i]

print(max_th)

rst_test = pd.DataFrame([calculate( df_test["Average"],df_test["label"], max_th)])

rst_test.to_csv(os.path.join(save_path, 'test_ensembel_res.csv'))

print('5折分数取平均之后的测试集指标:',rst_test)

print('5折预测的分数,以及分数平均值表格:',df_test)

总结

本文从数据预处理开始,用最简单的3D的CNN实现五折交叉验证的MRI图像二分类