NLP Starry Sky Intelligent Dialogue Robot Series: A Deep Dive into Transformers for Natural Language Processing: Semantic Role Labeling (SRL)


Semantic Role Labeling with BERT-Based Transformers

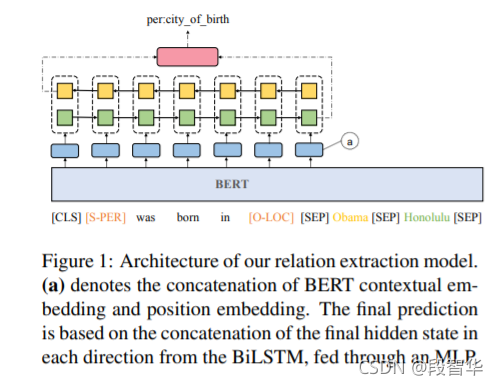

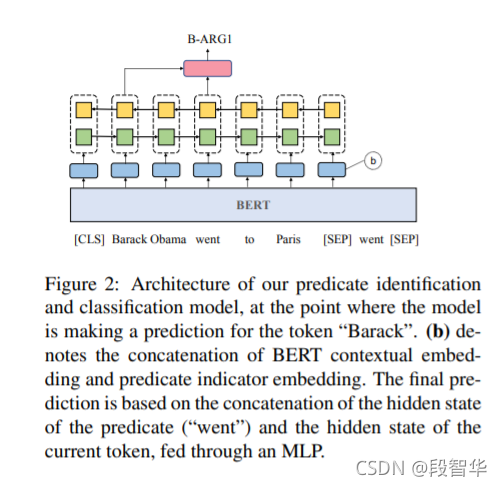

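Transformers have made more progress in the past few years than the previous generation of NLP did. Standard NLU approaches first learn syntactic and lexical features to explain the structure of a sentence; earlier NLP models were trained to understand the basic syntax of a language before running Semantic Role Labeling (SRL). Shi and Lin (2019) open their paper by asking whether this preliminary syntactic and lexical training can be skipped. Can a BERT-based model perform SRL without going through those classical training phases? The answer is yes! Shi and Lin (2019) suggest that SRL can be treated as sequence labeling with a standardized input format, and their BERT-based model produced surprisingly good results.

In this article, we will use a pretrained BERT-based model provided by the Allen Institute for AI, which is based on the Shi and Lin (2019) paper. By dropping syntactic and lexical training, Shi and Lin took SRL to a new level. We will begin by defining SRL and the standardization of the sequence labeling input format. We will then use the resources provided by the Allen Institute for AI to run SRL tasks and examine the results.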
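To make the standardized input format mentioned above more concrete, here is a minimal sketch of the sequence that Shi and Lin (2019) describe feeding to BERT: the sentence followed by the predicate, separated by special tokens. The function name `build_srl_input` is hypothetical, and whitespace splitting stands in for BERT's real WordPiece tokenizer. The AllenNLP model card describes its implementation as having "some modifications", so the encoding used by that model may differ from this sketch.

```python
from typing import List


def build_srl_input(sentence_tokens: List[str], predicate_tokens: List[str]) -> List[str]:
    """Assemble the '[CLS] sentence [SEP] predicate [SEP]' token sequence.

    Hypothetical helper for illustration only; a real pipeline would use
    BERT's WordPiece tokenizer rather than pre-split words.
    """
    return ["[CLS]", *sentence_tokens, "[SEP]", *predicate_tokens, "[SEP]"]


# Whitespace splitting is an assumption made to keep the sketch self-contained.
tokens = "Marvin walked in the park".split()
print(build_srl_input(tokens, ["walked"]))
```

The model then predicts one label per sentence token, which is what makes SRL a sequence labeling task.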

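We will challenge the BERT-based model by running SRL samples. The first sample shows how SRL works. We will then run some more difficult samples, progressively pushing the BERT-based model to the limits of SRL. Finding the limits of a model is the best way to ensure that transformer models remain realistic and pragmatic.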

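This article covers the following topics:

- Defining Semantic Role Labeling
- Defining the standardization of the input format for SRL
- The main aspects of the BERT-based model's architecture
- How the encoder stack manages a masked SRL input format
- The SRL attention process of the BERT-based model
- Using the resources provided by the Allen Institute for AI
- Building a TensorFlow notebook to run a pretrained BERT-based model
- Testing sentence labeling on basic examples
- Testing SRL on difficult examples and explaining the results
- Taking the BERT-based model to the limits of SRL and explaining how this was done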

Our first step will be to explore the SRL approach defined by Shi and Lin (2019).

Getting started with SRL

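SRL is as difficult for humans as it is for machines. However, once again, transformers have taken a step closer to our human baselines. In this section, we will first define SRL and visualize an example. We will then run a pretrained BERT-based model. Let's begin by defining the problematic task of SRL.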

Defining Semantic Role Labeling

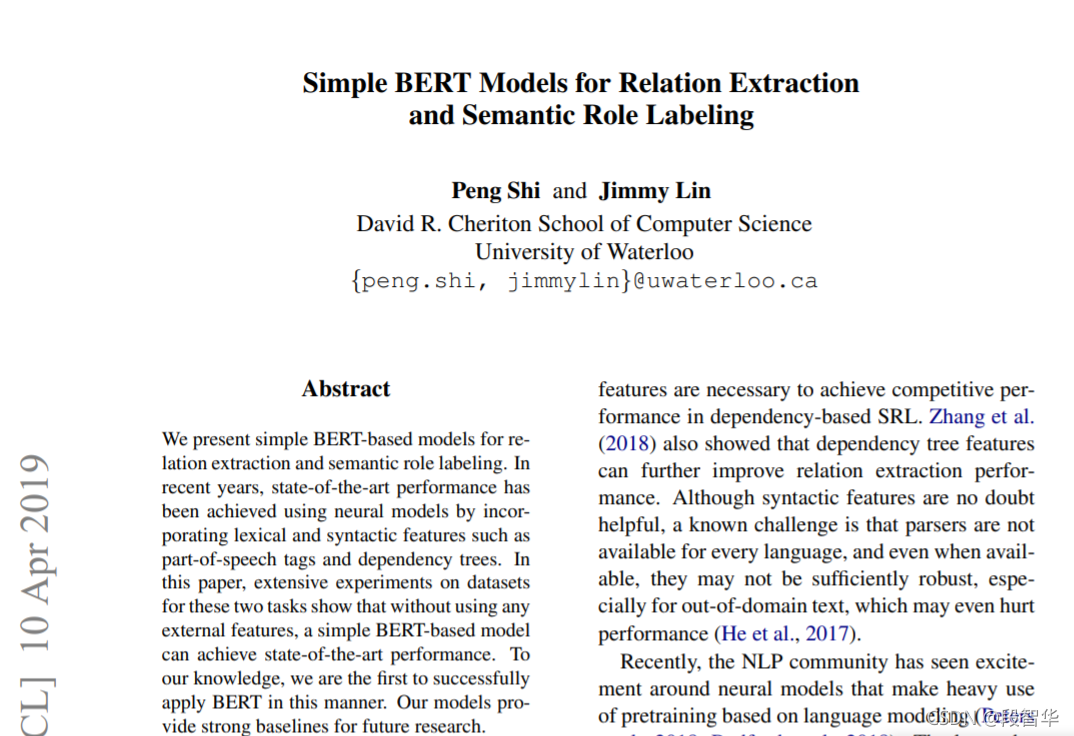

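Shi and Lin (2019) advanced and proved the idea that we can find who did what, and where, without depending on lexical or syntactic features. This article is based on the research of Peng Shi and Jimmy Lin at the University of Waterloo (Ontario, Canada). They showed how transformers learn language structures better through their attention layers.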

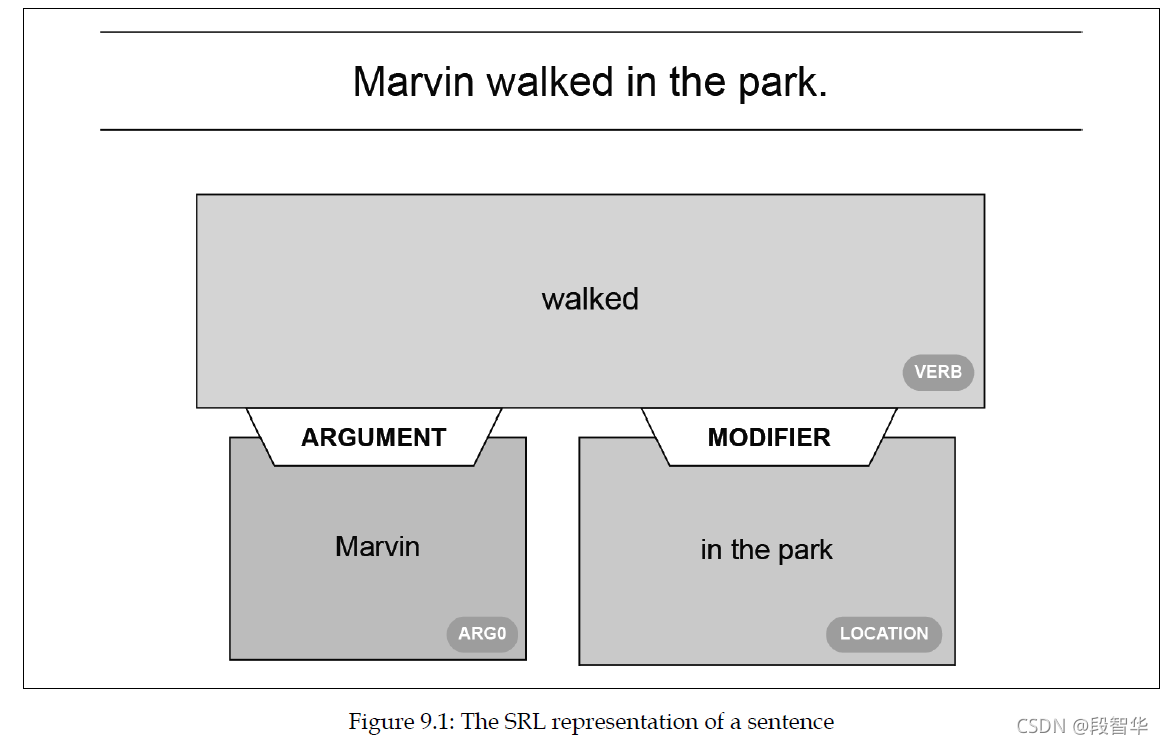

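SRL labels the semantic role that a word or group of words plays in a sentence and the relationship established with the predicate. A semantic role is the role a noun or noun phrase plays in relation to the main verb of a sentence. In the sentence "Marvin walked in the park," Marvin is the agent of the event taking place in the sentence. The agent is the doer of the event. The main verb, or governing verb, is "walked."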

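The predicate describes something about the subject or agent. A predicate can be anything that provides information on the features or actions of a subject. In our approach, we will refer to the predicate as the main verb. In the sentence "Marvin walked in the park," the predicate is "walked" in its finite form.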

The words "in the park" modify the meaning of "walked" and are the modifier.

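The nouns or noun phrases that revolve around the predicate are arguments or argument terms. "Marvin," for example, is an argument of the predicate "walked." We can see that SRL does not require a syntax tree or a lexical analysis. Let's visualize the SRL of our example.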

Visualizing SRL

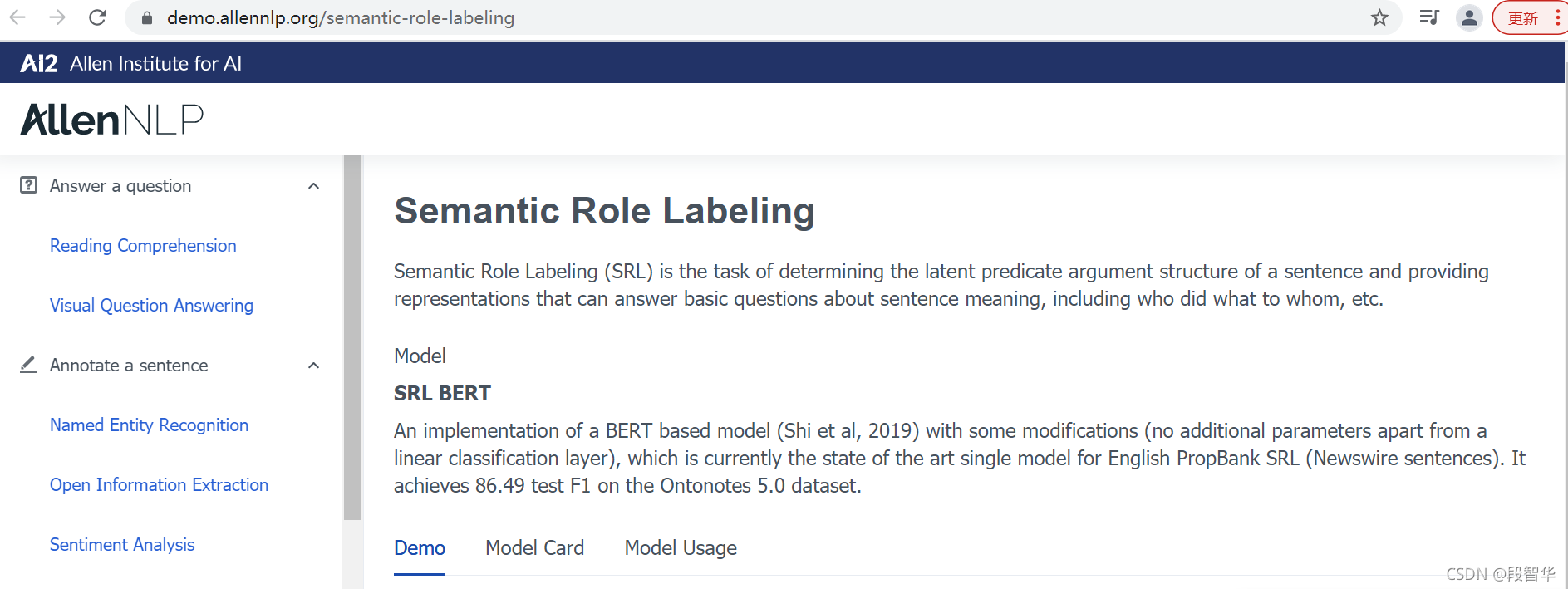

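We will use the visualization and code resources of the Allen Institute for AI. The Allen Institute for AI provides excellent interactive online tools, which we use to represent SRL visually. You can access these tools at https://demo.allennlp.org/. The Allen Institute for AI advocates "AI for the Common Good," and we will make good use of this approach. All of the figures in this article were created with the AllenNLP tools.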

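The Allen Institute for AI provides transformer models that continuously evolve, so the examples in this article may produce different results when you run them. The best way to get the most out of this article is to:

- Read and understand the concepts explained, rather than just running the program.
- Take the time to understand the examples provided.
- Then run your own experiments with the tool on sentences of your choice.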

Semantic Role Labeling example

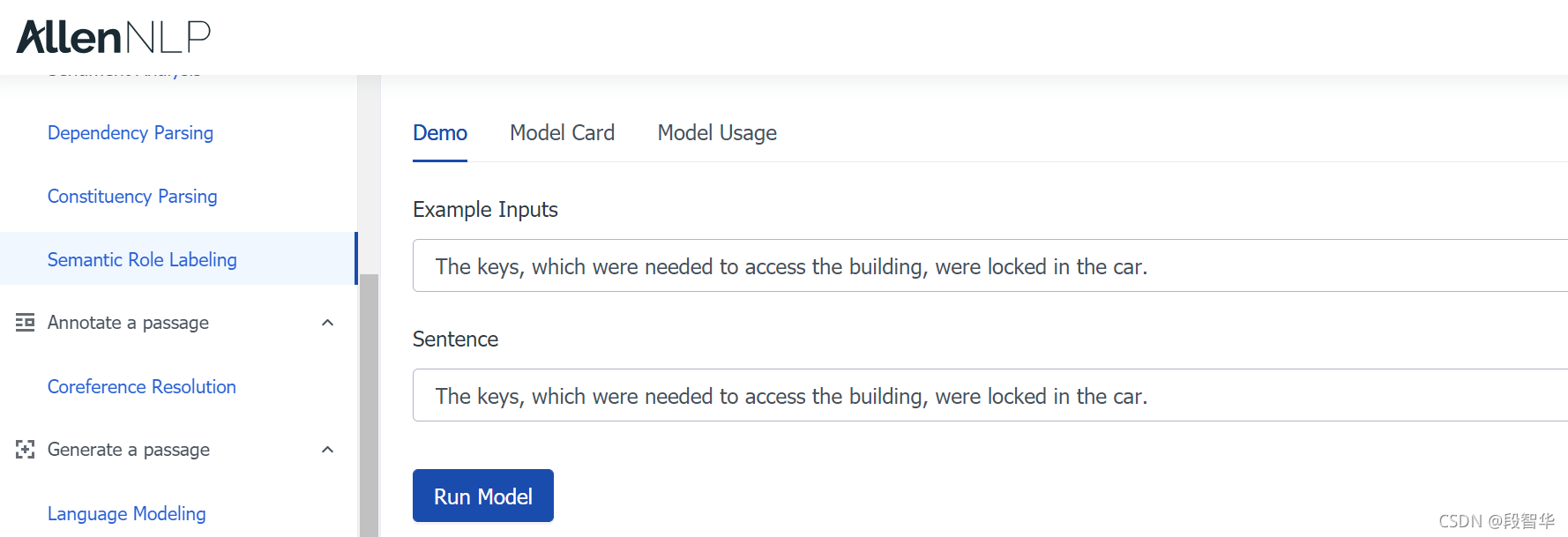

Link: https://demo.allennlp.org/semantic-role-labeling

Paper: https://arxiv.org/pdf/1904.05255.pdf

Input example

The keys, which were needed to access the building, were locked in the car.

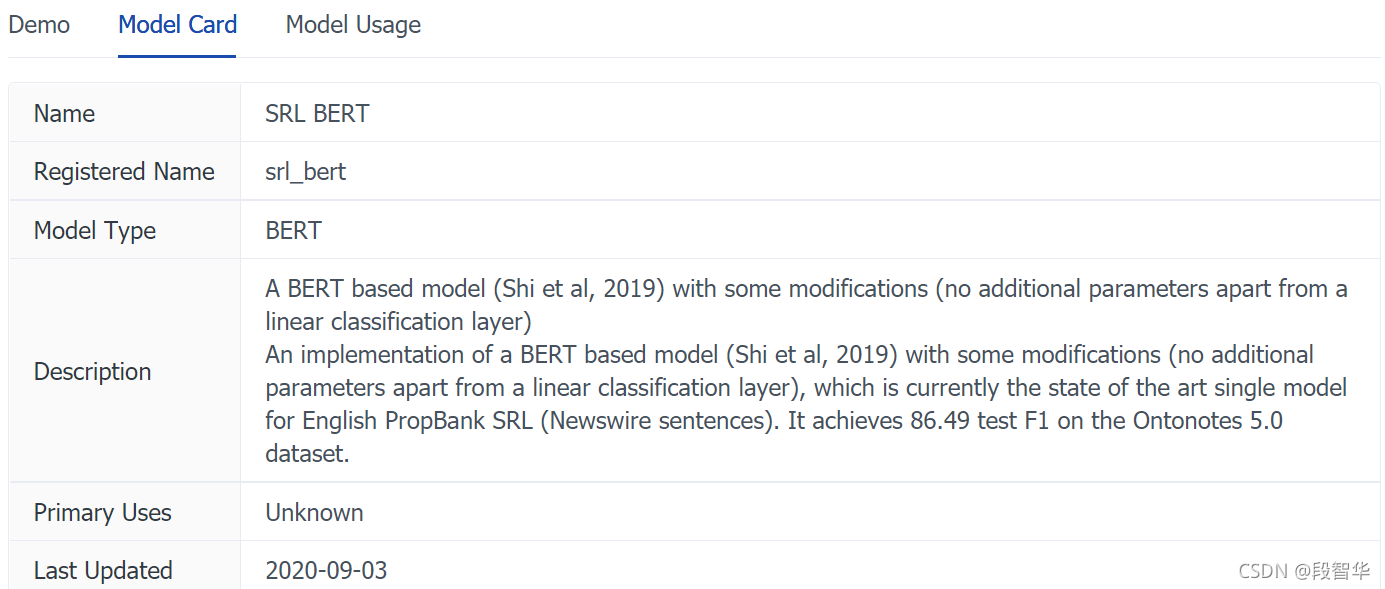

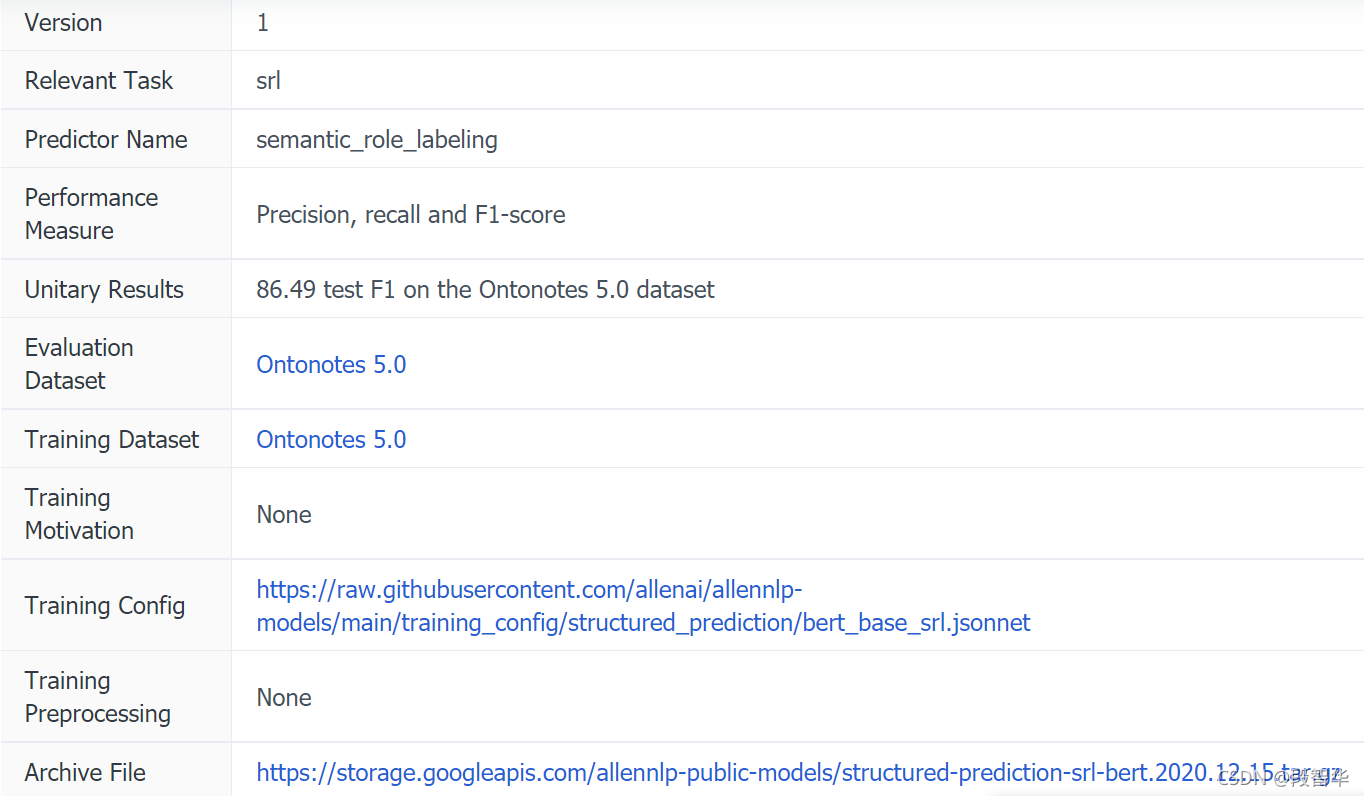

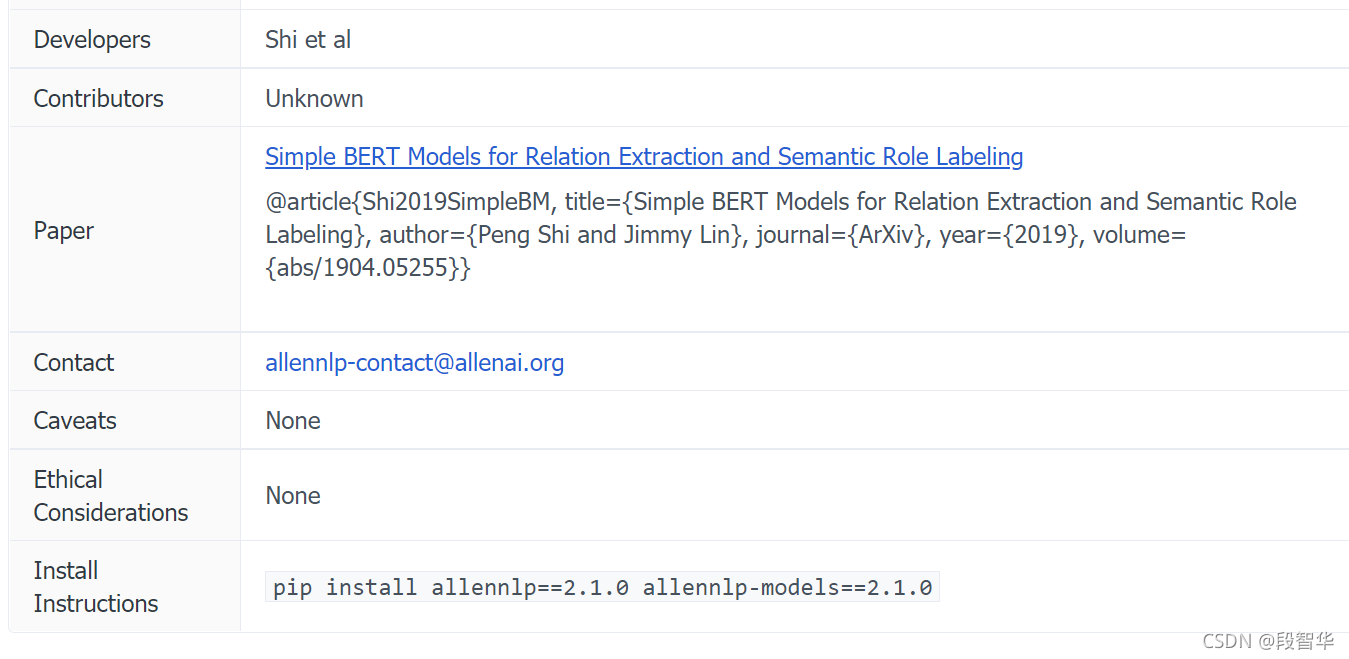

Model information

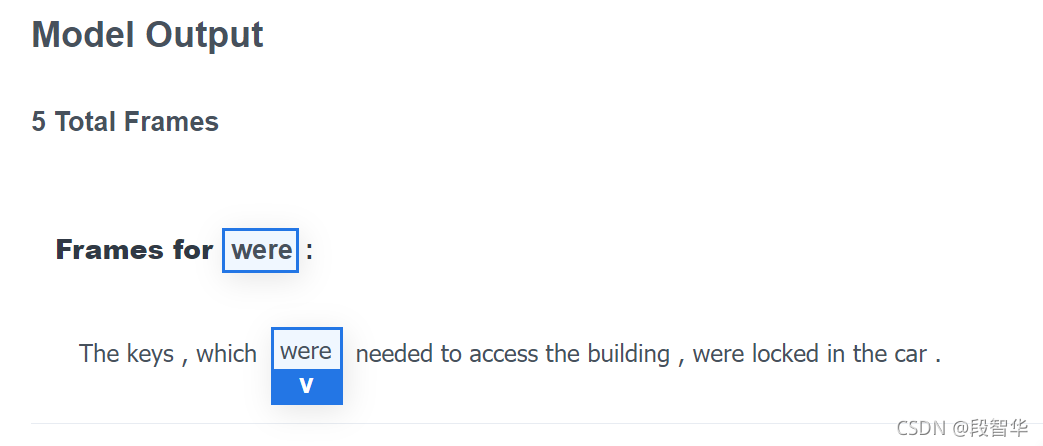

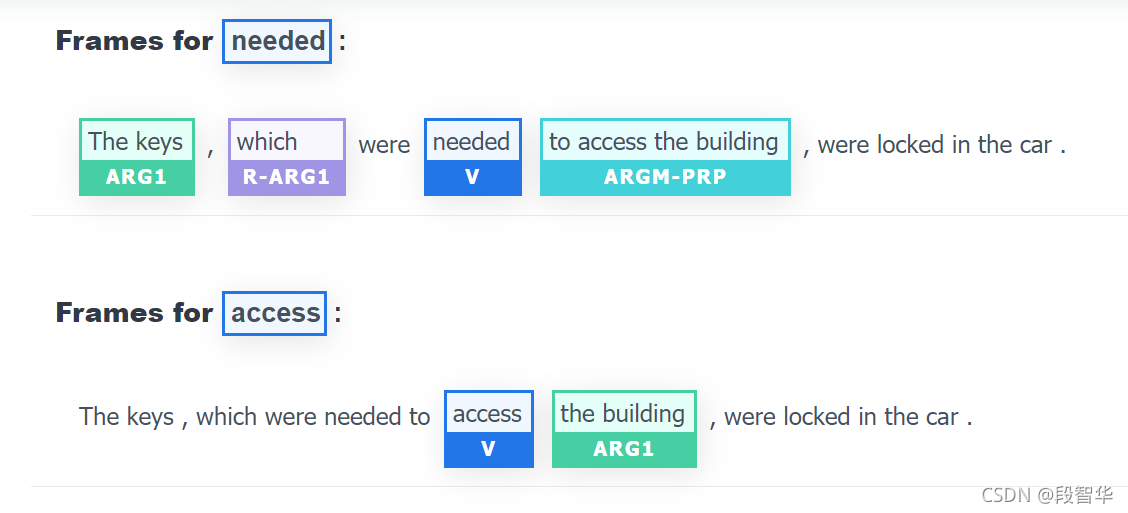

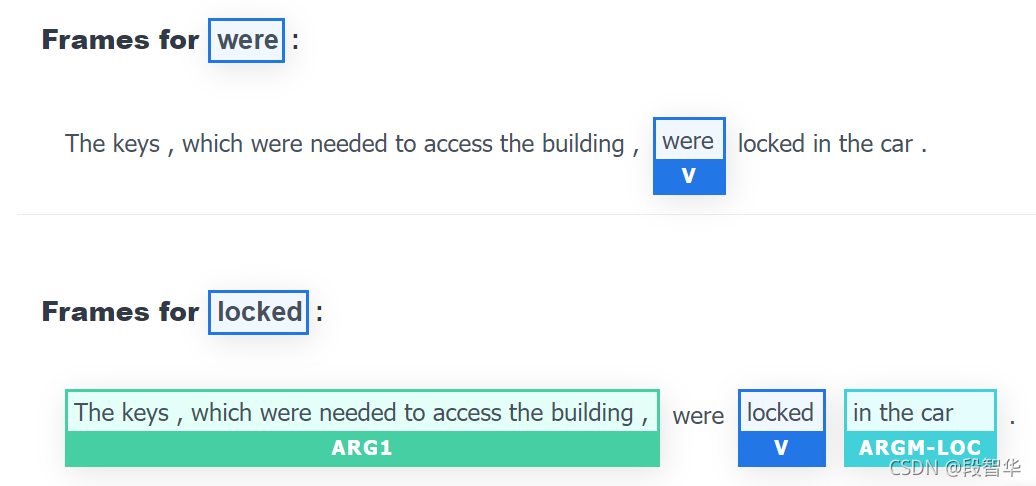

The results of the run are as follows:

Command-line format:

- Input

      {
        "sentence": "The keys, which were needed to access the building, were locked in the car."
      }

- Model

      {
        "id": "semantic-role-labeling",
        "card": {
          "archive_file": "https://storage.googleapis.com/allennlp-public-models/structured-prediction-srl-bert.2020.12.15.tar.gz",
          "contact": "allennlp-contact@allenai.org",
          "date": "2020-09-03",
          "description": "An implementation of a BERT based model (Shi et al, 2019) with some modifications (no additional parameters apart from a linear classification layer), which is currently the state of the art single model for English PropBank SRL (Newswire sentences). It achieves 86.49 test F1 on the Ontonotes 5.0 dataset.",
          "developed_by": "Shi et al",
          "display_name": "SRL BERT",
          "evaluation_dataset": {
            "name": "Ontonotes 5.0",
            "notes": "We cannot release this data due to licensing restrictions.",
            "processed_url": null,
            "url": "https://catalog.ldc.upenn.edu/LDC2013T19"
          },
          "install_instructions": "pip install allennlp==2.1.0 allennlp-models==2.1.0",
          "model_performance_measures": "Precision, recall and F1-score",
          "model_type": "BERT",
          "paper": {
            "citation": "\n@article{Shi2019SimpleBM,\ntitle={Simple BERT Models for Relation Extraction and Semantic Role Labeling},\nauthor={Peng Shi and Jimmy Lin},\njournal={ArXiv},\nyear={2019},\nvolume={abs/1904.05255}}\n",
            "title": "Simple BERT Models for Relation Extraction and Semantic Role Labeling",
            "url": "https://api.semanticscholar.org/CorpusID:131773936"
          },
          "registered_model_name": "srl_bert",
          "registered_predictor_name": "semantic_role_labeling",
          "short_description": "A BERT based model (Shi et al, 2019) with some modifications (no additional parameters apart from a linear classification layer)",
          "task_id": "srl",
          "training_config": "https://raw.githubusercontent.com/allenai/allennlp-models/main/training_config/structured_prediction/bert_base_srl.jsonnet",
          "training_dataset": {
            "name": "Ontonotes 5.0",
            "notes": "We cannot release this data due to licensing restrictions.",
            "processed_url": null,
            "url": "https://catalog.ldc.upenn.edu/LDC2013T19"
          },
          "unitary_results": "86.49 test F1 on the Ontonotes 5.0 dataset",
          "version": "1"
        }
      }
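The model card above gives the archive URL and the install command, which is enough to sketch how the same prediction could be run locally from Python instead of through the online demo. This is a minimal sketch, assuming `allennlp` and `allennlp-models` are installed at the versions named in the card and that the archive is still hosted at that URL. The helper names `make_input` and `run_srl` are hypothetical, and importing `allennlp_models.structured_prediction` to register the SRL model and predictor is an assumption about the package layout for that release.

```python
import json

# Archive URL copied from the model card above.
ARCHIVE_URL = (
    "https://storage.googleapis.com/allennlp-public-models/"
    "structured-prediction-srl-bert.2020.12.15.tar.gz"
)


def make_input(sentence: str) -> str:
    """Serialize a sentence into the JSON input format shown in this article."""
    return json.dumps({"sentence": sentence})


def run_srl(sentence: str, archive_url: str = ARCHIVE_URL) -> dict:
    """Download the archive (on first use) and label one sentence.

    The imports are local so that this sketch can be loaded without
    AllenNLP installed; calling the function does require it.
    """
    from allennlp.predictors.predictor import Predictor
    import allennlp_models.structured_prediction  # noqa: F401 (assumed to register srl_bert)

    predictor = Predictor.from_path(archive_url)
    return predictor.predict(sentence=sentence)


# Example call (downloads the model archive, so it is left commented out):
# result = run_srl("The keys, which were needed to access the building, were locked in the car.")
# print(result["verbs"][0]["description"])
```

Because the models keep evolving, the labels returned by a local run may differ from the output reproduced in this article.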

- Output

      {
        "verbs": [
          {
            "description": "The keys , which [V: were] needed to access the building , were locked in the car .",
            "tags": ["O", "O", "O", "O", "B-V", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O"],
            "verb": "were"
          },
          {
            "description": "[ARG1: The keys] , [R-ARG1: which] were [V: needed] [ARGM-PRP: to access the building] , were locked in the car .",
            "tags": ["B-ARG1", "I-ARG1", "O", "B-R-ARG1", "O", "B-V", "B-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "O", "O", "O", "O", "O", "O", "O"],
            "verb": "needed"
          },
          {
            "description": "The keys , which were needed to [V: access] [ARG1: the building] , were locked in the car .",
            "tags": ["O", "O", "O", "O", "O", "O", "O", "B-V", "B-ARG1", "I-ARG1", "O", "O", "O", "O", "O", "O", "O"],
            "verb": "access"
          },
          {
            "description": "The keys , which were needed to access the building , [V: were] locked in the car .",
            "tags": ["O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "B-V", "O", "O", "O", "O", "O"],
            "verb": "were"
          },
          {
            "description": "[ARG1: The keys , which were needed to access the building ,] were [V: locked] [ARGM-LOC: in the car] .",
            "tags": ["B-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "I-ARG1", "O", "B-V", "B-ARGM-LOC", "I-ARGM-LOC", "I-ARGM-LOC", "O"],
            "verb": "locked"
          }
        ],
        "words": ["The", "keys", ",", "which", "were", "needed", "to", "access", "the", "building", ",", "were", "locked", "in", "the", "car", "."]
      }
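The output above holds one frame per verb, each with a tag per word: a `B-` tag opens a labeled span, an `I-` tag continues it, and `O` marks words outside any span. The following sketch shows one way to turn that structure into a readable summary of who did what. The function names `frame_roles` and `summarize` are hypothetical, and the `output` dictionary is a trimmed copy of the result above that keeps only the "access" and "locked" frames.

```python
from typing import Dict, List, Tuple


def frame_roles(words: List[str], tags: List[str]) -> Dict[str, str]:
    """Collect the text of each labeled span in one frame, keyed by its label.

    If a label occurs more than once in a frame, the last span wins.
    """
    roles: Dict[str, str] = {}
    label, chunk = None, []
    for word, tag in zip(words, tags):
        if tag.startswith("I-") and label == tag[2:]:
            chunk.append(word)  # continue the open span
            continue
        if label is not None:  # any other tag closes the open span
            roles[label] = " ".join(chunk)
        if tag.startswith("B-"):
            label, chunk = tag[2:], [word]  # open a new span
        else:
            label, chunk = None, []  # "O" or a stray I- tag: no open span
    if label is not None:
        roles[label] = " ".join(chunk)
    return roles


def summarize(output: dict) -> List[Tuple[str, Dict[str, str]]]:
    """Return (verb, roles) pairs; a list is used because a verb can repeat."""
    return [(f["verb"], frame_roles(output["words"], f["tags"])) for f in output["verbs"]]


# Trimmed copy of the output shown above (two of the five frames).
output = {
    "words": ["The", "keys", ",", "which", "were", "needed", "to", "access",
              "the", "building", ",", "were", "locked", "in", "the", "car", "."],
    "verbs": [
        {"verb": "access",
         "tags": ["O"] * 7 + ["B-V", "B-ARG1", "I-ARG1"] + ["O"] * 7},
        {"verb": "locked",
         "tags": ["B-ARG1"] + ["I-ARG1"] * 10
                 + ["O", "B-V", "B-ARGM-LOC", "I-ARGM-LOC", "I-ARGM-LOC", "O"]},
    ],
}

for verb, roles in summarize(output):
    print(verb, roles)
```

For the "locked" frame, this recovers the whole relative clause as part of ARG1 and "in the car" as the location modifier, matching the `description` string in the output.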

SRL example: "Marvin walked in the park"

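We will now visualize the SRL representation of our example, "Marvin walked in the park."

We can observe the following labels in Figure 9.1:

- Verb: the predicate of the sentence.
- Argument: the argument of the sentence, named ARG0.
- Modifier: the modifier of the sentence. In this case, it is a location. It could have been an adverb, an adjective, or anything that modifies the predicate's meaning.

The text output is interesting as well; it contains a shortened version of the labels of the visual representation: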

walked: [ARG0: Marvin] [V: walked] [ARGM-LOC: in the park]
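The bracketed text output can be rebuilt from a list of words and their per-word tags. The sketch below is one possible way to do so, not the AllenNLP implementation. The function name `render_description` is hypothetical, and the tag sequence for "Marvin walked in the park" is an assumption inferred from the text output above rather than copied from a model run.

```python
from typing import List


def render_description(words: List[str], tags: List[str]) -> str:
    """Render words and BIO tags as '[LABEL: span]' groups; untagged words stay plain."""
    pieces: List[str] = []
    label, chunk = None, []

    def close_span() -> None:
        # Emit the open span, if any, and reset the state.
        nonlocal label, chunk
        if label is not None:
            pieces.append(f"[{label}: {' '.join(chunk)}]")
        label, chunk = None, []

    for word, tag in zip(words, tags):
        if tag.startswith("B-"):
            close_span()
            label, chunk = tag[2:], [word]
        elif tag.startswith("I-") and label == tag[2:]:
            chunk.append(word)
        else:
            close_span()
            pieces.append(word)  # word outside any labeled span
    close_span()
    return " ".join(pieces)


words = ["Marvin", "walked", "in", "the", "park"]
# Assumed tags, inferred from the text output shown above.
tags = ["B-ARG0", "B-V", "B-ARGM-LOC", "I-ARGM-LOC", "I-ARGM-LOC"]
print("walked:", render_description(words, tags))
```

Each `B-` tag starts a new bracket, which is why "Marvin", "walked", and "in the park" end up in three separate groups even though no `O` tag separates them.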

We have defined SRL and gone through an example. It is now time to look at the BERT-based model.
