
1. Environment setup

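1. Install Anaconda and use it to create and activate a virtual environment

# Create a new environment from the shell

conda create -n tensor_flow_25 python=3.8  # Python 3.8 here (>= 3.6 is required)

# List the virtual environments

conda env list

# Activate the virtual environment

conda activate tensor_flow_25

2. Inside the virtual environment, run the following to set it up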

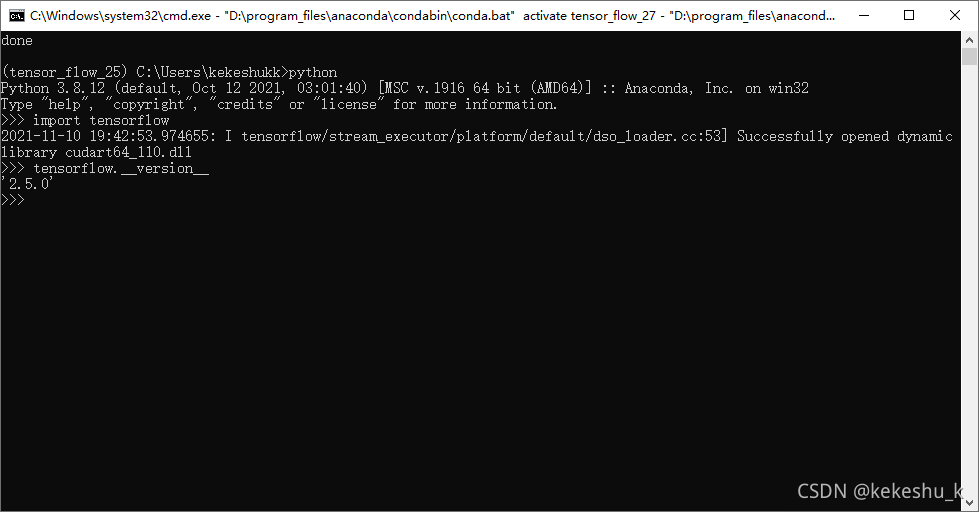

conda install tensorflow-gpu=2.5.0

Wait for the installation to finish, then verify it with the following:

# Start Python

python

# Import the package

import tensorflow

# Check the version

tensorflow.__version__
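Slicing `tf.__version__[:3]` and converting it to a float (a common quick check) misreads two-digit minor versions such as `2.10.0`, which becomes `2.1`. A minimal sketch of a tuple-based minimum-version check, assuming a `major.minor.patch` string; the helper names are hypothetical, and pre-release suffixes such as `rc1` are simply ignored:

```python
import re


def version_tuple(version: str) -> tuple:
    """Turn '2.5.0' into (2, 5, 0) and '2.10.0rc1' into (2, 10, 0).

    Only the leading digits of each dot-separated part are kept, so
    pre-release suffixes are dropped (fine for a minimum-version check).
    """
    parts = []
    for piece in version.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    return tuple(parts)


def at_least(version: str, minimum: tuple) -> bool:
    """Compare only as many components as `minimum` specifies."""
    return version_tuple(version)[:len(minimum)] >= minimum


# Inside the environment this would be used as:
#   import tensorflow as tf
#   assert at_least(tf.__version__, (2, 3))
print(at_least("2.5.0", (2, 3)))   # True
print(at_least("2.10.0", (2, 3)))  # True, whereas float("2.10.0"[:3]) == 2.1
```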

2. Quantize the weights and read intermediate results

import logging
import pathlib
import random
import re

import flatbuffers
import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import models
from tensorflow.keras.applications.vgg16 import VGG16, decode_predictions, preprocess_input
from tensorflow.keras.preprocessing import image
# Generated Python bindings for the TFLite flatbuffer schema (an internal TF module)
from tensorflow.lite.python import schema_py_generated as schema_fb

logging.getLogger("tensorflow").setLevel(logging.DEBUG)

# Compare (major, minor) as integers; float(tf.__version__[:3]) misreads e.g. "2.10"
assert tuple(int(v) for v in tf.__version__.split(".")[:2]) >= (2, 3)

# Load my dataset: one sub-folder per class under data_path
data_path = pathlib.Path('E:\\represent')
all_image_paths = list(data_path.glob('*/*'))
all_image_paths = [str(path) for path in all_image_paths]  # list of all image paths
# random.shuffle(all_image_paths)  # shuffle
image_count = len(all_image_paths)
# print(image_count)
# print(all_image_paths[:5])

label_names = sorted(item.name for item in data_path.glob('*/') if item.is_dir())
# print(label_names[:5])
label_to_index = dict((name, index) for index, name in enumerate(label_names))
# print(label_to_index)
all_image_labels = [label_to_index[pathlib.Path(path).parent.name] for path in all_image_paths]

ds = tf.data.Dataset.from_tensor_slices((all_image_paths, all_image_labels))

# Load and preprocess an image from its path
def load_and_preprocess_from_path_label(path, label):
    image = tf.io.read_file(path)  # read the raw file
    image = tf.image.decode_jpeg(image, channels=3)
    image = tf.image.resize(image, [224, 224])  # originals are (266, 320, 3); resize to VGG16's 224x224 input
    # Normalize to [0, 1] (note: Keras' ImageNet VGG16 weights were trained with preprocess_input instead)
    image /= 255.0
    return image, label

image_label_ds = ds.map(load_and_preprocess_from_path_label)

# Use the VGG16 model (convolutional base only, ImageNet weights)
model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# IMG_PATH = 'F:\\tiger_cat.jpg'
# img = image.load_img(IMG_PATH, target_size=(224, 224))
# x = image.img_to_array(img)
# x = np.expand_dims(x, axis=0)
# print(x.shape)
# x = preprocess_input(x)
# fea = model.predict(x)
# print('Predicted:', np.array(fea).shape)

# for image, _ in image_label_ds.batch(1).take(4):
#     plt.imshow(image[0])
#     print(image.shape)
#     plt.show()

'''***************************************************************************test quantization************************************************************************'''

"""
Quantize the model
"""

# # converter = tf.lite.TFLiteConverter.from_keras_model(model)
# # tflite_model = converter.convert()

# def representative_data_gen():
#     for input_value, _ in image_label_ds.batch(1).take(400):
#         # Model has only one input so each data point has one element.
#         yield [input_value]

# converter = tf.lite.TFLiteConverter.from_keras_model(model)
# converter.optimizations = [tf.lite.Optimize.DEFAULT]
# converter.representative_dataset = representative_data_gen
# # Ensure that if any ops can't be quantized, the converter throws an error
# converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
# # Set the input and output tensors to uint8 (APIs added in r2.3)
# converter.inference_input_type = tf.uint8
# converter.inference_output_type = tf.uint8
# tflite_model_quant = converter.convert()

# tflite_models_dir = pathlib.Path.cwd() / "tmp"
# tflite_models_dir.mkdir(exist_ok=True, parents=True)
# # Save the unquantized/float model:
# # tflite_model_file = tflite_models_dir/"my_cnn_model.tflite"
# # tflite_model_file.write_bytes(tflite_model)
# # Save the quantized model:
# tflite_model_quant_file = tflite_models_dir/"my_cnn_model_quant_1.tflite"
# tflite_model_quant_file.write_bytes(tflite_model_quant)

"""
Load the quantized model
"""

# # Helper function to run inference on a TFLite model
# # (adapted from the TensorFlow quantization tutorial: test_images and test_labels
# #  are NOT defined in this script and must be built first, e.g. from image_label_ds)
# def run_tflite_model(tflite_file, test_image_indices):
#     global test_images
#     # Initialize the interpreter
#     interpreter = tf.lite.Interpreter(model_path=str(tflite_file))
#     interpreter.allocate_tensors()
#     print(interpreter.get_tensor_details())
#     input_details = interpreter.get_input_details()[0]
#     output_details = interpreter.get_output_details()[0]
#     predictions = np.zeros((len(test_image_indices),), dtype=int)
#     for i, test_image_index in enumerate(test_image_indices):
#         test_image = test_images[test_image_index]
#         test_label = test_labels[test_image_index]
#         # Check if the input type is quantized, then rescale input data to uint8
#         if input_details['dtype'] == np.uint8:
#             input_scale, input_zero_point = input_details["quantization"]
#             test_image = test_image / input_scale + input_zero_point
#         test_image = np.expand_dims(test_image, axis=0).astype(input_details["dtype"])
#         interpreter.set_tensor(input_details["index"], test_image)
#         interpreter.invoke()
#         output = interpreter.get_tensor(output_details["index"])[0]
#         predictions[i] = output.argmax()
#     return predictions

# # Change this to test a different image
# test_image_index = 1

# ## Helper function to test the models on one image
# def test_model(tflite_file, test_image_index, model_type):
#     global test_labels
#     predictions = run_tflite_model(tflite_file, [test_image_index])
#     plt.imshow(test_images[test_image_index])
#     template = model_type + " Model \n True:{true}, Predicted:{predict}"
#     _ = plt.title(template.format(true=str(test_labels[test_image_index]), predict=str(predictions[0])))
#     plt.grid(False)
#     plt.show()

# test_model(tflite_model_file, test_image_index, model_type="Float")
# test_model(tflite_model_quant_file, test_image_index, model_type="Quantized")

'''Try to read intermediate results'''

# Take one image from the dataset and run inference on it
d_iter = iter(image_label_ds)
input_image, _ = d_iter.get_next()

# Helpers that change which tensor the network outputs
def OutputsOffset(subgraph, j):
    # Vtable offset 8 is field 2 of SubGraph, the `outputs` vector of tensor indices
    o = flatbuffers.number_types.UOffsetTFlags.py_type(subgraph._tab.Offset(8))
    if o != 0:
        a = subgraph._tab.Vector(o)  # absolute position of the first vector element
        # Each entry is a 4-byte int32, so entry j starts at a + 4 * j
        return a + flatbuffers.number_types.UOffsetTFlags.py_type(j * 4)
    return 0


def buffer_change_output_tensor_to(model_buffer, new_tensor_i):
    root = schema_fb.Model.GetRootAsModel(model_buffer, 0)
    output_tensor_index_offset = OutputsOffset(root.Subgraphs(0), 0)

    # Flatbuffer scalars are stored in little-endian.
    new_tensor_i_bytes = bytes([
        new_tensor_i & 0x000000FF,
        (new_tensor_i & 0x0000FF00) >> 8,
        (new_tensor_i & 0x00FF0000) >> 16,
        (new_tensor_i & 0xFF000000) >> 24,
    ])
    # Replace the 4 bytes corresponding to the first output tensor index
    return model_buffer[:output_tensor_index_offset] + new_tensor_i_bytes + model_buffer[output_tensor_index_offset + 4:]

# Read the model.
with open('tmp/my_cnn_model_quant_1.tflite', 'rb') as f:
    model_buffer = f.read()

# Change the output tensor index
idx = 0  # in this model, 0 is the input of the first layer and 27 is the output of the first layer
model_buffer = buffer_change_output_tensor_to(model_buffer, idx)

# Run inference
interpreter = tf.lite.Interpreter(model_content=model_buffer)
interpreter.allocate_tensors()
input_details = interpreter.get_input_details()[0]
print(input_details)
output_details = interpreter.get_output_details()[0]

if input_details['dtype'] == np.uint8:
    # Map the [0, 1] float image into the uint8 domain: q = x / scale + zero_point
    input_scale, input_zero_point = input_details["quantization"]
    input_image = input_image / input_scale + input_zero_point
input_image = np.expand_dims(input_image, axis=0).astype(input_details["dtype"])
interpreter.set_tensor(input_details["index"], input_image)
interpreter.invoke()

# Value of the selected intermediate tensor
out_val = interpreter.get_tensor(output_details["index"])
print(out_val.dtype)
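`buffer_change_output_tensor_to` works by overwriting one little-endian int32, the first entry of the subgraph's `outputs` vector. That byte-level step can be checked without TensorFlow. The sketch below (helper names hypothetical) compares the manual mask-and-shift encoding with `struct.pack('<i', ...)`, which gives the same bytes for non-negative indices, and patches a made-up byte string standing in for a model buffer:

```python
import struct


def le_bytes_manual(i: int) -> bytes:
    """Same masking/shifting as buffer_change_output_tensor_to."""
    return bytes([
        i & 0x000000FF,
        (i & 0x0000FF00) >> 8,
        (i & 0x00FF0000) >> 16,
        (i & 0xFF000000) >> 24,
    ])


def patch_int32(buf: bytes, offset: int, value: int) -> bytes:
    """Return a copy of buf with the little-endian int32 at `offset` replaced by `value`."""
    return buf[:offset] + struct.pack("<i", value) + buf[offset + 4:]


# Made-up buffer: 4 filler bytes, an int32 "output index" of 94, 4 more filler bytes.
toy = b"\xAA" * 4 + struct.pack("<i", 94) + b"\xBB" * 4
patched = patch_int32(toy, 4, 27)

print(le_bytes_manual(27) == struct.pack("<i", 27))  # True
print(struct.unpack_from("<i", patched, 4)[0])       # 27
print(len(patched) == len(toy))                      # True
```

Only the 4 target bytes change and the buffer length is preserved, which is why this patch leaves every other flatbuffer offset valid.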

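Note that this is done in separate steps: first run the model quantization and save the model (uncomment the quantization block), then load the saved model and read from it.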
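One way to keep the two steps apart without commenting code in and out is to convert only when the `.tflite` file does not exist yet, and to sanity-check the file before patching it. A minimal sketch, assuming a hypothetical `load_or_build` helper; `build_fn` stands for any zero-argument callable returning the serialized model (in the script, something like the configured converter's `convert` method). The check relies on TFLite's schema declaring the file identifier `TFL3`, which FlatBuffers stores in bytes 4 through 7:

```python
import pathlib
import tempfile

# file_identifier declared in TFLite's schema.fbs; stored right after the 4-byte root offset
TFLITE_FILE_IDENTIFIER = b"TFL3"


def load_or_build(model_file: pathlib.Path, build_fn) -> bytes:
    """Return the bytes of model_file, creating it with build_fn() first if it is missing."""
    if not model_file.exists():
        model_file.parent.mkdir(parents=True, exist_ok=True)
        model_file.write_bytes(build_fn())
    data = model_file.read_bytes()
    if data[4:8] != TFLITE_FILE_IDENTIFIER:
        raise ValueError(f"{model_file} does not look like a TFLite flatbuffer")
    return data


# Demo with a fake converter that records how often it is called.
calls = []


def fake_convert() -> bytes:
    calls.append(1)
    return b"\x1c\x00\x00\x00" + TFLITE_FILE_IDENTIFIER + bytes(8)  # fake header only


with tempfile.TemporaryDirectory() as d:
    path = pathlib.Path(d) / "tmp" / "my_cnn_model_quant_1.tflite"
    first = load_or_build(path, fake_convert)
    second = load_or_build(path, fake_convert)  # reads the cached file, no rebuild

print(first == second, len(calls))  # True 1
```

In the script this would be called as `load_or_build(pathlib.Path('tmp/my_cnn_model_quant_1.tflite'), converter.convert)` once the converter is configured, so the expensive conversion only runs on the first invocation.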

If the network download here does not go smoothly, switching to a mirror source is recommended.

The last `print` reports the dtype of the intermediate values obtained this way.
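In a fully integer-quantized model (as produced with `TFLITE_BUILTINS_INT8`), the intermediate tensor read this way holds integers rather than real activations. Each TFLite tensor carries a `(scale, zero_point)` pair, and the affine mapping is `real = scale * (q - zero_point)`, with `q = round(real / scale) + zero_point` going the other way. A pure-Python sketch of both directions; the scale and zero-point values are illustrative, not taken from the model in this post:

```python
def quantize(x: float, scale: float, zero_point: int, qmin: int = 0, qmax: int = 255) -> int:
    """Affine-quantize a real value and clamp to the integer range (uint8 by default)."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value represented by integer q."""
    return scale * (q - zero_point)


scale, zero_point = 0.5, 10  # illustrative values only
q = quantize(3.0, scale, zero_point)
print(q)                                              # 16
print(dequantize(q, scale, zero_point))               # 3.0
print(quantize(-100.0, 0.5, 0, qmin=-128, qmax=127))  # -128 (clamped to the int8 range)
```

With NumPy the same formula applies elementwise, e.g. `scale * (out_val.astype(np.float32) - zero_point)` with `scale, zero_point = output_details["quantization"]`; casting first avoids wrap-around when subtracting from a `uint8` array.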

3. Inspecting the weights

1. Get the quantized model. In this post it is saved under the tmp/ directory.

2. Install Netron by entering the following in a terminal:

pip install netron

Then start Netron:

netron

Open the local address that Netron prints in a browser and select our model.

3. View the weights and tensor indices (idx)

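Select a layer to view its filter (weights), then expand its details: `location` is the tensor's index, i.e. the value to use as `idx` above.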
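The same indices can also be looked up in code: `tf.lite.Interpreter.get_tensor_details()` returns one dict per tensor with (among others) `'index'` and `'name'` keys. A sketch of a name-based lookup, where `find_tensor_indices` is a hypothetical helper and the sample names and indices are made up (the real names depend on how the converter named the VGG16 layers):

```python
def find_tensor_indices(tensor_details, substring):
    """Return sorted (index, name) pairs for tensors whose name contains `substring`.

    tensor_details is expected to be a list of dicts with 'index' and 'name'
    keys, as returned by tf.lite.Interpreter.get_tensor_details().
    """
    return sorted(
        (d["index"], d["name"])
        for d in tensor_details
        if substring in d["name"]
    )


# Stand-in for interpreter.get_tensor_details(); names and indices are made up.
sample_details = [
    {"index": 0, "name": "input_1"},
    {"index": 12, "name": "model/block1_conv1/Relu"},
    {"index": 15, "name": "model/block1_conv2/Relu"},
]
print(find_tensor_indices(sample_details, "block1_conv1"))  # [(12, 'model/block1_conv1/Relu')]

# With the real model:
#   interpreter = tf.lite.Interpreter(model_path="tmp/my_cnn_model_quant_1.tflite")
#   print(find_tensor_indices(interpreter.get_tensor_details(), "block1_conv1"))
```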