Abstract

In this paper we introduce the Boundary Attack, a decision-based attack that starts from a large adversarial perturbation and then seeks to reduce the perturbation while staying adversarial. The attack is conceptually simple, requires close to no hyperparameter tuning, does not rely on substitute models and is competitive with the best gradient-based attacks in standard computer vision tasks like ImageNet. We apply the attack to two black-box algorithms from Clarifai.com. The Boundary Attack in particular and the class of decision-based attacks in general open new avenues to study the robustness of machine learning models and raise new questions regarding the safety of deployed machine learning systems. An implementation of the attack is available as part of Foolbox (https://github.com/bethgelab/foolbox).

1 Introduction

Adversarial perturbations have attracted a lot of attention for two reasons. On the one hand, they are worrisome for the integrity and security of deployed machine learning algorithms such as autonomous cars or face recognition systems. Minimal perturbations of street signs (e.g. turning a stop sign into a 200 km/h speed limit sign) could have dire consequences. On the other hand, adversarial perturbations provide an exciting spotlight on the gap between the sensory information processing in humans and machines and thus provide guidance towards more robust, human-like architectures.

This paper focuses on a category of black-box attacks that has so far received very little attention:

- Decision-based attacks. Direct attacks that solely rely on the final decision of the model (such as the top-1 class label or the transcribed sentence).

The delineation of this category is justified for the following reasons. First, compared to score-based attacks, decision-based attacks are much more relevant in real-world machine learning applications, where confidence scores or logits are rarely accessible. At the same time, decision-based attacks have the potential to be much more robust to standard defences like gradient masking, intrinsic stochasticity or robust training than attacks from the other categories. Finally, compared to transfer-based attacks, they need much less information about the model (neither architecture nor training data) and are much simpler to apply.

There currently exists no effective decision-based attack that scales to natural datasets such as ImageNet and is applicable to deep neural networks (DNNs).

Throughout the paper we focus on the threat scenario in which the adversary aims to change the decision of a model (either targeted or untargeted) for a particular input sample by inducing a minimal perturbation to the sample. The adversary can observe the final decision of the model for arbitrary inputs and it knows at least one perturbation, however large, for which the perturbed sample is adversarial.

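Our contributions are as follows:

- We emphasise that decision-based attacks are an important category of adversarial attacks because they are highly relevant for real-world applications and important to gauge model robustness.

- We introduce the first effective decision-based attack that scales to complex machine learning models and natural datasets. The Boundary Attack (1) is conceptually simple, (2) is extremely flexible, (3) requires little hyperparameter tuning and (4) is competitive with the best gradient-based attacks in both targeted and untargeted computer vision scenarios.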

- We show that the Boundary Attack is able to break previously suggested defence mechanisms like defensive distillation.

- We demonstrate the practical applicability of the Boundary Attack on two black-box machine learning models for brand and celebrity recognition available on Clarifai.com.

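Notation used throughout the paper:

- $o$ denotes the original input (e.g. an image)

- $y = F(o)$ denotes the full output of the model $F(\cdot)$ (e.g. logits or probabilities)

- $y_{\max}$ denotes the predicted label (e.g. the class label)

- $\tilde{o}$ denotes the adversarially perturbed image, and $\tilde{o}^k$ the perturbed image at the $k$-th step of the attack algorithm

Vectors are denoted in bold.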

2 Boundary Attack

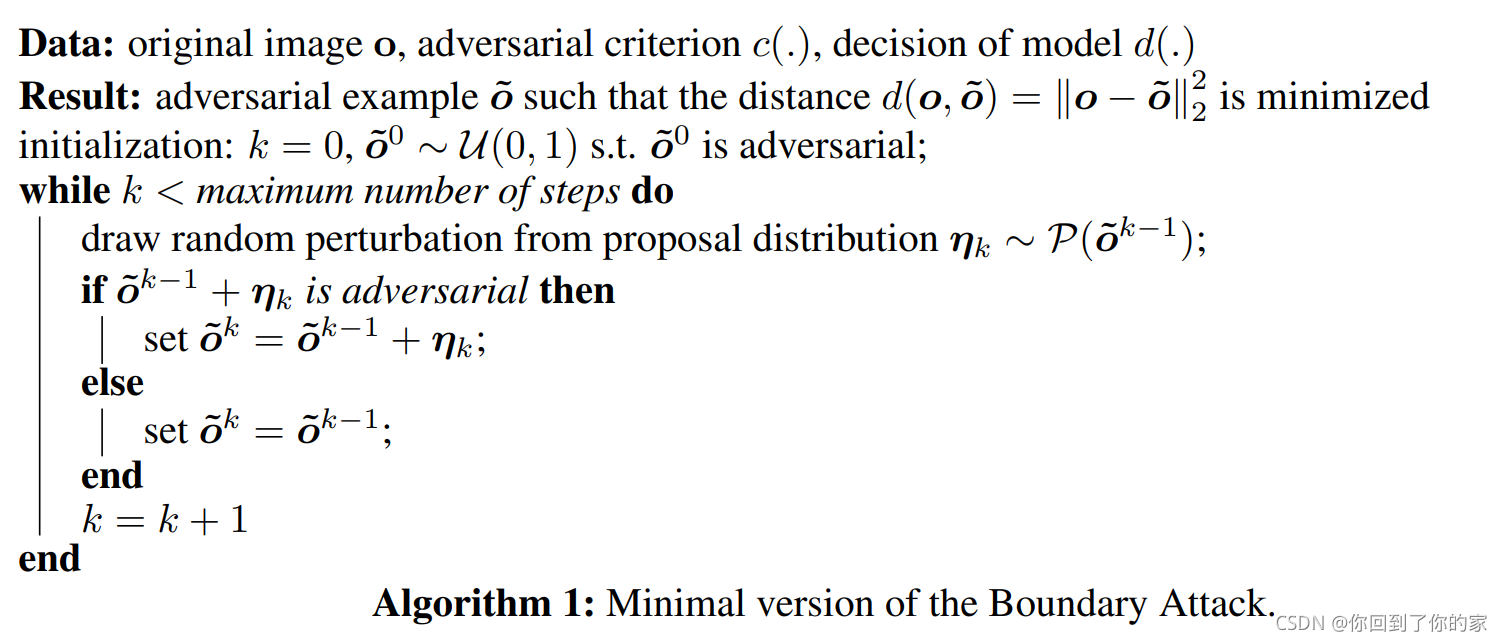

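The Boundary Attack algorithm is illustrated in Figure 2:

The algorithm is initialized from a point that is already adversarial and then performs a random walk along the boundary between the adversarial and the non-adversarial region such that (1) it stays in the adversarial region and (2) the distance towards the original image is reduced.

In other words, we perform rejection sampling with a suitable proposal distribution $\mathcal{P}$ to find progressively smaller adversarial perturbations according to a given adversarial criterion $c(\cdot)$. The basic logic of the algorithm is described in Algorithm 1: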
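The rejection-sampling logic described above can be sketched as a short loop. This is a minimal skeleton under stated assumptions, not the Foolbox implementation: `is_adversarial` stands in for the criterion $c(\cdot)$ evaluated through the model's decision, `propose` stands in for a draw from $\mathcal{P}$ added to the current point, and both names are hypothetical.

```python
import numpy as np
from typing import Callable


def boundary_attack_loop(
    initial_adversarial: np.ndarray,
    is_adversarial: Callable[[np.ndarray], bool],  # hypothetical: criterion c(.) via the model decision
    propose: Callable[[np.ndarray], np.ndarray],   # hypothetical: returns õ^{k-1} + eta^k
    max_steps: int = 1000,
) -> np.ndarray:
    """Rejection sampling: keep a proposal only if it is still adversarial."""
    current = np.asarray(initial_adversarial, dtype=float)
    if not is_adversarial(current):
        raise ValueError("the starting point must already be adversarial")
    for _ in range(max_steps):
        candidate = propose(current)
        if is_adversarial(candidate):
            current = candidate  # accept: õ^k = õ^{k-1} + eta^k
        # otherwise reject and keep õ^k = õ^{k-1}
    return current
```

Progress towards the original input comes entirely from the proposal distribution; the loop itself only enforces that every accepted point stays adversarial.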

2.1 Initialization

The Boundary Attack needs to be initialized with a sample that is already adversarial. In an untargeted scenario we simply sample from a maximum entropy distribution given the valid domain of the input. In the computer vision applications below, where the input is constrained to a range of $[0, 255]$ per pixel, we sample each pixel in the initial image $\tilde{o}^0$ from a uniform distribution $\mathcal{U}(0, 255)$. We reject samples that are not adversarial. In a targeted scenario we start from any sample that is classified by the model as being from the target class.
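The untargeted initialization can be sketched as uniform sampling with rejection. A minimal sketch, assuming a hypothetical `is_adversarial` predicate and a hypothetical cap `max_tries` on the number of draws (the text does not say what happens if no adversarial sample is found). In the targeted case one would instead pass in any image the model already assigns to the target class.

```python
import numpy as np
from typing import Callable, Tuple


def uniform_initialization(
    shape: Tuple[int, ...],
    is_adversarial: Callable[[np.ndarray], bool],  # hypothetical criterion predicate
    rng: np.random.Generator,
    low: float = 0.0,
    high: float = 255.0,
    max_tries: int = 1000,  # hypothetical cap on rejected draws
) -> np.ndarray:
    """Draw each pixel from U(low, high) until the sample is adversarial."""
    for _ in range(max_tries):
        candidate = rng.uniform(low, high, size=shape)
        if is_adversarial(candidate):
            return candidate  # this is õ^0
    raise RuntimeError("no adversarial starting point found")
```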

2.2 Proposal distribution

The efficiency of the algorithm depends critically on the proposal distribution $\mathcal{P}$, i.e. on which random directions are explored in each step of the algorithm. The optimal proposal distribution will generally depend on the domain and/or model to be attacked, but for all vision-related problems tested here a very simple proposal distribution worked surprisingly well. The basic idea behind this proposal distribution is as follows: in the $k$-th step we want to draw perturbations $\eta^k$ from a maximum entropy distribution subject to the following constraints:

- The perturbed sample lies within the input domain:

  $$\tilde{o}^{k-1}_i + \eta^k_i \in [0, 255] \qquad (1)$$

- The perturbation has a relative size of $\delta$:

  $$\|\eta^k\|_2 = \delta \cdot d(o, \tilde{o}^{k-1}) \qquad (2)$$

- The perturbation reduces the distance of the perturbed image towards the original input by a relative amount $\epsilon$:

  $$d(o, \tilde{o}^{k-1}) - d(o, \tilde{o}^{k-1} + \eta^k) = \epsilon \cdot d(o, \tilde{o}^{k-1}) \qquad (3)$$
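To make constraints (1) to (3) concrete, the following hypothetical helper measures how far a given perturbation $\eta^k$ is from satisfying each of them. It assumes $d$ is the Euclidean distance (the text does not fix $d$ at this point) and the $[0, 255]$ pixel range; a residual of zero means the equality constraint holds exactly.

```python
import numpy as np
from typing import Tuple


def constraint_residuals(
    original: np.ndarray,  # o
    previous: np.ndarray,  # õ^{k-1}
    eta: np.ndarray,       # eta^k
    delta: float,
    epsilon: float,
    low: float = 0.0,
    high: float = 255.0,
) -> Tuple[bool, float, float]:
    """Check constraints (1)-(3) for a proposed perturbation, with d = L2 distance (assumption)."""
    d_prev = np.linalg.norm(original - previous)
    new = previous + eta
    in_domain = bool(np.all((new >= low) & (new <= high)))     # constraint (1)
    size_residual = np.linalg.norm(eta) - delta * d_prev        # constraint (2)
    d_new = np.linalg.norm(original - new)
    progress_residual = (d_prev - d_new) - epsilon * d_prev     # constraint (3)
    return in_domain, float(size_residual), float(progress_residual)
```

For example, with $o = (0, 0)$ and $\tilde{o}^{k-1} = (3, 4)$ the current distance is 5; the step $\eta^k = (-0.6, -0.8)$ has length 1 and lands at distance 4, so it satisfies (2) with $\delta = 0.2$ and (3) with $\epsilon = 0.2$. Such a step pointing straight at $o$ is only an illustration; the proposal distribution in the text also moves along the boundary.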

In practice it is difficult to sample from this distribution, and so we resort to a simpler heuristic:

- First, we sample from an i.i.d. Gaussian distribution $\eta^k_i \sim \mathcal{N}(0, 1)$ and then rescale and clip the sample such that (1) and (2) hold.

- In a second step, we project $\eta^k$ onto a sphere around the original image $o$ such that $d(o, \tilde{o}^{k-1} + \eta^k) = d(o, \tilde{o}^{k-1})$ and (1) hold. We denote this as the orthogonal perturbation and use it later for hyperparameter tuning.

- In the last step we make a small movement towards the original image such that (1) and (3) hold. For high-dimensional inputs and small $\delta, \epsilon$ the constraint (2) will also hold approximately.
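The three steps of this heuristic can be sketched in NumPy as follows. This is an illustrative sketch under stated assumptions, not the Foolbox code: $d$ is taken to be the Euclidean distance, `sample_proposal` is a hypothetical name, and clipping in step 2 can move the orthogonal point slightly off the sphere, in which case (3) holds only approximately.

```python
import numpy as np
from typing import Tuple


def sample_proposal(
    original: np.ndarray,   # o
    previous: np.ndarray,   # õ^{k-1}, assumed != o
    delta: float,
    epsilon: float,
    rng: np.random.Generator,
    low: float = 0.0,
    high: float = 255.0,
) -> Tuple[np.ndarray, np.ndarray]:
    """Return (candidate, orthogonal point) following the three-step heuristic."""
    r = np.linalg.norm(previous - original)  # d(o, õ^{k-1}) with d = L2 (assumption)

    # Step 1: i.i.d. Gaussian draw, rescaled to length delta * r (2), clipped to the domain (1).
    eta = rng.standard_normal(previous.shape)
    eta *= delta * r / np.linalg.norm(eta)
    eta = np.clip(previous + eta, low, high) - previous

    # Step 2: project onto the sphere of radius r around o (the "orthogonal perturbation").
    direction = previous + eta - original
    orthogonal = original + r * direction / np.linalg.norm(direction)
    orthogonal = np.clip(orthogonal, low, high)  # enforce (1); may leave the sphere slightly

    # Step 3: move a distance epsilon * r towards o, so the new distance is (1 - epsilon) * r.
    towards = original - orthogonal
    candidate = orthogonal + epsilon * r * towards / np.linalg.norm(towards)
    # Convex combination of two in-domain points, so clipping is only a safeguard.
    return np.clip(candidate, low, high), orthogonal
```

Returning the orthogonal point as well lets the caller test it separately, which is what the hyperparameter adjustment relies on.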

2.3 Adversarial criterion

A typical criterion for an input to be adversarial is misclassification, i.e. whether the model assigns the perturbed input to a class different from the class label of the original input. Another common choice is targeted misclassification, for which the perturbed input has to be classified in a given target class. Other choices include top-k misclassification (the top-k classes predicted for the perturbed input do not contain the original class label) or thresholds on certain confidence scores. Outside of computer vision many other choices exist, such as criteria on word error rates. In comparison to most other attacks, the Boundary Attack is extremely flexible with regard to the adversarial criterion. It allows basically any criterion (including non-differentiable ones) as long as an initial adversarial can be found for that criterion (which is trivial in most cases).
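Because the attack only ever queries the criterion, each of these choices can be written as a boolean predicate on the perturbed input. A minimal sketch, assuming a hypothetical `model` callable that returns a vector of class scores; the function names are hypothetical as well. Note that the first two criteria need only the top-1 decision, whereas top-k needs the ranked class list and the threshold criterion needs a confidence score.

```python
import numpy as np
from typing import Callable

Model = Callable[[np.ndarray], np.ndarray]  # hypothetical: input -> class scores
Criterion = Callable[[np.ndarray], bool]


def misclassification(model: Model, original_label: int) -> Criterion:
    return lambda x: int(np.argmax(model(x))) != original_label


def targeted_misclassification(model: Model, target_label: int) -> Criterion:
    return lambda x: int(np.argmax(model(x))) == target_label


def top_k_misclassification(model: Model, original_label: int, k: int) -> Criterion:
    def criterion(x: np.ndarray) -> bool:
        top_k = np.argsort(model(x))[::-1][:k].tolist()  # k highest-scoring classes
        return original_label not in top_k
    return criterion


def confidence_threshold(model: Model, target_label: int, threshold: float) -> Criterion:
    return lambda x: float(model(x)[target_label]) > threshold
```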

2.4 Hyperparameter adjustment

The Boundary Attack has only two relevant parameters: the length of the total perturbation $\delta$ and the length of the step $\epsilon$ towards the original input (see Figure 2). We adjust both parameters dynamically according to the local geometry of the boundary. The adjustment is inspired by Trust Region methods. In essence, we first test whether the orthogonal perturbation is still adversarial. If this is true, then we make a small movement towards the original input and test again. The orthogonal step tests whether the step size is small enough that we can treat the decision boundary between the adversarial and the non-adversarial region as approximately linear. If this is the case, then we expect around 50% of the orthogonal perturbations to still be adversarial. If this ratio is much lower, we reduce the step size $\delta$; if it is close to 50% or higher, we increase it.

If the orthogonal perturbation is still adversarial, we add a small step towards the original input. The maximum size of this step depends on the angle of the decision boundary in the local neighbourhood (see also Figure 2). If the success rate is too small we decrease $\epsilon$; if it is too large we increase it. Typically, the closer we get to the original image, the flatter the decision boundary becomes and the smaller $\epsilon$ has to be to still make progress. The attack has converged whenever $\epsilon$ converges to zero.
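The adjustment rule can be sketched as a trust-region-style multiplicative update. This is a minimal sketch: the factor, the success-rate thresholds and the convergence tolerance are hypothetical values chosen for illustration, not values given in the text, and the two success rates are assumed to be measured over a recent window of proposals.

```python
from typing import Tuple


def adapt_step_sizes(
    delta: float,
    epsilon: float,
    orthogonal_success_rate: float,  # fraction of recent orthogonal perturbations still adversarial
    step_success_rate: float,        # fraction of recent steps towards o still adversarial
    factor: float = 1.5,             # hypothetical multiplicative update
    orth_low: float = 0.2,           # hypothetical: "much lower" than 50%
    orth_high: float = 0.4,          # hypothetical: "close to 50% or higher"
    step_low: float = 0.2,           # hypothetical: step success rate "too small"
    step_high: float = 0.5,          # hypothetical: step success rate "too large"
) -> Tuple[float, float]:
    """Return updated (delta, epsilon) from the observed success rates."""
    if orthogonal_success_rate < orth_low:
        delta /= factor    # boundary not locally linear at this scale: shrink delta
    elif orthogonal_success_rate >= orth_high:
        delta *= factor    # boundary looks linear: explore with larger steps
    if step_success_rate < step_low:
        epsilon /= factor  # steps towards o fail too often: shrink epsilon
    elif step_success_rate > step_high:
        epsilon *= factor  # steps towards o succeed easily: grow epsilon
    return delta, epsilon


def has_converged(epsilon: float, tol: float = 1e-8) -> bool:
    """Treat the attack as converged once epsilon has (numerically) reached zero."""
    return epsilon < tol
```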