
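Analysis goals:

- Using the preprocessed housing data, identify the feature variables that have a significant effect on price.

- With those features chosen, build a Shenzhen house price prediction model and simulate a hypothetical scenario.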


Data preprocessing

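import os

import pandas as pd

# Raw string so the backslashes in the Windows path are not read as escapes
file_path = r"D:\Python数据分析与挖掘实战\深圳二手房价分析\data"

# List every file in the file_path directory
file_name = os.listdir(file_path)

# Read each Excel file and collect the frames in a list
lis = []
for i in file_name:
    file = pd.read_excel(os.path.join(file_path, i))
    lis.append(file)

# DataFrame.append was removed in pandas 2.0, so concatenate the list instead
df = pd.concat(lis)

# Rename the first column
df = df.rename(columns={'Unnamed: 0': 'house_id'})

# Show summary statistics
print(df.describe())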

out:

house_id roomnum ... subway per_price

count 1.851400e+04 18514.000000 ... 18514.000000 18514.000000

mean 2.280900e+08 2.873339 ... 0.504159 6.118192

std 3.031648e+06 1.040839 ... 0.499996 3.050218

min 4.217338e+06 1.000000 ... 0.000000 1.010100

25% 2.276957e+08 2.000000 ... 0.000000 4.052600

50% 2.284619e+08 3.000000 ... 1.000000 5.246300

75% 2.288307e+08 3.000000 ... 1.000000 7.357400

max 2.289965e+08 9.000000 ... 1.000000 26.396800

[8 rows x 8 columns]

# Inspect the data info to check for missing values. There are 10 fields in total;
# house_id carries no useful information, so we drop it.
df = df.drop(columns='house_id')

print(df.info())

out:

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 district 18514 non-null object

1 roomnum 18514 non-null int64

2 hall 18514 non-null int64

3 AREA 18514 non-null float64

4 C_floor 18514 non-null object

5 floor_num 18514 non-null int64

6 school 18514 non-null int64

7 subway 18514 non-null int64

8 per_price 18514 non-null float64

dtypes: float64(2), int64(5), object(2)

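# The data only contains the price per square metre. Add a total_price field
# (area times price per square metre) to give one more dimension for analysis.
df['total_price'] = df['AREA'] * df['per_price']

print(df['total_price'])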

out:

0 632.002890

1 879.995700

2 110.000800

3 93.990400

4 395.998200

...

1487 116.000040

1488 119.999383

1489 145.001298

1490 128.999772

1491 80.999928

Name: total_price, Length: 18514, dtype: float64

# Check for duplicate rows

print(df.duplicated().sum())

out:

0
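The `info()` output above reports 18514 non-null values in every column, so nothing is missing in this dataset. As a minimal sketch on a made-up toy frame (the values below are hypothetical and not taken from the dataset), the missing-value and duplicate checks can also be written as explicit counts:

```python
import numpy as np
import pandas as pd

# Hypothetical toy data: column names mirror the dataset, values are invented
toy = pd.DataFrame({
    "district": ["futian", "nanshan", "nanshan", "luohu"],
    "AREA": [80.0, 95.5, 95.5, np.nan],
    "per_price": [7.2, 9.1, 9.1, 5.0],
})

# Number of missing values in each column
missing_per_column = toy.isna().sum()

# Number of rows that exactly repeat an earlier row
n_duplicates = int(toy.duplicated().sum())

print(missing_per_column)
print(n_duplicates)
```

If either count were non-zero on the real data, `dropna()` or `drop_duplicates()` would be the usual next step.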

# Map the pinyin district codes to their Chinese names
area_map = {'baoan': '宝安', 'dapengxinqu': '大鹏新区', 'futian': '福田', 'guangming': '光明',
            'longhua': '龙华', 'luohu': '罗湖', 'nanshan': '南山', 'pingshan': '坪山',
            'yantian': '盐田', 'longgang': '龙岗'}

df['district'] = df['district'].apply(lambda x: area_map[x])

Feature variable analysis

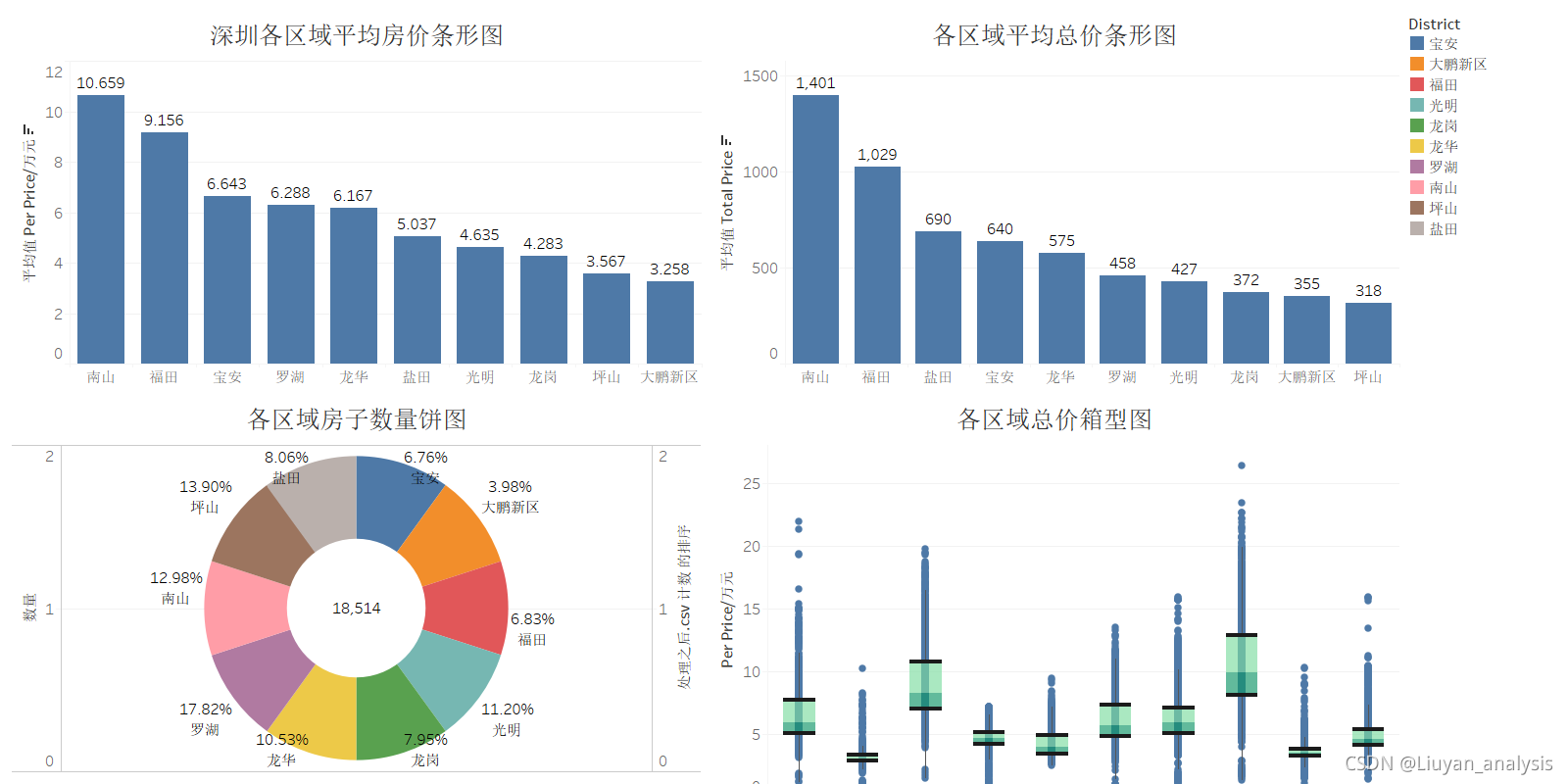

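1. district feature analysis

The figure above shows:

- Nanshan has the highest average house price and Dapeng New District the lowest.

- Nanshan also has the highest average total price, while Pingshan has the lowest.

- There are 18,514 second-hand listings in total; Luohu has the most, close to 18%.

- In the box plot the centre of the box clearly shifts from district to district, which indicates that price is related to district.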
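The per-district figures read off the chart can be computed with a single `groupby`. The snippet below is a sketch on invented toy data (the column names follow the dataset, the numbers do not), not the code that produced the figure:

```python
import pandas as pd

# Hypothetical toy listings: values are invented for illustration
toy = pd.DataFrame({
    "district": ["南山", "南山", "罗湖", "罗湖", "罗湖", "坪山"],
    "per_price": [10.0, 12.0, 6.0, 7.0, 5.0, 3.0],
    "total_price": [900.0, 1100.0, 400.0, 500.0, 300.0, 250.0],
})

# Mean unit price, mean total price and listing count per district
summary = toy.groupby("district").agg(
    mean_per_price=("per_price", "mean"),
    mean_total_price=("total_price", "mean"),
    listings=("per_price", "size"),
)

# Share of all listings that fall in each district
summary["share"] = summary["listings"] / len(toy)

# Most expensive district first
summary = summary.sort_values("mean_per_price", ascending=False)
print(summary)
```

Replacing `"district"` with `"roomnum"`, `"hall"`, `"school"` or `"subway"` would give the same kind of summary for the other categorical features discussed in this section.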

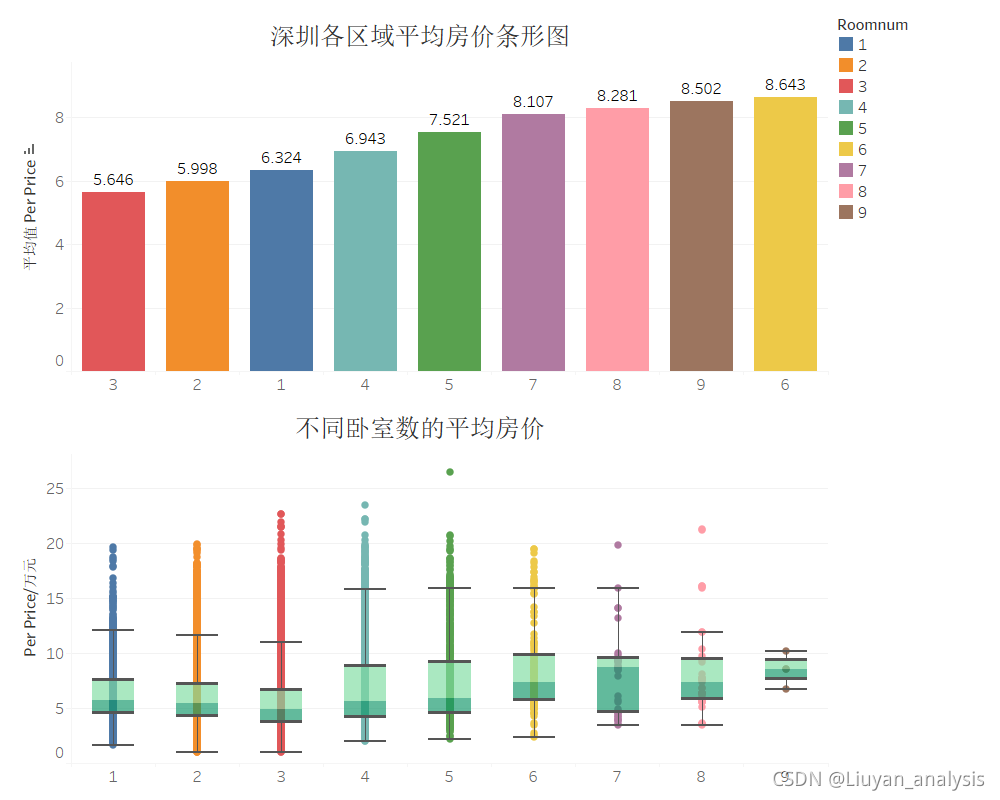

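2. roomnum feature analysis

The figure above shows:

- Homes with 6 rooms have the highest average unit price.

- The number of bedrooms has no obvious effect on the average unit price.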

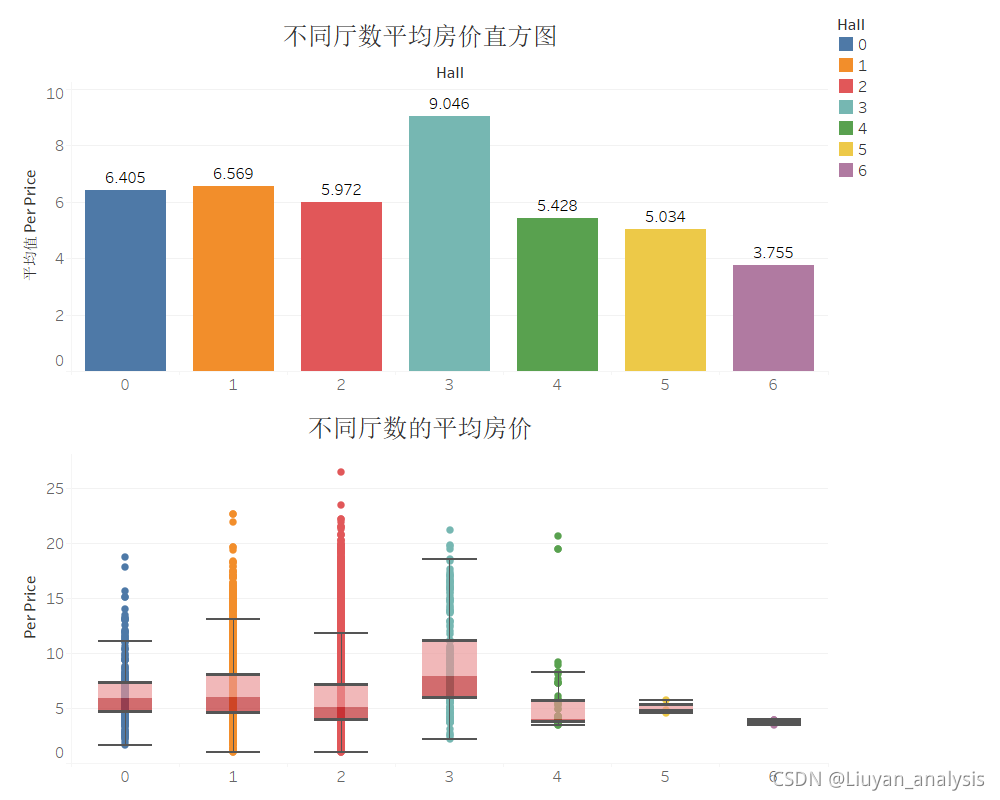

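3. hall feature analysis

The figure above shows:

- Homes with 3 halls have the highest average unit price.

- The number of halls has some effect on the average unit price.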

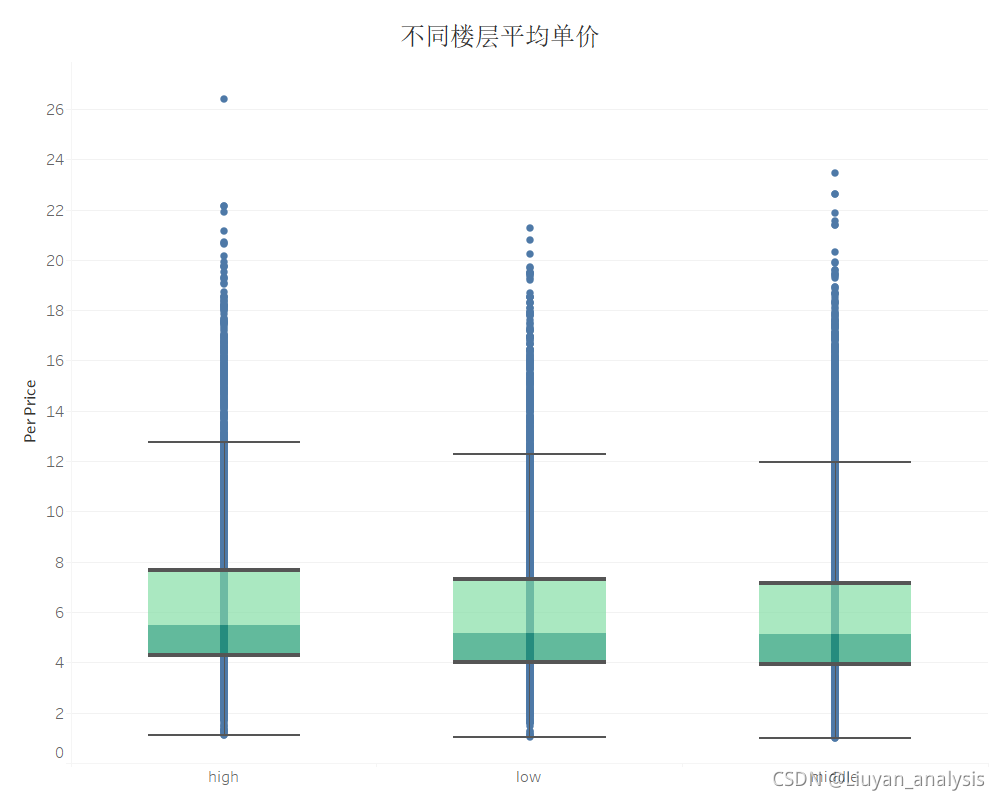

4. C_floor feature analysis

The figure above shows:

1. The floor level has little effect on the average price.

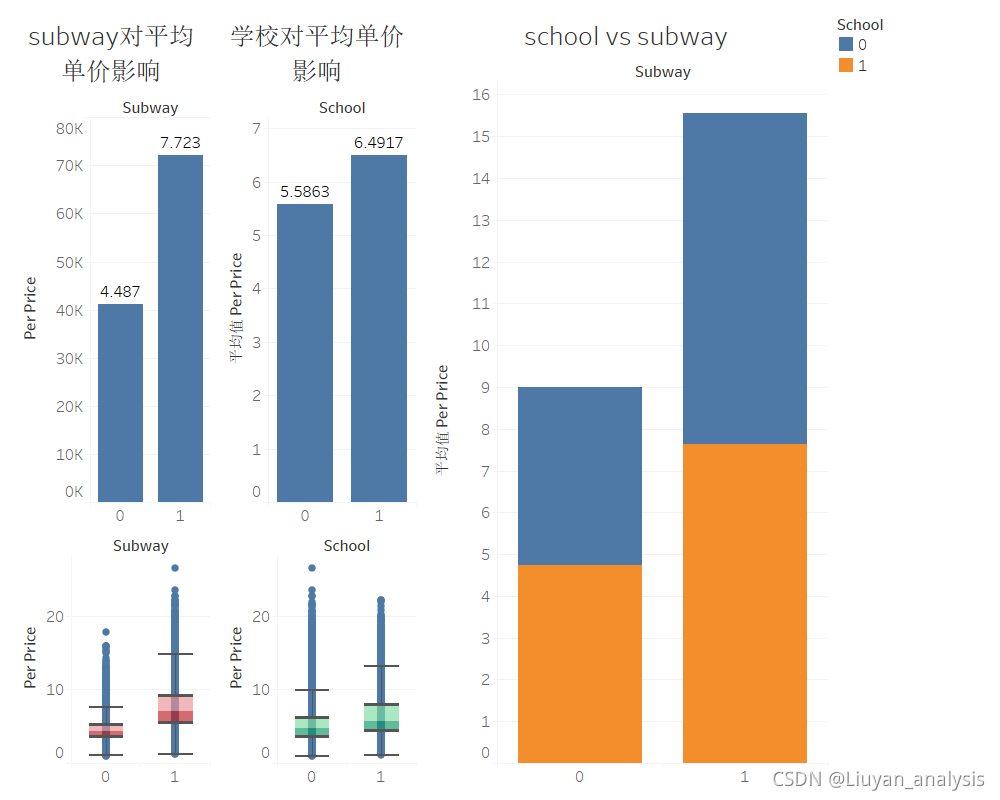

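5. school and subway feature analysis

The figure above shows:

1. Second-hand homes near a subway station have a clearly higher average price than those that are not.

2. Schools affect the price less than subway stations do.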

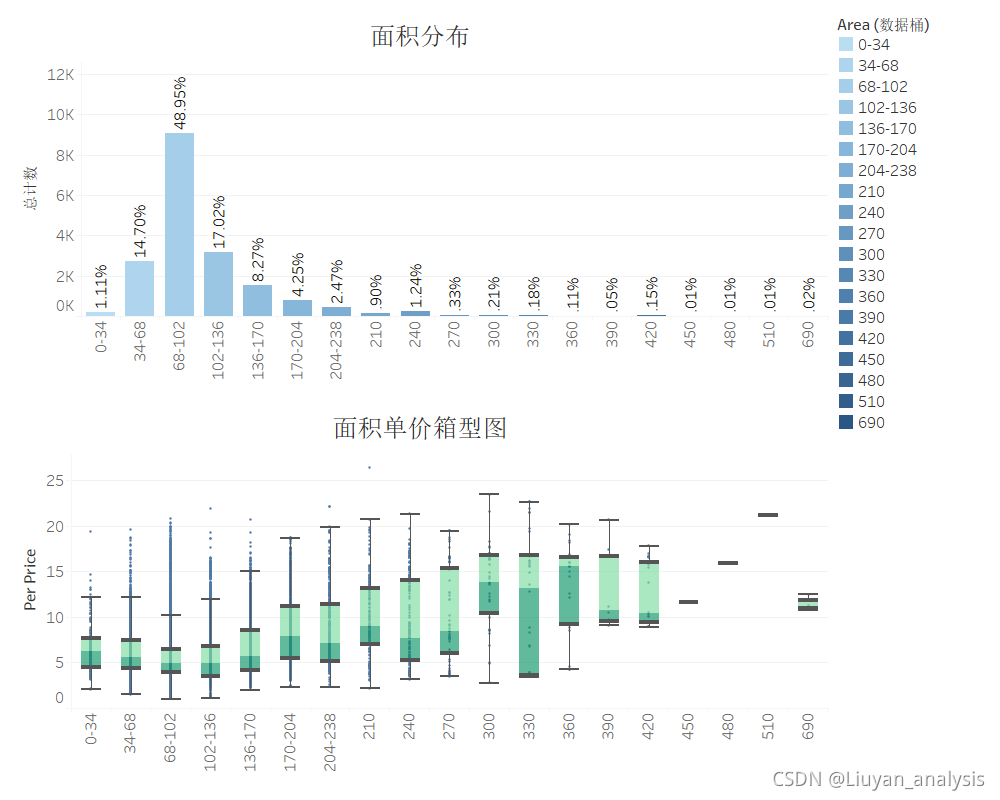

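6. AREA feature analysis

import matplotlib.pyplot as plt

# Scatter plot of unit price against floor area
plt.scatter(df.AREA, df.per_price, marker='x', color='b', alpha=0.5)
plt.title('Scatter plot of AREA against per_price')
plt.ylabel("Price per unit area")
plt.xlabel("Area (square metres)")
plt.show()

The figure above shows:

1. Small units of 68-102 square metres dominate, making up almost half of all listings.

2. The unit price fluctuates noticeably as the area changes, so area has some effect on unit price.

3. Small units are clearly more popular than large ones.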
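The share of listings in a given size band can be checked by binning the area column. This is a small sketch with invented areas; the bin edges are an assumption chosen only to isolate the 68-102 band mentioned above:

```python
import pandas as pd

# Hypothetical areas in square metres, invented for illustration
areas = pd.Series([45, 70, 75, 88, 90, 101, 120, 150], name="AREA")

# Assumed bin edges: (0, 68], (68, 102], (102, inf)
bins = [0, 68, 102, float("inf")]
labels = ["<=68", "68-102", ">102"]
band = pd.cut(areas, bins=bins, labels=labels)

# Fraction of listings in each band, in bin order
share = band.value_counts(normalize=True).sort_index()
print(share)
```

On the real data, `pd.cut(df['AREA'], ...)` with the same edges would show whether the 68-102 band really accounts for close to half of the listings.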

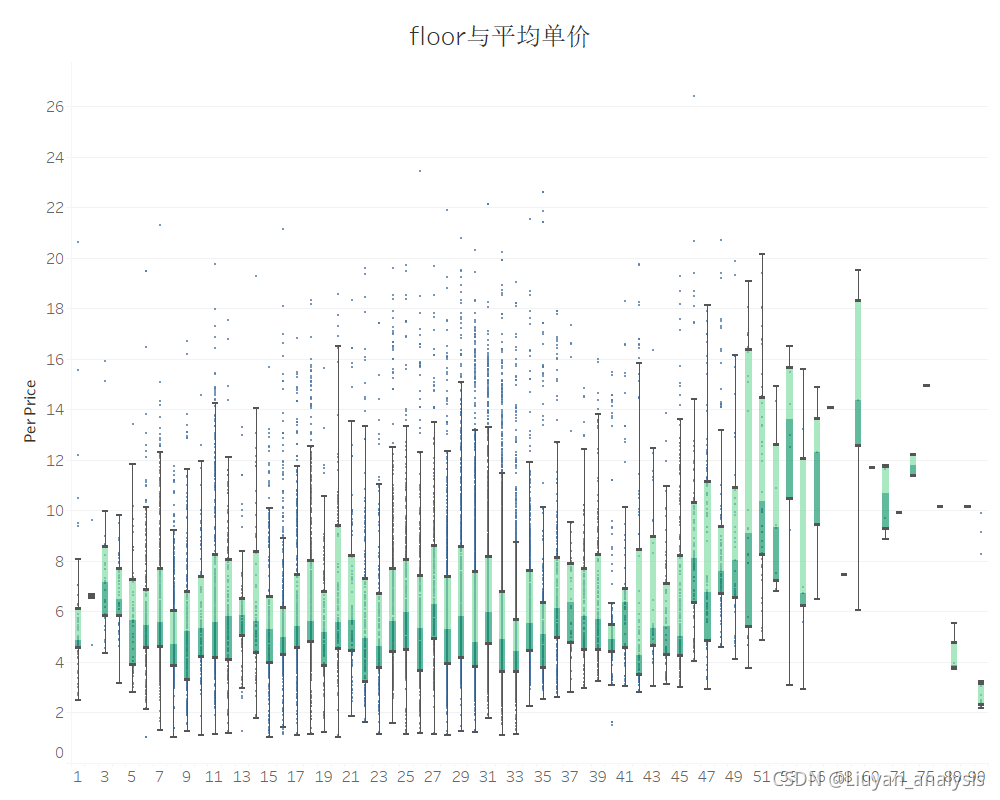

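7. floor_num feature analysis

The figure above shows:

1. The average unit price fluctuates considerably as the number of floors changes, so the floor count affects the unit price.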


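Predicting house prices with machine learning

The analysis above suggests that seven features affect the price: district, number of rooms, school, number of floors, proximity to a subway station, area, and number of halls. These features are used as the inputs of the machine learning models, which output a predicted price after being fit to the training data.

First, one-hot encoding turns the categorical variables (district, number of rooms, number of halls) into numeric columns. school and subway are already encoded as 0/1 and need no conversion, and the continuous variables are already numeric.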
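To make the encoding step concrete, here is a toy illustration of what one-hot encoding does to a categorical column. The frame is invented, and `dtype=int` is used only so the dummy columns print as 0/1:

```python
import pandas as pd

# Hypothetical toy rows, invented for illustration
toy = pd.DataFrame({
    "district": ["南山", "福田", "南山"],
    "roomnum": [3, 2, 3],
    "school": [1, 0, 1],
})

# Only the listed columns are encoded; school passes through unchanged
encoded = pd.get_dummies(toy, columns=["district", "roomnum"], dtype=int)
print(encoded)
```

Each distinct value becomes its own indicator column named `<column>_<value>`, which is the naming scheme that the `roomnum_3` and `district_南山` columns used later in this post follow.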

import matplotlib.pyplot as plt
import numpy as np
import xgboost as xgb
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# school and subway are already 0/1, so they need no encoding
Roomnum = pd.get_dummies(df['roomnum'], prefix='roomnum')
District = pd.get_dummies(df['district'], prefix='district')
Hall = pd.get_dummies(df['hall'], prefix='hall')

# Put the dummy columns first, then drop the original categorical columns,
# C_floor, and total_price (which is derived from the target)
data_new = pd.concat([Roomnum, District, Hall, df], axis=1)
data_new = data_new.drop(columns=['district', 'hall', 'roomnum', 'C_floor', 'total_price'])

# Separate the features from the label
x = data_new.loc[:, data_new.columns != "per_price"]
fea_imp = list(x.columns)
y = data_new.loc[:, 'per_price']

# Split the data: a random 30% becomes the test set, the rest the training set
from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=10, test_size=0.3)
# print(x_train.shape, x_test.shape, y_train.shape, y_test.shape)

# reshape(-1, 1) means any number of rows and one column
y_train = y_train.values.reshape(-1, 1)
y_test = y_test.values.reshape(-1, 1)

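# Standardize the data
ss_x = StandardScaler()
ss_y = StandardScaler()

# fit_transform combines fit and transform: it learns the scaling parameters and applies them.
# Both transform() and fit_transform() apply a uniform transformation to the data
# (for example standardizing to zero mean and unit variance, or scaling to a fixed range);
# transform() reuses parameters already learned, so the test set is scaled with the
# statistics of the training set.
x_train = ss_x.fit_transform(x_train)
x_test = ss_x.transform(x_test)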

mean_y = np.mean(y_train)

s_y = np.var(y_train)

y_train = ss_y.fit_transform(y_train)

y_test = ss_y.transform(y_test)
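Because the target is standardized here, every prediction comes out in standardized units and has to be mapped back with y = z * std + mean. The sketch below uses invented numbers to show that the inverse needs the standard deviation; a variance such as the `np.var` result stored in `s_y` has to be square-rooted first:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Invented target values, one column as StandardScaler expects
y = np.array([[2.0], [4.0], [6.0], [8.0]])

scaler = StandardScaler()
z = scaler.fit_transform(y)

mean = y.mean()
std = y.std()  # population standard deviation (ddof=0), as StandardScaler uses
var = y.var()

by_hand = z * std + mean                  # correct inverse
by_scaler = scaler.inverse_transform(z)   # same result via the fitted scaler
wrong = z * var + mean                    # scaling by the variance does not recover y

print(by_hand.ravel(), by_scaler.ravel(), wrong.ravel())
```

Calling `inverse_transform` on the fitted scaler is usually the safer choice, since it cannot drift out of sync with the statistics used for the forward transform.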

# Linear regression
lr = LinearRegression()

# Support vector regression
svr = SVR(kernel="rbf")

# Parameters for XGBoost's native training API; the number of boosting rounds
# is set by num_round below (xgb.train does not read n_estimators)
param = {'max_depth': 3,
         'learning_rate': 0.1,
         'objective': 'reg:squarederror',  # replaces the deprecated 'reg:linear'
         'booster': 'gbtree',
         'gamma': 0,
         'min_child_weight': 1,
         'subsample': 1,
         'colsample_bytree': 1,
         'reg_alpha': 0,
         'reg_lambda': 1,
         'random_state': 2}

dtrain = xgb.DMatrix(x_train, label=y_train, feature_names=list(fea_imp))
dtest = xgb.DMatrix(x_test, label=y_test, feature_names=list(fea_imp))
num_round = 100
watchlist = [(dtrain, 'train'), (dtest, 'test')]

# Fit on the training split only; the test split is held out for evaluation
lr.fit(x_train, y_train)
svr.fit(x_train, y_train.ravel())
xg = xgb.train(param, dtrain, num_round, evals=watchlist, early_stopping_rounds=10)

out:

[0] train-rmse:1.04640 test-rmse:1.04475

[1] train-rmse:0.98451 test-rmse:0.98363

[2] train-rmse:0.93073 test-rmse:0.93074

[3] train-rmse:0.88439 test-rmse:0.88517

[4] train-rmse:0.84244 test-rmse:0.84438

[5] train-rmse:0.80710 test-rmse:0.81022

[6] train-rmse:0.77707 test-rmse:0.78133

[7] train-rmse:0.75142 test-rmse:0.75731

[8] train-rmse:0.72903 test-rmse:0.73546

[9] train-rmse:0.71017 test-rmse:0.71765

[10] train-rmse:0.69146 test-rmse:0.69960

[11] train-rmse:0.67692 test-rmse:0.68635

[12] train-rmse:0.66298 test-rmse:0.67298

[13] train-rmse:0.65210 test-rmse:0.66320

[14] train-rmse:0.64173 test-rmse:0.65345

[15] train-rmse:0.63378 test-rmse:0.64664

[16] train-rmse:0.62579 test-rmse:0.63936

[17] train-rmse:0.61993 test-rmse:0.63410

[18] train-rmse:0.61405 test-rmse:0.62849

[19] train-rmse:0.60906 test-rmse:0.62385

[20] train-rmse:0.60482 test-rmse:0.62023

[21] train-rmse:0.60097 test-rmse:0.61680

[22] train-rmse:0.59790 test-rmse:0.61411

[23] train-rmse:0.59470 test-rmse:0.61117

[24] train-rmse:0.59193 test-rmse:0.60857

[25] train-rmse:0.58939 test-rmse:0.60670

[26] train-rmse:0.58688 test-rmse:0.60459

[27] train-rmse:0.58516 test-rmse:0.60302

[28] train-rmse:0.58327 test-rmse:0.60164

[29] train-rmse:0.58163 test-rmse:0.60073

[30] train-rmse:0.58036 test-rmse:0.59952

[31] train-rmse:0.57869 test-rmse:0.59794

[32] train-rmse:0.57696 test-rmse:0.59644

[33] train-rmse:0.57596 test-rmse:0.59559

[34] train-rmse:0.57463 test-rmse:0.59455

[35] train-rmse:0.57330 test-rmse:0.59346

[36] train-rmse:0.57212 test-rmse:0.59236

[37] train-rmse:0.57119 test-rmse:0.59174

[38] train-rmse:0.57041 test-rmse:0.59106

[39] train-rmse:0.56929 test-rmse:0.59012

[40] train-rmse:0.56834 test-rmse:0.58927

[41] train-rmse:0.56733 test-rmse:0.58862

[42] train-rmse:0.56628 test-rmse:0.58777

[43] train-rmse:0.56491 test-rmse:0.58683

[44] train-rmse:0.56391 test-rmse:0.58618

[45] train-rmse:0.56332 test-rmse:0.58569

[46] train-rmse:0.56253 test-rmse:0.58515

[47] train-rmse:0.56154 test-rmse:0.58434

[48] train-rmse:0.56082 test-rmse:0.58371

[49] train-rmse:0.56017 test-rmse:0.58302

[50] train-rmse:0.55971 test-rmse:0.58265

[51] train-rmse:0.55917 test-rmse:0.58233

[52] train-rmse:0.55806 test-rmse:0.58155

[53] train-rmse:0.55745 test-rmse:0.58125

[54] train-rmse:0.55672 test-rmse:0.58080

[55] train-rmse:0.55569 test-rmse:0.57971

[56] train-rmse:0.55514 test-rmse:0.57927

[57] train-rmse:0.55455 test-rmse:0.57894

[58] train-rmse:0.55408 test-rmse:0.57853

[59] train-rmse:0.55361 test-rmse:0.57818

[60] train-rmse:0.55305 test-rmse:0.57795

[61] train-rmse:0.55269 test-rmse:0.57773

[62] train-rmse:0.55180 test-rmse:0.57714

[63] train-rmse:0.55151 test-rmse:0.57698

[64] train-rmse:0.55115 test-rmse:0.57673

[65] train-rmse:0.55049 test-rmse:0.57638

[66] train-rmse:0.54969 test-rmse:0.57585

[67] train-rmse:0.54928 test-rmse:0.57555

[68] train-rmse:0.54904 test-rmse:0.57539

[69] train-rmse:0.54829 test-rmse:0.57457

[70] train-rmse:0.54804 test-rmse:0.57442

[71] train-rmse:0.54737 test-rmse:0.57405

[72] train-rmse:0.54685 test-rmse:0.57380

[73] train-rmse:0.54622 test-rmse:0.57343

[74] train-rmse:0.54584 test-rmse:0.57330

[75] train-rmse:0.54572 test-rmse:0.57320

[76] train-rmse:0.54557 test-rmse:0.57312

[77] train-rmse:0.54502 test-rmse:0.57257

[78] train-rmse:0.54446 test-rmse:0.57215

[79] train-rmse:0.54392 test-rmse:0.57191

[80] train-rmse:0.54342 test-rmse:0.57153

[81] train-rmse:0.54309 test-rmse:0.57132

[82] train-rmse:0.54299 test-rmse:0.57130

[83] train-rmse:0.54251 test-rmse:0.57103

[84] train-rmse:0.54239 test-rmse:0.57095

[85] train-rmse:0.54197 test-rmse:0.57077

[86] train-rmse:0.54146 test-rmse:0.57042

[87] train-rmse:0.54137 test-rmse:0.57035

[88] train-rmse:0.54091 test-rmse:0.57010

[89] train-rmse:0.54067 test-rmse:0.56994

[90] train-rmse:0.54059 test-rmse:0.56993

[91] train-rmse:0.54031 test-rmse:0.56971

[92] train-rmse:0.54009 test-rmse:0.56960

[93] train-rmse:0.53972 test-rmse:0.56913

[94] train-rmse:0.53932 test-rmse:0.56885

[95] train-rmse:0.53903 test-rmse:0.56882

[96] train-rmse:0.53881 test-rmse:0.56873

[97] train-rmse:0.53849 test-rmse:0.56849

[98] train-rmse:0.53814 test-rmse:0.56835

[99] train-rmse:0.53806 test-rmse:0.56828

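# r2_score expects the true values first and the predictions second
print('R2 score of the linear regression model:', r2_score(y_test, lr.predict(x_test)))
print('R2 score of the support vector regression model:', r2_score(y_test, svr.predict(x_test)))
print('R2 score of the xgboost model:', r2_score(y_test, xg.predict(dtest)))

out (from the original run, in which lr and svr were fit on the test split and r2_score received the predictions as its first argument, so treat these values as indicative only):

R2 score of the linear regression model: 0.3927518839179279

R2 score of the support vector regression model: 0.47270739927209093

R2 score of the xgboost model: 0.5097990013836289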
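One detail worth keeping in mind when reading these scores: `r2_score` takes the true values as its first argument and the predictions as its second, and it is not symmetric. The numbers below are invented purely to show how much the result can change when the two are swapped:

```python
import numpy as np
from sklearn.metrics import r2_score

# Invented values for illustration
y_true = np.array([1.0, 2.0, 3.0, 4.0])
y_pred = np.array([2.0, 2.0, 3.0, 3.0])

# Residual sum of squares is 2 in both cases; only the total sum of squares differs
correct = r2_score(y_true, y_pred)   # 1 - 2/5
swapped = r2_score(y_pred, y_true)   # 1 - 2/1

print(correct, swapped)
```

The denominator of R2 is the variance of whatever is passed first, so swapping the arguments measures spread around the mean of the predictions rather than of the observed prices.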

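# A Booster returned by xgb.train has no feature_importances_ attribute;
# use get_fscore() instead, which counts how often each feature is used in a split
fscore = xg.get_fscore()
im = pd.DataFrame({'importance': list(fscore.values()), 'var': list(fscore.keys())})
im = im.sort_values(by='importance', ascending=False)
print(im.head(10))

out: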

importance var

22 201.0 AREA

23 168.0 floor_num

24 36.0 school

9 34.0 district_南山

14 29.0 district_福田

25 28.0 subway

12 25.0 district_宝安

17 25.0 district_龙岗

10 20.0 district_坪山

11 15.0 district_大鹏新区

xgb.plot_importance(xg, max_num_features=10, importance_type='gain')

plt.show()

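# (3) Hypothetical scenario: make a prediction, where x_new1 holds the new feature values
'''
Estimate the approximate cost of a home that meets these conditions:
1. in Nanshan district
2. 3 rooms
3. an area of about 80 ㎡
4. near a subway station
5. in a school district
'''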

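# Take one existing dummy row for each chosen category: 3 rooms, Nanshan, 3 halls
room = Roomnum.loc[Roomnum['roomnum_3'] == 1].head(1).reset_index(drop=True)
dis = District.loc[District['district_南山'] == 1].head(1).reset_index(drop=True)
hal = Hall.loc[Hall['hall_3'] == 1].head(1).reset_index(drop=True)

x_new1 = pd.concat([room, dis, hal], axis=1)
x_new1['AREA'] = 80
x_new1['floor_num'] = 3
x_new1['school'] = 1
x_new1['subway'] = 1

# Apply the same scaling that was fit on the training features
x_new1_scale = ss_x.transform(x_new1)
dtt = xgb.DMatrix(x_new1_scale, feature_names=list(fea_imp))
p = xg.predict(dtt)

# Undo the standardization: s_y holds the variance of y_train,
# so take its square root to get the standard deviation
per_price = p * s_y ** 0.5 + mean_y
print("Price per square metre:", per_price)
print("Total price:", per_price * 80)

out (from the original run, which multiplied by the variance s_y directly, so the code above will print different values):

Price per square metre: [15.402145]

Total price: [1232.1716]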
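An alternative way to build the scenario row, shown here only as a sketch, is to start from a dictionary of the features that are switched on and let `reindex` fill every other training column with 0. The column list below is a shortened, hypothetical stand-in for the real `fea_imp`:

```python
import pandas as pd

# Hypothetical, shortened list of training columns in the order the model was fit on
train_columns = ["roomnum_2", "roomnum_3", "district_南山", "district_福田",
                 "hall_1", "hall_3", "AREA", "floor_num", "school", "subway"]

# Only the non-zero features of the scenario need to be listed
scenario = {"roomnum_3": 1, "district_南山": 1, "hall_3": 1,
            "AREA": 80, "floor_num": 3, "school": 1, "subway": 1}

# reindex puts the columns in training order and fills the missing dummies with 0
x_new = pd.DataFrame([scenario]).reindex(columns=train_columns, fill_value=0)
print(x_new)
```

This avoids copying a row from the training dummies and makes the column order explicit, which matters because the scaler and the model both expect the features in the order they were trained on.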
