Object Classification with Huawei's MindSpore Framework: Lab Report (Part 2)

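Huawei's MindSpore is one of the frameworks commonly used for deep learning and machine learning. Its workflow is similar to that of TensorFlow, PyTorch and similar frameworks; see the official API documentation for details.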

Experiment Overview

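This experiment uses a convolutional neural network (CNN) to recognize five kinds of flowers. Unlike traditional image classification methods, a CNN needs no hand-crafted features: it learns features rich in semantic information directly from the input images. The work consists of building the classification network and implementing its training and prediction.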

Environment

- PyCharm

- MindSpore 1.2.1 (CPU)

- numpy, matplotlib, easydict, Pillow

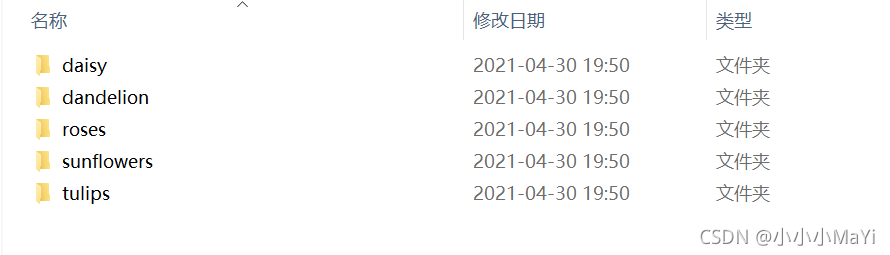

Dataset

Link: Baidu Netdisk

Extraction code: xxmy

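The dataset contains images of 5 kinds of flowers. Each folder is named after one flower class and holds that class's images. The classes are daisy (633 images), dandelion (898), roses (641), sunflowers (699) and tulips (799), for 3,670 images in total.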
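`ImageFolderDataset` relies on this one-folder-per-class layout. The following is a minimal sketch that uses only the Python standard library to count the images in each class folder, so a local copy can be checked against the figures above. The function name `count_images_per_class` and the set of accepted extensions are assumptions for illustration.

```python
from pathlib import Path

# File extensions counted as images (an assumption; adjust if the folders contain other formats)
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def count_images_per_class(root):
    """Return {class_folder_name: number_of_image_files} for every subfolder of root."""
    counts = {}
    for class_dir in sorted(Path(root).iterdir()):
        if class_dir.is_dir():  # loose files in the root (e.g. a license file) are skipped
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir() if f.suffix.lower() in IMAGE_EXTS
            )
    return counts
```

For example, `count_images_per_class(r'H:/dataset/flower_data/flower_photos/')` should report the five class folders, and the values should sum to 3,670 if the download is complete.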

Example: daisy

Procedure

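- Load the dataset

- Define the network structure

- Set up the optimizer, loss function and callbacks

- Train the network

- Predict on the validation set

- Predict a single image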

Core Code

Configure the training parameters

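```python
from easydict import EasyDict

# Configuration parameters
cfg = EasyDict({
    'data_path': r'H:/dataset/flower_data/flower_photos/',  # dataset root directory
    'data_size': 3670,             # total number of images
    'image_width': 100,            # image width
    'image_height': 100,           # image height
    'batch_size': 32,              # samples per batch
    'channel': 3,                  # number of input image channels
    'num_class': 5,                # number of classes in the dataset
    'weight_decay': 0.01,          # weight decay (not used: the Adam optimizer sets weight_decay=0.0)
    'lr': 0.001,                   # learning rate
    'dropout_ratio': 0.5,          # dropout ratio
    'epoch_size': 1,               # number of training epochs
    'sigma': 0.01,                 # std. dev. of the TruncatedNormal weight initializer
    'save_checkpoint_steps': 1,    # save a checkpoint every N steps
    'keep_checkpoint_max': 1,      # maximum number of checkpoints to keep
    'output_directory': './',      # directory for saved checkpoints
    'output_prefix': "checkpoint_classification"  # checkpoint file name prefix
})
```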

Load the dataset (read, crop and resize, transpose dimensions, cast the type, shuffle, split, batch)

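```python
import mindspore.dataset as ds
import mindspore.dataset.vision.c_transforms as CV
import mindspore.dataset.transforms.c_transforms as C
from mindspore.common import dtype as mstype

# Read the images folder by folder and map each folder name to a class index.
# shuffle=False: the MindSpore docs recommend not shuffling before split()
# and letting split(randomize=True) do the random partition instead.
de_dataset = ds.ImageFolderDataset(dataset_dir=cfg.data_path,
                                   shuffle=False,
                                   class_indexing={'daisy': 0,
                                                   'dandelion': 1,
                                                   'roses': 2,
                                                   'sunflowers': 3,
                                                   'tulips': 4})

# Split into training and validation sets (9:1)
de_train, de_test = de_dataset.split([0.9, 0.1], randomize=True)
print("Training set (images):", de_train.get_dataset_size())
print("Validation set (images):", de_test.get_dataset_size())

# Decode, randomly crop and resize every image to the same size; size is (height, width)
transform_img = CV.RandomCropDecodeResize(size=[cfg.image_height, cfg.image_width])
# Change the image layout (H, W, C) -> (C, H, W)
hwc2chw_op = CV.HWC2CHW()
# Cast the pixel values to float32
type_cast_op = C.TypeCast(mstype.float32)


def preprocess(dataset):
    """Apply the three image operations to the 'image' column."""
    for op in (transform_img, hwc2chw_op, type_cast_op):
        dataset = dataset.map(input_columns='image', num_parallel_workers=3, operations=op)
    return dataset


de_train = preprocess(de_train)
de_test = preprocess(de_test)

# Shuffle the training data only
de_train = de_train.shuffle(buffer_size=cfg.data_size)

# Group the samples into batches of batch_size
de_train = de_train.batch(batch_size=cfg.batch_size, drop_remainder=True)
de_test = de_test.batch(batch_size=cfg.batch_size, drop_remainder=True)
```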
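Here is a quick check of what the 9:1 split and the batching produce. It assumes `split` rounds each fraction of the 3,670 images to the nearest integer, giving 3,303 training and 367 validation images. With `batch_size=32` and `drop_remainder=True`, that means 103 training batches per epoch and 11 validation batches, so 15 validation images are left out of every evaluation. The helper `split_and_batch_counts` is hypothetical.

```python
def split_and_batch_counts(total, fractions, batch_size, drop_remainder=True):
    """Images per split, batches per split and images left out by drop_remainder."""
    # Assumption: each fraction of the total is rounded to the nearest integer
    rows = [round(f * total) for f in fractions]
    if drop_remainder:
        batches = [r // batch_size for r in rows]
        dropped = [r % batch_size for r in rows]
    else:
        batches = [-(-r // batch_size) for r in rows]  # ceiling division
        dropped = [0] * len(rows)
    return rows, batches, dropped


rows, batches, dropped = split_and_batch_counts(3670, [0.9, 0.1], 32)
print("images per split:", rows)
print("batches per split:", batches)
print("images dropped per split:", dropped)
```

If every validation image should count, the validation set could be batched with `drop_remainder=False`. The training set is unaffected by that choice.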

Define the network structure

```python
from mindspore import nn
from mindspore.common.initializer import TruncatedNormal

# Define the network structure
print('--------------- Defining the network -------------')


class Our_Net(nn.Cell):
    def __init__(self, num_class=5, channel=3, dropout_ratio=0.5, init_sigma=0.01):
        super(Our_Net, self).__init__()
        self.num_class = num_class
        self.channel = channel
        self.dropout_ratio = dropout_ratio
        # Parameters: (3*5*5 + 1) * 32 = 2,432
        self.conv1 = nn.Conv2d(in_channels=self.channel, out_channels=32, kernel_size=5,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        self.relu = nn.ReLU()
        self.max_pool = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode='valid')
        # Parameters: (32*5*5 + 1) * 64 = 51,264
        self.conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        # Parameters: (64*3*3 + 1) * 128 = 73,856
        self.conv3 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        # Parameters: (128*3*3 + 1) * 128 = 147,584
        self.conv4 = nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        self.flatten = nn.Flatten()
        # Parameters: (6*6*128 + 1) * 1024 = 4,719,616
        self.fc1 = nn.Dense(6*6*128, 1024, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)
        # In MindSpore 1.x the first argument of nn.Dropout is keep_prob;
        # with dropout_ratio = 0.5 the keep and drop probabilities coincide
        self.dropout = nn.Dropout(self.dropout_ratio)
        # Parameters: (1024 + 1) * 512 = 524,800
        self.fc2 = nn.Dense(1024, 512, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)
        # Parameters: (512 + 1) * 5 = 2,565 for 5 classes
        self.fc3 = nn.Dense(512, self.num_class, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)

    def construct(self, x):
        x = self.conv1(x)      # 100x100x32
        x = self.relu(x)
        x = self.max_pool(x)   # 50x50x32
        x = self.conv2(x)      # 50x50x64
        x = self.relu(x)
        x = self.max_pool(x)   # 25x25x64
        x = self.conv3(x)      # 25x25x128 (no ReLU follows conv3 or conv4)
        x = self.max_pool(x)   # 12x12x128
        x = self.conv4(x)      # 12x12x128
        x = self.max_pool(x)   # 6x6x128
        x = self.flatten(x)
        x = self.fc1(x)
        x = self.relu(x)
        x = self.dropout(x)
        x = self.fc2(x)
        x = self.relu(x)
        x = self.dropout(x)
        x = self.fc3(x)
        return x


net = Our_Net(num_class=cfg.num_class, channel=cfg.channel,
              dropout_ratio=cfg.dropout_ratio, init_sigma=cfg.sigma)
```
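The shape comments and per-layer parameter counts in the network can be checked with plain arithmetic. The sketch below relies on two facts about the network as defined: the `pad_mode='same'` convolutions with stride 1 keep the spatial size, and the 2x2 stride-2 `pad_mode='valid'` max pooling halves it, rounding down. It reproduces the 6x6x128 input of `fc1` and sums the layers to 5,522,117 trainable parameters, about 85% of which sit in `fc1`. The helper names are illustrative.

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in weights per output channel, plus one bias per output channel
    return (k * k * c_in + 1) * c_out


def dense_params(n_in, n_out):
    # one weight per input, plus one bias, for every output unit
    return (n_in + 1) * n_out


# Spatial size: a 'same' conv (stride 1) keeps it, 2x2/2 'valid' max pooling floor-halves it
size = 100
for _ in range(4):  # four conv + max-pool stages: 100 -> 50 -> 25 -> 12 -> 6
    size = size // 2
print("feature map before flatten:", size, "x", size, "x 128")

layers = {
    "conv1": conv_params(5, 3, 32),
    "conv2": conv_params(5, 32, 64),
    "conv3": conv_params(3, 64, 128),
    "conv4": conv_params(3, 128, 128),
    "fc1": dense_params(size * size * 128, 1024),
    "fc2": dense_params(1024, 512),
    "fc3": dense_params(512, 5),
}
for name, n in layers.items():
    print(f"{name}: {n:,}")
total = sum(layers.values())
print(f"total: {total:,}")
```

The total should agree with the parameter count printed by the full training script, which sums `np.prod(param.shape)` over `net.trainable_params()`.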

Define the loss function, optimizer and model

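```python
from mindspore.train import Model

# Loss function: softmax cross-entropy on integer (sparse) labels, averaged over the batch
loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction="mean")

# Optimizer (parameters to update, learning rate, weight decay);
# weight_decay is fixed to 0.0 here, so cfg.weight_decay is not used
net_opt = nn.Adam(params=net.trainable_params(), learning_rate=cfg.lr, weight_decay=0.0)

# Model object (network, loss function, optimizer, evaluation metric)
model = Model(network=net, loss_fn=loss, optimizer=net_opt, metrics={"acc"})
```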
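`SoftmaxCrossEntropyWithLogits(sparse=True, reduction="mean")` takes the raw network outputs (logits) together with integer class labels. The NumPy sketch below illustrates the computation and is not MindSpore's implementation. It applies a numerically stable log-softmax to each row, takes the negative log-probability of the true class, and averages over the batch. With five classes and near-equal outputs, the loss starts close to ln 5 ≈ 1.61, which is a useful reference when reading the `LossMonitor` output.

```python
import numpy as np


def sparse_softmax_cross_entropy(logits, labels):
    """Mean cross-entropy between logits of shape (N, C) and integer labels of shape (N,)."""
    logits = np.asarray(logits, dtype=np.float64)
    labels = np.asarray(labels)
    # Subtract the row maximum so exp() cannot overflow; the softmax is unchanged
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the correct class in every row
    picked = log_probs[np.arange(len(labels)), labels]
    return -picked.mean()


# All-zero logits give every class probability 1/5, so the loss equals ln(5)
print(sparse_softmax_cross_entropy(np.zeros((4, 5)), [0, 1, 2, 3]))
```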

Callback that computes the validation accuracy

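```python
from mindspore.train.callback import Callback


# Custom callback that computes the validation accuracy; inherits from Callback
class valAccCallback(Callback):
    '''
    Custom callback invoked after the network finishes training on each batch.
    '''
    def __init__(self, net, eval_data):
        super(valAccCallback, self).__init__()
        self.net = net              # a Model instance, since eval() is called on it
        self.eval_data = eval_data

    def step_end(self, run_context):
        # Evaluates the whole validation set after every training step, which is slow;
        # overriding epoch_end instead would run the evaluation once per epoch
        metric = self.net.eval(self.eval_data)
        print('Validation accuracy:', metric)


# Instantiate the callback
valAcc_cb = valAccCallback(model, de_test)
```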
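Because `step_end` runs after every training step, the callback above evaluates the entire validation set once per step. With about 3,303 training images and `batch_size=32`, that is roughly 103 times per epoch. The framework-free sketch below shows the alternative of evaluating only every `eval_every` steps. The class `ThrottledEval` and its `eval_fn` argument are hypothetical and not part of MindSpore's API. Inside MindSpore, the same effect could come from overriding `epoch_end`, or from checking `run_context.original_args().cur_step_num` in `step_end`.

```python
class ThrottledEval:
    """Call eval_fn only on every eval_every-th training step (hypothetical helper)."""

    def __init__(self, eval_fn, eval_every):
        self.eval_fn = eval_fn
        self.eval_every = eval_every
        self.step = 0
        self.results = []

    def step_end(self):
        # Count the finished step and evaluate only on multiples of eval_every
        self.step += 1
        if self.step % self.eval_every == 0:
            result = self.eval_fn()
            self.results.append((self.step, result))
            print(f"step {self.step}: validation metric {result}")


# Usage with a stand-in evaluation function
calls = []


def fake_eval():
    calls.append(1)
    return {"acc": 0.5}


cb = ThrottledEval(fake_eval, eval_every=50)
for _ in range(103):  # one epoch of 103 training steps
    cb.step_end()
print("evaluations:", len(calls))
```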

Configure loss logging and checkpoint (weight) output

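```python
from mindspore.train.callback import LossMonitor, CheckpointConfig, ModelCheckpoint

# Print the loss after every step
loss_cb = LossMonitor(per_print_times=1)

# Checkpoint settings: how often to save and how many files to keep
config_ck = CheckpointConfig(save_checkpoint_steps=cfg.save_checkpoint_steps,
                             keep_checkpoint_max=cfg.keep_checkpoint_max)

# Checkpoint callback
ckpoint_cb = ModelCheckpoint(prefix=cfg.output_prefix, directory=cfg.output_directory, config=config_ck)
```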

Start training

```python
print('--------------- Training started -------------')
model.train(cfg.epoch_size, de_train, callbacks=[loss_cb, ckpoint_cb, valAcc_cb], dataset_sink_mode=False)
print('--------------- Training finished -------------')
```

After training, compute the accuracy on the validation set.

```python
# Evaluate the model on the validation set and print the overall accuracy
metric = model.eval(de_test)
print(metric)
```
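The `"acc"` metric passed to `Model` reports the fraction of samples whose highest-scoring class matches the label, and `model.eval` returns it in a dictionary of the form `{'acc': ...}`. The NumPy sketch below shows that computation over a sequence of batches and is for illustration only; the function `batched_accuracy` is hypothetical.

```python
import numpy as np


def batched_accuracy(batches):
    """batches: iterable of (logits (N, C), labels (N,)); returns {'acc': fraction correct}."""
    correct = 0
    total = 0
    for logits, labels in batches:
        preds = np.argmax(logits, axis=1)  # predicted class per sample
        correct += int((preds == np.asarray(labels)).sum())
        total += len(labels)
    return {"acc": correct / total}


batches = [
    (np.array([[2.0, 0.1, 0.0, 0.0, 0.0], [0.0, 3.0, 0.0, 0.0, 0.0]]), [0, 2]),
    (np.array([[0.0, 0.0, 0.0, 0.0, 1.0]]), [4]),
]
print(batched_accuracy(batches))
```

The counts are accumulated over all samples rather than averaged per batch, which matters whenever batches have different sizes.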

Training code

Only the dataset path needs to be changed; then right-click the file in PyCharm and choose Run.

```python
# -*- coding: utf-8 -*-
# @Time    : 2021-10-25 17:04
# @Author  : Anle
# @FileName: pro_2.py.py
# @Software: PyCharm
# @Email   : 2212086365@qq.com
import numpy as np
from easydict import EasyDict
import mindspore.dataset as ds
import mindspore.dataset.vision.c_transforms as CV  # data augmentation module
import mindspore.dataset.transforms.c_transforms as C
from mindspore.common import dtype as mstype
from mindspore.common.initializer import TruncatedNormal
from mindspore import nn
from mindspore.train import Model
from mindspore.train.callback import ModelCheckpoint, CheckpointConfig, LossMonitor, Callback

# Configuration parameters
cfg = EasyDict({
    'data_path': r'H:/dataset/flower_data/flower_photos/',  # dataset root directory
    'data_size': 3670,             # total number of images
    'image_width': 100,            # image width
    'image_height': 100,           # image height
    'batch_size': 32,              # samples per batch
    'channel': 3,                  # number of input image channels
    'num_class': 5,                # number of classes the network predicts
    'weight_decay': 0.01,          # weight decay (not used: Adam below sets weight_decay=0.0)
    'lr': 0.001,                   # learning rate
    'dropout_ratio': 0.5,          # dropout ratio
    'epoch_size': 1,               # number of training epochs
    'sigma': 0.01,                 # std. dev. of the TruncatedNormal weight initializer
    'save_checkpoint_steps': 1,    # save a checkpoint every N steps
    'keep_checkpoint_max': 1,      # maximum number of checkpoints to keep
    'output_directory': './',      # directory for saved checkpoints
    'output_prefix': "checkpoint_classification"  # checkpoint file name prefix
})

print("-------------- Reading the dataset --------------")
# Read the images folder by folder and map each folder name to a class index.
# shuffle=False: the MindSpore docs recommend not shuffling before split()
# and letting split(randomize=True) do the random partition instead.
de_dataset = ds.ImageFolderDataset(dataset_dir=cfg.data_path,
                                   shuffle=False,
                                   class_indexing={'daisy': 0,
                                                   'dandelion': 1,
                                                   'roses': 2,
                                                   'sunflowers': 3,
                                                   'tulips': 4})

# Split into training and validation sets (9:1)
de_train, de_test = de_dataset.split([0.9, 0.1], randomize=True)
print("Training set (images):", de_train.get_dataset_size())
print("Validation set (images):", de_test.get_dataset_size())

# Decode, randomly crop and resize every image to the same size; size is (height, width)
transform_img = CV.RandomCropDecodeResize(size=[cfg.image_height, cfg.image_width])
# Change the image layout (H, W, C) -> (C, H, W)
hwc2chw_op = CV.HWC2CHW()
# Cast the pixel values to float32
type_cast_op = C.TypeCast(mstype.float32)


def preprocess(dataset):
    """Apply the three image operations to the 'image' column."""
    for op in (transform_img, hwc2chw_op, type_cast_op):
        dataset = dataset.map(input_columns='image', num_parallel_workers=3, operations=op)
    return dataset


de_train = preprocess(de_train)
de_test = preprocess(de_test)
# Shuffle the training data only
de_train = de_train.shuffle(buffer_size=cfg.data_size)
# Group the samples into batches of batch_size
de_train = de_train.batch(batch_size=cfg.batch_size, drop_remainder=True)
de_test = de_test.batch(batch_size=cfg.batch_size, drop_remainder=True)

# Define the network structure
print('--------------- Defining the network -------------')


class Our_Net(nn.Cell):
    def __init__(self, num_class=5, channel=3, dropout_ratio=0.5, init_sigma=0.01):
        super(Our_Net, self).__init__()
        self.num_class = num_class
        self.channel = channel
        self.dropout_ratio = dropout_ratio
        # Parameters: (3*5*5 + 1) * 32 = 2,432
        self.conv1 = nn.Conv2d(in_channels=self.channel, out_channels=32, kernel_size=5,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        self.relu = nn.ReLU()
        self.max_pool = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode='valid')
        # Parameters: (32*5*5 + 1) * 64 = 51,264
        self.conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        # Parameters: (64*3*3 + 1) * 128 = 73,856
        self.conv3 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        # Parameters: (128*3*3 + 1) * 128 = 147,584
        self.conv4 = nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        self.flatten = nn.Flatten()
        # Parameters: (6*6*128 + 1) * 1024 = 4,719,616
        self.fc1 = nn.Dense(6*6*128, 1024, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)
        # In MindSpore 1.x the first argument of nn.Dropout is keep_prob;
        # with dropout_ratio = 0.5 the keep and drop probabilities coincide
        self.dropout = nn.Dropout(self.dropout_ratio)
        # Parameters: (1024 + 1) * 512 = 524,800
        self.fc2 = nn.Dense(1024, 512, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)
        # Parameters: (512 + 1) * 5 = 2,565 for 5 classes
        self.fc3 = nn.Dense(512, self.num_class, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)

    def construct(self, x):
        x = self.conv1(x)      # 100x100x32
        x = self.relu(x)
        x = self.max_pool(x)   # 50x50x32
        x = self.conv2(x)      # 50x50x64
        x = self.relu(x)
        x = self.max_pool(x)   # 25x25x64
        x = self.conv3(x)      # 25x25x128 (no ReLU follows conv3 or conv4)
        x = self.max_pool(x)   # 12x12x128
        x = self.conv4(x)      # 12x12x128
        x = self.max_pool(x)   # 6x6x128
        x = self.flatten(x)
        x = self.fc1(x)
        x = self.relu(x)
        x = self.dropout(x)
        x = self.fc2(x)
        x = self.relu(x)
        x = self.dropout(x)
        x = self.fc3(x)
        return x


net = Our_Net(num_class=cfg.num_class, channel=cfg.channel,
              dropout_ratio=cfg.dropout_ratio, init_sigma=cfg.sigma)

# Count the trainable parameters of the network
total_params = 0
for param in net.trainable_params():
    total_params += np.prod(param.shape)
print('Number of parameters:', total_params)

# Loss function: softmax cross-entropy on integer (sparse) labels, averaged over the batch
loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction="mean")

# Optimizer (parameters to update, learning rate, weight decay);
# weight_decay is fixed to 0.0 here, so cfg.weight_decay is not used
net_opt = nn.Adam(params=net.trainable_params(), learning_rate=cfg.lr, weight_decay=0.0)

# Model object (network, loss function, optimizer, evaluation metric)
model = Model(network=net, loss_fn=loss, optimizer=net_opt, metrics={"acc"})


# Custom callback that computes the validation accuracy; inherits from Callback
class valAccCallback(Callback):
    '''
    Custom callback invoked after the network finishes training on each batch.
    '''
    def __init__(self, net, eval_data):
        super(valAccCallback, self).__init__()
        self.net = net              # a Model instance, since eval() is called on it
        self.eval_data = eval_data

    def step_end(self, run_context):
        # Evaluates the whole validation set after every training step (slow)
        metric = self.net.eval(self.eval_data)
        print('Validation accuracy:', metric)


# Instantiate the callback
valAcc_cb = valAccCallback(model, de_test)

# Print the loss after every step
loss_cb = LossMonitor(per_print_times=1)

# Checkpoint settings: how often to save and how many files to keep
config_ck = CheckpointConfig(save_checkpoint_steps=cfg.save_checkpoint_steps,
                             keep_checkpoint_max=cfg.keep_checkpoint_max)

# Checkpoint callback
ckpoint_cb = ModelCheckpoint(prefix=cfg.output_prefix, directory=cfg.output_directory, config=config_ck)

print('--------------- Training started -------------')
model.train(cfg.epoch_size, de_train, callbacks=[loss_cb, ckpoint_cb, valAcc_cb], dataset_sink_mode=False)
print('--------------- Training finished -------------')

# Evaluate the model on the validation set and print the overall accuracy
metric = model.eval(de_test)
print(metric)
```

Load a single image and convert it to the input format the network expects.

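```python
import numpy as np
import mindspore
from mindspore import Tensor
from PIL import Image

img_path = input('Enter Path:')
# Read the image; convert('RGB') guards against grayscale or RGBA (e.g. PNG) files
img = Image.open(fp=img_path).convert('RGB')
# Resize to the network input size
temp = img.resize((100, 100))
# Convert to a numpy array of shape (H, W, C)
temp = np.array(temp)
# Convert the HWC layout to CHW
temp = temp.transpose(2, 0, 1)
# Add a batch dimension: (1, C, H, W)
temp = np.expand_dims(temp, 0)
# Convert the array to a float32 tensor
img_tensor = Tensor(temp, dtype=mindspore.float32)
```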
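The array steps above can be checked without MindSpore or a real image file. The NumPy-only sketch below assumes the image has already been resized to 100x100. It also handles grayscale (H, W) and RGBA (H, W, 4) arrays, which would otherwise give the network the wrong number of channels. The function name `to_network_input` is hypothetical.

```python
import numpy as np


def to_network_input(img_hwc):
    """Convert an (H, W), (H, W, 3) or (H, W, 4) array to a float32 batch of shape (1, 3, H, W)."""
    arr = np.asarray(img_hwc)
    if arr.ndim == 2:             # grayscale: repeat the single channel three times
        arr = np.stack([arr] * 3, axis=-1)
    elif arr.shape[-1] == 4:      # RGBA: drop the alpha channel
        arr = arr[..., :3]
    chw = arr.transpose(2, 0, 1)  # (H, W, C) -> (C, H, W)
    batch = np.expand_dims(chw, 0)  # (C, H, W) -> (1, C, H, W)
    return batch.astype(np.float32)


rgba = np.zeros((100, 100, 4), dtype=np.uint8)
print(to_network_input(rgba).shape)
```

Pixel values stay in the 0 to 255 range. This matches the training pipeline, which casts to float32 without normalizing.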

Load the network weights and instantiate the model

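The checkpoint file was generated automatically on Huawei Cloud ModelArts when the training job finished; download it to your local machine to use it.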

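```python
import os
from mindspore import load_checkpoint, Model

# Checkpoint path
CKPT = os.path.join('.', 'checkpoint_classification-200_104.ckpt')
# Instantiate the network
net = Our_Net(num_class=cfg.num_class,
              channel=cfg.channel,
              dropout_ratio=cfg.dropout_ratio)
# Load the weights into the network
load_checkpoint(CKPT, net=net)
# Wrap the network in a Model for inference
model = Model(net)
```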
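MindSpore's `ModelCheckpoint` names its files `<prefix>-<epoch>_<step>.ckpt`, so `checkpoint_classification-200_104.ckpt` should correspond to step 104 of epoch 200 of the ModelArts run. The standard-library sketch below parses such names, for example to pick the latest checkpoint in a directory. The functions `parse_ckpt_name` and `latest_ckpt` are hypothetical, and the naming pattern is an assumption based on MindSpore's default behavior.

```python
import re

# Assumed pattern: <prefix>-<epoch>_<step_in_epoch>.ckpt
CKPT_PATTERN = re.compile(r"^(?P<prefix>.+)-(?P<epoch>\d+)_(?P<step>\d+)\.ckpt$")


def parse_ckpt_name(filename):
    """Return (prefix, epoch, step) for a checkpoint file name, or None if it does not match."""
    m = CKPT_PATTERN.match(filename)
    if m is None:
        return None
    return m.group("prefix"), int(m.group("epoch")), int(m.group("step"))


def latest_ckpt(filenames):
    """Return the matching file name with the highest (epoch, step), or None."""
    parsed = [(parse_ckpt_name(f), f) for f in filenames]
    parsed = [(p, f) for p, f in parsed if p is not None]
    if not parsed:
        return None
    # Compare numbers, not strings: as text, epoch 9 would sort after epoch 200
    return max(parsed, key=lambda pf: (pf[0][1], pf[0][2]))[1]


print(parse_ckpt_name("checkpoint_classification-200_104.ckpt"))
```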

Predict the image class and show the result.

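```python
import matplotlib.pyplot as plt

# Label index of each of the five flower classes
class_names = {0: 'daisy', 1: 'dandelion', 2: 'roses', 3: 'sunflowers', 4: 'tulips'}
# Run the network
predictions = model.predict(img_tensor)
# Convert the prediction to a numpy array
predictions = predictions.asnumpy()
# The index of the largest output is the predicted class; look up its name
label = class_names[np.argmax(predictions)]
# Show the prediction as the plot title
plt.title("Prediction: {}".format(label))
# Show the image
plt.imshow(np.array(img))
plt.show()
```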
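`model.predict` returns raw logits, so the code above reports only the arg-max class. The NumPy sketch below also converts the logits to softmax probabilities, so a confidence value can be shown next to the label. `predict_label` is a hypothetical helper, and `class_names` is the same mapping as above.

```python
import numpy as np

class_names = {0: 'daisy', 1: 'dandelion', 2: 'roses', 3: 'sunflowers', 4: 'tulips'}


def predict_label(logits):
    """Return (class name, softmax probability) for a (1, num_class) logits array."""
    scores = np.asarray(logits, dtype=np.float64).reshape(-1)
    probs = np.exp(scores - scores.max())  # numerically stable softmax
    probs /= probs.sum()
    idx = int(np.argmax(probs))
    return class_names[idx], float(probs[idx])


label, prob = predict_label(np.array([[0.0, 0.0, 5.0, 0.0, 0.0]]))
print("Prediction: {} ({:.1%})".format(label, prob))
```

The formatted string could be passed to `plt.title` in place of the bare label.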

Full prediction code

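Right-click the file and choose Run to execute it. The checkpoint file `checkpoint_classification-200_104.ckpt` must be in the working directory.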

```python
# -*- coding: utf-8 -*-
# @Time    : 2021-11-13 14:30
# @Author  : Anle
# @FileName: pro_2_predict.py
# @Software: PyCharm
# @Email   : 2212086365@qq.com
import os
import traceback

import matplotlib.pyplot as plt
import mindspore
from easydict import EasyDict
import numpy as np
from mindspore.common.initializer import TruncatedNormal
from mindspore import nn, load_checkpoint, Model
from PIL import Image
from mindspore import Tensor

plt.rcParams['font.sans-serif'] = ['SimHei']  # font that can render Chinese characters in plots
plt.rcParams['axes.unicode_minus'] = False     # render minus signs correctly with this font

# Configuration parameters (prediction only uses num_class, channel and dropout_ratio)
cfg = EasyDict({
    'data_path': 'H:/dataset/flower_data/flower_photos/',
    'data_size': 3670,             # number of images
    'image_width': 100,            # width
    'image_height': 100,           # height
    'batch_size': 32,
    'channel': 3,                  # channels
    'num_class': 5,                # number of classes
    'weight_decay': 0.01,          # weight decay
    'lr': 0.0001,                  # learning rate
    'dropout_ratio': 0.5,
    'epoch_size': 1,               # number of training epochs
    'sigma': 0.01,
    'save_checkpoint_steps': 1,    # save a checkpoint every N steps
    'keep_checkpoint_max': 1,      # maximum number of checkpoints to keep
    'output_directory': './',      # directory for saved checkpoints
    'output_prefix': "checkpoint_classification"  # checkpoint file name prefix
})


# Network structure (must match the one used for training)
class Our_Net(nn.Cell):
    def __init__(self, num_class=5, channel=3, dropout_ratio=0.5, init_sigma=0.01):
        super(Our_Net, self).__init__()
        self.num_class = num_class
        self.channel = channel
        self.dropout_ratio = dropout_ratio
        # Parameters: (3*5*5 + 1) * 32 = 2,432
        self.conv1 = nn.Conv2d(in_channels=self.channel, out_channels=32, kernel_size=5,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        self.relu = nn.ReLU()
        self.max_pool = nn.MaxPool2d(kernel_size=2, stride=2, pad_mode='valid')
        # Parameters: (32*5*5 + 1) * 64 = 51,264
        self.conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        # Parameters: (64*3*3 + 1) * 128 = 73,856
        self.conv3 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        # Parameters: (128*3*3 + 1) * 128 = 147,584
        self.conv4 = nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3,
                               stride=1, padding=0, has_bias=True, pad_mode='same',
                               bias_init='zeros', weight_init=TruncatedNormal(sigma=init_sigma))
        self.flatten = nn.Flatten()
        # Parameters: (6*6*128 + 1) * 1024 = 4,719,616
        self.fc1 = nn.Dense(6*6*128, 1024, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)
        # In MindSpore 1.x the first argument of nn.Dropout is keep_prob
        self.dropout = nn.Dropout(self.dropout_ratio)
        # Parameters: (1024 + 1) * 512 = 524,800
        self.fc2 = nn.Dense(1024, 512, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)
        # Parameters: (512 + 1) * 5 = 2,565 for 5 classes
        self.fc3 = nn.Dense(512, self.num_class, weight_init=TruncatedNormal(sigma=init_sigma), bias_init=0.1)

    def construct(self, x):
        x = self.conv1(x)      # 100x100x32
        x = self.relu(x)
        x = self.max_pool(x)   # 50x50x32
        x = self.conv2(x)      # 50x50x64
        x = self.relu(x)
        x = self.max_pool(x)   # 25x25x64
        x = self.conv3(x)      # 25x25x128 (no ReLU follows conv3 or conv4)
        x = self.max_pool(x)   # 12x12x128
        x = self.conv4(x)      # 12x12x128
        x = self.max_pool(x)   # 6x6x128
        x = self.flatten(x)
        x = self.fc1(x)
        x = self.relu(x)
        x = self.dropout(x)
        x = self.fc2(x)
        x = self.relu(x)
        x = self.dropout(x)
        x = self.fc3(x)
        return x


# Checkpoint path
CKPT = os.path.join('.', 'checkpoint_classification-200_104.ckpt')
# Build the network and load the weights once, before the prediction loop
net = Our_Net(num_class=cfg.num_class,
              channel=cfg.channel,
              dropout_ratio=cfg.dropout_ratio)
load_checkpoint(CKPT, net=net)
model = Model(net)

# Label index of each of the five flower classes
class_names = {0: 'daisy', 1: 'dandelion', 2: 'roses', 3: 'sunflowers', 4: 'tulips'}

while True:
    img_path = input('Enter Path:')
    try:
        # Read the image as RGB (also handles grayscale and RGBA files)
        img = Image.open(fp=img_path).convert('RGB')
        # Resize to the network input size
        temp = img.resize((100, 100))
        # Convert to a numpy array of shape (H, W, C)
        temp = np.array(temp)
        # Convert the HWC layout to CHW
        temp = temp.transpose(2, 0, 1)
        # Add a batch dimension: (1, C, H, W)
        temp = np.expand_dims(temp, 0)
        # Convert the array to a float32 tensor
        img_tensor = Tensor(temp, dtype=mindspore.float32)
        # Run the network and convert the result to numpy
        predictions = model.predict(img_tensor).asnumpy()
        # The index of the largest output is the predicted class
        label = class_names[np.argmax(predictions)]
        # Show the prediction and the image
        plt.title("Prediction: {}".format(label))
        plt.imshow(np.array(img))
        plt.show()
    except Exception:
        traceback.print_exc()
        print("Invalid path, please try again!")
```