1. Environment

Python, PyTorch, Torchvision, Pandas, PIL, etc.

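2. Dataset

The data comes from the Chinese traffic sign detection dataset, which contains 5998 traffic sign images in 58 categories. Each image is a zoomed-in view of a single traffic sign. 90% of the data is used for training and the remaining 10% for testing.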
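As a quick check of the 90/10 split, the sizes can be worked out by hand. This is a minimal sketch that assumes the split boundary is truncated with int(); the variable names are illustrative only.

```python
# Sizes of a 90/10 split over the 5998 images mentioned in the text.
# Assumption: the boundary index is truncated with int().
n_images = 5998
n_train = int(n_images * 0.9)  # images used for training
n_test = n_images - n_train    # remaining images used for testing
print(n_train, n_test)
```

Under that assumption the split yields 5398 training images and 600 test images.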

3. Data Preview

The figure below shows images after torchvision preprocessing, not the original images.

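4. Data Loading

Loading the data requires subclassing the Dataset base class and overriding two methods, __getitem__() and __len__(). __getitem__() returns the data and its label, one image per call; __len__() returns the size of the dataset, that is, the number of images. The dataset is then loaded through the DataLoader class.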

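    import os

    import matplotlib.pyplot as plt
    import pandas as pd
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim
    import torchvision
    from PIL import Image
    from torch.utils.data import Dataset, DataLoader


    class TrafficData(Dataset):
        def __init__(self, path, train=True):
            super(TrafficData, self).__init__()
            # annotations.csv lists each image file name and its category label
            df = pd.read_csv(os.path.join(path, 'annotations.csv'))
            labels = df.category.tolist()
            image_files = df.file_name.tolist()
            self.path = path
            del df
            self.transform = torchvision.transforms.Compose([
                torchvision.transforms.Resize((128, 128)),
                torchvision.transforms.ToTensor(),
                # A single mean/std value, broadcast across all three channels
                torchvision.transforms.Normalize((0.1307,), (0.3081,))
            ])
            # Sequential split in file order: first 90% for training, last 10% for testing
            split = int(len(image_files) * 0.9)
            if train:
                self.image_files = image_files[:split]
                self.labels = labels[:split]
            else:
                self.image_files = image_files[split:]
                self.labels = labels[split:]

        def __getitem__(self, index):
            image_path = os.path.join(self.path, 'images', self.image_files[index])
            # Force three channels so every sample matches the model's in_channels=3
            image = Image.open(image_path).convert('RGB')
            return self.transform(image), self.labels[index]

        def __len__(self):
            return len(self.image_files)


    train_loader = DataLoader(dataset=TrafficData('../input/chinese-traffic-signs', train=True),
                              batch_size=32, shuffle=True, drop_last=True)
    # Evaluate on every test image: no shuffling, no dropped partial batch
    test_loader = DataLoader(dataset=TrafficData('../input/chinese-traffic-signs', train=False),
                             batch_size=32, shuffle=False, drop_last=False)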
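The drop_last option discards the final partial batch, so a loader can yield fewer samples than the dataset holds. The sketch below works through the arithmetic in plain Python; the helper samples_yielded is hypothetical, and the sizes 5398 and 600 assume a 90/10 split of 5998 images.

```python
# Effect of drop_last on how many samples a loader actually yields.
# Assumption: 5398 training and 600 test images (a 90/10 split of 5998).
def samples_yielded(n_samples, batch_size, drop_last):
    """Number of samples delivered in one pass over the data."""
    full_batches, remainder = divmod(n_samples, batch_size)
    if drop_last or remainder == 0:
        return full_batches * batch_size
    return n_samples


train_seen = samples_yielded(5398, 32, drop_last=True)
test_seen_dropped = samples_yielded(600, 32, drop_last=True)
test_seen_full = samples_yielded(600, 32, drop_last=False)
print(train_seen, test_seen_dropped, test_seen_full)
```

With these numbers, a test loader using drop_last=True would skip 24 of the 600 test images. Any accuracy computed from such a loader should therefore be divided by the number of samples actually processed, not by the dataset length.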

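5. Model Class

The model consists of four convolutional blocks, each made up of a convolution, batch normalization, an activation, and pooling. The number of channels increases from block to block (16, 32, 64, 128), and a single fully connected layer produces the output.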

    class CNN(nn.Module):
        def __init__(self):
            super(CNN, self).__init__()
            # Block 1: 3 -> 16 channels
            self.conv1 = nn.Sequential(
                nn.Conv2d(in_channels=3, out_channels=16, kernel_size=3,
                          stride=1, padding=0),
                nn.BatchNorm2d(16),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2)
            )
            # Block 2: 16 -> 32 channels
            self.conv2 = nn.Sequential(
                nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3,
                          stride=1, padding=0),
                nn.BatchNorm2d(32),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2)
            )
            # Block 3: 32 -> 64 channels
            self.conv3 = nn.Sequential(
                nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3,
                          stride=1, padding=0),
                nn.BatchNorm2d(64),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2)
            )
            # Block 4: 64 -> 128 channels
            self.conv4 = nn.Sequential(
                nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3,
                          stride=1, padding=0),
                nn.BatchNorm2d(128),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2)
            )
            # 128 channels x 6 x 6 feature map = 4608 features for a 128x128 input
            self.fc = nn.Sequential(nn.Linear(4608, 58))

        def forward(self, x):
            out = self.conv1(x)
            out = self.conv2(out)
            out = self.conv3(out)
            out = self.conv4(out)
            out = out.view(out.size(0), -1)  # flatten to (batch, 4608)
            out = self.fc(out)
            # Log-probabilities, to be paired with F.nll_loss
            return F.log_softmax(out, dim=1)
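The input size of the fully connected layer, 4608, follows from the 128x128 input and the four convolution and pooling stages. The sketch below retraces that arithmetic in plain Python, using the usual output-size formula for unpadded, undilated convolution and pooling; the helper names conv_out and pool_out are illustrative.

```python
# Trace the spatial size of a 128x128 input through four blocks of
# Conv2d(kernel_size=3, stride=1, padding=0) followed by MaxPool2d(2, 2).
def conv_out(size, kernel=3, stride=1, padding=0):
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    return (size - kernel) // stride + 1


size = 128
sizes = []
for _ in range(4):
    size = pool_out(conv_out(size))
    sizes.append(size)

flat_features = 128 * size * size  # 128 output channels in the last block
print(sizes, flat_features)
```

The side length shrinks to 63, 30, 14 and finally 6, so the flattened feature vector has 128 x 6 x 6 = 4608 entries. Changing the Resize target or the padding would require updating the Linear layer accordingly.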

6. Parameters

Define the basic training parameters: number of epochs, mini-batch size, learning rate, optimizer, and so on.

    n_epochs = 10
    batch_size_train = 32  # matches the batch_size passed to train_loader
    batch_size_test = 32   # matches the batch_size passed to test_loader
    learning_rate = 0.001
    momentum = 0.5
    log_interval = 10      # log the training loss every 10 batches
    random_seed = 42
    torch.manual_seed(random_seed)

    network = CNN()
    optimizer = optim.RMSprop(network.parameters(), lr=learning_rate, alpha=0.99, eps=1e-08,
                              weight_decay=0.0, momentum=momentum, centered=False)

    # Histories used for plotting
    train_losses = []
    train_counter = []
    test_losses = []
    test_counter = [i * len(train_loader.dataset) for i in range(n_epochs + 1)]
    train_accuracy = []
    test_accuracy = []
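To make the roles of lr, alpha, eps and momentum concrete, here is a scalar sketch of one RMSprop update. It follows the update rule as described in the PyTorch documentation for the non-centered case with zero weight decay. The function rmsprop_step is a hypothetical illustration, not the library's implementation.

```python
import math


# One RMSprop step on a single scalar parameter (non-centered, no weight decay).
def rmsprop_step(param, grad, square_avg, buf,
                 lr=0.001, alpha=0.99, eps=1e-8, momentum=0.5):
    # Running average of squared gradients
    square_avg = alpha * square_avg + (1 - alpha) * grad ** 2
    # Momentum buffer accumulates the gradient scaled by its running RMS
    buf = momentum * buf + grad / (math.sqrt(square_avg) + eps)
    # Parameter moves against the buffered direction
    param = param - lr * buf
    return param, square_avg, buf


param, square_avg, buf = rmsprop_step(param=1.0, grad=1.0, square_avg=0.0, buf=0.0)
print(param, square_avg, buf)
```

Starting from empty state, a gradient of 1.0 is divided by roughly 0.1, so the first step moves the parameter by about lr x 10 = 0.01.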

7. Training Function

Trains the model for one epoch.

    def train(epoch):
        network.train()
        tr_correct = 0
        tr_total = 0
        epoch_start = len(train_losses)  # index of this epoch's first logged loss
        for batch_idx, (data, target) in enumerate(train_loader):
            optimizer.zero_grad()
            out = network(data)
            loss = F.nll_loss(out, target)
            loss.backward()
            optimizer.step()
            # Predicted class = index of the largest log-probability
            tr_pred = out.argmax(dim=1)
            tr_correct += tr_pred.eq(target).sum().item()
            tr_total += len(data)
            # Log once every log_interval batches
            if batch_idx % log_interval == log_interval - 1:
                print('\rTrain Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),
                    100. * (batch_idx + 1) / len(train_loader), loss.item()), end='')
                train_losses.append(loss.item())
                train_counter.append(
                    (batch_idx + 1) * len(data) + (epoch - 1) * len(train_loader.dataset))
        # Divide by the samples actually seen: drop_last=True skips the final partial batch
        tr_acc = 100. * tr_correct / tr_total
        train_accuracy.append(tr_acc)
        # Average only the losses logged during this epoch
        print(', Avg.loss: {:.6f}, Accuracy: {:.2f}%'.format(
            torch.mean(torch.Tensor(train_losses[epoch_start:])), tr_acc))
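The model returns log-probabilities from log_softmax and the training loop feeds them to nll_loss, which together amount to the cross-entropy loss. The plain-Python sketch below reproduces that pairing for one sample. The functions are simplified stand-ins for the PyTorch ones, and the logits are made-up values.

```python
import math


def log_softmax(logits):
    # Subtract the maximum first for numerical stability
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_sum for x in logits]


def nll_loss(log_probs, target):
    # Negative log-probability assigned to the true class
    return -log_probs[target]


logits = [2.0, 1.0, 0.1]  # hypothetical raw scores for three classes
log_probs = log_softmax(logits)
loss = nll_loss(log_probs, target=0)
print(round(loss, 4))
```

For these scores the loss is about 0.417, the same value as -log of the softmax probability of class 0.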

8. Test Function

Evaluates the model on the test set.

    def test():
        network.eval()
        test_loss = 0
        correct = 0
        total = 0
        with torch.no_grad():
            for data, target in test_loader:
                output = network(data)
                # Sum per-sample losses so the average below is taken over all samples
                test_loss += F.nll_loss(output, target, reduction='sum').item()
                pred = output.argmax(dim=1)
                correct += pred.eq(target).sum().item()
                total += len(data)
        # Average over the samples actually evaluated
        test_loss /= total
        test_losses.append(test_loss)
        test_accuracy.append(100. * correct / total)
        # Save a checkpoint whenever the latest accuracy is the best so far
        if test_accuracy[-1] >= max(test_accuracy):
            torch.save(network.state_dict(), './model.pth')
            torch.save(optimizer.state_dict(), './optimizer.pth')
        print('\nTest set: Avg. loss: {:.6f}, Accuracy: {}/{} ({:.2f}%)\n'.format(
            test_loss, correct, total, test_accuracy[-1]))
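The test function sums the per-sample losses with reduction='sum' and divides once at the end, rather than averaging the per-batch means. The sketch below, using made-up loss values, shows why this matters when the last batch is smaller than the others.

```python
# Hypothetical per-sample losses for two batches; the last batch is smaller.
batches = [[0.2, 0.4, 0.6], [2.0]]

n_samples = sum(len(b) for b in batches)

# Sum over all samples, then divide once (what reduction='sum' enables)
per_sample_mean = sum(sum(b) for b in batches) / n_samples

# Averaging the batch means instead over-weights the small final batch
mean_of_batch_means = sum(sum(b) / len(b) for b in batches) / len(batches)

print(per_sample_mean, mean_of_batch_means)
```

Here the per-sample mean is about 0.8, while the mean of batch means is about 1.2, because the single sample in the last batch counts as much as the three samples before it.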

9. Training and Plotting

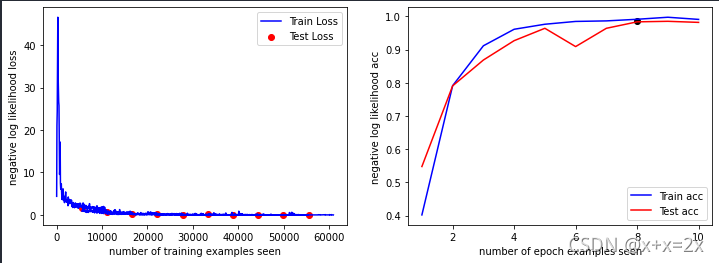

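The model converges quickly: after ten epochs of training it has essentially converged, and the results are good, with a best test accuracy of 98.54%. For real-world use the dataset could be enlarged as appropriate; this article is only a test.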

    for epoch in range(1, n_epochs + 1):
        train(epoch)
        test()
    print('\n\t\tTest Max_Accuracy: ({:.2f}%)\n'.format(float(max(test_accuracy))))

    fig = plt.figure(figsize=(12, 4))
    # Left panel: loss curves
    plt.subplot(1, 2, 1)
    plt.plot(train_counter, train_losses, color='blue')
    plt.scatter(test_counter[1:], test_losses, color='red')
    plt.legend(['Train Loss', 'Test Loss'], loc='upper right')
    plt.xlabel('number of training examples seen')
    plt.ylabel('negative log likelihood loss')
    # Right panel: accuracy per epoch
    plt.subplot(1, 2, 2)
    epochs = list(range(1, n_epochs + 1))
    plt.plot(epochs, [float(tr) / 100 for tr in train_accuracy], color='blue')
    plt.plot(epochs, [float(te) / 100 for te in test_accuracy], color='red')
    # Mark the best test accuracy; index() is 0-based while epochs start at 1
    best_acc = max(test_accuracy)
    x_te_epoch, y_te_acc = test_accuracy.index(best_acc) + 1, float(best_acc) / 100.0
    plt.scatter(x_te_epoch, y_te_acc, color='black')
    plt.legend(['Train acc', 'Test acc'], loc='lower right')
    plt.xlabel('epoch')
    plt.ylabel('accuracy')
    plt.show()