2021.11.27

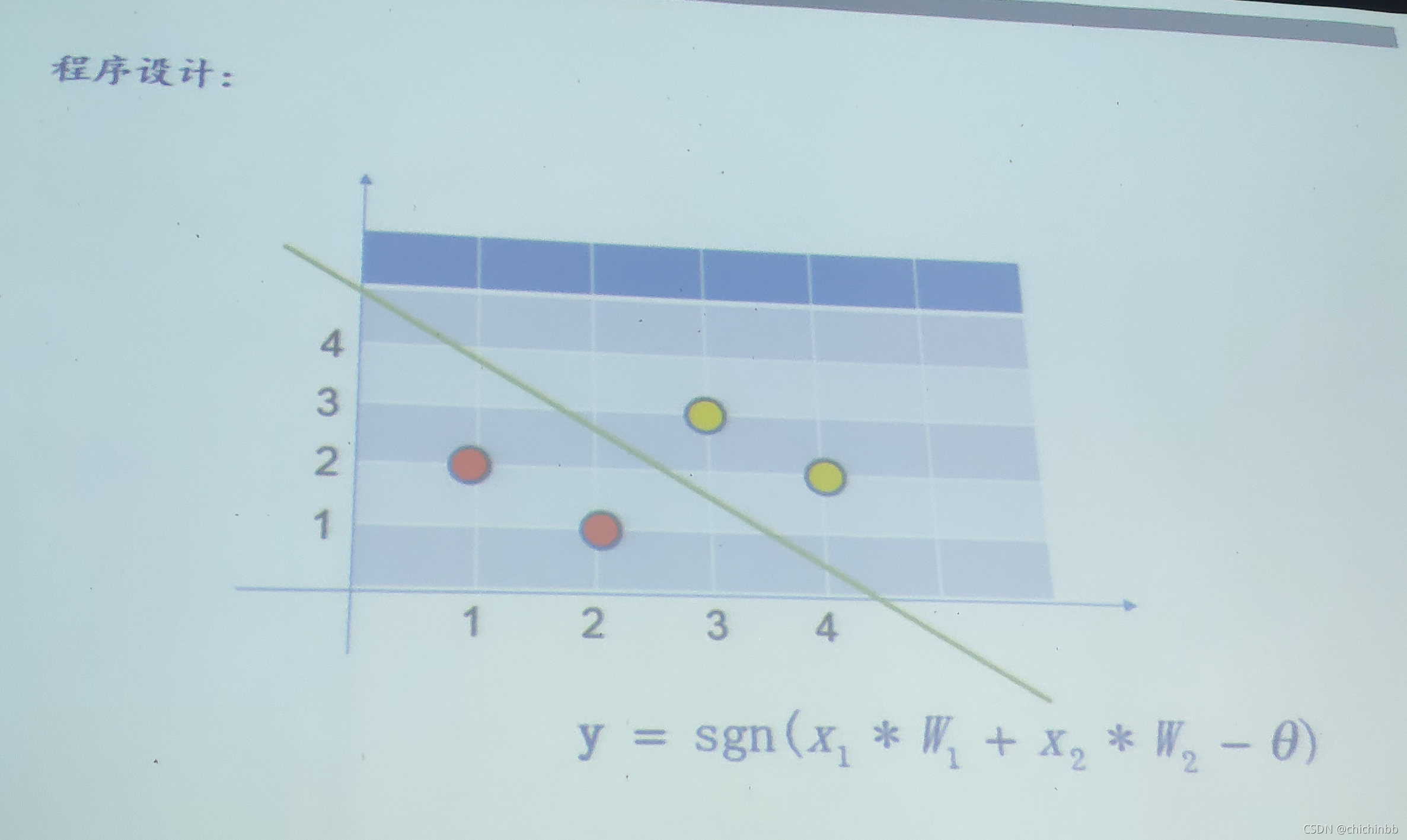

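Today I finally fixed a piece of code that had been bothering me for two days. For a course assignment, our teacher asked us to solve a simple classification problem with a neural network, as shown in the figure.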

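So I wrote the program below, following a tutorial I found online: 用Python从头实现一个神经网络 - 知乎 ("Implementing a neural network from scratch in Python", on Zhihu).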

The program is as follows:

```python
import numpy as np
import matplotlib.pyplot as plt


def sigmoid(x):
    # Our activation function: f(x) = 1 / (1 + e^(-x))
    return 1 / (1 + np.exp(-x))


def diff(x):
    # Derivative of the activation function: f'(x) = f(x) * (1 - f(x))
    a = sigmoid(x)
    return a * (1 - a)


class Neuron:
    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

    def feedforward(self, inputs):
        # Weight inputs, add bias, then use the activation function
        total = np.dot(self.weights, inputs) + self.bias
        return sigmoid(total)


class NeuralNetwork_2_2_1:
    '''
    A neural network with:
      - 2 inputs
      - a hidden layer with 2 neurons (h1, h2)
      - an output layer with 1 neuron (o1)
    Weights and biases are drawn from a standard normal distribution.
    '''

    def __init__(self):
        # Neuron is the class defined above
        weights0 = np.random.normal(size=2)
        bias0 = np.random.normal()
        self.h1 = Neuron(weights0, bias0)
        self.h2 = Neuron(weights0, bias0)
        self.o1 = Neuron(weights0, bias0)

    def feedforward(self, x):
        out_h1 = self.h1.feedforward(x)
        out_h2 = self.h2.feedforward(x)
        # The inputs for o1 are the outputs from h1 and h2
        out_o1 = self.o1.feedforward(np.array([out_h1, out_h2]))
        return out_o1

    def train(self, learn_rate, times, x_train, y_train):
        n = times / 100          # record the loss 100 times in total
        loss = np.zeros(100)
        for time in range(times):
            for x, y in zip(x_train, y_train):
                # Current parameters
                w1 = self.h1.weights[0]
                w2 = self.h1.weights[1]
                b1 = self.h1.bias
                w3 = self.h2.weights[0]
                w4 = self.h2.weights[1]
                b2 = self.h2.bias
                w5 = self.o1.weights[0]
                w6 = self.o1.weights[1]
                b3 = self.o1.bias

                # Forward pass, keeping the weighted sums for the derivatives
                h1 = self.h1.feedforward(x)
                h2 = self.h2.feedforward(x)
                x1 = x[0]
                x2 = x[1]
                s_h1 = w1 * x1 + w2 * x2 + b1
                s_h2 = w3 * x1 + w4 * x2 + b2
                s_o1 = w5 * h1 + w6 * h2 + b3
                y_obs = self.feedforward(x)

                # Backward pass: j = dL/dy_obs for L = (y - y_obs)^2
                j = -2 * (y - y_obs)
                d_j_d_w5 = j * h1 * diff(s_o1)
                d_j_d_w6 = j * h2 * diff(s_o1)
                d_j_d_b3 = j * diff(s_o1)
                d_j_d_h1 = j * w5 * diff(s_o1)
                d_j_d_h2 = j * w6 * diff(s_o1)
                d_h2_d_w3 = x1 * diff(s_h2)
                d_h2_d_w4 = x2 * diff(s_h2)
                d_h2_d_b2 = diff(s_h2)
                d_h1_d_w1 = x1 * diff(s_h1)
                d_h1_d_w2 = x2 * diff(s_h1)
                d_h1_d_b1 = diff(s_h1)

                # Gradient-descent updates
                self.h1.weights[0] -= learn_rate * d_j_d_h1 * d_h1_d_w1
                self.h1.weights[1] -= learn_rate * d_j_d_h1 * d_h1_d_w2
                self.h1.bias -= learn_rate * d_j_d_h1 * d_h1_d_b1
                self.h2.weights[0] -= learn_rate * d_j_d_h2 * d_h2_d_w3
                self.h2.weights[1] -= learn_rate * d_j_d_h2 * d_h2_d_w4
                self.h2.bias -= learn_rate * d_j_d_h2 * d_h2_d_b2
                self.o1.weights[0] -= learn_rate * d_j_d_w5
                self.o1.weights[1] -= learn_rate * d_j_d_w6
                self.o1.bias -= learn_rate * d_j_d_b3

            # Every n iterations, record the total loss over the training set
            if time % n == 0:
                i = int(time / n)
                a = 0
                for x, y in zip(x_train, y_train):
                    y_obs = self.feedforward(x)
                    j = y_obs - y
                    J = pow(j, 2) / 2
                    a += J
                loss[i] = a
                print('Iteration %d, loss %.3f' % (time, a))

        plt.plot(range(100), loss)
        plt.show()


# Define dataset
data = np.array([
    [0, 0], [0, 4], [4, 0], [1, 3], [2, 2], [3, 1], [1, 1], [0, 2],
    [0, 5], [1, 4], [2, 3], [3, 2], [4, 1], [5, 0], [3, 4], [2, 4],
])
all_y_trues = np.array([1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0])

network = NeuralNetwork_2_2_1()
network.train(0.1, 10000, data, all_y_trues)

# Predict four new points
x0 = np.array([[1, 2], [2, 1], [3, 3], [4, 2]])
y0 = np.array([])
for i in x0:
    j = network.feedforward(i)
    y0 = np.append(y0, j)
y0 = np.round(y0)  # expected: [1, 1, 0, 0]
```

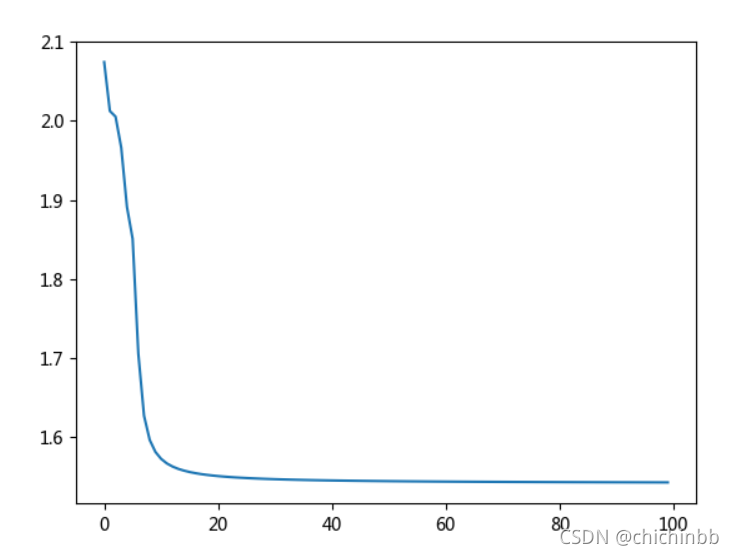

`print(y0)` printed [0, 0, 1, 0] instead of the expected [1, 1, 0, 0]. The loss curve is shown in the figure above.
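For reference, the gradient variables in `train` follow from the chain rule. This is my reading of it, assuming the per-sample loss is the squared error $L = (y - \hat{y})^2$ (that is what `j = -2 * (y - y_obs)` is the derivative of), with $\hat{y}$ = `y_obs`, $\sigma$ = `sigmoid`, $\sigma'$ = `diff`, and $\eta$ = `learn_rate`:

$$
\begin{aligned}
s_{h_1} &= w_1 x_1 + w_2 x_2 + b_1, \quad h_1 = \sigma(s_{h_1}) \\
s_{h_2} &= w_3 x_1 + w_4 x_2 + b_2, \quad h_2 = \sigma(s_{h_2}) \\
s_{o_1} &= w_5 h_1 + w_6 h_2 + b_3, \quad \hat{y} = \sigma(s_{o_1}) \\
\frac{\partial L}{\partial \hat{y}} &= -2\,(y - \hat{y}), \qquad \sigma'(s) = \sigma(s)\,\bigl(1 - \sigma(s)\bigr) \\
\frac{\partial L}{\partial w_5} &= \frac{\partial L}{\partial \hat{y}}\, h_1\, \sigma'(s_{o_1}), \quad
\frac{\partial L}{\partial w_6} = \frac{\partial L}{\partial \hat{y}}\, h_2\, \sigma'(s_{o_1}), \quad
\frac{\partial L}{\partial b_3} = \frac{\partial L}{\partial \hat{y}}\, \sigma'(s_{o_1}) \\
\frac{\partial L}{\partial h_1} &= \frac{\partial L}{\partial \hat{y}}\, w_5\, \sigma'(s_{o_1}), \quad
\frac{\partial L}{\partial h_2} = \frac{\partial L}{\partial \hat{y}}\, w_6\, \sigma'(s_{o_1}) \\
\frac{\partial L}{\partial w_1} &= \frac{\partial L}{\partial h_1}\, x_1\, \sigma'(s_{h_1}), \quad
\frac{\partial L}{\partial w_2} = \frac{\partial L}{\partial h_1}\, x_2\, \sigma'(s_{h_1}), \quad
\frac{\partial L}{\partial b_1} = \frac{\partial L}{\partial h_1}\, \sigma'(s_{h_1}) \\
\frac{\partial L}{\partial w_3} &= \frac{\partial L}{\partial h_2}\, x_1\, \sigma'(s_{h_2}), \quad
\frac{\partial L}{\partial w_4} = \frac{\partial L}{\partial h_2}\, x_2\, \sigma'(s_{h_2}), \quad
\frac{\partial L}{\partial b_2} = \frac{\partial L}{\partial h_2}\, \sigma'(s_{h_2}) \\
w &\leftarrow w - \eta\, \frac{\partial L}{\partial w} \quad \text{for every weight and bias}
\end{aligned}
$$

These formulas treat $w_1, \dots, w_6$ as six independent parameters. The loss printed every 100 iterations is a rescaled quantity, $\sum \tfrac{1}{2}(\hat{y} - y)^2$ over the training set, which does not move the minimum.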

After comparing my code with the program in the Zhihu column, I found the problem. The problematic code is:

```python
class NeuralNetwork_2_2_1:
    '''
    A neural network with:
      - 2 inputs
      - a hidden layer with 2 neurons (h1, h2)
      - an output layer with 1 neuron (o1)
    Weights and biases are drawn from a standard normal distribution.
    '''

    def __init__(self):
        # Neuron is the class defined above
        weights0 = np.random.normal(size=2)
        bias0 = np.random.normal()
        self.h1 = Neuron(weights0, bias0)
        self.h2 = Neuron(weights0, bias0)
        self.o1 = Neuron(weights0, bias0)
```

In this `__init__` method (the initializer that runs when an instance is created), my intention was to initialize the parameters of neurons h1, h2 and o1 as they were created. While debugging, however, I found that after backpropagation had run, the weights and biases of h1, h2 and o1 had all changed together, so I suspected the problem was here. I changed it to:

```python
class NeuralNetwork_2_2_1:
    '''
    A neural network with:
      - 2 inputs
      - a hidden layer with 2 neurons (h1, h2)
      - an output layer with 1 neuron (o1)
    Weights and biases are drawn from a standard normal distribution.
    '''

    def __init__(self):
        # Neuron is the class defined above.
        # Each neuron now gets its own, independently drawn weights and bias.
        self.h1 = Neuron(np.random.normal(size=2), np.random.normal())
        self.h2 = Neuron(np.random.normal(size=2), np.random.normal())
        self.o1 = Neuron(np.random.normal(size=2), np.random.normal())

    # feedforward() and train() are unchanged
```

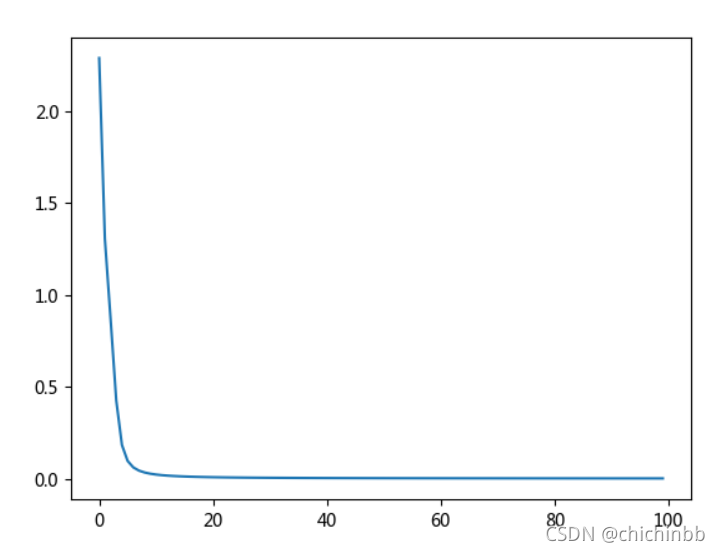

The result is now [1, 1, 0, 0], which is correct. The loss curve is shown in the figure above.

Why does this happen?

While debugging, I looked closely at this part of `train`:

```python
self.h1.weights[0] -= learn_rate * d_j_d_h1 * d_h1_d_w1
self.h1.weights[1] -= learn_rate * d_j_d_h1 * d_h1_d_w2
self.h1.bias -= learn_rate * d_j_d_h1 * d_h1_d_b1
self.h2.weights[0] -= learn_rate * d_j_d_h2 * d_h2_d_w3
self.h2.weights[1] -= learn_rate * d_j_d_h2 * d_h2_d_w4
self.h2.bias -= learn_rate * d_j_d_h2 * d_h2_d_b2
self.o1.weights[0] -= learn_rate * d_j_d_w5
self.o1.weights[1] -= learn_rate * d_j_d_w6
self.o1.bias -= learn_rate * d_j_d_b3
```

When these updates ran, I saw that once the weights of h2 and o1 had been updated, the weights of h1 changed to the same values.
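The symptom can be reproduced in isolation. The sketch below assumes a stripped-down copy of the `Neuron` constructor from the post, fixed starting values instead of random ones, and an arbitrary step of 0.5 standing in for `learn_rate * gradient`:

```python
import numpy as np

class Neuron:
    # Same constructor as in the post: it stores the array it is given, without copying
    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

# Buggy construction: all three neurons receive the same array object
weights0 = np.array([0.1, 0.2])
bias0 = 0.3
h1 = Neuron(weights0, bias0)
h2 = Neuron(weights0, bias0)
o1 = Neuron(weights0, bias0)

print(h1.weights is o1.weights)   # True: one array, three references

# An in-place update made through o1 (0.5 is a made-up step size)
o1.weights[0] -= 0.5
print(h1.weights, h2.weights)     # both show the change made through o1
```

Because there is only one array, the three neurons never have separate weights, so the gradient steps computed for h1, h2 and o1 all land on the same two numbers.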

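This is most likely because h1, h2 and o1 all received the same NumPy array `weights0`: `Neuron.__init__` stores a reference to that array rather than a copy, so all three neurons' `weights` point to the same memory, and an in-place update such as `self.o1.weights[0] -= ...` changes every neuron's weights. The biases behave differently: `bias0` is a float, which is immutable, so `self.h1.bias -= ...` binds a new float to that one neuron, and the biases stop being shared after their first update.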
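Whether the biases are shared as well can be checked with a small experiment. `-=` on a NumPy array element writes into the existing array, while `-=` on a float attribute assigns a new float object to that attribute only. A minimal sketch, again assuming a stripped-down `Neuron` class, fixed values, and a made-up step of 0.5:

```python
import numpy as np

class Neuron:
    # Stripped-down version of the class in the post: stores its arguments as-is
    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

shared_w = np.array([1.0, 2.0])
shared_b = 0.25          # a scalar, like the value returned by np.random.normal()
h1 = Neuron(shared_w, shared_b)
o1 = Neuron(shared_w, shared_b)

o1.weights[0] -= 0.5     # item assignment: writes into the shared array
o1.bias -= 0.5           # rebinds o1.bias to a new float; h1.bias is untouched

print(h1.weights[0])     # 0.5: the change made through o1 is visible in h1
print(h1.bias)           # 0.25: h1 keeps its original bias
print(o1.bias)           # -0.25
```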

After the change, each neuron's weights and bias have their own storage, so updating one neuron no longer affects the others.
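A second safeguard, which is my own suggestion and not part of the original tutorial, is to have `Neuron` copy whatever array it receives, so sharing cannot happen even if the same array is passed to several neurons. `np.shares_memory` can confirm that the arrays are independent. A minimal sketch (copying keeps the storage separate, but passing identical values still gives h1 and h2 identical starting parameters, so drawing separate random values as in the fix above remains worthwhile):

```python
import numpy as np

class Neuron:
    def __init__(self, weights, bias):
        # Copy the weights so every neuron owns its own array
        self.weights = np.array(weights, dtype=float, copy=True)
        self.bias = float(bias)

    def feedforward(self, inputs):
        # Weighted sum plus bias, passed through the sigmoid
        total = np.dot(self.weights, inputs) + self.bias
        return 1 / (1 + np.exp(-total))

# Even the "buggy" construction is now safe
weights0 = np.random.normal(size=2)
bias0 = np.random.normal()
h1 = Neuron(weights0, bias0)
h2 = Neuron(weights0, bias0)
o1 = Neuron(weights0, bias0)

print(np.shares_memory(h1.weights, o1.weights))   # False
before = h1.weights.copy()
o1.weights[0] -= 0.5
print(np.array_equal(h1.weights, before))         # True: h1 is unaffected
```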

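That said, I have not verified that this is really the cause, so I am leaving this post here. If anyone more experienced is willing to take a look, I would be very grateful.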

I don't have a computer science background, so my code may not be very readable. Please bear with me!