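This project consists of four .py files; run them in order to produce the word cloud. The result image is at the end. It is based on, and improves upon, the video 【Python爬虫+本科毕业论文速成】豆瓣评论-我是余欢水-【数据抓取-情感分析-评分统计-词云制作】 by the Bilibili uploader “龙王山小青椒”.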

douban_book_comment.py

# coding:utf-8
# time: 2021-11-27
import re
import time

import pandas as pd
import requests
from bs4 import BeautifulSoup

# Request headers: replace 'header' with your own browser's User-Agent string
header = {
    'Content-Type': 'text/html; charset=utf-8',
    'User-Agent': 'header',
}

# Login cookies: replace the placeholder with your own cookie name and value.
# requests expects a dict (name -> value) here, not a set.
Cookie = {
    'cookie': 'cookie',
}

# Build the request URL; the page offset is inserted between the two parts
url_1 = "https://book.douban.com/subject/6709783/comments/?start="
url_2 = "&status=P&sort=new_score"

# Crawl page by page; the start offset takes the values 0, 20, 40, ...
i = 0
while True:
    # Assemble the URL of the current page
    url = url_1 + str(i * 20) + url_2
    print(url)
    try:
        # Send the request
        html = requests.get(url, headers=header, cookies=Cookie)
        # Parse the page with BeautifulSoup
        soup = BeautifulSoup(html.content, 'lxml')
        html.close()
        # Comment timestamps
        comment_time_list = soup.find_all('span', attrs={'class': 'comment-time'})
        # Stop as soon as a page contains no comments
        if len(comment_time_list) == 0:
            break
        # Commenter user names
        use_name_list = soup.find_all('span', attrs={'class': 'comment-info'})
        # Comment text
        comment_list = soup.find_all('span', attrs={'class': 'short'})
        # Ratings
        rating_list = soup.find_all('span', attrs={'class': re.compile(r"allstar(\s\w+)?")})
        for jj in range(len(comment_time_list)):
            data1 = [(comment_time_list[jj].string,
                      use_name_list[jj].a.string,
                      comment_list[jj].string,
                      rating_list[jj].get('class')[1],
                      rating_list[jj].get('title'))]
            data2 = pd.DataFrame(data1)
            # Append one row per comment; no header row is written
            data2.to_csv('douban_book.csv', header=False, index=False, mode='a+', encoding="utf-8-sig")
        print('page ' + str(i + 1) + ' has done')
    except Exception as e:
        # A page whose lists do not line up (e.g. a comment without a rating) also ends up here
        print("something is wrong:", e)
    i = i + 1
    time.sleep(2)
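
The crawler above builds one URL per page by stepping the `start` offset in units of 20. As a minimal sketch of just that step, the generator below produces the same URLs for a fixed number of pages, which can help when testing against only a few pages. The names `page_urls`, `BASE_URL`, `SUFFIX`, and `PAGE_SIZE` are illustrative and not part of the original script.

```python
# Illustrative sketch: names below are hypothetical, the URL parts come from the script above.
BASE_URL = "https://book.douban.com/subject/6709783/comments/?start="
SUFFIX = "&status=P&sort=new_score"
PAGE_SIZE = 20  # step between offsets, as implied by 0, 20, 40, ...


def page_urls(max_pages):
    """Yield the comment-page URLs for pages 0 .. max_pages - 1."""
    for page in range(max_pages):
        yield f"{BASE_URL}{page * PAGE_SIZE}{SUFFIX}"
```

For example, `for url in page_urls(3): print(url)` prints the URLs with offsets 0, 20, and 40.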

douban_score_analysis.py

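# coding:utf-8
# time: 2021-11-27
import pandas as pd
from collections import Counter

# Read the CSV file. This script expects a header row naming the 'recommend'
# (user rating) and 'score' (sentiment value) columns. The crawler writes no
# header row, so these columns must be in place before this script is run.
df = pd.read_csv('douban_book.csv')

# Count how many comments gave each rating
recommend_list = df['recommend'].values.tolist()
num_count = Counter(recommend_list)
# Show the number of comments for each rating value
print(num_count)

# Group by rating and list the distinct rating values
grouped = df.groupby('recommend').describe().reset_index()
recommend = grouped['recommend'].values.tolist()
print(recommend)

# Compute the mean sentiment value within each rating group
sentiment_average = df.groupby('recommend')['score'].mean()
sentiment_scores = sentiment_average.values
print(sentiment_scores)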
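
To make the grouping step concrete, here is a rough plain-Python sketch of what the per-rating count and the per-rating mean compute. It is not the script's pandas code; `mean_score_by_rating` is a hypothetical helper that takes `(recommend, score)` pairs.

```python
from collections import Counter, defaultdict


def mean_score_by_rating(rows):
    """Return ({rating: count}, {rating: mean score}) for (recommend, score) pairs."""
    totals = defaultdict(float)
    counts = Counter()
    for recommend, score in rows:
        totals[recommend] += score
        counts[recommend] += 1
    # Sort the ratings so the output order is stable
    means = {r: totals[r] / counts[r] for r in sorted(counts)}
    return dict(counts), means
```

Calling it on `[(5, 1.0), (5, 0.5), (1, 0.25)]` groups the two five-star rows together and averages their scores, while the single one-star row forms its own group.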

douban_sentiment_analysis.py

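# coding:utf-8
# time: 2021-11-27
import pandas as pd
from snownlp import SnowNLP

# Read the crawled CSV file; the comment text is in the third column (index 2)
df = pd.read_csv('douban_book.csv', header=None, usecols=[2])
# Convert the DataFrame to a list of single-element rows
contents = df.values.tolist()
# Number of comments
print(len(contents))

# List that collects the sentiment score of each comment
score = []
for content in contents:
    # print(content)
    try:
        s = SnowNLP(content[0])
        score.append(s.sentiments)
    except Exception:
        # Fall back to a neutral 0.5 when a comment cannot be scored
        print("something is wrong")
        score.append(0.5)

# The number of scores should equal the number of comments
print(len(score))

# Save the scores; mode='a+' appends, so delete sentiment.csv before re-running
data2 = pd.DataFrame(score)
data2.to_csv('sentiment.csv', header=False, index=False, mode='a+')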
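
The other scripts read `douban_book.csv` by column name (`comment`, `recommend`, `score`), while the crawler and this script write headerless files. The post does not show how the two are combined, so the following is only one possible sketch using the standard `csv` module. It assumes the rating class has the form `allstarN0` (for example `allstar50`) and maps it to the digit `N`. The column names `time`, `user`, and `rating_title`, the helper names, and the output file name `douban_book_merged.csv` are hypothetical.

```python
import csv
import re

# 'comment', 'recommend' and 'score' are the names the other scripts use;
# the remaining column names are hypothetical.
HEADER = ["time", "user", "comment", "recommend", "rating_title", "score"]


def rating_from_class(css_class):
    """Map e.g. 'allstar50' to 5 (assumed format); return None otherwise."""
    m = re.fullmatch(r"allstar(\d)0", css_class)
    return int(m.group(1)) if m else None


def merge_rows(comment_rows, sentiment_rows):
    """Pair each crawler row with its sentiment score and prepend a header."""
    merged = [HEADER]
    for (t, user, comment, css_class, title), (score,) in zip(comment_rows, sentiment_rows):
        merged.append([t, user, comment, rating_from_class(css_class), title, float(score)])
    return merged


def merge_files(comments_path="douban_book.csv",
                sentiment_path="sentiment.csv",
                out_path="douban_book_merged.csv"):
    """Read both headerless files and write one CSV with a header row."""
    with open(comments_path, newline="", encoding="utf-8-sig") as f:
        comment_rows = list(csv.reader(f))
    with open(sentiment_path, newline="", encoding="utf-8") as f:
        sentiment_rows = list(csv.reader(f))
    with open(out_path, "w", newline="", encoding="utf-8-sig") as f:
        csv.writer(f).writerows(merge_rows(comment_rows, sentiment_rows))
```

With this layout, the analysis and word-cloud scripts would need to read the merged file instead, or the merged file would need to be renamed to `douban_book.csv`.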

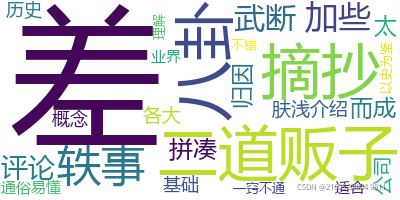

douban_wordcloud.py

# coding:utf-8
# time: 2021-11-27
import jieba
import numpy as np
import PIL.Image as Image
import pandas as pd
from wordcloud import WordCloud


def chinese_jieba(text):
    # Segment Chinese text with jieba and join the tokens with spaces
    wordlist_jieba = jieba.cut(text)
    space_wordlist = " ".join(wordlist_jieba)
    return space_wordlist


# Read the CSV file (expects a header row with 'comment' and 'recommend' columns)
df = pd.read_csv('douban_book.csv')
comment_list = df['comment'].values.tolist()
recommend_list = df['recommend'].values.tolist()

# Concatenate the segmented text of every comment whose rating equals 1
text = ""
for jj in range(len(comment_list)):
    if recommend_list[jj] == 1:
        # The trailing space keeps words of adjacent comments from running together
        text = text + chinese_jieba(comment_list[jj]) + " "
print(text)

# Optional mask: open the image with PIL and convert it to a numpy array
# mask_pic = np.array(Image.open("background.jpg"))

# Stop words: the entries of hit_stopwords.txt plus a few book-specific words
stopwords = set()
with open('hit_stopwords.txt', 'r', encoding='utf-8') as f:
    content = [line.strip() for line in f]
stopwords.update(content)
stopwords.update(['说', '本', '书', '豆瓣', '本书', '一本', '想', '写', '阅读', '建议'])

w = WordCloud(font_path="msyh.ttc",
              background_color="white",
              max_font_size=150,
              max_words=2000,
              stopwords=stopwords)
w.generate(text)
image = w.to_image()
w.to_file('ciyun1.png')
image.show()
print(stopwords)
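
To check which words are likely to dominate the cloud before rendering it, the space-joined text can be counted directly. The sketch below only illustrates the idea of stop-word filtering; it does not reproduce how `WordCloud` tokenizes or filters internally, and `word_frequencies` is a hypothetical helper.

```python
from collections import Counter


def word_frequencies(space_joined_text, stopwords):
    """Count the space-separated tokens that are not in the stop-word set."""
    words = [w for w in space_joined_text.split() if w not in stopwords]
    return Counter(words)
```

For instance, `word_frequencies(text, stopwords).most_common(20)` lists the twenty most frequent remaining tokens, which makes it easier to decide which further words to add to the stop-word set.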