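1: Introduction to Naive Bayes

Naive Bayes is a classification method based on Bayes' theorem and the assumption that features are conditionally independent given the class. Given a training set, it first learns the joint probability distribution of inputs and outputs under this independence assumption (because it learns a joint distribution, naive Bayes is a generative model). Then, for a given input x, it uses Bayes' theorem to find the output y with the largest posterior probability.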
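
In symbols, the two steps can be sketched as follows. The notation is an assumption of this sketch and does not come from the text: x = (x_1, ..., x_n) is the feature vector and c ranges over the classes. The last equality holds because P(x) does not depend on c.

$$
\begin{aligned}
P(x, c) &= P(c)\, P(x \mid c) = P(c) \prod_{j=1}^{n} P(x_j \mid c) \\
y &= \arg\max_{c} P(c \mid x) = \arg\max_{c} \frac{P(c) \prod_{j=1}^{n} P(x_j \mid c)}{P(x)} = \arg\max_{c} P(c) \prod_{j=1}^{n} P(x_j \mid c)
\end{aligned}
$$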

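1.1 Classification based on Bayesian decision theory

Naive Bayes has two main advantages: it remains effective when little training data is available, and it can handle multi-class problems. Its drawback is that it is sensitive to how the input data is prepared.

The idea can be understood through the example in the figure below. Let p1(x,y) denote the probability that the data point (x,y) belongs to class 1 (the circles in the figure), and p2(x,y) the probability that it belongs to class 2 (the triangles). We can then decide its class with the following rules:

If p1(x,y) > p2(x,y), the class is 1.

If p2(x,y) > p1(x,y), the class is 2.

In other words, we choose the class with the higher probability. This is the core idea behind the naive Bayes classifier.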

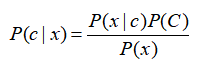

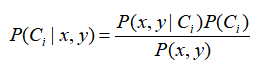

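1.2 Conditional probability

Conditional probability is the probability that event A occurs given that event B is known to have occurred. This is standard probability theory, so the formula is stated directly:

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}$$

Another useful way to compute a conditional probability is Bayes' rule, which swaps the roles of the condition and the outcome:

$$P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}$$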
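
As a small worked example of conditional probability and Bayes' rule, the sketch below uses made-up counts (seven stones in two buckets; the numbers and names are illustrative assumptions, not data from this article) and checks that the direct definition and Bayes' rule agree.

```python
from fractions import Fraction

# Hypothetical counts: bucket A holds 2 gray + 2 black stones,
# bucket B holds 1 gray + 2 black stones (7 stones in total).
counts = {
    ("A", "gray"): 2, ("A", "black"): 2,
    ("B", "gray"): 1, ("B", "black"): 2,
}
total = sum(counts.values())


def prob(bucket=None, color=None):
    """Probability of drawing a stone matching the given bucket and/or color."""
    matching = sum(
        n for (b, c), n in counts.items()
        if (bucket is None or b == bucket) and (color is None or c == color)
    )
    return Fraction(matching, total)


# Definition: P(gray | B) = P(gray and B) / P(B)
p_gray_given_b = prob(bucket="B", color="gray") / prob(bucket="B")

# Bayes' rule: P(B | gray) = P(gray | B) * P(B) / P(gray)
p_b_given_gray = p_gray_given_b * prob(bucket="B") / prob(color="gray")

# Cross-check against the direct definition of P(B | gray)
p_b_given_gray_direct = prob(bucket="B", color="gray") / prob(color="gray")

print(p_gray_given_b)   # 1/3
print(p_b_given_gray)   # 1/3
```

Exact fractions are used here so that the two routes to P(B | gray) can be compared without floating-point noise.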

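Next, we use conditional probability to classify.

The Bayesian decision rule above was stated in terms of two probabilities, p1(x,y) and p2(x,y), but that was a simplification. What we actually need to compute and compare are P(C1|x,y) and P(C2|x,y): given a data point described by x and y, the probability that it comes from class C1 and the probability that it comes from class C2. By Bayes' rule these are computed as

$$P(C_i \mid x, y) = \frac{P(x, y \mid C_i)\, P(C_i)}{P(x, y)}$$

If P(C1|x,y) > P(C2|x,y), the class is 1; if P(C2|x,y) > P(C1|x,y), the class is 2.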
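
The comparison of the two posteriors can be illustrated with a few lines of Python. The priors and likelihoods below are invented numbers for a single data point, chosen only to show the mechanics.

```python
# Hypothetical numbers for one data point (x, y); they are not from the article.
priors = {"C1": 0.6, "C2": 0.4}          # P(C_i)
likelihoods = {"C1": 0.05, "C2": 0.20}   # P(x, y | C_i)

# Evidence P(x, y), by the law of total probability
evidence = sum(likelihoods[c] * priors[c] for c in priors)

# Posterior P(C_i | x, y) from Bayes' rule
posteriors = {c: likelihoods[c] * priors[c] / evidence for c in priors}

# Decision rule: pick the class with the larger posterior
predicted = max(posteriors, key=posteriors.get)

# The evidence is the same for both classes, so comparing the
# numerators alone gives the same decision.
predicted_unnormalized = max(priors, key=lambda c: likelihoods[c] * priors[c])

print(posteriors)
print(predicted)  # C2
```

Note that class C1 has the larger prior here, yet C2 wins because its likelihood for this point is much higher.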

2: Document classification

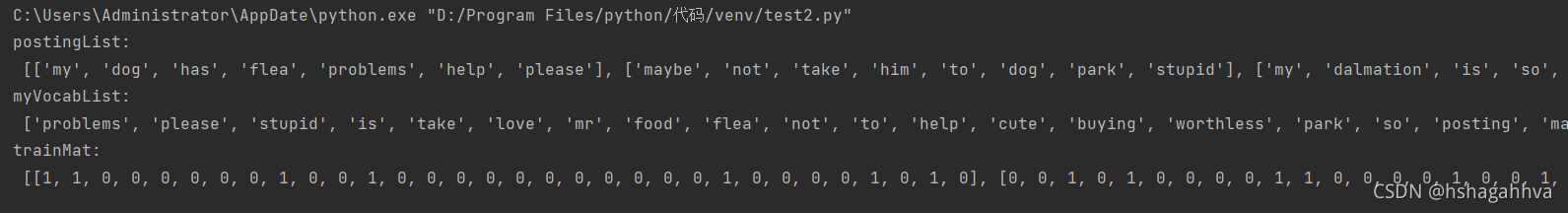

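2.1 Building word vectors from text

In document classification, the whole document (an email, for example) is the instance, and certain elements of it serve as features. We can look at the words that appear in a document and treat the presence or absence of each word as a feature, which gives as many features as there are words in the vocabulary. Naive Bayes is a common method for document classification.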

    def loadDataSet():
        """Return a toy corpus of tokenized posts and their class labels."""
        postingList = [
            ['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
            ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
            ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
            ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
            ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
            ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid'],
        ]
        classVec = [0, 1, 0, 1, 0, 1]  # 1 = abusive, 0 = not abusive
        return postingList, classVec


    def createVocabList(dataSet):
        """Return a list of the unique words across all documents."""
        vocabSet = set()
        for document in dataSet:
            vocabSet = vocabSet | set(document)  # union of the two sets
        return list(vocabSet)


    def setOfWords2Vec(vocabList, inputSet):
        """Set-of-words model: 1 if the vocabulary word occurs in inputSet, else 0."""
        returnVec = [0] * len(vocabList)
        for word in inputSet:
            if word in vocabList:
                returnVec[vocabList.index(word)] = 1
            else:
                print("this word:%s is not in my Vocabulary!" % word)
        return returnVec


    if __name__ == '__main__':
        postingList, classVec = loadDataSet()
        print("postingList:\n", postingList)
        myVocabList = createVocabList(postingList)
        print('myVocabList:\n', myVocabList)
        trainMat = []
        for postinDoc in postingList:
            trainMat.append(setOfWords2Vec(myVocabList, postinDoc))
        print('trainMat:\n', trainMat)

The output is too long for one screenshot, so only the first part is shown.

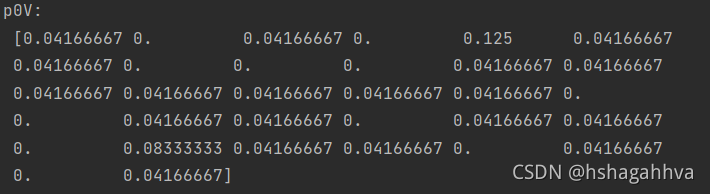
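
One detail worth noting: `vocabList.index(word)` scans the list on every call, which becomes slow for large vocabularies. A possible alternative, sketched here as a suggestion, is to build a word-to-index dictionary once. The function names are hypothetical and not part of the original code; the vocabulary is sorted only to make the output reproducible, and unlike `setOfWords2Vec` this version skips unknown words silently.

```python
def build_vocab_index(documents):
    """Map each unique word to a fixed column index (sorted for determinism)."""
    vocab = sorted({word for doc in documents for word in doc})
    return {word: i for i, word in enumerate(vocab)}


def set_of_words_vector(vocab_index, words):
    """Return a 0/1 vector marking which vocabulary words occur in `words`."""
    vec = [0] * len(vocab_index)
    for word in words:
        i = vocab_index.get(word)  # None for out-of-vocabulary words
        if i is not None:
            vec[i] = 1
    return vec


docs = [["my", "dog", "has", "fleas"], ["stupid", "dog"]]
vocab_index = build_vocab_index(docs)
print(vocab_index)
# {'dog': 0, 'fleas': 1, 'has': 2, 'my': 3, 'stupid': 4}
print(set_of_words_vector(vocab_index, ["my", "stupid", "cat"]))
# [0, 0, 0, 1, 1]
```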

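2.2 Computing probabilities from word vectors

The previous section showed how to turn a list of words into a vector of numbers. The next step is to compute probabilities from those numbers.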
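
Concretely, the training function `trainNBO` estimates the quantities below. The symbols are notation introduced for this sketch: m is the number of training documents, c^(d) and w^(d) are the label and the 0/1 word vector of document d, and w_j is the j-th vocabulary word.

$$
\begin{aligned}
P(c = 1) &= \frac{1}{m} \sum_{d=1}^{m} c^{(d)} \\
P(w_j \mid c = k) &= \frac{\sum_{d:\, c^{(d)} = k} w^{(d)}_j}{\sum_{d:\, c^{(d)} = k} \sum_{i} w^{(d)}_i}, \qquad k \in \{0, 1\}
\end{aligned}
$$

The first line corresponds to `pAbusive`, and the second to `p1Num/p1Denom` (for k = 1) and `p0Num/p0Denom` (for k = 0).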

def trainNBO(trainMatrix,trainCategory):

numTrainDocs = len(trainMatrix)

numWords = len(trainMatrix[0])

pAbusive = sum(trainCategory)/float(numTrainDocs)

p0Num = zeros(numWords); p1Num = zeros(numWords)

p0Denom = 0.0;p1Denom = 0.0

for i in range(numTrainDocs):

if trainCategory[i] ==1:

p1Num += trainMatrix[i]

p1Denom +=sum(trainMatrix[i])

else:

p0Num += trainMatrix[i]

p0Denom += sum(trainMatrix[i])

p1Vect = p1Num/p1Denom

p0Vect = p0Num/p0Denom

return p0Vect,p1Vect,pAbusive

if __name__ == '__main__':

postingList,classVec = loadDataSet()

myVocabList = createVocabList(postingList)

trainMat = []

for postingLIst in postingList:

trainMat.append(setOfWords2Vec(myVocabList,postingLIst))

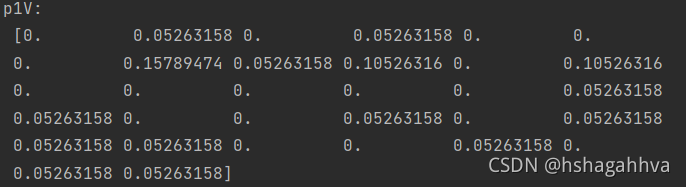

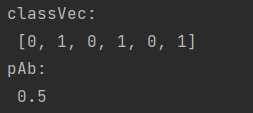

p0V,p1V,pAb = trainNBO(trainMat,classVec)

print('p0V:\n',p0V)

print('p1V:\n', p1V)

print('classVec:\n', classVec)

print('pAb:\n', pAb)

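2.3 Adapting the classifier to real-world conditions

When classifying a document with the Bayes classifier, we multiply many probabilities together to get the probability that the document belongs to a given class, that is, we compute p(w0|1)p(w1|1)p(w2|1)... If any one of these probabilities is 0, the whole product is 0. To reduce this effect, we can initialize every word count to 1 and every denominator to 2.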
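
The effect of this initialization can be seen on a tiny example. The counts below are invented for illustration and the function names are hypothetical; the initial values (1 for each count, 2 for the denominator) are the ones described above.

```python
import math

# Invented word counts for one class over a 4-word vocabulary;
# the third word was never seen in this class.
word_counts = [3, 1, 0, 2]
doc = [1, 0, 1, 1]  # words 0, 2 and 3 occur in the document


def word_probs(counts, init_count=0.0, init_denom=0.0):
    """Estimate p(w_j | class), optionally starting from non-zero counts."""
    denom = sum(counts) + init_denom
    return [(n + init_count) / denom for n in counts]


def doc_likelihood(probs, doc_vec):
    """Product of p(w_j | class) over the words present in the document."""
    return math.prod(p for p, present in zip(probs, doc_vec) if present)


raw = doc_likelihood(word_probs(word_counts), doc)
smoothed = doc_likelihood(word_probs(word_counts, 1.0, 2.0), doc)

print(raw)       # 0.0, because one factor is zero
print(smoothed)  # small but non-zero
```

With the raw counts, a single unseen word wipes out the evidence from every other word; with the adjusted counts, the unseen word only lowers the score.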

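The second issue is underflow: multiplying many very small numbers can underflow to zero or give an incorrect result. Taking the logarithm of the product solves this, because ln(a·b) = ln(a) + ln(b) turns the product into a sum of logarithms. The modified lines are as follows: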

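    # Changed lines inside trainNBO (requires: from numpy import ones, log)
    p0Num = ones(numWords)   # start every word count at 1
    p1Num = ones(numWords)
    p0Denom = 2.0            # start each denominator at 2
    p1Denom = 2.0

    p1Vect = log(p1Num / p1Denom)  # log-probabilities instead of probabilities
    p0Vect = log(p0Num / p0Denom)

With these changes in place, the classifier can be completed: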

def classifyNB(vec2Classify,p0Vec,p1Vec,pClass1):

p1 = sum(vec2Classify*p1Vec)+log(pClass1)

p0 = sum(vec2Classify*p0Vec)+log(1.0-pClass1)

if p1>p0:

return 1

else:

return 0

def testingNB():

listOPosts,listClasses = loadDataSet()

myVocabList = createVocabList(listOPosts)

trainMat = []

for posinDoc in listOPosts:

trainMat.append(setOfWords2Vec(myVocabList,posinDoc))

p0V,p1V,pAb = trainNBO(array(trainMat),array(listClasses))

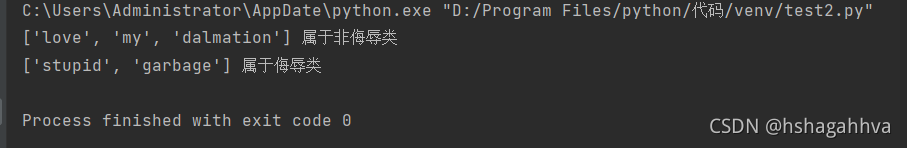

testEntry = ['love','my','dalmation']

thisDoc = array(setOfWords2Vec(myVocabList,testEntry))

if classifyNB(thisDoc,p0V,p1V,pAb):

print(testEntry,'属于侮辱类')

else:

print(testEntry,'属于非侮辱类')

testEntry = ['stupid','garbage']

thisDoc = array(setOfWords2Vec(myVocabList,testEntry))

if classifyNB(thisDoc,p0V,p1V,pAb):

print(testEntry, '属于侮辱类')

else:

print(testEntry, '属于非侮辱类')
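
The underflow problem described in this section is easy to reproduce. The sketch below uses an invented probability value and document length purely for illustration.

```python
import math

# Invented example: 500 words, each with conditional probability 0.001
probs = [1e-3] * 500

# Direct product: 1e-1500 is far below the smallest positive float (~5e-324)
product = math.prod(probs)

# Sum of logs: the same quantity on a log scale, well within float range
log_sum = sum(math.log(p) for p in probs)

print(product)  # 0.0
print(log_sum)  # about -3453.9
```

Because the logarithm is monotonic, comparing log scores picks the same class as comparing the original products would, had they been representable.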

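2.4 The bag-of-words document model

So far we have treated the presence or absence of each word as a feature, which is known as the set-of-words model. If a word appears more than once in a document, that may convey information that mere presence or absence cannot. The approach that counts every occurrence is called the bag-of-words model.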

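    def bagOfWords2VecMN(vocabList, inputSet):
        """Bag-of-words model: count how many times each vocabulary word occurs."""
        returnVec = [0] * len(vocabList)
        for word in inputSet:
            if word in vocabList:
                returnVec[vocabList.index(word)] += 1  # increment instead of setting to 1
        return returnVec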
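
To see the difference between the two models on a concrete input, here is a small sketch using `collections.Counter` from the standard library. The vocabulary and document are made up, and the helper names are hypothetical.

```python
from collections import Counter

vocab = ["dog", "garbage", "stupid"]          # made-up vocabulary
doc = ["stupid", "dog", "stupid", "stupid"]   # "stupid" occurs three times


def set_of_words(vocab, words):
    """1 if the word occurs at least once, else 0."""
    present = set(words)
    return [1 if w in present else 0 for w in vocab]


def bag_of_words(vocab, words):
    """Number of times each vocabulary word occurs."""
    counts = Counter(words)
    return [counts[w] for w in vocab]


print(set_of_words(vocab, doc))  # [1, 0, 1]
print(bag_of_words(vocab, doc))  # [1, 0, 3]
```

The set-of-words vector cannot tell this document apart from one that mentions "stupid" once; the bag-of-words vector can.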

3: Filtering spam with naive Bayes

    import random
    import re

    import numpy as np


    def createVocabList(dataSet):
        """Return a list of the unique words across all documents."""
        vocabSet = set()
        for document in dataSet:
            vocabSet = vocabSet | set(document)
        return list(vocabSet)


    def setOfWords2Vec(vocabList, inputSet):
        """Set-of-words model: 1 if the vocabulary word occurs in inputSet, else 0."""
        returnVec = [0] * len(vocabList)
        for word in inputSet:
            if word in vocabList:
                returnVec[vocabList.index(word)] = 1
            else:
                print("this word:%s is not in my Vocabulary!" % word)
        return returnVec


    def trainNBO(trainMatrix, trainCategory):
        """Estimate log p(w|c=0), log p(w|c=1) and P(c=1)."""
        numTrainDocs = len(trainMatrix)
        numWords = len(trainMatrix[0])
        pAbusive = sum(trainCategory) / float(numTrainDocs)
        # Counts start at 1 and denominators at 2 to avoid zero probabilities
        p0Num = np.ones(numWords)
        p1Num = np.ones(numWords)
        p0Denom = 2.0
        p1Denom = 2.0
        for i in range(numTrainDocs):
            if trainCategory[i] == 1:
                p1Num += trainMatrix[i]
                p1Denom += sum(trainMatrix[i])
            else:
                p0Num += trainMatrix[i]
                p0Denom += sum(trainMatrix[i])
        # Logs avoid underflow when many probabilities are combined
        p1Vect = np.log(p1Num / p1Denom)
        p0Vect = np.log(p0Num / p0Denom)
        return p0Vect, p1Vect, pAbusive


    def classifyNB(vec2Classify, p0Vec, p1Vec, pClass1):
        """Return 1 if the log-posterior score of class 1 is larger, else 0."""
        p1 = sum(vec2Classify * p1Vec) + np.log(pClass1)
        p0 = sum(vec2Classify * p0Vec) + np.log(1.0 - pClass1)
        if p1 > p0:
            return 1
        else:
            return 0


    def textParse(bigString):
        """Split on non-word characters; keep lowercase tokens longer than 2 characters."""
        listOfTokens = re.split(r'\W+', bigString)
        return [tok.lower() for tok in listOfTokens if len(tok) > 2]


    def spamTest():
        docList = []
        classList = []
        fullText = []
        # Load 25 spam and 25 ham emails; 1 = spam, 0 = ham
        for i in range(1, 26):
            with open('email/spam/%d.txt' % i, 'r') as f:
                wordList = textParse(f.read())
            docList.append(wordList)
            fullText.append(wordList)
            classList.append(1)
            with open('email/ham/%d.txt' % i, 'r') as f:
                wordList = textParse(f.read())
            docList.append(wordList)
            fullText.append(wordList)
            classList.append(0)
        vocabList = createVocabList(docList)
        # Hold out 10 of the 50 emails at random as the test set
        trainingSet = list(range(50))
        testSet = []
        for i in range(10):
            randIndex = int(random.uniform(0, len(trainingSet)))
            testSet.append(trainingSet[randIndex])
            del trainingSet[randIndex]
        trainMat = []
        trainClasses = []
        for docIndex in trainingSet:
            trainMat.append(setOfWords2Vec(vocabList, docList[docIndex]))
            trainClasses.append(classList[docIndex])
        p0V, p1V, pSpam = trainNBO(np.array(trainMat), np.array(trainClasses))
        errorCount = 0
        for docIndex in testSet:
            wordVector = setOfWords2Vec(vocabList, docList[docIndex])
            if classifyNB(np.array(wordVector), p0V, p1V, pSpam) != classList[docIndex]:
                errorCount += 1
                print("misclassified test document:", docList[docIndex])
        print('the error rate is: %.1f%%' % (float(errorCount) / len(testSet) * 100))


    if __name__ == '__main__':
        spamTest()
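
Because the ten test emails are chosen at random, the reported error rate changes from run to run. One way to get a steadier estimate, sketched here as a suggestion rather than as part of the original code, is to repeat the random split several times and average. The `evaluate` callback is a hypothetical stand-in for the train-and-test steps in `spamTest`, and `dummy_evaluate` exists only so the sketch runs without the email files.

```python
import random


def holdout_split(n_items, n_test, rng):
    """Randomly split range(n_items) into (train, test) index lists."""
    test = rng.sample(range(n_items), n_test)  # distinct indices
    held_out = set(test)
    train = [i for i in range(n_items) if i not in held_out]
    return train, test


def average_error(evaluate, n_items=50, n_test=10, n_trials=10, seed=0):
    """Average the error rate returned by evaluate(train, test) over several splits."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    rates = [evaluate(*holdout_split(n_items, n_test, rng))
             for _ in range(n_trials)]
    return sum(rates) / n_trials


def dummy_evaluate(train, test):
    """Hypothetical evaluator: pretends every even-numbered test email is misclassified."""
    return sum(1 for i in test if i % 2 == 0) / len(test)


train, test = holdout_split(50, 10, random.Random(0))
print(len(train), len(test))  # 40 10
print(average_error(dummy_evaluate))
```

In a real run, `evaluate` would train on the `train` indices and return the fraction of `test` emails that were misclassified.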