Class Imbalance

Imbalance problem

Some collected references

Discussion of imbalanced samples:

https://blog.csdn.net/sp_programmer/article/details/48047101

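Oversampling, undersampling, cost-sensitive learning

Cost-sensitive learning: when designing the objective function, give different relative weights to different kinds of misclassification. In other words, impose a larger penalty when a sample from the small class is misclassified as the large class.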
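As a rough illustration of the cost-sensitive idea, here is a minimal sketch of a class-weighted binary cross-entropy. The function name `weighted_log_loss`, the toy data, and the weight of 9 are assumptions made for this example; they do not come from the referenced post.

```python
import numpy as np


def weighted_log_loss(y_true, p_pred, w_pos=1.0, w_neg=1.0, eps=1e-12):
    """Binary cross-entropy with a separate weight per class.

    w_pos scales the penalty for errors on positive (label 1) samples,
    w_neg scales the penalty for errors on negative (label 0) samples.
    """
    y_true = np.asarray(y_true, dtype=float)
    p_pred = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    per_sample = -(
        w_pos * y_true * np.log(p_pred)
        + w_neg * (1.0 - y_true) * np.log(1.0 - p_pred)
    )
    return per_sample.mean()


# Hypothetical toy data: 1 positive (minority) and 9 negatives.
y = np.array([1, 0, 0, 0, 0, 0, 0, 0, 0, 0])
# A model that confidently predicts "negative" for every sample.
p = np.full(10, 0.1)

plain = weighted_log_loss(y, p)               # every error costs the same
costly = weighted_log_loss(y, p, w_pos=9.0)   # missing a positive costs 9x
print(plain, costly)
```

With equal weights the "always negative" model looks cheap; once the minority class carries a larger weight, the same predictions incur a much higher loss.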

The absolute number of positive samples is very small, so a method for expanding the positive samples is needed.

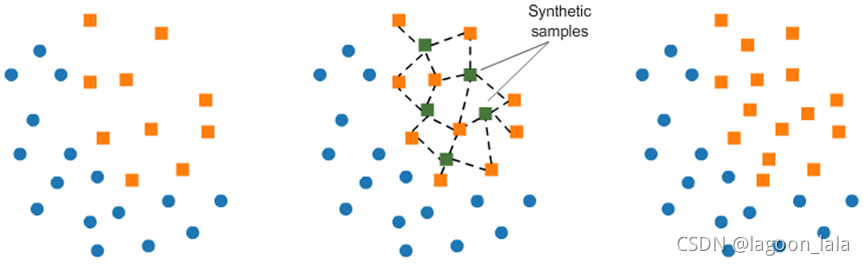

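Synthetic Minority Over-sampling Technique (SMOTE): I tried this method and it solved part of the problem, but what matters most is increasing the samples' coverage of the feature space! In engineering practice, algorithms alone will not solve the problem; sometimes domain knowledge has to be added as well.

1. The ratio is a key parameter. In our experience, 1:14 is a common "good" ratio. [haha]
2. Stratified sampling, i.e. sampling according to the features, so that the samples cover the feature space as fully as possible.
3. During training, the order in which positive and negative samples "appear" also matters; otherwise the model may converge before it has seen any positive (or negative) samples.

Pseudo-labeling with a support cluster machine: cluster the data, then take the negative samples that lie closest to the positive samples and treat them as positives.

If 1:10 counts as balanced, the majority class can be split into 1000 parts. Each part is then combined with the minority-class samples to train a classifier, and the 1000 classifiers are merged into one with an ensemble method. I remember a paper reporting that this works, but I have not verified it myself.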
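The partition-and-ensemble idea can be sketched as follows. This is only an illustration under assumptions: the toy dataset, the choice of `LogisticRegression`, the 10:1 chunk size, and probability averaging as the combination rule are all choices made here, not details from the paper mentioned in the note.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Hypothetical toy data with roughly 2% positives.
X, y = make_classification(
    n_samples=5000, n_features=10, weights=[0.98, 0.02], flip_y=0, random_state=0
)

rng = np.random.default_rng(0)
maj_idx = rng.permutation(np.flatnonzero(y == 0))  # shuffled majority indices
min_idx = np.flatnonzero(y == 1)

# Split the majority class so that each chunk is about 10x the minority class.
n_parts = max(1, len(maj_idx) // (10 * len(min_idx)))

models = []
for part in np.array_split(maj_idx, n_parts):
    idx = np.concatenate([part, min_idx])  # one majority chunk + all minority
    models.append(LogisticRegression(max_iter=1000).fit(X[idx], y[idx]))

# Combine the classifiers by averaging the predicted positive-class probability.
proba = np.mean([m.predict_proba(X)[:, 1] for m in models], axis=0)
y_pred = (proba >= 0.5).astype(int)
print(n_parts, int(y_pred.sum()))
```

Every majority sample is used exactly once, so no majority data is thrown away, while each individual classifier still trains on a moderately balanced subset.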

For the class imbalance problem, see the survey by He, H. and Garcia, E. A. (2009), "Learning from Imbalanced Data", IEEE Transactions on Knowledge and Data Engineering. Many methods have already been proposed.

Transductive SVM (TSVM): use the currently trained model to label new samples.

imbalance-problem

http://www.chioka.in/class-imbalance-problem/

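One-class classification algorithms

For example SVDD. See also positive-only learning and other semi-supervised approaches, such as the early Spy algorithm, which construct suitable negative examples to deal with the imbalance between positive and negative examples. In learning problems with only positive examples, the negative examples vary enormously and far outnumber the positive ones.

The most classic method for imbalanced data is cascade learning; see Viola's face detection paper for details.

Summary of methods from Zhihu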

https://www.zhihu.com/question/264220817/answer/1889196091

Three common families of methods

See the HyperGBM walkthrough on Zhihu:

https://zhuanlan.zhihu.com/p/350052055

GitHub documentation:

https://github.com/DataCanvasIO/HyperGBM

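There are three main families of methods for handling imbalance:

Class Weight

Oversampling (Resampling)

Undersampling (Downsampling)

I. Class Weight

The Class Weight method computes each class's share of the samples and reassigns sample weights accordingly, so that classes with fewer samples receive a higher weight during training. This helps the model learn the minority class effectively.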
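A minimal sketch of how such weights can be derived from class frequencies, using the common "balanced" heuristic `n_samples / (n_classes * count)`. This is the formula scikit-learn documents for `class_weight="balanced"`. The label array is hypothetical.

```python
import numpy as np

y = np.array([0] * 90 + [1] * 10)  # hypothetical labels: 90 vs. 10

classes, counts = np.unique(y, return_counts=True)

# "Balanced" heuristic: the rarer the class, the larger its weight.
class_weight = len(y) / (len(classes) * counts)

# Expand to one weight per sample (usable as sample_weight in many estimators).
sample_weight = class_weight[np.searchsorted(classes, y)]

print(dict(zip(classes.tolist(), class_weight.tolist())))
print(sample_weight[y == 0].sum(), sample_weight[y == 1].sum())
```

With these weights both classes contribute the same total weight to the loss, even though one class has nine times as many samples.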

II. Oversampling (Resampling)

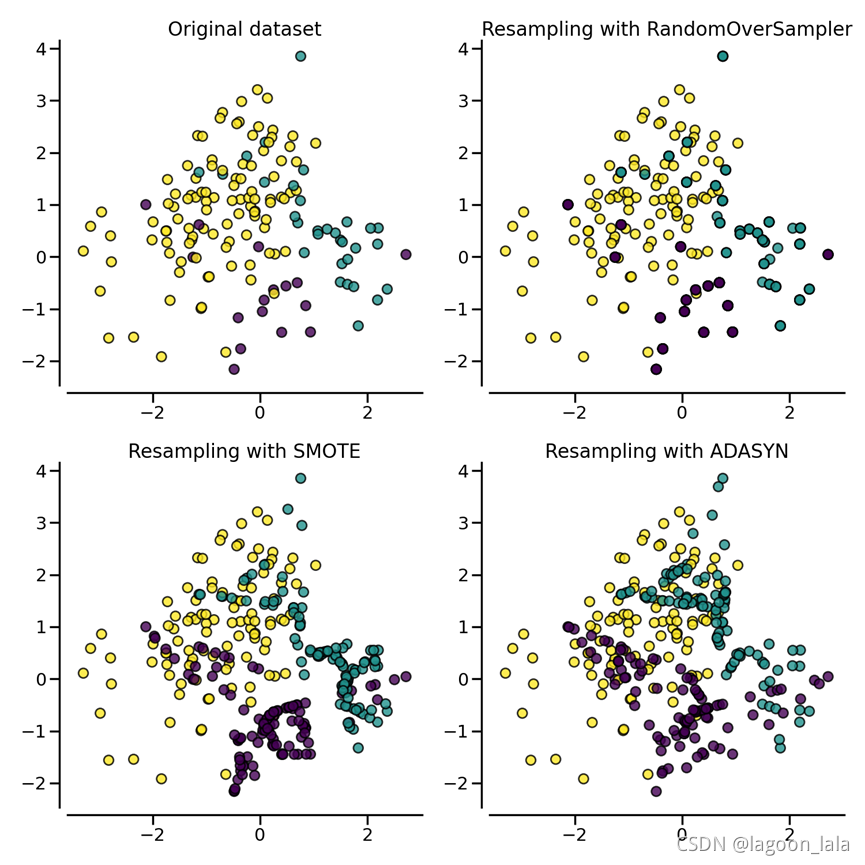

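Includes random oversampling, SMOTE, and ADASYN.

1. RandomOversampling (random oversampling)

Repeatedly sample with replacement from the minority class until the classes are balanced.

Drawback: it artificially reduces the variance of the dataset.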
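A NumPy-only sketch of random oversampling on hypothetical data. It is meant to show the mechanism, not the implementation used by any particular library.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))        # hypothetical features
y = np.array([0] * 90 + [1] * 10)    # 90 majority, 10 minority samples

min_idx = np.flatnonzero(y == 1)
n_extra = int(np.sum(y == 0)) - len(min_idx)   # copies needed to reach balance

# Draw minority indices with replacement and append the duplicated rows.
extra = rng.choice(min_idx, size=n_extra, replace=True)
X_res = np.vstack([X, X[extra]])
y_res = np.concatenate([y, y[extra]])

n_distinct = len(np.unique(X_res[y_res == 1], axis=0))
print(np.bincount(y_res), n_distinct)
```

The class counts are now equal, but the minority class still contains only its original distinct points. That is the sense in which the resampled data has artificially low variance.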

2. SMOTE (Synthetic Minority Over-sampling Technique)

For the minority class, generate new samples by interpolating between original samples.
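The interpolation step can be sketched as `x_new = x_i + lambda * (x_nn - x_i)`, where `x_nn` is one of the k nearest minority-class neighbours of `x_i` and `lambda` is drawn from [0, 1). The helper `smote_like` below is a hypothetical, simplified illustration and not the imbalanced-learn implementation.

```python
import numpy as np


def smote_like(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority-class neighbours."""
    rng = np.random.default_rng(seed)
    # Pairwise Euclidean distances within the minority class.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)                  # a point is not its own neighbour
    k = min(k, len(X_min) - 1)
    neighbours = np.argsort(d, axis=1)[:, :k]    # indices of the k nearest points

    base = rng.integers(0, len(X_min), size=n_new)            # seed samples x_i
    nn = neighbours[base, rng.integers(0, k, size=n_new)]     # chosen neighbours
    lam = rng.random((n_new, 1))                              # lambda in [0, 1)
    return X_min[base] + lam * (X_min[nn] - X_min[base])


X_min = np.random.default_rng(1).normal(size=(10, 2))  # hypothetical minority class
X_new = smote_like(X_min, n_new=20)
print(X_new.shape)
```

Because each synthetic point lies on a segment between two existing minority points, the new samples never leave the bounding box of the original minority class.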

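3. ADASYN (Adaptive Synthetic)

Sampling works as in SMOTE, except that the number of samples generated for a given x_i is proportional to the number of samples in its neighbourhood that do not belong to the same class as x_i. As a result, ADASYN tends to focus only on outliers when generating new samples.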
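A small sketch of the allocation rule: for each minority sample, count the share of its k nearest neighbours that belong to another class, then distribute the synthetic samples in proportion to that share. The function `adasyn_allocation` and the toy data are assumptions made for illustration; only the allocation step is shown, not the interpolation.

```python
import numpy as np


def adasyn_allocation(X, y, minority=1, k=5, n_new=100):
    """Return, per minority sample, how many synthetic points to generate."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    neighbours = np.argsort(d, axis=1)[:, :k]   # k nearest over ALL classes

    min_idx = np.flatnonzero(y == minority)
    # r_i: fraction of the k neighbours of x_i that belong to another class.
    r = (y[neighbours[min_idx]] != minority).mean(axis=1)
    if r.sum() == 0:                             # no hard samples at all
        return np.zeros(len(min_idx), dtype=int)
    return np.rint(r / r.sum() * n_new).astype(int)


rng = np.random.default_rng(0)
X_maj = rng.normal(0.0, 0.5, size=(50, 2))
X_easy = rng.normal(10.0, 0.5, size=(9, 2))   # minority points far from the majority
X_hard = np.array([[0.0, 0.0]])               # one minority point inside the majority
X = np.vstack([X_maj, X_easy, X_hard])
y = np.array([0] * 50 + [1] * 10)

alloc = adasyn_allocation(X, y, k=5, n_new=100)
print(alloc)
```

In this toy setup the whole budget goes to the single minority point surrounded by majority samples, which illustrates why ADASYN tends to concentrate on outliers.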

For the differences between the two methods, see:

https://imbalanced-learn.org/stable/over_sampling.html

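ADASYN generates samples next to the original samples that a k-nearest-neighbours classifier would easily misclassify, whereas the basic implementation of SMOTE makes no distinction between samples that are easy or hard to classify with the nearest-neighbour rule.

SMOTE may connect inliers and outliers, while ADASYN may focus solely on outliers. In both cases this can lead to a sub-optimal decision function. Some SMOTE variants focus on samples near the border of the optimal decision function and generate samples in the direction opposite to the nearest-neighbour class (BorderlineSMOTE, SVMSMOTE, and KMeansSMOTE).

On ill-posed problems, see: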

https://blog.csdn.net/Mr_Lowbee/article/details/109738200

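An ill-posed problem is one that has no stable, unique solution. Various prior assumptions are needed to constrain it so that it becomes well-posed and can be solved.

III. Undersampling

Includes: RandomUndersampling, NearMiss, TomekLinks, and EditedNearestNeighbours

1. RandomUndersampling (random undersampling)

Randomly delete samples from the majority class until the classes are balanced.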
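A NumPy-only sketch of random undersampling on hypothetical data, intended only to show the mechanism.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))        # hypothetical features
y = np.array([0] * 90 + [1] * 10)    # 90 majority, 10 minority samples

maj_idx = np.flatnonzero(y == 0)
min_idx = np.flatnonzero(y == 1)

# Keep a random subset of the majority class, as large as the minority class.
keep_maj = rng.choice(maj_idx, size=len(min_idx), replace=False)
keep = np.sort(np.concatenate([keep_maj, min_idx]))

X_res, y_res = X[keep], y[keep]
print(np.bincount(y_res))
```

The price of balance here is that 80 of the 90 majority samples are discarded, which is why the informed variants below try to choose more carefully which samples to drop.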

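2. NearMiss

Uses a KNN-based heuristic to decide which majority-class samples to keep. For example, NearMiss-1 keeps the majority samples whose average distance to their nearest minority-class samples is smallest.

3. TomekLinks

When samples from different classes lie very close to each other (a Tomek link is a pair of samples from different classes that are each other's nearest neighbour), they are likely to be noise. Deleting the majority-class samples in such pairs moves the data towards class balance.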
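Tomek-link detection can be sketched with a brute-force nearest-neighbour search. The helper `tomek_links` and the one-dimensional toy data are hypothetical and only meant to make the definition concrete.

```python
import numpy as np


def tomek_links(X, y):
    """Return index pairs (i, j), i < j, that are mutual nearest neighbours
    and carry different labels."""
    d = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    nn = d.argmin(axis=1)                      # nearest neighbour of each point
    return [
        (i, int(j))
        for i, j in enumerate(nn)
        if nn[j] == i and y[i] != y[j] and i < j
    ]


# Hypothetical 1-D toy data: points 2 and 3 sit right next to each other
# but belong to different classes.
X = np.array([[0.0], [0.9], [2.0], [2.1], [5.0], [6.0]])
y = np.array([0, 0, 0, 1, 1, 1])

links = tomek_links(X, y)

# Remove only the majority-class member (class 0 here) of each link.
majority = 0
drop = {i if y[i] == majority else j for i, j in links}
keep = np.array([i for i in range(len(y)) if i not in drop])
print(links, keep)
```

Only the majority sample of each link is removed, so the minority class keeps all of its points while the class border is cleaned up.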

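4. EditedNearestNeighbours

If some majority-class samples are closer to samples of other classes, delete them.

Common mistakes

Data leakage: resampling has a common pitfall, namely resampling the entire dataset before splitting it into training and test partitions.

This causes two problems:

1. Resampling the entire dataset balances the classes in both the training set and the test set, whereas the model should be tested on the naturally imbalanced data in order to assess its potential bias.

2. The resampling procedure may use information about test samples to generate or select samples. We may therefore be using information from samples that later serve as test samples, which is a typical data leakage problem.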
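The safe order of operations is to split first and resample the training partition only. A minimal sketch on hypothetical data follows. Random oversampling is done by hand here to keep the example dependency-light; any sampler could take its place.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))        # hypothetical features
y = np.array([0] * 950 + [1] * 50)    # 5% positives

# 1. Split first, keeping the natural class ratio in both partitions.
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# 2. Resample the training partition only.
min_idx = np.flatnonzero(y_tr == 1)
n_extra = int(np.sum(y_tr == 0)) - len(min_idx)
extra = rng.choice(min_idx, size=n_extra, replace=True)
X_tr_res = np.vstack([X_tr, X_tr[extra]])
y_tr_res = np.concatenate([y_tr, y_tr[extra]])

# 3. The test partition is left untouched and stays imbalanced.
print(np.bincount(y_tr_res), np.bincount(y_te))
```

The training set ends up balanced while the test set keeps the original 95:5 ratio, and no test sample can influence which training samples are duplicated.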

HyperGBM

https://github.com/DataCanvasIO/HyperGBM

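HyperGBM is an AutoML library that supports the full pipeline. It covers every end-to-end stage: data cleaning, preprocessing, feature generation and selection, model selection, and hyperparameter optimization. It is an AutoML tool for tabular data.

The whole process, from data cleaning to algorithm selection, is placed in a single search space and optimized together. End-to-end pipeline optimization is more like a sequential decision process, so HyperGBM combines reinforcement learning, Monte Carlo tree search, and evolutionary algorithms with meta-learning.

The ML algorithms used are all gradient boosting machine (GBM) tree models, currently XGBoost, LightGBM, and CatBoost.

Built on the Hypernets framework: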

https://github.com/DataCanvasIO/Hypernets

Its documentation on imbalanced data:

https://hypergbm.readthedocs.io/zh_CN/latest/example_imbalance.html

imbalanced-learn

imbalanced-learn, a package for handling imbalanced samples:

https://github.com/scikit-learn-contrib/imbalanced-learn

It is a scikit-learn-compatible project.

(I looked it up: judging by how pip team members pronounce them in a video, PyPI is "pie pea eye" and pip is [pɪp].)

Corresponding journal paper:

Imbalanced-learn: A Python Toolbox to Tackle the Curse of Imbalanced Datasets in Machine Learning

(2017, CCF-A)

http://jmlr.org/papers/v18/16-365

Documentation:

https://imbalanced-learn.org/stable/

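Using a pipeline is recommended, since it avoids data leakage.

    from imblearn.pipeline import make_pipeline
    from imblearn.under_sampling import RandomUnderSampler
    from sklearn.ensemble import HistGradientBoostingClassifier
    from sklearn.model_selection import cross_validate

    # X, y: feature matrix and labels, assumed to be loaded beforehand.
    # Inside the pipeline the sampler is applied only when fitting on the
    # training folds, never to the held-out fold.
    model = make_pipeline(
        RandomUnderSampler(random_state=0),
        HistGradientBoostingClassifier(random_state=0),
    )
    cv_results = cross_validate(
        model, X, y, scoring="balanced_accuracy",
        return_train_score=True, return_estimator=True,
        n_jobs=-1,
    )
    print(
        f"Balanced accuracy mean +/- std. dev.: "
        f"{cv_results['test_score'].mean():.3f} +/- "
        f"{cv_results['test_score'].std():.3f}"
    )

Public datasets: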

https://imbalanced-learn.org/stable/datasets/index.html

Prediction examples based on real-world datasets:

https://imbalanced-learn.org/stable/auto_examples/index.html#general-examples

What the sampling_strategy parameter does in each sampler:

https://imbalanced-learn.org/stable/auto_examples/api/plot_sampling_strategy_usage.htm

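When the parameter is a float, it specifies the desired ratio of the number of minority-class samples to the number of majority-class samples after resampling (binary classification only).

sampling_strategy can also be given as a string that specifies which class or classes the resampling targets. With under-sampling and over-sampling, the number of samples will be equalized.

When given as a dictionary of key-value pairs, the keys are the targeted classes and the values are the desired number of samples for each targeted class.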

Over-sampling example:

https://imbalanced-learn.org/stable/auto_examples/over-sampling/plot_comparison_over_sampling.html

Over-sampling demo

Install the imbalanced-learn package, following the official installation guide:

https://imbalanced-learn.org/stable/install.html#getting-started

Activate the virtual environment

    activate Liver

Install with pip

    pip install -U imbalanced-learn

Full example code:

    """
    ==============================
    Compare over-sampling samplers
    ==============================

    The following example attends to make a qualitative comparison between the
    different over-sampling algorithms available in the imbalanced-learn package.
    """

    # Authors: Guillaume Lemaitre <g.lemaitre58@gmail.com>
    # License: MIT

    # %%
    print(__doc__)

    import matplotlib.pyplot as plt
    import seaborn as sns

    sns.set_context("poster")

    # %% [markdown]
    # The following function will be used to create toy dataset. It uses the
    # :func:`~sklearn.datasets.make_classification` from scikit-learn but fixing
    # some parameters.

    # %%
    from sklearn.datasets import make_classification


    def create_dataset(
        n_samples=1000,
        weights=(0.01, 0.01, 0.98),
        n_classes=3,
        class_sep=0.8,
        n_clusters=1,
    ):
        return make_classification(
            n_samples=n_samples,
            n_features=2,
            n_informative=2,
            n_redundant=0,
            n_repeated=0,
            n_classes=n_classes,
            n_clusters_per_class=n_clusters,
            weights=list(weights),
            class_sep=class_sep,
            random_state=0,
        )


    # %% [markdown]
    # The following function will be used to plot the sample space after resampling
    # to illustrate the specificities of an algorithm.

    # %%
    def plot_resampling(X, y, sampler, ax, title=None):
        X_res, y_res = sampler.fit_resample(X, y)
        ax.scatter(X_res[:, 0], X_res[:, 1], c=y_res, alpha=0.8, edgecolor="k")
        if title is None:
            title = f"Resampling with {sampler.__class__.__name__}"
        ax.set_title(title)
        sns.despine(ax=ax, offset=10)


    # %% [markdown]
    # The following function will be used to plot the decision function of a
    # classifier given some data.

    # %%
    import numpy as np


    def plot_decision_function(X, y, clf, ax, title=None):
        plot_step = 0.02
        x_min, x_max = X[:, 0].min() - 1, X[:, 0].max() + 1
        y_min, y_max = X[:, 1].min() - 1, X[:, 1].max() + 1
        xx, yy = np.meshgrid(
            np.arange(x_min, x_max, plot_step), np.arange(y_min, y_max, plot_step)
        )

        Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
        Z = Z.reshape(xx.shape)
        ax.contourf(xx, yy, Z, alpha=0.4)
        ax.scatter(X[:, 0], X[:, 1], alpha=0.8, c=y, edgecolor="k")
        if title is not None:
            ax.set_title(title)


    # %% [markdown]
    # Illustration of the influence of the balancing ratio
    # ----------------------------------------------------
    #
    # We will first illustrate the influence of the balancing ratio on some toy
    # data using a logistic regression classifier which is a linear model.

    # %%
    from sklearn.linear_model import LogisticRegression

    clf = LogisticRegression()

    # %% [markdown]
    # We will fit and show the decision boundary model to illustrate the impact of
    # dealing with imbalanced classes.

    # %%
    fig, axs = plt.subplots(nrows=2, ncols=2, figsize=(15, 12))

    weights_arr = (
        (0.01, 0.01, 0.98),
        (0.01, 0.05, 0.94),
        (0.2, 0.1, 0.7),
        (0.33, 0.33, 0.33),
    )
    for ax, weights in zip(axs.ravel(), weights_arr):
        X, y = create_dataset(n_samples=300, weights=weights)
        clf.fit(X, y)
        plot_decision_function(X, y, clf, ax, title=f"weight={weights}")
        fig.suptitle(f"Decision function of {clf.__class__.__name__}")
    fig.tight_layout()

    # %% [markdown]
    # Greater is the difference between the number of samples in each class, poorer
    # are the classification results.
    #
    # Random over-sampling to balance the data set
    # --------------------------------------------
    #
    # Random over-sampling can be used to repeat some samples and balance the
    # number of samples between the dataset. It can be seen that with this trivial
    # approach the boundary decision is already less biased toward the majority
    # class. The class :class:`~imblearn.over_sampling.RandomOverSampler`
    # implements such of a strategy.

    # %%
    from imblearn.pipeline import make_pipeline
    from imblearn.over_sampling import RandomOverSampler

    X, y = create_dataset(n_samples=100, weights=(0.05, 0.25, 0.7))

    fig, axs = plt.subplots(nrows=1, ncols=2, figsize=(15, 7))

    clf.fit(X, y)
    plot_decision_function(X, y, clf, axs[0], title="Without resampling")

    sampler = RandomOverSampler(random_state=0)
    model = make_pipeline(sampler, clf).fit(X, y)
    plot_decision_function(X, y, model, axs[1], f"Using {model[0].__class__.__name__}")

    fig.suptitle(f"Decision function of {clf.__class__.__name__}")
    fig.tight_layout()

    # %% [markdown]
    # By default, random over-sampling generates a bootstrap. The parameter
    # `shrinkage` allows adding a small perturbation to the generated data
    # to generate a smoothed bootstrap instead. The plot below shows the difference
    # between the two data generation strategies.

    # %%
    fig, axs = plt.subplots(nrows=1, ncols=2, figsize=(15, 7))

    sampler.set_params(shrinkage=None)
    plot_resampling(X, y, sampler, ax=axs[0], title="Normal bootstrap")

    sampler.set_params(shrinkage=0.3)
    plot_resampling(X, y, sampler, ax=axs[1], title="Smoothed bootstrap")

    fig.suptitle(f"Resampling with {sampler.__class__.__name__}")
    fig.tight_layout()

    # %% [markdown]
    # It looks like more samples are generated with smoothed bootstrap. This is due
    # to the fact that the samples generated are not superimposing with the
    # original samples.
    #
    # More advanced over-sampling using ADASYN and SMOTE
    # --------------------------------------------------
    #
    # Instead of repeating the same samples when over-sampling or perturbating the
    # generated bootstrap samples, one can use some specific heuristic instead.
    # :class:`~imblearn.over_sampling.ADASYN` and
    # :class:`~imblearn.over_sampling.SMOTE` can be used in this case.

    # %%
    from imblearn import FunctionSampler  # to use an identity sampler
    from imblearn.over_sampling import SMOTE, ADASYN

    X, y = create_dataset(n_samples=150, weights=(0.1, 0.2, 0.7))

    fig, axs = plt.subplots(nrows=2, ncols=2, figsize=(15, 15))

    samplers = [
        FunctionSampler(),
        RandomOverSampler(random_state=0),
        SMOTE(random_state=0),
        ADASYN(random_state=0),
    ]

    for ax, sampler in zip(axs.ravel(), samplers):
        title = "Original dataset" if isinstance(sampler, FunctionSampler) else None
        plot_resampling(X, y, sampler, ax, title=title)
    fig.tight_layout()

    # %% [markdown]
    # The following plot illustrates the difference between
    # :class:`~imblearn.over_sampling.ADASYN` and
    # :class:`~imblearn.over_sampling.SMOTE`.
    # :class:`~imblearn.over_sampling.ADASYN` will focus on the samples which are
    # difficult to classify with a nearest-neighbors rule while regular
    # :class:`~imblearn.over_sampling.SMOTE` will not make any distinction.
    # Therefore, the decision function depending of the algorithm.

    X, y = create_dataset(n_samples=150, weights=(0.05, 0.25, 0.7))

    fig, axs = plt.subplots(nrows=1, ncols=3, figsize=(20, 6))

    models = {
        "Without sampler": clf,
        "ADASYN sampler": make_pipeline(ADASYN(random_state=0), clf),
        "SMOTE sampler": make_pipeline(SMOTE(random_state=0), clf),
    }

    for ax, (title, model) in zip(axs, models.items()):
        model.fit(X, y)
        plot_decision_function(X, y, model, ax=ax, title=title)

    fig.suptitle(f"Decision function using a {clf.__class__.__name__}")
    fig.tight_layout()

    # %% [markdown]
    # Due to those sampling particularities, it can give rise to some specific
    # issues as illustrated below.

    # %%
    X, y = create_dataset(n_samples=5000, weights=(0.01, 0.05, 0.94), class_sep=0.8)

    samplers = [SMOTE(random_state=0), ADASYN(random_state=0)]

    fig, axs = plt.subplots(nrows=2, ncols=2, figsize=(15, 15))
    for ax, sampler in zip(axs, samplers):
        model = make_pipeline(sampler, clf).fit(X, y)
        plot_decision_function(
            X, y, clf, ax[0], title=f"Decision function with {sampler.__class__.__name__}"
        )
        plot_resampling(X, y, sampler, ax[1])

    fig.suptitle("Particularities of over-sampling with SMOTE and ADASYN")
    fig.tight_layout()

    # %% [markdown]
    # SMOTE proposes several variants by identifying specific samples to consider
    # during the resampling. The borderline version
    # (:class:`~imblearn.over_sampling.BorderlineSMOTE`) will detect which point to
    # select which are in the border between two classes. The SVM version
    # (:class:`~imblearn.over_sampling.SVMSMOTE`) will use the support vectors
    # found using an SVM algorithm to create new sample while the KMeans version
    # (:class:`~imblearn.over_sampling.KMeansSMOTE`) will make a clustering before
    # to generate samples in each cluster independently depending each cluster
    # density.

    # %%
    from imblearn.over_sampling import BorderlineSMOTE, KMeansSMOTE, SVMSMOTE

    X, y = create_dataset(n_samples=5000, weights=(0.01, 0.05, 0.94), class_sep=0.8)

    fig, axs = plt.subplots(5, 2, figsize=(15, 30))

    samplers = [
        SMOTE(random_state=0),
        BorderlineSMOTE(random_state=0, kind="borderline-1"),
        BorderlineSMOTE(random_state=0, kind="borderline-2"),
        KMeansSMOTE(random_state=0),
        SVMSMOTE(random_state=0),
    ]

    for ax, sampler in zip(axs, samplers):
        model = make_pipeline(sampler, clf).fit(X, y)
        plot_decision_function(
            X, y, clf, ax[0], title=f"Decision function for {sampler.__class__.__name__}"
        )
        plot_resampling(X, y, sampler, ax[1])

    fig.suptitle("Decision function and resampling using SMOTE variants")
    fig.tight_layout()

    # %% [markdown]
    # When dealing with a mixed of continuous and categorical features,
    # :class:`~imblearn.over_sampling.SMOTENC` is the only method which can handle
    # this case.

    # %%
    from collections import Counter
    from imblearn.over_sampling import SMOTENC

    rng = np.random.RandomState(42)
    n_samples = 50
    # Create a dataset of a mix of numerical and categorical data
    X = np.empty((n_samples, 3), dtype=object)
    X[:, 0] = rng.choice(["A", "B", "C"], size=n_samples).astype(object)
    X[:, 1] = rng.randn(n_samples)
    X[:, 2] = rng.randint(3, size=n_samples)
    y = np.array([0] * 20 + [1] * 30)

    print("The original imbalanced dataset")
    print(sorted(Counter(y).items()))
    print()
    print("The first and last columns are containing categorical features:")
    print(X[:5])
    print()

    smote_nc = SMOTENC(categorical_features=[0, 2], random_state=0)
    X_resampled, y_resampled = smote_nc.fit_resample(X, y)
    print("Dataset after resampling:")
    print(sorted(Counter(y_resampled).items()))
    print()
    print("SMOTE-NC will generate categories for the categorical features:")
    print(X_resampled[-5:])
    print()

    # %% [markdown]
    # However, if the dataset is composed of only categorical features then one
    # should use :class:`~imblearn.over_sampling.SMOTEN`.

    # %%
    from imblearn.over_sampling import SMOTEN

    # Generate only categorical data
    X = np.array(["A"] * 10 + ["B"] * 20 + ["C"] * 30, dtype=object).reshape(-1, 1)
    y = np.array([0] * 20 + [1] * 40, dtype=np.int32)

    print(f"Original class counts: {Counter(y)}")
    print()
    print(X[:5])
    print()

    sampler = SMOTEN(random_state=0)
    X_res, y_res = sampler.fit_resample(X, y)
    print(f"Class counts after resampling {Counter(y_res)}")
    print()
    print(X_res[-5:])
    print()

Random over-sampling

With the RandomOverSampler class, random over-sampling repeats some samples to balance the number of samples across the classes.

    # plt, create_dataset and plot_decision_function come from the full example above.
    from sklearn.linear_model import LogisticRegression

    clf = LogisticRegression()

    from imblearn.pipeline import make_pipeline
    from imblearn.over_sampling import RandomOverSampler

    X, y = create_dataset(n_samples=100, weights=(0.05, 0.25, 0.7))

    fig, axs = plt.subplots(nrows=1, ncols=2, figsize=(15, 7))

    # Left panel: classifier trained on the imbalanced data as-is.
    clf.fit(X, y)
    plot_decision_function(X, y, clf, axs[0], title="Without resampling")

    # Right panel: the same classifier behind a RandomOverSampler.
    sampler = RandomOverSampler(random_state=0)
    model = make_pipeline(sampler, clf).fit(X, y)
    plot_decision_function(X, y, model, axs[1], f"Using {model[0].__class__.__name__}")

    fig.suptitle(f"Decision function of {clf.__class__.__name__}")
    fig.tight_layout()

About the RandomOverSampler class:

It samples using the bootstrap method.

The sampling_strategy parameter defaults to 'auto', which is equivalent to 'not majority': every class except the majority class is resampled.

Example:

    from collections import Counter

    from sklearn.datasets import make_classification
    from imblearn.over_sampling import RandomOverSampler

    X, y = make_classification(n_classes=2, class_sep=2, weights=[0.1, 0.9],
                               n_informative=3, n_redundant=1, flip_y=0,
                               n_features=20, n_clusters_per_class=1,
                               n_samples=1000, random_state=10)
    print('Original dataset shape %s' % Counter(y))
    # Original dataset shape Counter({1: 900, 0: 100})

    ros = RandomOverSampler(random_state=42)
    X_res, y_res = ros.fit_resample(X, y)
    print('Resampled dataset shape %s' % Counter(y_res))
    # Resampled dataset shape Counter({0: 900, 1: 900})

SMOTE

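For the minority class, generate new samples by interpolating between original samples.

Example:

    # X, y and Counter as defined in the RandomOverSampler example above.
    from imblearn.over_sampling import SMOTE

    sm = SMOTE(random_state=42)
    X_res, y_res = sm.fit_resample(X, y)
    print('Resampled dataset shape %s' % Counter(y_res))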

ADASYN

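Adaptive Synthetic: puts more emphasis on samples that are easily misclassified.

    # X, y as defined in the RandomOverSampler example above.
    from imblearn.over_sampling import ADASYN

    ada = ADASYN(random_state=42)
    X_res, y_res = ada.fit_resample(X, y)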