
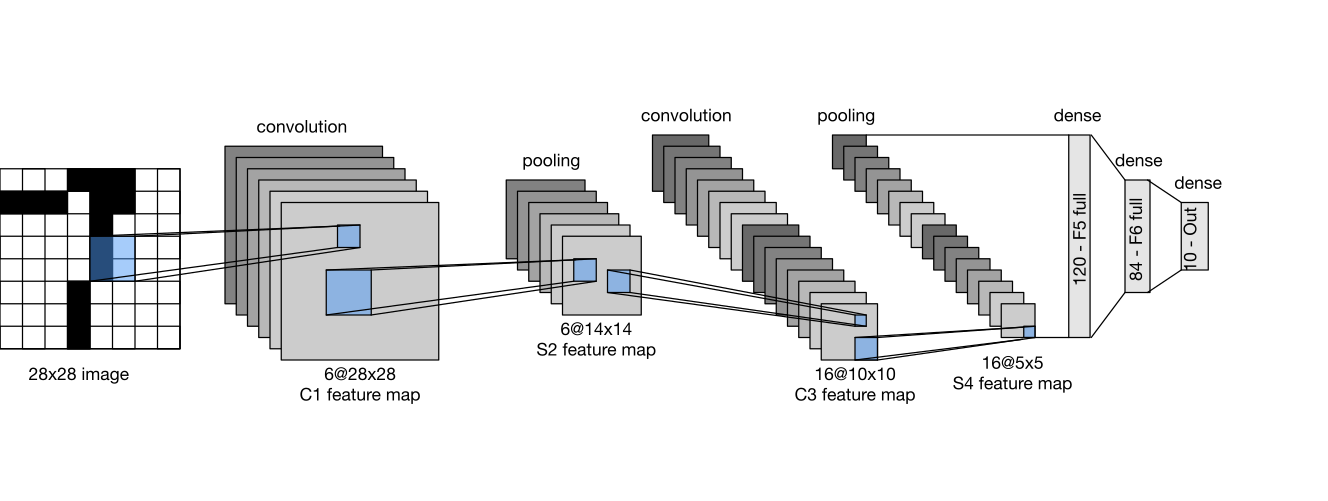

LeNet (1998)

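LeNet is one of the earliest successful neural networks. It first uses convolutional layers to learn spatial information from the image. It then uses pooling layers to reduce the convolutional layers' sensitivity to the exact position of features. Finally, it uses fully connected layers to map the features into the category space.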
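The conv → pool → fully connected pipeline can be sketched in PyTorch as follows. This is a minimal sketch under assumptions not stated above: 28×28 grayscale input and 10 classes (as in Fashion-MNIST), the common textbook layout with sigmoid activations and average pooling, and the classic LeNet-5 sizes (6 and 16 channels, 120 and 84 hidden units). The loop prints the output shape after each layer.

```python
import torch
from torch import nn

# LeNet-style network (sketch): assumes 1x28x28 input and 10 classes.
# padding=2 on the first conv mimics the original 32x32 input size.
lenet = nn.Sequential(
    nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),   # -> 6x28x28
    nn.AvgPool2d(kernel_size=2, stride=2),                      # -> 6x14x14
    nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),              # -> 16x10x10
    nn.AvgPool2d(kernel_size=2, stride=2),                      # -> 16x5x5
    nn.Flatten(),                                               # -> 400 features
    nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(),
    nn.Linear(120, 84), nn.Sigmoid(),
    nn.Linear(84, 10))                                          # -> 10 class scores

# Trace the shape of a single random image through every layer
X = torch.rand(1, 1, 28, 28)
for layer in lenet:
    X = layer(X)
    print(layer.__class__.__name__, tuple(X.shape))
```

The two pooling layers halve the spatial size each time, which is why the first fully connected layer takes `16 * 5 * 5` inputs.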


AlexNet(2012)

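From LeNet (left) to AlexNet (right). AlexNet is essentially a larger and deeper LeNet. Its main improvements are dropout, replacing the sigmoid activation with ReLU (which mitigates vanishing gradients), max pooling, and data augmentation. It also marked a change in computer vision methodology: features are learned end to end instead of being hand-engineered.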
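A minimal PyTorch sketch of an AlexNet-style network, showing the changes listed above (ReLU, max pooling, dropout, more and wider layers). It assumes a single-channel 224×224 input and 10 output classes, a common adaptation for small datasets; the original model took 3-channel ImageNet images and predicted 1000 classes. Data augmentation happens in the input pipeline and is not shown.

```python
import torch
from torch import nn

# AlexNet-style network (sketch): assumes 1x224x224 input and 10 classes.
alexnet = nn.Sequential(
    nn.Conv2d(1, 96, kernel_size=11, stride=4, padding=1), nn.ReLU(),  # -> 96x54x54
    nn.MaxPool2d(kernel_size=3, stride=2),                              # -> 96x26x26
    nn.Conv2d(96, 256, kernel_size=5, padding=2), nn.ReLU(),            # -> 256x26x26
    nn.MaxPool2d(kernel_size=3, stride=2),                              # -> 256x12x12
    nn.Conv2d(256, 384, kernel_size=3, padding=1), nn.ReLU(),           # -> 384x12x12
    nn.Conv2d(384, 384, kernel_size=3, padding=1), nn.ReLU(),           # -> 384x12x12
    nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(),           # -> 256x12x12
    nn.MaxPool2d(kernel_size=3, stride=2),                              # -> 256x5x5
    nn.Flatten(),                                                       # -> 6400 features
    # Dropout regularizes the large fully connected layers
    nn.Linear(256 * 5 * 5, 4096), nn.ReLU(), nn.Dropout(p=0.5),
    nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(p=0.5),
    nn.Linear(4096, 10))

# Trace the shape of a single random image through every layer
X = torch.rand(1, 1, 224, 224)
for layer in alexnet:
    X = layer(X)
    print(layer.__class__.__name__, tuple(X.shape))
```

Dropout is active only in training mode; calling `alexnet.eval()` disables it for inference.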


VGG(2014)

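From AlexNet to VGG, which is designed from building blocks. VGG can be viewed as a larger and deeper AlexNet built from repeated VGG blocks. It constructs deep networks from reusable convolutional blocks, so varying the number of blocks and their hyperparameters yields variants of different complexity.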

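```python
import torch
from torch import nn

def vgg_block(num_convs, in_channels, out_channels):
    """A VGG block: num_convs 3x3 convolutions (padding 1, so height and width
    are preserved), each followed by ReLU, then a 2x2 max pool with stride 2
    that halves height and width."""
    layers = []
    for _ in range(num_convs):
        layers.append(nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
        layers.append(nn.ReLU())
        in_channels = out_channels
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))
    return nn.Sequential(*layers)

# (number of conv layers, output channels) for each block:
# 8 conv layers + 3 fully connected layers = VGG-11
conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))

def vgg(conv_arch):
    conv_blks = []
    in_channels = 1  # single-channel (grayscale) input
    # Convolutional part: stack one VGG block per conv_arch entry
    for (num_convs, out_channels) in conv_arch:
        conv_blks.append(vgg_block(num_convs, in_channels, out_channels))
        in_channels = out_channels
    # Fully connected part; 7 * 7 assumes a 224x224 input halved by 5 blocks
    return nn.Sequential(
        *conv_blks, nn.Flatten(),
        nn.Linear(out_channels * 7 * 7, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(0.5),
        nn.Linear(4096, 10))
```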
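The `7 * 7` in the first fully connected layer can be checked by hand. The sketch below assumes a 224×224 input, which is what `7 * 7` implies, and traces the side length through `conv_arch` using the standard output-size formula floor((n + 2p − k) / s) + 1. `conv_out` is a hypothetical helper written for this illustration, not a PyTorch function.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Output side length of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

conv_arch = ((1, 64), (1, 128), (2, 256), (2, 512), (2, 512))
size = 224  # assumed input side length

for num_convs, out_channels in conv_arch:
    for _ in range(num_convs):
        size = conv_out(size, kernel=3, padding=1)  # 3x3 conv, padding 1: size unchanged
    size = conv_out(size, kernel=2, stride=2)       # 2x2 max pool, stride 2: size halved
    print(f"block ({num_convs} conv, {out_channels} ch) -> {size}x{size}")

flat_features = conv_arch[-1][1] * size * size       # 512 * 7 * 7
num_conv_layers = sum(n for n, _ in conv_arch)        # depth of the conv part
print(flat_features, num_conv_layers)                 # 25088 8
```

Because only the pooling layers change the spatial size, the input side length is divided by 2 five times (224 → 7), independent of how many convolutions each block contains.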