Neural Network Processor (e.g. Cambricon-based accelerator) vs Neural Network Accelerator (e.g. DianNao, DaDianNao)

Neural Network Accelerator (e.g. DianNao, DaDianNao)

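- Instructions are layer-oriented. The hardware "understands" layers, and the optimizations for each layer are built directly into the hardware design: “These accelerators often adopt high-level and informative instructions that directly specify the high-level functional blocks (e.g. layer type: convolution/pooling/classifier) or even an NN as a whole”.

- They support only one class of neural networks that share similar computation and data-reuse patterns. They favor efficiency over generality, and the DianNao series' main justification on the generality question is *“Due to the limited number of target algorithms”*.

- There is usually no compiler and no notion of an instruction set. Layers are invoked and instructions are generated directly for each layer: “DianNao: there is a need for code generation, but a compiler would be overkill in our case as only three main types of code must be generated. So we have implemented three dedicated code generators for the three layers”.

- Instruction decoding is complex and costly.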
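The following toy sketch makes the "three dedicated code generators" approach quoted above concrete. Everything in it is hypothetical: the instruction tuples (`CONV`, `POOL`, `CLASS`) and their fields are invented for illustration and are not DianNao's actual CP instruction format.

```python
# Toy model of layer-oriented code generation (DianNao style).
# Hypothetical instruction format: one coarse (opcode, params) tuple per layer.

def gen_conv(in_ch, out_ch, kernel):
    # A single instruction describes the whole convolutional layer.
    return [("CONV", {"in_ch": in_ch, "out_ch": out_ch, "kernel": kernel})]

def gen_pool(window):
    return [("POOL", {"window": window})]

def gen_classifier(n_in, n_out):
    return [("CLASS", {"n_in": n_in, "n_out": n_out})]

# One dedicated generator per supported layer type, no general-purpose compiler.
GENERATORS = {"conv": gen_conv, "pool": gen_pool, "classifier": gen_classifier}

def generate(network):
    """network: list of (layer_type, params) pairs."""
    code = []
    for layer_type, params in network:
        if layer_type not in GENERATORS:
            # The hardware does not "understand" this layer, so nothing can be emitted.
            raise NotImplementedError(f"no generator for layer type {layer_type!r}")
        code.extend(GENERATORS[layer_type](**params))
    return code

net = [
    ("conv", {"in_ch": 3, "out_ch": 16, "kernel": 5}),
    ("pool", {"window": 2}),
    ("classifier", {"n_in": 1024, "n_out": 10}),
]
print([op for op, _ in generate(net)])  # ['CONV', 'POOL', 'CLASS']
```

If you ask this toy generator for an `lstm` layer, it raises `NotImplementedError`. Supporting a new layer type means writing a new generator, and on a layer-oriented accelerator it also means adding new hardware support.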

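Neural Network Processor (e.g. Cambricon-based accelerator)

- Neural network processor = instruction set architecture (ISA) + hardware accelerator. Instructions are oriented toward "operations", which are extracted by studying a class of algorithms or applications. The hardware only needs to accelerate these operations, so the optimizations for the various layers are reflected in how the operations are extracted. Cambricon's rationale for its extraction, for example: “In GoogLeNet, 99.992% arithmetic operations can be aggregated as vector operations, and 99.791% of the vector operations can be aggregated as matrix operations”.

- It broadly supports many neural network structures and favors generality. Because the ISA acts as an intermediate layer that may cost some efficiency, the paper repeatedly stresses *“Negligible latency/power/area overheads”*.

- There is usually a compiler and an instruction set, and the compiler generates the code/instructions for each layer. One can also write machine-level code directly, as with assembly. This is different from a code generator.

- Instruction decoding is simpler and cheaper.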
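For contrast, here is a toy operation-oriented compiler. Each layer is lowered to a short sequence of ISA operations, and a layer compiles as long as every operation it needs exists in the ISA. The mnemonics (MMV, VAV, VEXP, VAS, VDV) follow Cambricon's naming. The lowering rules and the `gate` layer (one sigmoid gate of an LSTM, $\sigma(Wx + Uh + b)$) are assumptions made for illustration.

```python
# A small subset of Cambricon-style mnemonics (illustrative, not the full ISA).
ISA = {"MMV", "VAV", "VEXP", "VAS", "VDV", "VGTM"}

# Hypothetical lowering rules: layer type -> sequence of operations.
SIGMOID = ["VEXP", "VAS", "VDV"]  # e^a / (1 + e^a)
LOWERINGS = {
    "fc_sigmoid": ["MMV", "VAV"] + SIGMOID,          # sigmoid(W x + b)
    "gate": ["MMV", "MMV", "VAV", "VAV"] + SIGMOID,  # sigmoid(W x + U h + b)
}

def compile_network(layers):
    program = []
    for layer in layers:
        ops = LOWERINGS[layer]
        # A layer is compilable only if the ISA provides every operation it needs.
        missing = [op for op in ops if op not in ISA]
        if missing:
            raise NotImplementedError(f"{layer} needs {missing}")
        program.extend(ops)
    return program

print(compile_network(["gate"]))
# ['MMV', 'MMV', 'VAV', 'VAV', 'VEXP', 'VAS', 'VDV']
```

In this setup, supporting a new layer means adding a lowering rule in software. The hardware stays the same as long as the operations are already covered.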

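Examples (personal understanding and thoughts)

- Suppose we write Convolution(), Pooling(), FC() directly in Python. DianNao's code generator directly generates code for the CP (control processor), which is very coarse-grained. Cambricon's compiler can also generate ISA instructions at this coarse granularity, but it supports more network structures, such as LSTM().

- Cambricon can also work at a finer granularity. For example, the Python interpreter could first lower the Python code to low-level code, and the loops and similar constructs in that code could then be compiled into the ISA's vector or matrix operations. As long as the ISA can support (or be transformed to express) a layer's low-level operations, that layer can be compiled. (A CPU supports the lowest-level operations, which is why a CPU can run anything.)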
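The finer-grained path in the second example can be sketched as a pattern match on a loop. The loop IR is a made-up minimal form: `("loop", n, (dst, op, src0, src1))` means `for i in range(n): dst[i] = src0[i] op src1[i]`. The op-to-mnemonic table is also an assumption. VAV, VSV, VMV and VDV are meant as Cambricon-style names for element-wise vector add, subtract, multiply and divide.

```python
# Hypothetical loop IR: ("loop", n, (dst, op, src0, src1)) means
#   for i in range(n): dst[i] = src0[i] <op> src1[i]
ELEMENTWISE_TO_VECTOR = {"+": "VAV", "-": "VSV", "*": "VMV", "/": "VDV"}

def lower_loop(loop):
    kind, n, (dst, op, src0, src1) = loop
    if kind != "loop":
        raise ValueError(f"unsupported IR node {kind!r}")
    if op in ELEMENTWISE_TO_VECTOR:
        # The whole loop collapses into one variable-length vector instruction.
        return [(ELEMENTWISE_TO_VECTOR[op], dst, src0, src1, n)]
    # No matching vector operation: fall back to one scalar operation per
    # element, which is what a CPU would execute.
    return [("scalar", op, dst, src0, src1, i) for i in range(n)]

print(lower_loop(("loop", 1024, ("c", "+", "a", "b"))))
# [('VAV', 'c', 'a', 'b', 1024)]
print(len(lower_loop(("loop", 4, ("c", "%", "a", "b")))))
# 4
```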

Cambricon ISA

Overview

- The focus is on data-level parallelism: “Data-level parallelism enabled by vector/matrix instructions can be more efficient than instruction-level parallelism of traditional scalar instructions”.

- “Small yet representative set of vector/matrix instructions for existing NN techniques”.

- “Replace vector register files with on-chip scratchpad memory”: vector operands are no longer limited by the width of fixed-width vector registers, so vector and matrix lengths are variable.

- “Load-store architecture”: the *64 32-bit General-Purpose Registers (GPRs)* can hold scalars or be used for register-indirect addressing of the on-chip SPM.

- “Four types of instructions: computational, logical, control, and data transfer instructions, and the instruction length is fixed to be 64-bit”.
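The SPM-plus-GPR idea above can be modeled in a few lines. The sketch uses a word-addressed SPM, and a vector instruction reads its SPM addresses and its length from GPRs. The class, the method signature and the operand order are hypothetical and do not reflect Cambricon's actual 64-bit encoding.

```python
class ToyCambricon:
    """Toy machine state: 64 GPRs plus an on-chip scratchpad (SPM) that takes
    the place of fixed-width vector register files."""

    NUM_GPRS = 64

    def __init__(self, spm_words=1024):
        self.gpr = [0] * self.NUM_GPRS
        self.spm = [0] * spm_words

    def vav(self, rd, rs0, rs1, rlen):
        """Vector Add Vector. The destination/source SPM addresses and the
        vector length are all read from GPRs (register-indirect addressing)."""
        dst, src0, src1, n = (self.gpr[r] for r in (rd, rs0, rs1, rlen))
        for i in range(n):
            self.spm[dst + i] = self.spm[src0 + i] + self.spm[src1 + i]

m = ToyCambricon()
m.spm[0:5] = [1, 2, 3, 4, 5]            # vector a at SPM address 0
m.spm[100:105] = [10, 20, 30, 40, 50]   # vector b at SPM address 100
m.gpr[1], m.gpr[2], m.gpr[3] = 200, 0, 100  # dst, src0, src1 addresses
m.gpr[4] = 5                            # vector length, held in a GPR
m.vav(1, 2, 3, 4)
print(m.spm[200:205])  # [11, 22, 33, 44, 55]
```

Setting `m.gpr[4]` to a different value changes the vector length without changing the instruction. This is the practical meaning of "variable-length" once the operands live in the SPM rather than in fixed-width registers.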

Control Instructions

Jump instruction: the offset will be accumulated to the PC.

Conditional branch: the branch target (either PC + offset or PC + 1) is determined by a comparison between the predictor and zero.
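Here is a tiny interpreter for the two control instructions above. Two parts are assumptions. First, the source does not say which comparison with zero makes a branch taken, and the sketch assumes "predictor > 0". Second, `ADDI` (add immediate) is a hypothetical scalar instruction added only so the loop can count. The PC indexes instructions, so PC + 1 is simply the next instruction.

```python
def run(program, gpr):
    """Execute a tiny program; the PC indexes instructions."""
    pc = 0
    while True:
        op, *args = program[pc]
        if op == "HALT":
            return
        if op == "ADDI":            # hypothetical scalar add-immediate
            r, imm = args
            gpr[r] += imm
            pc += 1
        elif op == "JUMP":
            (offset,) = args
            pc += offset            # the offset is accumulated into the PC
        elif op == "CB":
            r, offset = args
            # Assumption: "taken" means predictor > 0; the text only says the
            # predictor is compared with zero.
            pc = pc + offset if gpr[r] > 0 else pc + 1
        else:
            raise ValueError(f"unknown opcode {op!r}")

# Count r0 down to zero, incrementing r1 once per iteration.
loop = [
    ("ADDI", 1, 1),    # 0: r1 += 1
    ("ADDI", 0, -1),   # 1: r0 -= 1
    ("CB", 0, -2),     # 2: if r0 > 0, go to PC - 2 (instruction 0)
    ("HALT",),         # 3
]
regs = [3, 0]
run(loop, regs)
print(regs)  # [0, 3]
```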

Data Transfer Instructions

Supports variable-length vectors and matrices; data can be moved between main memory and the SPM, or between the SPM and the GPRs.
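The following minimal model covers the transfer directions just described. The helper names are hypothetical and only loosely modeled on load/store mnemonics, and memories are plain Python lists of words. Matrices are assumed to be stored row-major, so they move as one contiguous block. The size is an ordinary operand, which is what makes the transfers variable-length.

```python
def vload(spm, spm_addr, mem, mem_addr, size):
    """Main memory -> SPM, variable length (size is an operand, not fixed)."""
    assert spm_addr + size <= len(spm) and mem_addr + size <= len(mem)
    spm[spm_addr:spm_addr + size] = mem[mem_addr:mem_addr + size]

def vstore(mem, mem_addr, spm, spm_addr, size):
    """SPM -> main memory."""
    assert spm_addr + size <= len(spm) and mem_addr + size <= len(mem)
    mem[mem_addr:mem_addr + size] = spm[spm_addr:spm_addr + size]

def mload(spm, spm_addr, mem, mem_addr, rows, cols):
    """A matrix moves as rows*cols contiguous elements (row-major assumed)."""
    vload(spm, spm_addr, mem, mem_addr, rows * cols)

def sload(gpr, rd, spm, spm_addr):
    """SPM -> GPR: fetch a single scalar into a register."""
    gpr[rd] = spm[spm_addr]

mem = list(range(100))
spm = [0] * 32
vload(spm, 4, mem, 10, 5)        # a 5-element vector
mload(spm, 16, mem, 50, 2, 3)    # a 2x3 matrix
gpr = [0] * 64
sload(gpr, 3, spm, 6)
print(spm[4:9], spm[16:22], gpr[3])
# [10, 11, 12, 13, 14] [50, 51, 52, 53, 54, 55] 12
```

The bounds assertions matter in Python. Without them, an out-of-range slice assignment would silently resize the list instead of failing.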

Matrix Instructions

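Forward pass: taking an MLP as an example, $y = f(Wx + b)$. A Matrix Mult Vector instruction (MMV) is provided to compute $Wx$.

Backward pass: again for an MLP, the error has to be propagated through $W^T$. Doing this with MMV would require transposing $W$ first, so a Vector Mult Matrix instruction (VMM) is provided instead, since $W^T\delta$ equals $\delta^T W$ (as a row vector).

Update: for an MLP, $W = W + \eta\Delta W$. To produce $\Delta W$, the outer product of two vectors (OP) is provided. A Matrix Mult Scalar instruction (MMS) and a Matrix Add Matrix instruction (MAM) are also provided, to scale by $\eta$ and accumulate into $W$.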
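The sketch below gives plain-Python stand-ins for the five matrix instructions and applies them to one MLP layer. The function names mirror the mnemonics but are hypothetical. The toy $\delta$ is assumed to already carry the sign of the descent direction, so $\Delta W = \delta x^T$ fits the update rule $W = W + \eta\Delta W$ directly. The values are arbitrary.

```python
def mmv(W, x):
    """MMV: W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def vmm(v, W):
    """VMM: v^T W, i.e. W^T v without materializing the transpose."""
    return [sum(v[i] * W[i][j] for i in range(len(W))) for j in range(len(W[0]))]

def outer(a, b):
    """OP: outer product a b^T."""
    return [[ai * bj for bj in b] for ai in a]

def mms(M, s):
    """MMS: matrix times scalar."""
    return [[s * m for m in row] for row in M]

def mam(A, B):
    """MAM: element-wise matrix addition."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

W = [[1, 2, 3], [4, 5, 6]]
x = [1, 0, -1]
delta = [1, 2]     # error term at the layer output (toy values)
eta = 0.5

print(mmv(W, x))       # forward: [-2, -2]
print(vmm(delta, W))   # backward: [9, 12, 15]
W = mam(W, mms(outer(delta, x), eta))  # update: W + eta * (delta x^T)
print(W)               # [[1.5, 2.0, 2.5], [5.0, 5.0, 5.0]]
```

`vmm(delta, W)` gives the same result as `mmv` applied to the transposed matrix. The only difference is the access order over `W`, which is why a separate instruction saves an explicit transpose.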

Vector Instructions

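For $Wx + b$ ($Wx$ itself comes from MMV), a Vector Add Vector instruction (VAV) is provided.

For $f(Wx + b)$, taking the sigmoid activation function as an example, a Vector Exponential instruction (VEXP), a Vector Add Scalar instruction (VAS), and a Vector Div Vector instruction (VDV) are provided. Writing the sigmoid as $f(a) = e^{a} / (1 + e^{a})$, VEXP computes $e^{a}$, VAS adds 1, and VDV performs the element-wise division.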
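Because $\text{sigmoid}(a) = e^{a} / (1 + e^{a})$, the bias add and the sigmoid can be chained from four element-wise vector operations. The Python functions below are hypothetical stand-ins named after the mnemonics. They use floating point, whereas the accelerator's 16-bit arithmetic units would behave differently numerically.

```python
import math

def vav(a, b):
    """VAV: element-wise vector addition."""
    return [x + y for x, y in zip(a, b)]

def vexp(a):
    """VEXP: element-wise e^x."""
    return [math.exp(x) for x in a]

def vas(a, s):
    """VAS: add a scalar to every element."""
    return [x + s for x in a]

def vdv(a, b):
    """VDV: element-wise vector division."""
    return [x / y for x, y in zip(a, b)]

def bias_and_sigmoid(wx, b):
    a = vav(wx, b)      # Wx + b (Wx itself comes from MMV)
    e = vexp(a)         # e^a
    d = vas(e, 1.0)     # 1 + e^a
    return vdv(e, d)    # e^a / (1 + e^a) = sigmoid(a)

print(bias_and_sigmoid([0.0, 1.5], [0.0, 0.5]))  # approximately [0.5, 0.8808]
```

This form is fine for moderate inputs. `math.exp` raises `OverflowError` once its argument exceeds roughly 709, so a production implementation would need to handle large inputs differently.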

Logical Instructions

Mainly used for pooling layers.

For example, the Vector Greater Than Merge instruction (VGTM): $V_{out}[i] = (V_{in0}[i] > V_{in1}[i])\ ?\ V_{in0}[i] : V_{in1}[i]$, i.e. an element-wise maximum of two vectors. Merging the vectors for all positions of a pooling window this way yields max pooling.
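Below is a sketch of 2x2 max pooling (stride 2) built from VGTM. The layout is an assumption made for illustration and not taken from the paper. The input is first gathered into four vectors, one per position inside the window, and element k of each vector belongs to output pixel k. Three VGTM merges then produce the result.

```python
def vgtm(vin0, vin1):
    """VGTM: Vout[i] = Vin0[i] if Vin0[i] > Vin1[i] else Vin1[i]."""
    return [a if a > b else b for a, b in zip(vin0, vin1)]

def max_pool_2x2(img):
    """2x2 max pooling with stride 2 on an image with even height/width."""
    h, w = len(img), len(img[0])
    # Gather one vector per position inside the 2x2 window (hypothetical
    # layout, assumed to be produced by data movement beforehand).
    offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]
    vecs = [
        [img[r + dr][c + dc] for r in range(0, h, 2) for c in range(0, w, 2)]
        for dr, dc in offsets
    ]
    out = vecs[0]
    for v in vecs[1:]:
        out = vgtm(out, v)  # 3 VGTM instructions for a 2x2 window
    ow = w // 2
    return [out[i:i + ow] for i in range(0, len(out), ow)]

img = [
    [1, 2, 5, 6],
    [3, 4, 7, 8],
    [9, 10, 13, 14],
    [11, 12, 15, 16],
]
print(max_pool_2x2(img))  # [[4, 8], [12, 16]]
```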

Scalar Instructions

Cambricon Based Accelerator

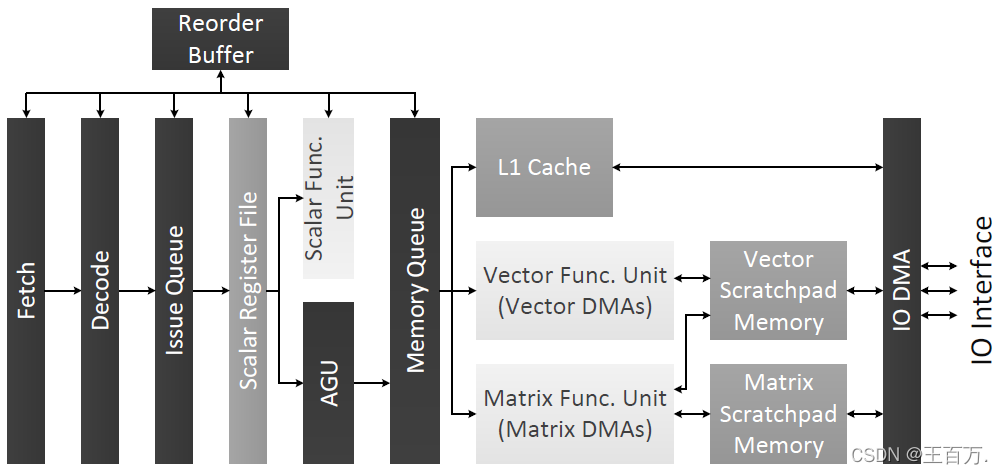

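- Seven major instruction pipeline stages: fetching, decoding, issuing, register reading, execution, writing back, and committing.

- It uses mature DMA and SPM techniques: “A promising ISA must be easy to implement and should not be tightly coupled with emerging techniques.”

- The Issue Queue keeps instruction issue in order, the Memory Queue keeps memory requests in order, and the Reorder Buffer keeps instruction commit in order; all three are buffers.

- After fetch and decode, once the operands (scalar data, or the address/size of vector/matrix data) have been read from the scalar registers (GPRs), instructions are split into separate paths.

- Control instructions and scalar computational/logical instructions are sent to the scalar functional unit for execution. After writing back to the GPRs, they enter the Reorder Buffer to wait for commit.

- Instructions that involve vectors, matrices, or memory accesses (cache/scratchpad memory) are first sent to the AGU to generate addresses. They then pass through the Memory Queue, which resolves data dependences, and are split again: scalar load/store instructions go to the L1 Cache, while vector and matrix instructions go to the Vector Func. Unit and the Matrix Func. Unit respectively. After execution they enter the Reorder Buffer to wait for commit.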

- The vector unit contains 32 16-bit adders, 32 16-bit multipliers, and is equipped with a 64 KB scratchpad memory. The matrix unit contains 1024 multipliers and 1024 adders, which has been divided into 32 separate computational blocks to avoid excessive wire congestion and power consumption on long-distance data movements. Each computational block is equipped with a separate 24 KB scratchpad.
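The instruction flow described in the list above can be summarized as a routing table. This is a simplified sketch. The instruction-class names are hypothetical labels, and the stage names are taken from the description rather than from the paper's exact microarchitecture.

```python
FRONT_END = ["Fetch", "Decode", "Issue Queue", "Register read (GPRs)"]

# Simplified routing after register read, following the description above.
BACK_END = {
    "control":           ["Scalar Func. Unit", "GPR write-back"],
    "scalar_compute":    ["Scalar Func. Unit", "GPR write-back"],
    "scalar_logic":      ["Scalar Func. Unit", "GPR write-back"],
    "scalar_load_store": ["AGU", "Memory Queue", "L1 Cache"],
    "vector":            ["AGU", "Memory Queue", "Vector Func. Unit"],
    "matrix":            ["AGU", "Memory Queue", "Matrix Func. Unit"],
}

def route(instr_class):
    """Structures an instruction passes through, ending at the Reorder Buffer."""
    if instr_class not in BACK_END:
        raise ValueError(f"unknown instruction class {instr_class!r}")
    return FRONT_END + BACK_END[instr_class] + ["Reorder Buffer (commit)"]

print(" -> ".join(route("matrix")))
# Fetch -> Decode -> Issue Queue -> Register read (GPRs) -> AGU -> Memory Queue -> Matrix Func. Unit -> Reorder Buffer (commit)
```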

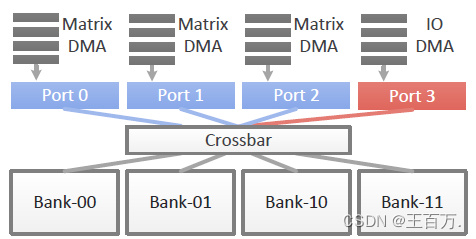

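Finally, I never fully understood the concepts of ports and banks. My understanding is only that the Matrix Scratchpad Memory structure allows parallel read/write accesses. I don't understand the exact mechanism, nor why it is 4 concurrent read/write requests.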
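This is not an answer to why the design uses 4, but the general idea behind banks and ports can be sketched. A bank is an independent memory array. A port is one access path that can carry one read or write per cycle. If the address space is interleaved across banks, requests that fall into different banks can be served in the same cycle, while requests that hit the same bank must wait. The sketch assumes 4 single-ported banks with low-order interleaving (address modulo bank count). This is a generic textbook scheme, not necessarily how Cambricon's Matrix Scratchpad Memory maps addresses.

```python
NUM_BANKS = 4  # assumption, matching the "4 concurrent requests" in the question

def bank_of(addr, num_banks=NUM_BANKS):
    """Low-order interleaving: consecutive words land in consecutive banks."""
    return addr % num_banks

def cycles_to_serve(addrs, num_banks=NUM_BANKS):
    """Cycles needed for a batch of concurrent requests when every bank has a
    single port (one access per cycle): different banks work in parallel,
    requests to the same bank are serialized."""
    per_bank = [0] * num_banks
    for a in addrs:
        per_bank[bank_of(a, num_banks)] += 1
    return max(per_bank)

print(cycles_to_serve([0, 1, 2, 3]))    # 1: four different banks, no conflict
print(cycles_to_serve([0, 4, 8, 12]))   # 4: all hit bank 0
print(cycles_to_serve([0, 1, 4, 5]))    # 2: two requests each in banks 0 and 1
```

Under this model, 4 single-ported banks can serve at most 4 requests per cycle, and only when the requests fall into distinct banks. Adding banks or ports raises that limit, generally at a cost in area and wiring.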