Paper: 3D Bounding Box Estimation Using Deep Learning and Geometry

1. Environment Setup

OS: Ubuntu 18.04

GPU and driver stack: NVIDIA TITAN Xp + CUDA 11.2 + cuDNN 8.2.132

GPU deep learning environment: Python 3.8.10 + tensorflow-gpu 2.5.0 + keras-nightly 2.5.0 + keras-preprocessing 1.1.2

CPU deep learning environment: Python 3.8.12 + tensorflow-cpu 2.7.0 + keras 2.7.0

Other dependencies: graphviz, pydot, opencv-python, ipython, numpy

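Error:

I tensorflow/core/platform/cpu_feature_guard.cc:151] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

Fix: this is an INFO-level log message (note the leading `I`), not an actual error. It can be hidden by raising TensorFlow's C++ log level, which has to be set before `tensorflow` is imported:

    import os
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'  # 2 = hide INFO and WARNING messages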

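Error:

fit_generator() got an unexpected keyword argument 'max_q_size'

Fix: `max_q_size` (the maximum size of the internal training queue) was renamed to `max_queue_size` in Keras 2, so pass `max_queue_size` instead. See the Keras documentation: https://keras.io/zh/models/model/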
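Keras 2 renamed several other `fit_generator()` arguments at the same time as `max_q_size`. The helper below is a hypothetical sketch for porting older training scripts; the rename table reflects my understanding of the Keras 2 changes and is worth double-checking against the Keras 2 release notes. Arguments whose meaning changed (they counted samples, while their replacements count batches) are rejected rather than silently renamed.

```python
# Hypothetical helper: rename Keras 1.x fit_generator() keyword arguments
# to their Keras 2 / tf.keras names. Only one-to-one renames are handled.
KERAS1_TO_KERAS2 = {
    "max_q_size": "max_queue_size",
    "nb_epoch": "epochs",
    "nb_worker": "workers",
    "pickle_safe": "use_multiprocessing",
}

# These counted samples in Keras 1; their Keras 2 replacements
# (steps_per_epoch, validation_steps) count batches, so convert by hand.
CHANGED_MEANING = {"samples_per_epoch", "nb_val_samples"}


def upgrade_fit_kwargs(kwargs):
    """Return a copy of kwargs with Keras 1.x names replaced by Keras 2 names."""
    upgraded = {}
    for name, value in kwargs.items():
        if name in CHANGED_MEANING:
            raise ValueError(f"{name} changed meaning in Keras 2; convert it to steps manually")
        upgraded[KERAS1_TO_KERAS2.get(name, name)] = value
    return upgraded


print(upgrade_fit_kwargs({"max_q_size": 3, "nb_epoch": 500, "verbose": 1}))
# {'max_queue_size': 3, 'epochs': 500, 'verbose': 1}
```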

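Error:

Unable to import SGD and Adam from 'keras.optimizers'

Fix: import the optimizers from `tensorflow.keras.optimizers` instead, e.g. `from tensorflow.keras.optimizers import SGD, Adam` (other optimizers such as `RMSprop` are imported the same way).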

Error:

TypeError: 'range' object does not support item assignment

Fix: in Python 3, `range()` returns an immutable sequence rather than a list, so change `a = range(0,N)` to `a = list(range(0,N))`.
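In Python 2, `range()` returned a list, which is likely what the original code assumed; in Python 3 it is a lazy, immutable sequence. A minimal illustration (the value of `N` is arbitrary); note that `random.shuffle()`, often used on index lists like this, also assigns in place and therefore needs a list too:

```python
import random

N = 5

a = range(0, N)              # Python 3: an immutable, lazy sequence
try:
    a[0] = 10                # item assignment is not supported
except TypeError as err:
    print("range:", err)

a = list(range(0, N))        # materialize as a mutable list
a[0] = 10                    # works now
random.shuffle(a)            # in-place shuffling also requires a list
print(sorted(a))             # [1, 2, 3, 4, 10]
```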

Error:

AttributeError: module 'tensorflow.compat.v2.__internal__.tracking' has no attribute 'no_automatic_dependency_tracking'

Fix: this is a version conflict between the standalone keras package and tensorflow. Importing Keras through tensorflow (`tensorflow.keras`) instead still leaves some modules un-importable, as the session below shows, so the keras version matching the installed tensorflow still has to be installed:

    >>> from tensorflow.keras.models import Sequential
    >>> from tensorflow.keras.layers.core import Flatten, Dense, Dropout, Reshape, Lambda
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
    ModuleNotFoundError: No module named 'tensorflow.keras.layers.core'
    >>> from tensorflow.keras.layers.convolutional import Conv2D, Convolution2D, MaxPooling2D, ZeroPadding2D
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
    ModuleNotFoundError: No module named 'tensorflow.keras.layers.convolutional'
    >>> from tensorflow.keras.optimizers import SGD
    >>> from tensorflow.keras import backend as K
    >>> from IPython.display import SVG
    >>> from tensorflow.keras.utils.vis_utils import model_to_dot
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
    ModuleNotFoundError: No module named 'tensorflow.keras.utils.vis_utils'
    >>> from tensorflow.keras.layers.advanced_activations import LeakyReLU
    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
    ModuleNotFoundError: No module named 'tensorflow.keras.layers.advanced_activations'
    >>> from tensorflow.keras.layers import Input, Dense
    >>> from tensorflow.keras.models import Model
    >>> from tensorflow.keras.callbacks import TensorBoard, EarlyStopping, ModelCheckpoint
    >>> from tensorflow.keras.applications.vgg16 import VGG16
    >>> from tensorflow.keras.preprocessing import image
    >>> from tensorflow.keras.applications.vgg16 import preprocess_input

The classes from the failing submodules are, however, also exported directly by the public namespaces, e.g. `from tensorflow.keras.layers import Flatten, Conv2D, ZeroPadding2D, LeakyReLU` and `from tensorflow.keras.utils import model_to_dot`.

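Error:

Could not load dynamic library 'libcusolver.so.11'; dlerror: libcusolver.so.11: cannot open shared object file: No such file or directory

Fix: the CUDA lib directory only contained libcusolver.so.10, and symlinking libcusolver.so.11 to the .so.10 file still failed. The CUDA version was wrong: install the CUDA version that matches the GPU, and then the tensorflow, Python and keras versions that match that CUDA version.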
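The manual check of which libcusolver versions are present can be scripted. The sketch below lists the versioned copies of a shared library in one directory; `find_shared_libs` is a hypothetical helper, and `/usr/local/cuda/lib64` is an assumed default install path that may differ on your system. If the version suffixes found there differ from the one TensorFlow asks for, the toolkit in that directory is usually not the CUDA version that TensorFlow build expects.

```python
import glob
import os


def find_shared_libs(lib_dir, name):
    """List versioned copies of a shared library (e.g. libcusolver.so.*) in lib_dir."""
    pattern = os.path.join(lib_dir, name + ".so*")
    return sorted(os.path.basename(p) for p in glob.glob(pattern))


if __name__ == "__main__":
    # Assumed default CUDA install location; adjust to your system.
    print(find_shared_libs("/usr/local/cuda/lib64", "libcusolver"))
```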

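Error:

Invalid argument: TypeError: 'NoneType' object is not subscriptable

Fix: the error comes from the line below. One image could not be read, so `img` was `None`. Opening that image from the dataset showed no obvious problem, so for now the image was moved out of the dataset, after which the code ran successfully.

    img = copy.deepcopy(img[ymin:ymax+1,xmin:xmax+1]).astype(np.float32)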
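Rather than discovering an unreadable file through this error, the crop step can check for `None` first and name the offending file. This is a sketch assuming images are loaded with `cv2.imread()`, which returns `None` instead of raising when a file cannot be read; `crop_box` is a hypothetical helper, and a zero array stands in for a real image so the example runs without OpenCV.

```python
import numpy as np


def crop_box(img, xmin, ymin, xmax, ymax, path="<unknown>"):
    """Crop a 2D box (inclusive pixel coordinates) from an image array.

    Raises a clear error when img is None (e.g. cv2.imread() failed),
    instead of the later "'NoneType' object is not subscriptable".
    """
    if img is None:
        raise OSError(f"could not read image: {path}")
    # astype() returns a new array, so the crop does not alias img.
    return img[ymin:ymax + 1, xmin:xmax + 1].astype(np.float32)


img = np.zeros((10, 20, 3), dtype=np.uint8)   # stand-in for a loaded image
patch = crop_box(img, xmin=2, ymin=3, xmax=5, ymax=7)
print(patch.shape, patch.dtype)  # (5, 4, 3) float32
```

In a data loader, catching this `OSError` and logging the path makes it easy to collect all bad files in one pass instead of removing them one at a time.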

Warning:

calling l2_normalize (from tensorflow.python.ops.nn_impl) with dim is deprecated and will be removed in a future version. Instructions for updating: dim is deprecated, use axis instead

Fix: pass `axis` instead of `dim`: `tf.nn.l2_normalize(x, axis=2)`
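For the orientation output of shape (batch, BIN, 2), `axis=2` normalizes each bin's 2-vector separately. The NumPy function below is a sketch that mirrors the formula documented for `tf.nn.l2_normalize`, x / sqrt(max(sum(x**2, axis), epsilon)), so the behaviour can be checked without TensorFlow; note that an all-zero pair stays zero instead of producing NaN.

```python
import numpy as np


def l2_normalize(x, axis=2, epsilon=1e-12):
    """NumPy mirror of tf.nn.l2_normalize: x / sqrt(max(sum(x**2, axis), epsilon))."""
    square_sum = np.sum(np.square(x), axis=axis, keepdims=True)
    return x / np.sqrt(np.maximum(square_sum, epsilon))


# Shape (batch=1, BIN=2, 2): one (cos, sin)-like pair per bin.
x = np.array([[[3.0, 4.0], [0.0, 0.0]]])
y = l2_normalize(x)
print(y)  # first pair becomes (0.6, 0.8); the zero pair stays zero
```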

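Warning:

WARNING:tensorflow:'period' argument is deprecated. Please use 'save_freq' to specify the frequency in number of batches seen.

Fix: replace `period` with `save_freq`. An integer `save_freq` counts batches, not epochs, so the equivalent of `period=1` (check once per epoch) is `save_freq='epoch'`. With `save_freq=1` the checkpoint would be evaluated after every batch, where `val_loss` is not yet available:

    checkpoint = ModelCheckpoint('weights_20111219.hdf5', monitor='val_loss', verbose=1, save_best_only=True, mode='min', save_freq='epoch')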
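When porting other `period` values, the conversion is simple arithmetic: `period=k` epochs corresponds to `k * steps_per_epoch` batches. The helper below is a hypothetical sketch of that mapping. Keep in mind that batch-based saving runs before validation, so for `monitor='val_loss'` with `save_best_only=True`, `'epoch'` is the setting that actually works.

```python
def period_to_save_freq(period, steps_per_epoch):
    """Translate the old ModelCheckpoint `period` (in epochs) into `save_freq`.

    save_freq='epoch' saves at the end of every epoch; an integer save_freq
    counts batches, so period=k maps to k * steps_per_epoch batches.
    """
    if period == 1:
        return "epoch"
    return period * steps_per_epoch


print(period_to_save_freq(1, 2000))  # epoch
print(period_to_save_freq(5, 2000))  # 10000
```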

Warning:

UserWarning: The 'lr' argument is deprecated, use 'learning_rate' instead.

Fix: rename the argument: `minimizer = SGD(learning_rate=0.0001)`

Warning:

UserWarning: 'Model.fit_generator' is deprecated and will be removed in a future version. Please use 'Model.fit', which supports generators.

Fix: call `model.fit()` with the generator directly; the remaining arguments carry over:

    model.fit(train_gen,
              steps_per_epoch=2000,  # np.floor(all_exams/batch_size)
              epochs=500,
              verbose=1,
              callbacks=[early_stop, checkpoint, tensorboard],
              validation_data=valid_gen,
              validation_steps=valid_num,
              class_weight=None,
              max_queue_size=3,
              workers=1,
              use_multiprocessing=False,
              shuffle=True,  # ignored by fit() when the input is a generator
              initial_epoch=0)
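`steps_per_epoch` and `validation_steps` count batches, not samples. Below is a minimal pure-Python sketch of a generator suitable for `fit()` together with the matching step count; `batch_generator` and `steps_for` are hypothetical names, and a real generator would yield `(images, [dimension, orientation, confidence])` batches instead of plain lists. If `valid_num` in the call above is a number of images rather than batches (the post does not say), `validation_steps` would be `steps_for(valid_num, batch_size)`.

```python
import math
import random


def steps_for(num_samples, batch_size):
    """Batches needed to cover num_samples once (the last batch may be partial)."""
    return math.ceil(num_samples / batch_size)


def batch_generator(samples, batch_size, shuffle=True):
    """Yield lists of samples forever, reshuffling at the start of each pass.

    fit() ignores its own `shuffle` argument for generators, so any
    shuffling has to happen here.
    """
    samples = list(samples)
    while True:
        if shuffle:
            random.shuffle(samples)
        for start in range(0, len(samples), batch_size):
            yield samples[start:start + batch_size]


gen = batch_generator(range(20), batch_size=8)
print(steps_for(20, 8))                      # 3
print([len(next(gen)) for _ in range(3)])    # [8, 8, 4]
```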

2. Network Construction

Network architecture

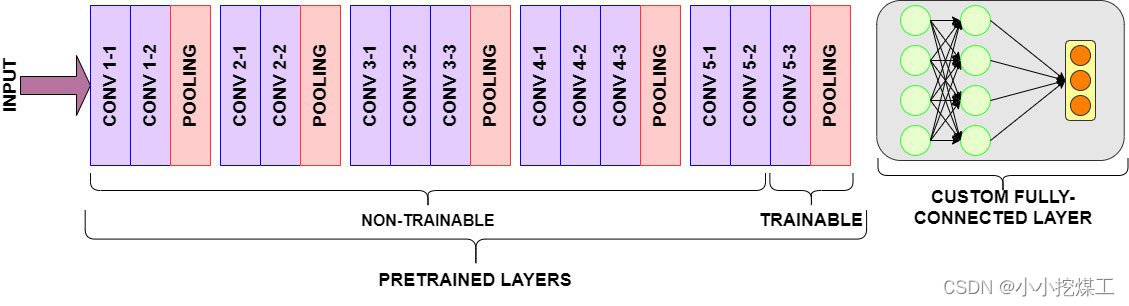

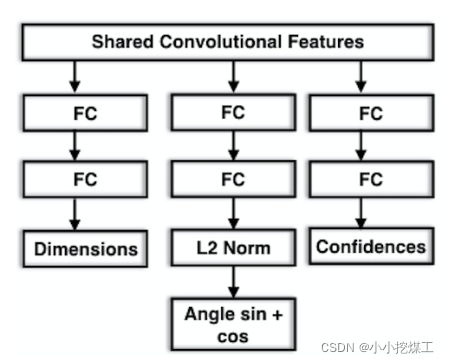

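Transfer learning is used: a VGG16 network pretrained on ImageNet extracts the image features, and the weights of some of its convolutional layers are retrained to adapt to the new dataset. VGG16's fully connected layers are removed and replaced by the fully connected output layers described in the paper.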
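Retraining only the last k VGG16 layers, as in the experiments further down, can be written as a small helper. This is a sketch with a hypothetical name (`unfreeze_last`); it only touches the standard Keras `trainable` flag, so stand-in objects are used here to keep it runnable without TensorFlow.

```python
from types import SimpleNamespace


def unfreeze_last(layers, n_trainable):
    """Freeze all layers except the last n_trainable ones (hypothetical helper).

    Works on any objects with a boolean `trainable` attribute, e.g. the
    entries of a Keras `base_model.layers` list. n_trainable=0 freezes
    everything; n_trainable >= len(layers) leaves everything trainable.
    """
    n_frozen = max(len(layers) - n_trainable, 0)
    for i, layer in enumerate(layers):
        layer.trainable = i >= n_frozen
    return layers


# Stand-in layers so the sketch runs without TensorFlow.
layers = [SimpleNamespace(name=f"layer{i}", trainable=True) for i in range(6)]
unfreeze_last(layers, 2)
print([layer.trainable for layer in layers])  # [False, False, False, False, True, True]
```

With Keras this would be called as `unfreeze_last(base_model.layers, k)` before `model.compile(...)`, since changes to `trainable` only take effect once the model is compiled (again). VGG16's layer list also contains the input and pooling layers, which have no weights, so "the last k layers" is not the same as "the last k convolutional layers".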


Input: cropped and resized image

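Read the ground-truth txt files and use the 2D ground-truth boxes of vehicles (Car, Van, Truck) whose truncated and occluded values are both below 0.1 as the 2D detection boxes. Crop the image with each box and resize the crop to the input size; the input can also be flipped horizontally for data augmentation.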

Size: 224×224×3
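A sketch of the label filtering and flip described above. It assumes the standard KITTI object label layout (type, truncated, occluded, alpha, 2D box, 3D dimensions, location, rotation_y) and, following my reading of the reference implementations listed at the end, keeps objects whose truncation and occlusion are both below the threshold. The flip uses the 2π − heading rule from the training log, which assumes the angle convention of those implementations. `VEHICLES`, `parse_kitti_line`, `keep` and `flip_heading` are hypothetical names, and the sample line only illustrates the format.

```python
import math

VEHICLES = {"Car", "Van", "Truck"}


def parse_kitti_line(line):
    """Parse one line of a KITTI object label file into a dict."""
    f = line.split()
    return {
        "type": f[0],
        "truncated": float(f[1]),
        "occluded": int(float(f[2])),
        "alpha": float(f[3]),
        "box2d": tuple(int(round(float(v))) for v in f[4:8]),  # xmin, ymin, xmax, ymax
        "dims": tuple(float(v) for v in f[8:11]),              # height, width, length (m)
    }


def keep(obj, max_truncated=0.1, max_occluded=0.1):
    """Assumed filter: vehicles that are (almost) neither truncated nor occluded."""
    return (obj["type"] in VEHICLES
            and obj["truncated"] < max_truncated
            and obj["occluded"] < max_occluded)


def flip_heading(heading):
    """Heading after a horizontal flip, per the 2*pi - heading rule, in [0, 2*pi)."""
    return (2 * math.pi - heading) % (2 * math.pi)


line = "Car 0.00 0 -1.58 587.01 173.33 614.12 200.12 1.65 1.67 3.64 -0.65 1.71 46.70 -1.59"
obj = parse_kitti_line(line)
print(obj["box2d"], keep(obj))  # (587, 173, 614, 200) True
```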

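Output: a multi-task network whose outputs all serve the 3D detection: regression of the 3D dimensions, regression of the heading angle, and the confidence of each heading-angle bin.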

Sizes: dimensions 3, heading BIN×2, confidence BIN

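Note: since a vehicle's dimensions are strongly correlated with its class, the dimensions output is the difference between the vehicle's actual dimensions and the average dimensions of its class.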
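A sketch of how such dimension targets could be built and decoded again at inference time. The helper names are hypothetical, and the toy objects stand in for the class averages that would normally be computed over all training labels.

```python
def class_average_dims(objects):
    """Mean (height, width, length) per class over a list of (cls, dims) pairs."""
    sums, counts = {}, {}
    for cls, dims in objects:
        acc = sums.setdefault(cls, [0.0, 0.0, 0.0])
        for i, d in enumerate(dims):
            acc[i] += d
        counts[cls] = counts.get(cls, 0) + 1
    return {cls: tuple(s / counts[cls] for s in acc) for cls, acc in sums.items()}


def dims_residual(cls, dims, averages):
    """Regression target: actual dimensions minus the class average."""
    return tuple(d - a for d, a in zip(dims, averages[cls]))


def dims_from_residual(cls, residual, averages):
    """Invert dims_residual: add the class average back to the prediction."""
    return tuple(r + a for r, a in zip(residual, averages[cls]))


objs = [("Car", (1.5, 1.6, 4.0)), ("Car", (1.7, 1.8, 4.4)), ("Van", (2.2, 1.9, 5.0))]
avg = class_average_dims(objs)
target = dims_residual("Car", (1.5, 1.6, 4.0), avg)
print(avg["Car"], target)
```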

Loss functions

dimension_loss: mean squared error

confidence_loss: the paper states: "The confidence loss L_conf is equal to the softmax loss of the confidences of each bin."

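Following other reproductions, confidence_loss was set up as follows: the output layer uses a softmax activation, and mean squared error is applied directly to its output.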

This deviates from the definition of the softmax loss. Keras's categorical_crossentropy could perhaps also be used as the confidence loss.

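To see numerically how these options differ, the sketch below compares, for BIN = 2, the softmax (cross-entropy) loss with MSE on the softmax outputs, and checks that the softmax_loss implementation that follows (mean over all elements, times 2) equals the per-sample cross-entropy averaged over the batch. Plain Python stands in for TensorFlow, and all function names are illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(y_true, probs, eps=1e-8):
    """Softmax loss for one sample: -sum(t * log p), with p clipped to [eps, 1]."""
    return -sum(t * math.log(min(max(p, eps), 1.0)) for t, p in zip(y_true, probs))


def mse(y_true, probs):
    """MSE on the softmax outputs (the variant used in other reproductions)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, probs)) / len(y_true)


def mean_times_two_loss(batch_true, batch_probs, eps=1e-8):
    """Pure-Python mirror of softmax_loss: mean over all batch*BIN terms, times 2."""
    terms = [-t * math.log(min(max(p, eps), 1.0))
             for ts, ps in zip(batch_true, batch_probs)
             for t, p in zip(ts, ps)]
    return sum(terms) / len(terms) * 2


y_true = [1.0, 0.0]                      # ground truth in bin 0, BIN = 2
for logits in ([4.0, 0.0], [0.0, 4.0]):  # confidently right vs confidently wrong
    p = softmax(logits)
    print(f"p(bin 0)={p[0]:.3f}  cross-entropy={cross_entropy(y_true, p):.3f}  mse={mse(y_true, p):.3f}")

batch_true = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
batch_probs = [softmax([2.0, -1.0]), softmax([0.3, 0.1]), softmax([1.0, 1.0])]
mean_ce = sum(cross_entropy(t, p) for t, p in zip(batch_true, batch_probs)) / len(batch_true)
print(mean_times_two_loss(batch_true, batch_probs), mean_ce)  # equal when BIN = 2
```

For a confidently wrong prediction (p ≈ 0.018 for the true bin) the cross-entropy is about 4.0 and keeps growing, while the MSE over two bins can never exceed 1, so cross-entropy pushes much harder on misclassified bins. Keras's categorical_crossentropy computes the same per-sample -sum(t * log p), with its own clipping.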

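A loss function implemented according to the definition of the softmax loss:

    import tensorflow as tf

    def softmax_loss(y_true, y_pred):
        # Clip to avoid log(0) = -inf, which produced NaN losses during training
        loss = - y_true * tf.math.log(tf.clip_by_value(y_pred, 1e-8, 1.0))
        # loss = tf.reduce_sum(loss, axis=1)
        # Mean over all batch*BIN entries; the factor 2 (= BIN) turns it into the
        # per-sample sum over bins, i.e. the cross-entropy averaged over the batch
        loss = tf.reduce_mean(loss) * 2
        return loss

For orientation_loss, the paper mentions an L2 loss, implemented as follows: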

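    def orientation_loss(y_true, y_pred):
        # l2_normalize is assumed to wrap tf.nn.l2_normalize(x, axis=2),
        # making each bin's (cos, sin) pair unit length
        y_pred = l2_normalize(y_pred)
        y_true = l2_normalize(y_true)
        loss = tf.square(y_true[:,:,0]-y_pred[:,:,0]) + tf.square(y_true[:,:,1]-y_pred[:,:,1])
        return tf.reduce_mean(loss)

After several training runs, the orientation loss always stayed at about 0.55 (for unit vectors, each term of this loss lies in [0, 4]).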

Next, cosine similarity was tried as the loss function. The implementation:

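    def cosine_similarity(y_true, y_pred):
        y_pred = l2_normalize(y_pred)
        y_true = l2_normalize(y_true)
        # Negative cosine similarity per bin: -1 = same direction, 1 = opposite
        loss = -(y_true[:,:,0]*y_pred[:,:,0] + y_true[:,:,1]*y_pred[:,:,1])
        # Map [-1, 1] to [0, 1]; smaller means more similar
        return (tf.reduce_mean(loss)+1)/2

This loss (the negated cosine similarity) lies in [-1, 1]: -1 means the vectors point in the same direction, 0 means they are perpendicular, and 1 means they point in opposite directions. Without the normalization, the loss at the end of training stayed around -0.9. A negative loss value does not by itself disturb backpropagation, since only the gradient is used; the mapping (x + 1) / 2 merely shifts the loss into [0, 1], where smaller means more similar, and halves its gradient. With the normalization, however, the trained loss was about 0.55, similar to the L2 loss above and roughly what random predictions would give.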
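The two orientation losses are closely related. For unit vectors a and b at angle Δθ, |a - b|² = |a|² + |b|² - 2·a·b = 2 - 2·cos Δθ, while the normalized cosine loss is (1 - cos Δθ) / 2, exactly one quarter of the L2 term. The sketch below checks this identity per bin in plain Python; the function names are illustrative.

```python
import math


def l2_term(a, b):
    """Squared distance between two 2D vectors (one bin of orientation_loss)."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def cosine_term(a, b):
    """Normalized negative cosine similarity, (1 - a.b) / 2, for one bin."""
    return (-(a[0] * b[0] + a[1] * b[1]) + 1) / 2


for true_angle, pred_angle in [(0.0, 0.0), (0.3, 1.2), (0.0, math.pi)]:
    a = (math.cos(true_angle), math.sin(true_angle))
    b = (math.cos(pred_angle), math.sin(pred_angle))
    delta = pred_angle - true_angle
    # For unit vectors: |a - b|^2 = 2 - 2*cos(delta) = 4 * cosine_term
    print(f"delta={delta:.2f}  L2={l2_term(a, b):.3f}  "
          f"2-2cos={2 - 2 * math.cos(delta):.3f}  cosine={cosine_term(a, b):.3f}")
```

The identity only holds when both vectors have unit length. A bin whose target is the zero vector (for instance a bin that does not cover the ground-truth angle, if the targets are encoded that way) contributes |b|² = 1 to the L2 loss but 0.5 to the normalized cosine loss, so the values reported by the two losses are not directly comparable.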

Network parameters

Training parameters given in the paper:

Overlap: 0.1

Learning Rate: 0.0001

Optimizer: SGD

Iterations: 20K

Batch Size: 8

Best Model: chosen by cross-validation

Parameters that the paper compared and that gave good results:

Bins: 2

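FC width of orientation: 256

For the number of VGG16 layers unfrozen for transfer learning, 0, 2, 4 and 8 were tried; in every case the validation loss stopped improving and EarlyStopping ended training before epoch 50. With few unfrozen layers the dimension loss was large, and unfreezing more layers lowered it noticeably. Even with all layers unfrozen, EarlyStopping still triggered before epoch 50, while the orientation and confidence losses were still high.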
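The Bins and Overlap parameters determine the orientation and confidence targets. Below is a sketch of one possible MultiBin encoding with BIN = 2 and overlap 0.1, plus the matching decoding (most confident bin's center plus its predicted offset). The bin placement (centers at (i + 0.5) · 2π / BIN) and treating the overlap as radians added to half a bin width are assumptions of this sketch; the reference implementations listed at the end use their own conventions, and all names are hypothetical.

```python
import math

TWO_PI = 2 * math.pi


def wrap(angle):
    """Map an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def bin_centers(n_bins):
    """Bin i is assumed to span [i*w, (i+1)*w) with w = 2*pi / n_bins."""
    width = TWO_PI / n_bins
    return [(i + 0.5) * width for i in range(n_bins)]


def encode(angle, n_bins=2, overlap=0.1):
    """MultiBin targets for one angle (radians).

    orientation[i] = (cos r, sin r), with r the offset from bin i's center, for
    every bin covering the angle, and (0, 0) otherwise. A bin covers the angle
    when |r| <= half a bin width + overlap (overlap assumed to be in radians).
    confidence marks the covering bins and is normalized to sum to 1.
    """
    half_width = math.pi / n_bins
    orientation, confidence = [], []
    for center in bin_centers(n_bins):
        r = wrap(angle - center)
        if abs(r) <= half_width + overlap:
            orientation.append((math.cos(r), math.sin(r)))
            confidence.append(1.0)
        else:
            orientation.append((0.0, 0.0))
            confidence.append(0.0)
    total = sum(confidence)  # > 0: the bins tile the whole circle
    return orientation, [c / total for c in confidence]


def decode(orientation, confidence):
    """Angle in [0, 2*pi): most confident bin's center plus its predicted offset."""
    n_bins = len(confidence)
    i = max(range(n_bins), key=lambda k: confidence[k])
    cos_r, sin_r = orientation[i]
    return (bin_centers(n_bins)[i] + math.atan2(sin_r, cos_r)) % TWO_PI


orientation, confidence = encode(math.pi)
print(confidence)                       # [0.5, 0.5]: pi lies in both bins' overlap
print(decode(orientation, confidence))  # recovers pi
```

With BIN = 2 the targets have the shapes listed for the network outputs: orientation (BIN, 2) and confidence (BIN,).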

Network architecture

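The network built from the VGG16 convolutional layers plus the MultiBin FC layers is as follows:

    import tensorflow as tf
    from tensorflow.keras.applications.vgg16 import VGG16
    from tensorflow.keras.layers import Dense, Dropout, Flatten, Input, Lambda, LeakyReLU, Reshape
    from tensorflow.keras.models import Model

    BIN = 2  # number of orientation bins (assumed: Bins = 2 as in the parameters above)

    def l2_normalize(x):
        # Assumed helper: normalize each bin's 2-vector to unit length
        return tf.nn.l2_normalize(x, axis=2)

    def net_construct():
        # Construct the network
        # Use vgg-16 to get feature maps of images
        inputs = Input(shape=(224,224,3))
        base_model = VGG16(input_tensor=inputs, weights='imagenet', include_top=False)
        # for i, layer in enumerate(base_model.layers):
        #     if(i <= 6):
        #         layer.trainable = False
        x = base_model.output
        x = Flatten()(x)

        # Dimension head: residual w.r.t. the class-average dimensions
        dimension = Dense(512)(x)
        dimension = LeakyReLU(alpha=0.1)(dimension)
        dimension = Dropout(0.5)(dimension)
        dimension = Dense(3)(dimension)
        dimension = LeakyReLU(alpha=0.1, name='dimension')(dimension)

        # Orientation head: one (cos, sin) pair per bin, normalized to unit length
        orientation = Dense(256)(x)
        orientation = LeakyReLU(alpha=0.1)(orientation)
        orientation = Dropout(0.5)(orientation)
        orientation = Dense(BIN*2)(orientation)
        orientation = LeakyReLU(alpha=0.1)(orientation)
        orientation = Reshape((BIN,-1))(orientation)
        orientation = Lambda(l2_normalize, name='orientation')(orientation)

        # Confidence head: softmax over the bins
        confidence = Dense(256)(x)
        confidence = LeakyReLU(alpha=0.1)(confidence)
        confidence = Dropout(0.5)(confidence)
        confidence = Dense(BIN, activation='softmax', name='confidence')(confidence)

        model = Model(inputs=base_model.input, outputs=[dimension, orientation, confidence])
        return model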

3. Training Log

- Changed early_stop's monitor to val_loss.
  - Early stop epoch: 10
- Changed early_stop's patience to 25 and dimension's loss weight to 0.1.
  - Early stop epoch: 25
- Unfroze the last 2 layers of VGG16, changed dimension's loss weight back to 1 and early_stop's patience back to 10.
  - Early stop epoch: 27
  - val_loss: -0.3106 - val_dimension_loss: 0.3855 - val_orientation_loss: -0.9416 - val_confidence_loss: 0.2455
- Changed the orientation loss and unfroze the last 4 layers.
  - val_loss: 1.7189 - val_dimension_loss: 0.9101 - val_orientation_loss: 0.5619 - val_confidence_loss: 0.2469
  - Epoch 00035: early stopping
- Unfroze the last 8 layers and changed the loss weights to 4, 8 and 1.
  - val_loss: 7.3961 - val_dimension_loss: 0.6738 - val_orientation_loss: 0.5569 - val_confidence_loss: 0.2454
  - Epoch 00012: early stopping
- Unfroze the last 8 layers and changed the loss weights to 1, 1 and 1.
  - val_loss: 1.4236 - val_dimension_loss: 0.6122 - val_orientation_loss: 0.5651 - val_confidence_loss: 0.2463
  - Epoch 00036: early stopping
- Unfroze all layers, without image flipping.
  - val_loss: 1.1356 - val_dimension_loss: 0.3449 - val_orientation_loss: 0.5504 - val_confidence_loss: 0.2403
  - Epoch 10/500: stopped with a keyboard interrupt
- Unfroze all layers, added image flipping with the angle set to 2*pi - heading, and used the original orientation_loss.
  - 0.2448 - val_loss: 0.0766 - val_dimension_loss: 0.7772 - val_orientation_loss: -0.9446 - val_confidence_loss: 0.2440
  - Epoch 00029: early stopping
- Unfroze all layers without image flipping and used the cosine similarity loss for orientation.
  - Using tf.math.log() in the confidence loss produced NaN; zero values were clipped with tf.clip_by_value(y_pred, 1e-8, 1.0).
  - val_loss: 1.3190 - val_dimension_loss: 0.3760 - val_orientation_loss: 0.2624 - val_confidence_loss: 0.6805
  - Epoch 00021: early stopping
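As a consistency check on the log: Keras reports val_loss as the loss_weights-weighted sum of the per-output losses (plus any regularization losses, none here). Recomputing it from the logged components reproduces every reported total to within rounding, which also confirms that the weights 4, 8 and 1 apply to dimension, orientation and confidence in that order. The sketch assumes weights of 1 wherever the log does not say otherwise; the run labels are shortened paraphrases of the log entries.

```python
# (run, loss weights (dimension, orientation, confidence),
#  logged per-output val losses, logged val_loss) copied from the log above
runs = [
    ("last 2 layers", (1, 1, 1), (0.3855, -0.9416, 0.2455), -0.3106),
    ("last 4 layers", (1, 1, 1), (0.9101, 0.5619, 0.2469), 1.7189),
    ("last 8 layers, 4/8/1", (4, 8, 1), (0.6738, 0.5569, 0.2454), 7.3961),
    ("last 8 layers, 1/1/1", (1, 1, 1), (0.6122, 0.5651, 0.2463), 1.4236),
    ("all layers, no flip", (1, 1, 1), (0.3449, 0.5504, 0.2403), 1.1356),
    ("all layers, flip", (1, 1, 1), (0.7772, -0.9446, 0.2440), 0.0766),
    ("all layers, cosine", (1, 1, 1), (0.3760, 0.2624, 0.6805), 1.3190),
]

for name, weights, losses, logged in runs:
    total = sum(w * l for w, l in zip(weights, losses))
    print(f"{name:22s} recomputed={total:8.4f}  logged={logged:8.4f}")
```

One consequence: because the totals mix different loss functions and weights (the unnormalized cosine loss is even negative), val_loss values from different runs, and the early-stopping decisions based on them, cannot be compared directly; the per-output losses are the more meaningful columns.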

References:

https://keras.io/zh/#_2

https://zhuanlan.zhihu.com/p/34044634

The reference link for the VGG16 transfer-learning figure will be added later.

https://github.com/shashwat14/Multibin

https://github.com/smallcorgi/3D-Deepbox

https://github.com/experiencor/image-to-3d-bbox