
1. Gradient descent by hand

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

# Load the data

data=np.genfromtxt("/root/jupyter_projects/data/fangjia1.csv",delimiter=',')

# x_data=data[0:,0]  # split the data: all rows, column 0

x_data=data[:,0]  # equivalent to the line above

y_data=data[:,1]

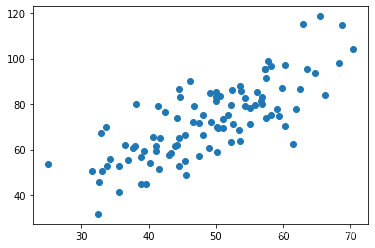

plt.scatter(x_data,y_data)  # draw a scatter plot

plt.show()

# Learning rate

lr=0.0001

# Intercept

theta_0=0

# Slope

theta_1=0

# Maximum number of iterations

epochs=50

$$J(\theta_0,\theta_1)=\frac{1}{2m}\sum_{i=1}^{m}\left(\hat{y}^{(i)}-y^{(i)}\right)^{2}=\frac{1}{2m}\sum_{i=1}^{m}\left(h_{\theta}(x^{(i)})-y^{(i)}\right)^{2}$$

# Least squares

# Compute the cost function

def compute_error(theta_0,theta_1,x_data,y_data):
    totalError=0
    for i in range(0,len(x_data)):
        # accumulate the squared residual of sample i
        totalError+=(theta_1*x_data[i]+theta_0-y_data[i])**2
    return totalError/float(2*len(x_data))
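As a cross-check on the loop above, the same cost can be written with NumPy array operations. This is a minimal sketch: the name `compute_error_vectorized` and the toy data are assumptions for illustration and are not taken from `fangjia1.csv`.

```python
import numpy as np

def compute_error_vectorized(theta_0, theta_1, x, y):
    """Cost J = 1/(2m) * sum((theta_1*x + theta_0 - y)^2), without a Python loop."""
    residuals = theta_1 * x + theta_0 - y
    return np.sum(residuals ** 2) / (2 * len(x))

# Hypothetical toy data: four points lying exactly on y = 2x + 1
x_demo = np.array([0.0, 1.0, 2.0, 3.0])
y_demo = 2.0 * x_demo + 1.0

# The true parameters give zero cost; the all-zero start gives a positive cost
cost_perfect = compute_error_vectorized(1.0, 2.0, x_demo, y_demo)
cost_zero = compute_error_vectorized(0.0, 0.0, x_demo, y_demo)
print(cost_perfect, cost_zero)
```

On arrays of the same length, this should return the same value as `compute_error`, up to floating-point rounding.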

Take the partial derivatives of the cost function:

$$\frac{\partial}{\partial\theta_j}J(\theta_0,\theta_1)=\frac{\partial}{\partial\theta_j}\frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)})-y^{(i)}\right)^{2}$$

For $\theta_0$ ($j=0$):

$$\frac{\partial}{\partial\theta_0}J(\theta_0,\theta_1)=\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)})-y^{(i)}\right)$$

For $\theta_1$ ($j=1$):

$$\frac{\partial}{\partial\theta_1}J(\theta_0,\theta_1)=\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)})-y^{(i)}\right)\cdot x^{(i)}$$
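One way to gain confidence in the two partial derivatives above is to compare them with a finite-difference approximation of the cost. The sketch below assumes the linear hypothesis h(x) = theta_0 + theta_1 * x used in the code of this post. The helper names and the synthetic data are hypothetical.

```python
import numpy as np

def cost(theta_0, theta_1, x, y):
    """J = 1/(2m) * sum of squared residuals."""
    return np.sum((theta_1 * x + theta_0 - y) ** 2) / (2 * len(x))

def analytic_gradient(theta_0, theta_1, x, y):
    """Partial derivatives of J from the formulas: mean(residual) and mean(residual * x)."""
    residuals = theta_1 * x + theta_0 - y
    return residuals.mean(), (residuals * x).mean()

def numeric_gradient(theta_0, theta_1, x, y, eps=1e-6):
    """Central-difference approximation of the same two partial derivatives."""
    g0 = (cost(theta_0 + eps, theta_1, x, y) - cost(theta_0 - eps, theta_1, x, y)) / (2 * eps)
    g1 = (cost(theta_0, theta_1 + eps, x, y) - cost(theta_0, theta_1 - eps, x, y)) / (2 * eps)
    return g0, g1

# Synthetic data (an assumption, not the post's CSV): noisy points around y = 3x + 2
rng = np.random.default_rng(0)
x_demo = rng.uniform(0, 10, size=50)
y_demo = 3.0 * x_demo + 2.0 + rng.normal(0, 1, size=50)

g_analytic = analytic_gradient(0.5, 1.5, x_demo, y_demo)
g_numeric = numeric_gradient(0.5, 1.5, x_demo, y_demo)
print(g_analytic, g_numeric)
```

If the two pairs agree to several decimal places, the derivation is very likely correct.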

Apply gradient descent to update the current values repeatedly, with learning rate $\alpha$:

$$\theta_0:=\theta_0-\alpha\frac{\partial}{\partial\theta_0}J(\theta_0,\theta_1)=\theta_0-\alpha\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)})-y^{(i)}\right)$$

$$\theta_1:=\theta_1-\alpha\frac{\partial}{\partial\theta_1}J(\theta_0,\theta_1)=\theta_1-\alpha\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)})-y^{(i)}\right)\cdot x^{(i)}$$

#梯度下降

def gradient_descent_runner(x_data,y_data,theta_0,theta_1,lr,epochs):

m=len(x_data)

#达到最大迭代次数后停止

for i in range(epochs):

theta_0_grad=0

theta_1_grad=0#求梯度该变量

for j in range(0,len(x_data)):

theta_0_grad+=(1/m)*((theta_1*x_data[j]+theta_0)-y_data[j])

theta_1_grad+=(1/m)*((theta_1*x_data[j]+theta_0)-y_data[j])*x_data[j]

theta_0=theta_0-(theta_0_grad*lr)#同时更新参数模型

theta_1=theta_1-(theta_1_grad*lr)

#没迭代5次更新一次图像

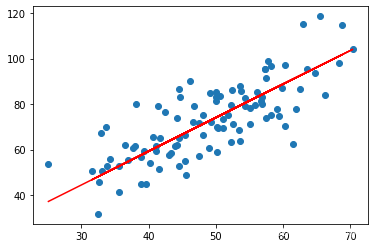

if i%5==0:

print("epochs:",i)

plt.scatter(x_data,y_data)

plt.plot(x_data,theta_1*x_data+theta_0,'r')

plt.show()

return theta_0,theta_1
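The inner loop over samples can also be expressed with NumPy array operations, which is usually shorter and faster. The following is a sketch under the same update rule, with plotting left out. The function name `gradient_descent_vectorized`, the toy data, and the learning rate used here are assumptions chosen for the demonstration.

```python
import numpy as np

def gradient_descent_vectorized(x, y, theta_0, theta_1, lr, epochs):
    """Batch gradient descent for h(x) = theta_0 + theta_1 * x."""
    for _ in range(epochs):
        residuals = theta_1 * x + theta_0 - y
        # Both gradients are computed from the old parameters before either is updated
        grad_0 = residuals.mean()
        grad_1 = (residuals * x).mean()
        theta_0 = theta_0 - lr * grad_0
        theta_1 = theta_1 - lr * grad_1
    return theta_0, theta_1

# Hypothetical noise-free data on y = 2x + 1, with x scaled to [0, 1]
x_demo = np.linspace(0, 1, 20)
y_demo = 2 * x_demo + 1

t0, t1 = gradient_descent_vectorized(x_demo, y_demo, 0.0, 0.0, lr=0.5, epochs=2000)
print(t0, t1)
```

The larger learning rate works here only because the toy inputs are small. With unscaled inputs such as those in the post, a much smaller value like `lr=0.0001` is needed to keep the updates from diverging.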

print("starting theta_0={0},theta_1={1},error={2}".format(theta_0,theta_1,compute_error(theta_0,theta_1,x_data,y_data)))

print('running')

theta_0,theta_1=gradient_descent_runner(x_data,y_data,theta_0,theta_1,lr,epochs)

print("end{0} theta_0={1},theta_1={2},error={3}".format(epochs,theta_0,theta_1,compute_error(theta_0,theta_1,x_data,y_data)))

# Plot

# plt.scatter(x_data,y_data)

# plt.plot(x_data,theta_1*x_data+theta_0,'r')

# plt.show()

starting theta_0=0,theta_1=0,error=2782.5539172416056

running

epochs: 0

epochs: 10

epochs: 20

![output_7_9.png](https://img-blog.csdnimg.cn/08aadcf4a1c84e328e56c8d6ea835929.png?x-oss-process=image/watermark,type_d3F5LXplbmhlaQ,shadow_50,text_Q1NETiBA5piv5b-Y55Sf5ZWK,size_12,color_FFFFFF,t_70,g_se,x_16)

epochs: 30

epochs: 40

![output_7_17.png](https://img-blog.csdnimg.cn/2b1b6af72a574defad0b2a6a8d6511a5.png?x-oss-process=image/watermark,type_d3F5LXplbmhlaQ,shadow_50,text_Q1NETiBA5piv5b-Y55Sf5ZWK,size_12,color_FFFFFF,t_70,g_se,x_16)

epochs: 45

end50 theta_0=0.030569950649287983,theta_1=1.4788903781318357,error=56.32488184238028
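A gradient descent result can be sanity-checked against the closed-form least-squares fit, which minimizes the same cost exactly. The sketch below runs on synthetic data, because the post's CSV file is not available here. The data-generating line y = 1.5x + 5 and the variable names are assumptions.

```python
import numpy as np

# Synthetic data (an assumption): noisy points around y = 1.5x + 5
rng = np.random.default_rng(42)
x_demo = rng.uniform(20, 80, size=100)
y_demo = 1.5 * x_demo + 5.0 + rng.normal(0, 5, size=100)

# np.polyfit with degree 1 returns [slope, intercept]
slope, intercept = np.polyfit(x_demo, y_demo, 1)

# The same fit via a design matrix [1, x] and a least-squares solve
X = np.column_stack([np.ones_like(x_demo), x_demo])
theta, *_ = np.linalg.lstsq(X, y_demo, rcond=None)  # theta = [intercept, slope]

print(intercept, slope)
print(theta)
```

If gradient descent stops far from these values, it usually needs more epochs, a different learning rate, or rescaled inputs.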

2. Implementation with sklearn

import numpy as np

import matplotlib.pyplot as plt

from sklearn.linear_model import LinearRegression

%matplotlib inline

# Load the data

data=np.genfromtxt("/root/jupyter_projects/data/fangjia1.csv",delimiter=',')

# x_data=data[0:,0]  # split the data: all rows, column 0

x_data=data[:,0]  # equivalent to the line above

y_data=data[:,1]

plt.scatter(x_data,y_data)  # draw a scatter plot

plt.show()

print(x_data.shape)

#x_data

(100,)

x_data=data[:,0,np.newaxis]  # convert x_data into a 2-D column array, as sklearn expects

print(x_data.shape)

y_data=data[:,1,np.newaxis]

#x_data

(100, 1)
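The shape change from `(100,)` to `(100, 1)` matters because `LinearRegression.fit` expects a 2-D feature array of shape (n_samples, n_features). As a small illustration on a made-up array, indexing with `np.newaxis` and calling `reshape(-1, 1)` are two equivalent ways to get a column:

```python
import numpy as np

# Hypothetical 1-D array standing in for one column of the data
x_flat = np.arange(5, dtype=float)

# Two equivalent ways to turn a 1-D array into a single-column 2-D array
x_col_newaxis = x_flat[:, np.newaxis]
x_col_reshape = x_flat.reshape(-1, 1)

print(x_flat.shape, x_col_newaxis.shape, x_col_reshape.shape)
```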

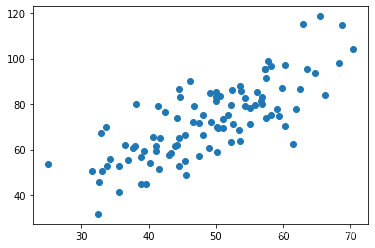

# Create and fit the model

model=LinearRegression()

theta=model.fit(x_data,y_data)

# Plot

plt.scatter(x_data,y_data)

plt.plot(x_data,model.predict(x_data),'r')

plt.show()

print(theta)

print(model.coef_)  # slope, theta_1

print(model.intercept_)  # intercept, theta_0
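To make the mapping between the sklearn attributes and the parameters of section 1 concrete, here is a small sketch on noise-free synthetic data where the true slope and intercept are known. The data and variable names are assumptions. `coef_` holds the slope (theta_1) and `intercept_` holds the intercept (theta_0).

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical data lying exactly on y = 3x + 2, shaped as columns like x_data and y_data above
x_demo = np.arange(10, dtype=float).reshape(-1, 1)
y_demo = 3.0 * x_demo + 2.0

demo_model = LinearRegression().fit(x_demo, y_demo)

# ravel() flattens the arrays so the scalars can be read regardless of their exact shape
slope = float(np.ravel(demo_model.coef_)[0])           # theta_1
intercept = float(np.ravel(demo_model.intercept_)[0])  # theta_0
print(slope, intercept)
```

Note that `model.fit` returns the fitted estimator itself, so `print(theta)` in the code above prints the model object rather than the parameter values.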
