1. Custom dataset (3-class classification)

import tensorflow as tf
import matplotlib.pyplot as plt

# 8 samples with 4 features each
x_data = [[1, 2, 1, 1],
          [2, 1, 3, 2],
          [3, 1, 3, 4],
          [4, 1, 5, 5],
          [1, 7, 5, 5],
          [1, 2, 5, 6],
          [1, 6, 6, 6],
          [1, 7, 7, 7]]

# One-hot labels for 3 classes
y_data = [[0, 0, 1],
          [0, 0, 1],
          [0, 0, 1],
          [0, 1, 0],
          [0, 1, 0],
          [0, 1, 0],
          [1, 0, 0],
          [1, 0, 0]]

# 3. Define placeholders, weights, and bias
x=tf.placeholder(tf.float32,shape=[None,4])
y=tf.placeholder(tf.float32,shape=[None,3])
w=tf.Variable(tf.random_normal([4,3]))
b=tf.Variable(tf.random_normal([3]))

# 4. Forward pass: linear logits, then softmax
model=tf.matmul(x,w)+b
hypothesis=tf.nn.softmax(model)
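
To make the forward pass concrete, here is a minimal NumPy sketch of what a softmax over `x @ w + b` computes. The helper name `softmax` and the zero-valued weights are illustrative assumptions and are not part of the original script, which uses random initialization.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

# Two samples with 4 features each (the first two rows of x_data).
x = np.array([[1., 2., 1., 1.],
              [2., 1., 3., 2.]])
w = np.zeros((4, 3))   # illustrative weights, shape [features, classes]
b = np.zeros(3)        # illustrative bias, one value per class

probs = softmax(x @ w + b)
print(probs.shape)        # one probability per class per sample
print(probs.sum(axis=1))  # each row sums to 1
```

With all-zero weights every class gets the same probability; training moves the rows toward the one-hot labels.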

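# 5. Cost function
# Method 1: hand-written cross-entropy.
# Note: reduce_mean over axis=1 averages over the 3 classes only, so cost is a
# vector with one value per sample rather than a scalar; minimize() then
# effectively minimizes the sum of those values.
cost=-tf.reduce_mean(y*tf.log(hypothesis),axis=1)
# Method 2: built-in softmax cross-entropy on the raw logits
# cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y, logits=tf.matmul(x,w)+b))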
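
The shape of the cost determines what gets printed and plotted. The NumPy sketch below compares method 1 as written with the usual scalar cross-entropy; the probabilities in `h` are made-up illustrative values, not output from the script.

```python
import numpy as np

# One-hot labels and illustrative predicted probabilities for 2 samples.
y = np.array([[0., 0., 1.],
              [0., 1., 0.]])
h = np.array([[0.2, 0.3, 0.5],
              [0.1, 0.8, 0.1]])

# Method 1 as written: mean over the class axis gives one value per sample.
per_sample = -np.mean(y * np.log(h), axis=1)
print(per_sample.shape)   # (2,)

# Usual cross-entropy: sum over classes, then mean over samples gives a scalar.
scalar_cost = np.mean(-np.sum(y * np.log(h), axis=1))
print(scalar_cost.shape)  # ()
```

Appending a per-sample vector to a list on every step and plotting that list draws one curve per sample, whereas a scalar cost draws a single curve.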

# 6. Gradient descent optimizer
gradeDecline=tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(cost)

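# 7. Accuracy: compare predicted and true class indices
predicted=tf.argmax(hypothesis,axis=1)
# Read the labels from the placeholder so accuracy follows whatever is fed to y
true_value=tf.argmax(y,axis=1)
acc=tf.reduce_mean(tf.cast(tf.equal(predicted,true_value),dtype=tf.float32))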
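
The accuracy computation is an argmax comparison followed by a mean. A small NumPy sketch with made-up probabilities and labels (illustrative values only) shows the same steps:

```python
import numpy as np

# Illustrative predicted probabilities and one-hot labels for 4 samples.
h = np.array([[0.1, 0.2, 0.7],
              [0.6, 0.3, 0.1],
              [0.2, 0.5, 0.3],
              [0.3, 0.3, 0.4]])
y = np.array([[0, 0, 1],
              [1, 0, 0],
              [0, 0, 1],
              [0, 0, 1]])

predicted = np.argmax(h, axis=1)    # predicted class index per sample
true_value = np.argmax(y, axis=1)   # true class index per sample
acc = np.mean(predicted == true_value)
print(acc)  # 0.75: three of the four predictions match
```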

# 8. Create a session and initialize global variables
sess=tf.Session()
sess.run(tf.global_variables_initializer())

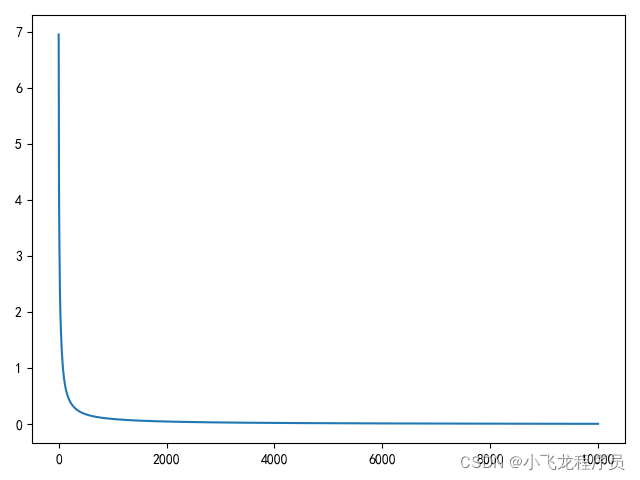

# 9. Train, record the cost at every step, and plot the cost curve
j=[]
for i in range(3000):
    cost_val,_,acc_val=sess.run([cost,gradeDecline,acc],feed_dict={x:x_data,y:y_data})
    j.append(cost_val)
    if i%100==0:
        print(i,cost_val)
plt.plot(j)
plt.show()

2. Iris dataset (3-class classification)

import os
# Set before importing TensorFlow so the C++ log filter applies from start-up
os.environ['TF_CPP_MIN_LOG_LEVEL']='2'
import tensorflow as tf
import numpy as np
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler,OneHotEncoder
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt
plt.rcParams['font.family']='SimHei'  # font with Chinese glyphs for plot text
tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)
tf.set_random_seed(777)  # graph-level seed for reproducible initialization

# 1. Load the dataset
data=load_iris()
x_data=data.data
y_data=data.target
y_data=np.c_[y_data]  # column vector of shape (150, 1); OneHotEncoder expects 2-D input

# 2. Feature scaling and one-hot encoding
sobj=StandardScaler()
x_data=sobj.fit_transform(x_data)
train_x,test_x,train_y,test_y=train_test_split(x_data,y_data,train_size=0.7)
oobj=OneHotEncoder()
# Fit the encoder once on all labels, then reuse it for each split so the
# columns always map to the same classes.
y_data=oobj.fit_transform(y_data).toarray()
train_y=oobj.transform(train_y).toarray()
test_y=oobj.transform(test_y).toarray()
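
One-hot columns only line up across splits if every split is encoded against the same category list. The NumPy sketch below illustrates the point with a hypothetical helper `one_hot` (not a scikit-learn function) and a made-up subset that happens to miss one class.

```python
import numpy as np

def one_hot(labels, categories):
    """Encode integer labels against a fixed, shared list of categories."""
    labels = np.asarray(labels).reshape(-1, 1)
    return (labels == np.asarray(categories)).astype(float)

all_labels = np.array([0, 1, 2, 0, 1, 2])
subset = np.array([0, 2, 2])          # class 1 happens to be missing

# Categories learned once from the full label set: always 3 columns.
categories = np.unique(all_labels)
shared = one_hot(subset, categories)
print(shared.shape)   # (3, 3)

# Categories re-learned from the subset: only 2 columns, and class 2 now
# lands in column 1, so argmax no longer returns the class index.
refit = one_hot(subset, np.unique(subset))
print(refit.shape)    # (3, 2)
```

With 150 iris samples a 30% split is unlikely to miss a class entirely, but encoding against one shared category list removes the dependency on the split.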

# 3. Define placeholders, weights, and bias
x=tf.placeholder(tf.float32,shape=[None,4])
y=tf.placeholder(tf.float32,shape=[None,3])
w=tf.Variable(tf.random_normal([4,3]))
b=tf.Variable(tf.random_normal([3]))

# 4. Forward pass: linear logits, then softmax
model=tf.matmul(x,w)+b
h=tf.nn.softmax(model)

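# 5. Cost function
# Method 1: hand-written cross-entropy.
# Note: reduce_mean over axis=1 averages over the 3 classes only, so cost is a
# vector with one value per sample rather than a scalar.
cost=-tf.reduce_mean(y*tf.log(h),axis=1)
# Method 2: built-in softmax cross-entropy on the raw logits
# cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y, logits=model))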

# 6. Gradient descent optimizer
gradeDecline=tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(cost)
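
As a rough illustration of what the optimizer does on each step, the sketch below runs plain gradient descent for softmax regression in NumPy on the toy data from section 1. It assumes the scalar cross-entropy (sum over classes, mean over samples) and zero-initialized weights for reproducibility, whereas the scripts here use random initialization; `x.T @ (p - y) / n` is the standard gradient of that loss with respect to the weights.

```python
import numpy as np

x = np.array([[1, 2, 1, 1], [2, 1, 3, 2], [3, 1, 3, 4], [4, 1, 5, 5],
              [1, 7, 5, 5], [1, 2, 5, 6], [1, 6, 6, 6], [1, 7, 7, 7]], dtype=float)
y = np.array([[0, 0, 1], [0, 0, 1], [0, 0, 1], [0, 1, 0],
              [0, 1, 0], [0, 1, 0], [1, 0, 0], [1, 0, 0]], dtype=float)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # stabilize exp
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(p, y):
    # Sum over classes, then mean over samples: a single scalar.
    return np.mean(-np.sum(y * np.log(p), axis=1))

w = np.zeros((4, 3))   # assumption: zero init instead of random_normal
b = np.zeros(3)
lr = 0.01              # same learning rate as the script
n = x.shape[0]

losses = []
for step in range(200):
    p = softmax(x @ w + b)
    losses.append(cross_entropy(p, y))
    grad_logits = (p - y) / n              # d(cost)/d(logits)
    w -= lr * (x.T @ grad_logits)          # d(cost)/dw
    b -= lr * grad_logits.sum(axis=0)      # d(cost)/db

print(losses[0], losses[-1])  # the cost should go down over the steps
```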

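# 7. Accuracy
predicted=tf.argmax(model,axis=1)
def acc(y):
    # y is a fixed one-hot label array, so the returned tensor is only valid
    # when the matching features are fed to x.
    true_value=tf.argmax(y,axis=1)
    acc=tf.reduce_mean(tf.cast(tf.equal(predicted,true_value),dtype=tf.float32))
    return acc
train_acc=acc(train_y)
test_acc=acc(test_y)
j=[]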

# 8. Create a session and initialize global variables
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    # 9. Train and record the cost every 100 steps
    for i in range(2001):
        cost_val, w_val, b_val, _ ,acc_val= sess.run([cost, w, b, gradeDecline,train_acc], feed_dict={x: train_x, y: train_y})
        if i % 100 == 0:
            print(i,cost_val)
            j.append(cost_val)

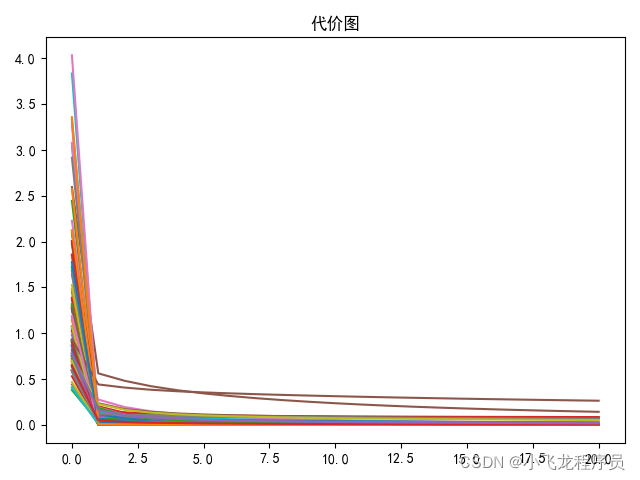

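    # 10. Plot the cost curve (still inside the `with` block, since sess is used below)
    plt.title('Cost curve')
    plt.plot(j)
    plt.show()
    # Accuracy on the training set
    h_val,predict_val,acc_val=sess.run([h,predicted,train_acc], feed_dict={x: train_x, y: train_y})
    print(acc_val)
    # Accuracy on the test set
    h_v, pre_val, acc_v = sess.run([h, predicted, test_acc], feed_dict={x: test_x, y: test_y})
    print(acc_v)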

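Note: with the hand-written cost (method 1), `tf.reduce_mean(..., axis=1)` returns one cost value per sample instead of a scalar, so the cost plot shows many curves.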

import os
# Set before importing TensorFlow so the C++ log filter applies from start-up
os.environ['TF_CPP_MIN_LOG_LEVEL']='2'
import tensorflow as tf
import numpy as np
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler,OneHotEncoder
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt
plt.rcParams['font.family']='SimHei'  # font with Chinese glyphs for plot text
tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)
tf.set_random_seed(777)  # graph-level seed for reproducible initialization

# 1. Load the dataset
data=load_iris()
x_data=data.data
y_data=data.target
y_data=np.c_[y_data]  # column vector of shape (150, 1); OneHotEncoder expects 2-D input

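# 2. Feature scaling and one-hot encoding
sobj=StandardScaler()
x_data=sobj.fit_transform(x_data)
train_x,test_x,train_y,test_y=train_test_split(x_data,y_data,train_size=0.7)
oobj=OneHotEncoder()
# Fit the encoder once on all labels, then reuse it for each split so the
# columns always map to the same classes.
oobj.fit(y_data)
train_y_o=oobj.transform(train_y).toarray()
test_y_o=oobj.transform(test_y).toarray()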
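
The feature-scaling half of this step standardizes each column to zero mean and unit variance. A minimal NumPy sketch of that arithmetic, on a few made-up rows rather than the real iris data, looks like this:

```python
import numpy as np

# Illustrative values only: 4 samples, 2 features.
x = np.array([[5.1, 3.5],
              [4.9, 3.0],
              [6.2, 3.4],
              [5.9, 3.0]])

# Standardize each column: subtract the mean, divide by the population std.
mean = x.mean(axis=0)
std = x.std(axis=0)           # ddof=0, the convention StandardScaler uses
x_scaled = (x - mean) / std

print(x_scaled.mean(axis=0))  # approximately 0 per column
print(x_scaled.std(axis=0))   # approximately 1 per column
```

Putting all features on a comparable scale tends to make a fixed learning rate such as 0.01 behave more evenly across weights.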

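# 3. Define placeholders, weights, and bias
x=tf.placeholder(tf.float32,shape=[None,4])
# y receives the raw integer labels; it is fed below but not used by the cost
# or the accuracy, which read the one-hot arrays directly.
y=tf.placeholder(tf.float32,shape=[None,1])
w=tf.Variable(tf.random_normal([4,3]))
b=tf.Variable(tf.random_normal([3]))

# 4. Forward pass: linear logits, then softmax
model=tf.matmul(x,w)+b
h=tf.nn.softmax(model)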

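# 5. Cost function
# Method 1: hand-written cross-entropy
# cost=-tf.reduce_mean(y*tf.log(h),axis=1)
# Method 2: built-in softmax cross-entropy on the raw logits, reduced to a scalar.
# The labels are the fixed array train_y_o, so this cost is only valid when
# train_x is fed to x.
cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=train_y_o, logits=model))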
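
One reason to prefer a cross-entropy that works on raw logits is numerical stability: it can use the log-sum-exp trick instead of taking the log of a softmax output that may underflow to zero. The NumPy sketch below shows the idea; the function name `softmax_cross_entropy` and the extreme logits are illustrative assumptions, and this is not TensorFlow's actual implementation.

```python
import numpy as np

def softmax_cross_entropy(logits, labels):
    """Per-sample cross-entropy from raw logits via the log-sum-exp trick."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -(labels * log_probs).sum(axis=1)

# The first row uses extreme logits, where log(softmax(...)) computed naively
# would hit log(0).
logits = np.array([[1000.0, 0.0, -1000.0],
                   [2.0, 1.0, 0.1]])
labels = np.array([[0.0, 1.0, 0.0],
                   [1.0, 0.0, 0.0]])

per_sample = softmax_cross_entropy(logits, labels)  # one value per sample
cost = per_sample.mean()                            # scalar over the batch
print(per_sample, cost)
```

Taking the mean over the batch is what turns the per-sample losses into the single scalar that is recorded and plotted.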

# 6. Gradient descent optimizer
gradeDecline=tf.train.GradientDescentOptimizer(learning_rate=0.01).minimize(cost)

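# 7. Accuracy
predicted=tf.argmax(model,axis=1)
def acc(y):
    # y is a fixed one-hot label array, so the returned tensor is only valid
    # when the matching features are fed to x.
    true_value=tf.argmax(y,axis=1)
    acc=tf.reduce_mean(tf.cast(tf.equal(predicted,true_value),dtype=tf.float32))
    return acc
train_acc=acc(train_y_o)
test_acc=acc(test_y_o)
j=[]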

# 8. Create a session and initialize global variables
with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    # 9. Train and record the cost every 100 steps
    for i in range(2001):
        cost_val, w_val, b_val, _ ,acc_val= sess.run([cost, w, b, gradeDecline,train_acc], feed_dict={x: train_x, y: train_y})
        if i % 100 == 0:
            print(i,cost_val)
            j.append(cost_val)

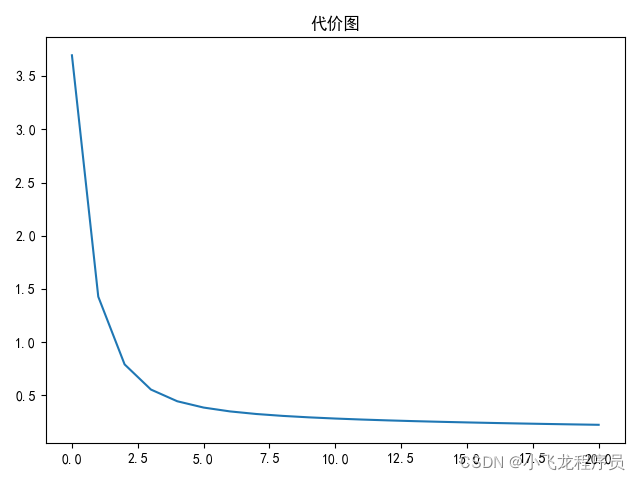

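    # 10. Plot the cost curve (still inside the `with` block, since sess is used below)
    plt.title('Cost curve')
    plt.plot(j)
    plt.show()
    # Accuracy on the training set
    h_val,predict_val,acc_val=sess.run([h,predicted,train_acc], feed_dict={x: train_x, y: train_y})
    print(acc_val)
    # Accuracy on the test set
    h_v, pre_val, acc_v = sess.run([h, predicted, test_acc], feed_dict={x: test_x, y: test_y})
    print(acc_v)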

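Note: the built-in softmax cross-entropy function (method 2) works best here. Its output is reduced to a scalar, so the cost plot shows a single curve.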

3. Zoo dataset (7-class classification)

import os
# Set before importing TensorFlow so the C++ log filter applies from start-up
os.environ['TF_CPP_MIN_LOG_LEVEL']='2'
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from sklearn.preprocessing import OneHotEncoder
from sklearn.model_selection import train_test_split
plt.rcParams['font.family']='SimHei'  # font with Chinese glyphs for plot text
tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)

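# Load the CSV: the last column is the class label, the other 16 are features
data=np.loadtxt(r'E:\ana\envs\tf14\day07\data-04-zoo.csv',delimiter=',')
print(data)
x_data=data[:,:-1]
y_data=data[:,-1:]
print(x_data.shape)
print(y_data.shape)
tf.set_random_seed(777)  # graph-level seed for reproducible initialization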

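train_x,test_x,train_y,test_y=train_test_split(x_data,y_data,train_size=0.7)
oobj=OneHotEncoder()
# Fit the encoder once on all labels and reuse it for each split. Refitting on
# a split that misses one of the 7 classes would produce fewer columns and
# shift the class indices.
y_data=oobj.fit_transform(y_data).toarray()
train_y_o=oobj.transform(train_y).toarray()
test_y_o=oobj.transform(test_y).toarray()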

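# Placeholders, weights, and bias for 16 features and 7 classes
x=tf.placeholder(dtype=tf.float32,shape=[None,16])
# y receives the raw labels; it is fed below but not used by the cost or the
# accuracy, which read the one-hot arrays directly.
y=tf.placeholder(dtype=tf.float32,shape=[None,1])
w=tf.Variable(tf.random_normal([16,7]))
b=tf.Variable(tf.random_normal([7]))
# Forward pass: linear logits, then softmax
model=tf.matmul(x,w)+b
h=tf.nn.softmax(model)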

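# Cost: built-in softmax cross-entropy on the raw logits, reduced to a scalar.
# The labels are the fixed array train_y_o, so this cost is only valid when
# train_x is fed to x.
# cost=-tf.reduce_mean(y*tf.log(h),axis=1)
cost=tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=train_y_o,logits=model))
gradeDecline=tf.train.GradientDescentOptimizer(learning_rate=0.1).minimize(cost)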

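# Accuracy
y_predict=tf.argmax(model,axis=1)
def acc(y):
    # y is a fixed one-hot label array, so the returned tensor is only valid
    # when the matching features are fed to x.
    y_true = tf.argmax(y, axis=1)
    acc =tf.reduce_mean(tf.cast(tf.equal(y_true,y_predict),dtype=tf.float32))
    return acc
train_acc=acc(train_y_o)
test_acc=acc(test_y_o)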

sess=tf.Session()
sess.run(tf.global_variables_initializer())
j=[]
for i in range(10000):
    cost_val,_,acc_val=sess.run([cost,gradeDecline,train_acc],feed_dict={x:train_x,y:train_y})
    j.append(cost_val)
    if i%500==0:
        print(i,cost_val)
plt.plot(j)
plt.show()

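# Accuracy on the training set
h1,p1,a1=sess.run([h,y_predict,train_acc],feed_dict={x:train_x,y:train_y})
print(a1)
# Accuracy on the test set (separate names so the tensor h is not overwritten)
h2,p2,a2=sess.run([h,y_predict,test_acc],feed_dict={x:test_x,y:test_y})
print(a2)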