A Stock Trading Strategy Based on an Artificial Neural Network Model

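Preface

As machine learning algorithms and neural network models have matured, applying neural networks to build stock market strategies has become very common.

This article combines some common technical indicators with a common artificial neural network (ANN) model to build a simple strategy. It assumes that trading incurs no fees and that the market is frictionless.

The data is the CSI 300 Index (沪深300) from 2016 to 2021.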

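1. Stock Market Indicators

The main indicators used are:

High minus low (H-L)

Close minus open (stored under the column name O-C)

3-day moving average (3day MA)

10-day moving average (10day MA)

30-day moving average (30day MA)

5-day standard deviation (Std_dev)

Technical indicator: Relative Strength Index (RSI)

Technical indicator: Williams %R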

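import numpy as np
import pandas as pd

# df is assumed to hold daily CSI 300 data with 'open', 'high', 'low' and 'close' columns
# (the loading step is not shown in the original)
df['H-L'] = df['high'] - df['low']      # daily range
df['O-C'] = df['close'] - df['open']    # close minus open, despite the column name
# The moving averages use shift(1), so each row only sees closes up to the previous day
df['ma3'] = df['close'].shift(1).rolling(window=3).mean()
df['ma10'] = df['close'].shift(1).rolling(window=10).mean()
df['ma30'] = df['close'].shift(1).rolling(window=30).mean()
df['std_dev5'] = df['close'].rolling(5).std()   # 5-day standard deviation of the close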

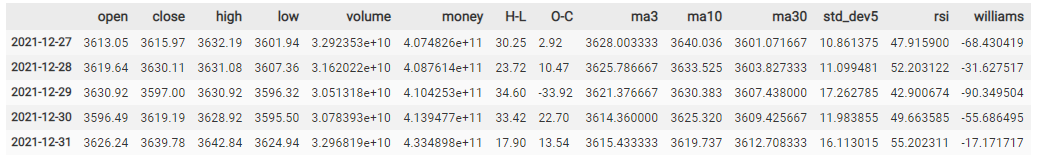

df.tail(5)

from finta import TA

rsi=TA.RSI(df,9)

df['rsi']=rsi

# Williams %R
import pandas_ta as ta

williams=ta.willr(df['high'], df['low'], df['close'], length=7, talib=None, offset=None)
df['williams']=williams

df.tail(5)
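To make the two library indicators less of a black box, here is a minimal sketch of what they measure, written in plain pandas on synthetic prices. The function names `williams_r` and `rsi_simple` are illustrative and not part of finta or pandas_ta. The RSI version uses simple rolling means, whereas library implementations usually apply exponential smoothing, so its values will not match `TA.RSI` exactly.

```python
import numpy as np
import pandas as pd

def williams_r(high, low, close, length=7):
    """Williams %R: where the close sits inside the recent high-low range, from -100 to 0."""
    highest = high.rolling(length).max()
    lowest = low.rolling(length).min()
    return -100 * (highest - close) / (highest - lowest)

def rsi_simple(close, length=9):
    """RSI from simple rolling means of gains and losses (a simplification)."""
    delta = close.diff()
    gain = delta.clip(lower=0).rolling(length).mean()
    loss = (-delta.clip(upper=0)).rolling(length).mean()
    return 100 - 100 / (1 + gain / loss)

# Synthetic prices, for illustration only (not CSI 300 data)
rng = np.random.default_rng(0)
close = pd.Series(100 + rng.normal(0, 1, 60).cumsum())
high = close + 0.5
low = close - 0.5

wr = williams_r(high, low, close)   # first length-1 values are NaN
rsi = rsi_simple(close)
```

Readings of Williams %R near 0 mean the close is near the top of its recent range, and readings near -100 mean it is near the bottom. RSI moves between 0 and 100 in the same spirit.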

# Label is 1 when the next day's close (t+1) is above today's close (t), otherwise 0

df['price_rise']=np.where(df['close'].shift(-1)>df['close'],1,0)
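A small sketch of how this labelling line behaves, using made-up closing prices. `shift(-1)` pulls the next day's close onto today's row, so the label describes tomorrow's move.

```python
import numpy as np
import pandas as pd

# Made-up closing prices, for illustration only
close = pd.Series([10.0, 11.0, 10.5, 10.8, 10.8])

next_close = close.shift(-1)                 # tomorrow's close aligned to today's row
label = np.where(next_close > close, 1, 0)   # 1 = tomorrow closes higher
# label -> [1, 0, 1, 0, 0]
```

Two details are worth noting. A flat day counts as 0 because the comparison is strict. The last row has no next day, so its comparison against NaN is False and it is labelled 0 even though the outcome is unknown; dropping that row before training is one way to avoid a mislabelled sample.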

2. Building the Artificial Neural Network (ANN) Model

# Import from the public tensorflow.keras namespace rather than the private tensorflow.python path
from tensorflow.keras.models import Sequential, load_model
from tensorflow.keras.layers import Dense
from tensorflow.keras import optimizers
from tensorflow.keras.callbacks import EarlyStopping

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import mean_squared_error,r2_score,mean_absolute_error

from math import sqrt

import datetime as dt

Split the data into training and test sets, then build the ANN and use it for prediction.

(The data-splitting step is omitted.)
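Since the split is not shown, the following is only a sketch of one common way to do it, not the author's actual code. It assumes a chronological 80/20 split (the training log reports 1145 training and 286 validation samples, roughly that ratio) and min-max scaling with statistics taken from the training rows only. The helper `chrono_split_scale` and the synthetic arrays are hypothetical.

```python
import numpy as np

def chrono_split_scale(X, y, train_frac=0.8):
    """Split in time order, then min-max scale using training-set statistics only."""
    n_train = int(len(X) * train_frac)
    X_train, X_test = X[:n_train], X[n_train:]
    y_train, y_test = y[:n_train], y[n_train:]

    lo = X_train.min(axis=0)
    hi = X_train.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)   # guard against constant columns

    return (X_train - lo) / span, (X_test - lo) / span, y_train, y_test

# Synthetic stand-ins for the feature matrix and the price_rise labels
rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 8))
y = rng.integers(0, 2, size=1000)

train_data, test_data, train_label, test_label = chrono_split_scale(X, y)
```

Keeping the rows in time order and fitting the scaler on the training part alone avoids leaking information from the test period into the model. Test features can therefore fall slightly outside the [0, 1] range.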

model = Sequential()

model.add(Dense(50, activation="sigmoid"))

model.add(Dense(1, activation="sigmoid"))

# model.add(Dense(1, activation="sigmoid"))

model.compile(loss='mean_squared_error', optimizer='adam')

history = model.fit(train_data,train_label, epochs=30, batch_size=128, validation_data = (test_data,test_label), verbose=1)

Train on 1145 samples, validate on 286 samples

Epoch 1/30

1145/1145 [==============================] - 2s 2ms/sample - loss: 0.3390 - val_loss: 0.3404

Epoch 2/30

1145/1145 [==============================] - 0s 27us/sample - loss: 0.3109 - val_loss: 0.3062

Epoch 3/30

1145/1145 [==============================] - 0s 35us/sample - loss: 0.2837 - val_loss: 0.2765

Epoch 4/30

1145/1145 [==============================] - 0s 27us/sample - loss: 0.2639 - val_loss: 0.2570

Epoch 5/30

1145/1145 [==============================] - 0s 31us/sample - loss: 0.2530 - val_loss: 0.2496

Epoch 6/30

1145/1145 [==============================] - 0s 37us/sample - loss: 0.2494 - val_loss: 0.2491

Epoch 7/30

1145/1145 [==============================] - 0s 25us/sample - loss: 0.2491 - val_loss: 0.2495

Epoch 8/30

1145/1145 [==============================] - 0s 26us/sample - loss: 0.2492 - val_loss: 0.2492

Epoch 9/30

1145/1145 [==============================] - 0s 31us/sample - loss: 0.2490 - val_loss: 0.2490

Epoch 10/30

1145/1145 [==============================] - 0s 27us/sample - loss: 0.2489 - val_loss: 0.2489

Epoch 11/30

1145/1145 [==============================] - 0s 30us/sample - loss: 0.2489 - val_loss: 0.2488

Epoch 12/30

1145/1145 [==============================] - 0s 30us/sample - loss: 0.2488 - val_loss: 0.2488

Epoch 13/30

1145/1145 [==============================] - 0s 41us/sample - loss: 0.2488 - val_loss: 0.2487

Epoch 14/30

1145/1145 [==============================] - 0s 29us/sample - loss: 0.2487 - val_loss: 0.2487

Epoch 15/30

1145/1145 [==============================] - 0s 29us/sample - loss: 0.2487 - val_loss: 0.2487

Epoch 16/30

1145/1145 [==============================] - 0s 33us/sample - loss: 0.2487 - val_loss: 0.2486

Epoch 17/30

1145/1145 [==============================] - 0s 29us/sample - loss: 0.2486 - val_loss: 0.2486

Epoch 18/30

1145/1145 [==============================] - 0s 37us/sample - loss: 0.2487 - val_loss: 0.2486

Epoch 19/30

1145/1145 [==============================] - 0s 30us/sample - loss: 0.2486 - val_loss: 0.2487

Epoch 20/30

1145/1145 [==============================] - 0s 32us/sample - loss: 0.2486 - val_loss: 0.2487

Epoch 21/30

1145/1145 [==============================] - 0s 29us/sample - loss: 0.2485 - val_loss: 0.2485

Epoch 22/30

1145/1145 [==============================] - 0s 28us/sample - loss: 0.2485 - val_loss: 0.2485

Epoch 23/30

1145/1145 [==============================] - 0s 29us/sample - loss: 0.2486 - val_loss: 0.2486

Epoch 24/30

1145/1145 [==============================] - 0s 30us/sample - loss: 0.2484 - val_loss: 0.2485

Epoch 25/30

1145/1145 [==============================] - 0s 33us/sample - loss: 0.2484 - val_loss: 0.2485

Epoch 26/30

1145/1145 [==============================] - 0s 29us/sample - loss: 0.2484 - val_loss: 0.2485

Epoch 27/30

1145/1145 [==============================] - 0s 32us/sample - loss: 0.2483 - val_loss: 0.2486

Epoch 28/30

1145/1145 [==============================] - 0s 30us/sample - loss: 0.2484 - val_loss: 0.2488

Epoch 29/30

1145/1145 [==============================] - 0s 30us/sample - loss: 0.2483 - val_loss: 0.2485

Epoch 30/30

1145/1145 [==============================] - 0s 33us/sample - loss: 0.2482 - val_loss: 0.2485

import matplotlib.pyplot as plt

def plot_error(train_loss, val_loss):
    """Plot the training and validation loss for each epoch."""
    plt.figure(figsize=(12, 10))
    plt.plot(train_loss, c='r')
    plt.plot(val_loss, c='b')
    plt.xticks(fontsize=15)
    plt.yticks(fontsize=15)
    plt.xlabel('Epochs', fontsize=15)
    plt.ylabel('Loss', fontsize=15)
    plt.legend(['train', 'val'], loc='upper right', fontsize=15)
    plt.show()

train_error = history.history['loss']

val_error = history.history['val_loss']

plot_error(train_error,val_error)

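The figure shows how training went.

The training and validation loss curves nearly coincide by the end of training. The close match should still be read with caution, since it does not by itself show that the model has learned a useful signal.

After all, this ANN is a fairly basic model. It could be refined, or a deep neural network could be used for prediction instead.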
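As a reference point for reading the loss values in the training log, it helps to know what an uninformative model would score. The sketch below, with made-up labels, computes the mean squared error of always predicting 0.5 for a 0/1 target.

```python
import numpy as np

# Made-up binary labels, for illustration only
labels = np.array([1, 0, 1, 1, 0, 0, 1, 0])

# A model that ignores its inputs and always outputs 0.5
constant_pred = np.full(labels.shape, 0.5)

# Every error is +0.5 or -0.5, so every squared error is 0.25
baseline_mse = np.mean((labels - constant_pred) ** 2)
```

The baseline is 0.25 whatever the mix of labels. The final losses in the log (about 0.2482 for training and 0.2485 for validation) sit only slightly below that level, which is worth keeping in mind when judging how much the network has learned.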

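# Turn the sigmoid outputs into boolean signals. The 0.5 cutoff is an assumption,
# since the original does not show how y_pred is derived from the predictions.
predictions = model.predict(test_data)
y_pred = (predictions > 0.5).ravel()
# Keep only the last len(y_pred) rows, i.e. the test period
df = df.iloc[(len(df) - len(y_pred)):].copy()
df['y_pred'] = y_pred
df_dataset = df.dropna()
df_dataset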

# Buy when y_pred is True, sell when y_pred is False

import warnings

warnings.filterwarnings('ignore')

df_dataset['tomorrow return']=0.

df_dataset['tomorrow return']=np.log(df_dataset['close']/df_dataset['close'].shift(1))

df_dataset['tomorrow return']=df_dataset['tomorrow return'].shift(-1)

df_dataset['strategy return']=0.
df_dataset['strategy return']=np.where(df_dataset['y_pred']==True, df_dataset['tomorrow return'], -df_dataset['tomorrow return'])

df_dataset

df_dataset['market return']=np.cumsum(df_dataset['tomorrow return'])

df_dataset['strategy returns']=np.cumsum(df_dataset['strategy return'])

df_dataset

Compute and compare the returns of the market and the strategy:

import matplotlib.pyplot as plt

plt.figure(figsize=(15,10))

df_dataset['market return'].plot(label='market returns')

df_dataset['strategy returns'].plot(label='strategy returns')

plt.legend()

plt.xlabel('days')

plt.ylabel('returns')

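The plot shows that the strategy built on the neural network model earns, overall, a higher return than the market.

The gap is largest in 2021, when the broad market performed poorly and the strategy's return was far above the market's.

Finally, building strategies with neural network models is now fairly mainstream, but investing on such a strategy alone is undoubtedly very risky.

Summary

This article showed how to build a stock market strategy with a neural network model. The main idea is to use the model to predict whether the market will rise or fall: when the prediction is true, the strategy holds a long position, and when the prediction is false, it holds a short position.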
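The long/short rule summarized above can be sketched on a toy example. The prices and signals below are made up, and the column names use underscores purely for this illustration.

```python
import numpy as np
import pandas as pd

# Made-up closes and signals, for illustration only
toy = pd.DataFrame({
    "close": [100.0, 102.0, 101.0, 103.0],
    "y_pred": [True, False, False, True],
})

# Log return from today's close to tomorrow's close, aligned to today's row
log_ret = np.log(toy["close"] / toy["close"].shift(1))
toy["tomorrow_return"] = log_ret.shift(-1)

# Long earns tomorrow's return, short earns its negative
toy["strategy_return"] = np.where(
    toy["y_pred"], toy["tomorrow_return"], -toy["tomorrow_return"]
)

# Log returns add up over time, so cumulative performance is a running sum
toy["market_cum"] = toy["tomorrow_return"].cumsum()
toy["strategy_cum"] = toy["strategy_return"].cumsum()
```

On the second day the signal is False and the price then falls from 102 to 101, so the short position earns a positive return. The last row has no next-day return and stays NaN. Because the position is always either long or short, every wrong prediction costs the full size of that day's move.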