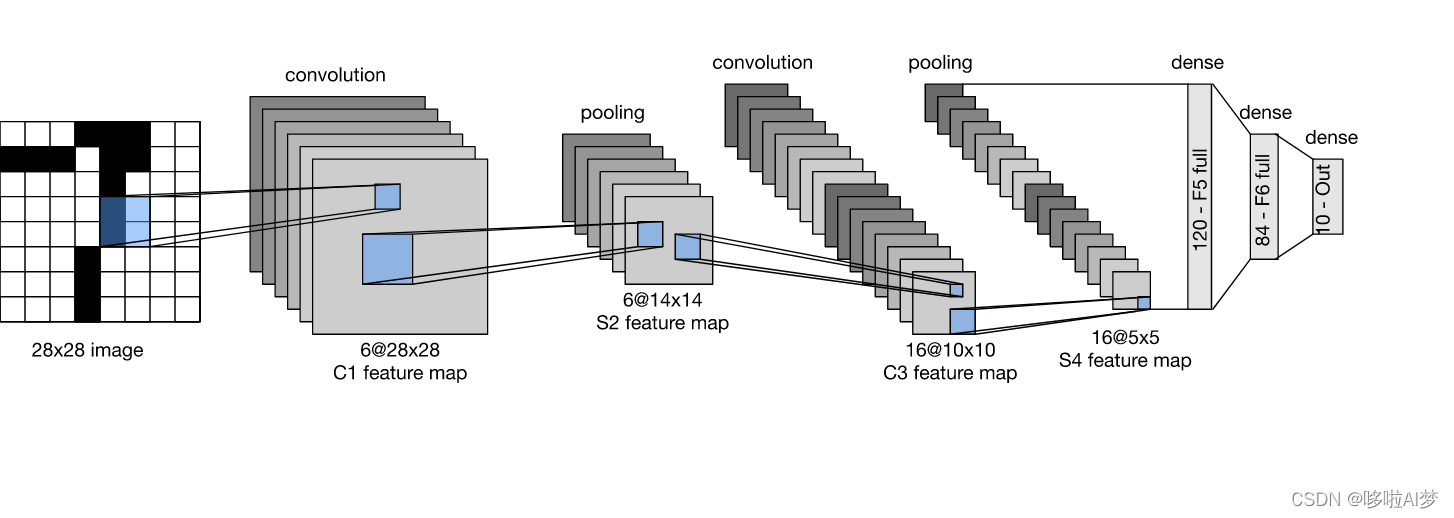

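LeNet was designed mainly for handwritten digit recognition. The script below trains it on Fashion-MNIST instead. Fashion-MNIST is a dataset of 28×28 grayscale clothing images in 10 classes, stored in the same format as MNIST.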

```python
import torch
import torchvision
from torch import nn
from torchvision import transforms
from torch.utils.data import DataLoader

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(device)

# Prepare the datasets (downloaded to ./data on the first run)
train_data = torchvision.datasets.FashionMNIST(root='./data', train=True, transform=transforms.ToTensor(), download=True)
test_data = torchvision.datasets.FashionMNIST(root='./data', train=False, transform=transforms.ToTensor(), download=True)

# Dataset sizes
print("The length of train_data is {}".format(len(train_data)))
print("The length of test_data is {}".format(len(test_data)))

# Wrap the datasets in DataLoaders; only the training set is shuffled
train_dataloader = DataLoader(dataset=train_data, batch_size=256, shuffle=True)
test_dataloader = DataLoader(dataset=test_data, batch_size=256, shuffle=False)
```
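To see what `DataLoader` hands to the training loop, here is a minimal sketch that replaces the downloaded Fashion-MNIST data with a synthetic `TensorDataset` of the same shape. The synthetic tensors are an assumption made only so the example runs offline. With `batch_size=256`, 10,000 samples (the size of the Fashion-MNIST test set) split into 39 full batches plus a final partial batch of 16, because `drop_last` defaults to `False`.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Synthetic stand-in for the Fashion-MNIST test set (assumption, not the real data):
# 10,000 single-channel 28x28 images with integer labels in [0, 10)
images = torch.zeros(10000, 1, 28, 28)
labels = torch.randint(0, 10, (10000,))
dataset = TensorDataset(images, labels)

loader = DataLoader(dataset=dataset, batch_size=256, shuffle=False)

# Size of every batch the loader yields in one pass
batch_sizes = [imgs.shape[0] for imgs, _ in loader]
first_imgs, first_targets = next(iter(loader))

print(len(loader))          # 40 batches per epoch
print(first_imgs.shape)     # torch.Size([256, 1, 28, 28])
print(first_targets.shape)  # torch.Size([256])
print(batch_sizes[-1])      # 16, the last partial batch
```

Because the last batch can be smaller than 256, the script computes test accuracy by dividing the total number of correct predictions by `len(test_data)` rather than by a batch count.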

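```python
# Build the network
class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        self.model = nn.Sequential(
            # 1x28x28 -> 6x28x28 (padding=2 keeps the 28x28 size)
            nn.Conv2d(1, 6, kernel_size=5, padding=2),
            nn.Sigmoid(),
            # 6x28x28 -> 6x14x14
            nn.AvgPool2d(kernel_size=2, stride=2),
            # 6x14x14 -> 16x10x10
            nn.Conv2d(6, 16, kernel_size=5),
            nn.Sigmoid(),
            # 16x10x10 -> 16x5x5
            nn.AvgPool2d(kernel_size=2, stride=2),
            # Flatten the 16x5x5 feature maps into a 400-dim vector
            nn.Flatten(),
            nn.Linear(16 * 5 * 5, 120),
            nn.Sigmoid(),
            nn.Linear(120, 84),
            nn.Sigmoid(),
            # 10 output logits, one per class
            nn.Linear(84, 10)
        )

    def forward(self, x):
        return self.model(x)
```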
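A quick way to check that `16*5*5` is the right input size for the first fully connected layer is to push a dummy 28×28 image through the layers one at a time and print each output shape. The sketch below rebuilds the same layer stack as `LeNet.model` so that it runs on its own; the random input is only a placeholder.

```python
import torch
from torch import nn

# Same layer stack as LeNet.model, rebuilt so the sketch is self-contained
net = nn.Sequential(
    nn.Conv2d(1, 6, kernel_size=5, padding=2), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
    nn.Conv2d(6, 16, kernel_size=5), nn.Sigmoid(),
    nn.AvgPool2d(kernel_size=2, stride=2),
    nn.Flatten(),
    nn.Linear(16 * 5 * 5, 120), nn.Sigmoid(),
    nn.Linear(120, 84), nn.Sigmoid(),
    nn.Linear(84, 10),
)

# One random 1x28x28 "image" with a batch dimension of 1
X = torch.rand(1, 1, 28, 28)
shapes = []
for layer in net:
    X = layer(X)
    shapes.append(tuple(X.shape))
    print(layer.__class__.__name__, 'output shape:', tuple(X.shape))
```

Each convolution or pooling layer maps an $n \times n$ input to $\lfloor (n + 2p - k)/s \rfloor + 1$ per side. The first 5×5 convolution with padding 2 therefore keeps 28, pooling halves it to 14, the unpadded convolution gives $14 - 5 + 1 = 10$, and the second pooling gives 5. That yields $16 \times 5 \times 5 = 400$ flattened features.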

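```python
# Instantiate the model and move it to the device
lenet = LeNet()
lenet.to(device)

# Loss function: cross-entropy on the raw logits
loss = nn.CrossEntropyLoss()
loss.to(device)
```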
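`nn.CrossEntropyLoss` takes the raw logits from the final `nn.Linear(84,10)` layer together with integer class labels. It applies log-softmax internally, so the network does not need a softmax layer at the end. The sketch below checks that equivalence on random logits. The tensors are placeholders, not model outputs.

```python
import torch
from torch import nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)            # a batch of 4 samples, 10 class scores each
targets = torch.tensor([3, 0, 9, 1])   # integer class labels, not one-hot

ce = nn.CrossEntropyLoss()(logits, targets)

# Same value by hand: log-softmax over the class dimension, then the mean
# negative log-probability of the correct class (default reduction='mean')
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(4), targets].mean()

print(ce.item(), manual.item())
print(torch.allclose(ce, manual))  # True
```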

```python
# Optimizer: plain SGD
optimizer = torch.optim.SGD(lenet.parameters(), lr=0.01)

# Training
epochs = 10
total_train_step = 0
total_test_step = 0

for epoch in range(epochs):
    print('----------- Epoch {} training starts -----------'.format(epoch + 1))

    # Training phase
    lenet.train()
    for imgs, targets in train_dataloader:
        imgs = imgs.to(device)
        targets = targets.to(device)
        outputs = lenet(imgs)
        l = loss(outputs, targets)

        # Backpropagation and parameter update
        optimizer.zero_grad()
        l.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            print("Training step: {}, Loss: {}".format(total_train_step, l.item()))

    # Evaluation phase: no gradient tracking needed
    lenet.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for imgs, targets in test_dataloader:
            imgs = imgs.to(device)
            targets = targets.to(device)
            outputs = lenet(imgs)
            l = loss(outputs, targets)
            # Sum of the per-batch mean losses over the test set
            total_test_loss += l.item()
            # Number of correct predictions in this batch, as a Python int
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy += accuracy

    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / len(test_data)))
    total_test_step += 1
```
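The test loop above adds up per-batch mean losses, so `total_test_loss` grows with the number of batches instead of being an average. Here is a minimal sketch of a reusable evaluation function that reports the per-sample mean loss and the accuracy, weighting each batch by its size. The name `evaluate` is hypothetical and not part of PyTorch. The function runs here on a tiny synthetic dataset and a placeholder linear model, so it does not need the real data.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, dataloader, loss_fn, device):
    """Hypothetical helper: return (mean loss per sample, accuracy) over a dataloader."""
    model.eval()
    total_loss, total_correct, total_count = 0.0, 0, 0
    with torch.no_grad():
        for imgs, targets in dataloader:
            imgs, targets = imgs.to(device), targets.to(device)
            outputs = model(imgs)
            n = targets.shape[0]
            # loss_fn returns a batch mean, so multiply it back by the batch size
            total_loss += loss_fn(outputs, targets).item() * n
            total_correct += (outputs.argmax(1) == targets).sum().item()
            total_count += n
    return total_loss / total_count, total_correct / total_count


# Placeholder setup (assumption): 10 fake 1x28x28 images and a linear classifier
device = torch.device('cpu')
torch.manual_seed(0)
data = TensorDataset(torch.rand(10, 1, 28, 28), torch.randint(0, 10, (10,)))
loader = DataLoader(data, batch_size=4)  # batches of 4, 4, 2
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))

mean_loss, acc = evaluate(model, loader, nn.CrossEntropyLoss(), device)
print(mean_loss, acc)
```

In the training script this would be called as `evaluate(lenet, test_dataloader, loss, device)` at the end of each epoch.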

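Summary:

- A CNN combines convolutional layers, nonlinear activation functions, and pooling layers.

- To build a high-performing CNN, convolutional layers are usually stacked so that the spatial resolution of the representation decreases gradually while the number of channels increases.

- In a traditional CNN, the representation encoded by the convolutional blocks passes through one or more fully connected layers before the output.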
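The three points above fit together in a small sketch. A hypothetical `conv_block` helper (not from the original code) stacks a convolution, a nonlinearity, and a pooling layer. Each block halves the spatial resolution while the channel count grows, and a fully connected layer processes the flattened representation at the end. The 3×3 kernels, ReLU, and max pooling are assumptions for illustration, not LeNet's configuration.

```python
import torch
from torch import nn


def conv_block(in_channels, out_channels):
    """Hypothetical helper: conv -> nonlinearity -> pooling (halves height and width)."""
    return nn.Sequential(
        nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),  # keeps H x W
        nn.ReLU(),
        nn.MaxPool2d(kernel_size=2, stride=2),                           # H x W -> H/2 x W/2
    )


net = nn.Sequential(
    conv_block(1, 16),          # 1x28x28  -> 16x14x14
    conv_block(16, 32),         # 16x14x14 -> 32x7x7
    nn.Flatten(),               # 32x7x7   -> 1568
    nn.Linear(32 * 7 * 7, 10),  # fully connected head producing 10 logits
)

X = torch.rand(2, 1, 28, 28)  # a placeholder batch of 2 images
shapes = []
for layer in net:
    X = layer(X)
    shapes.append(tuple(X.shape))
    print(tuple(X.shape))
```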