PyTorch: Recognizing Fashion-MNIST with a Convolutional Neural Network

Copyright: Jingmin Wei, Pattern Recognition and Intelligent System, School of Artificial and Intelligence, Huazhong University of Science and Technology

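This tutorial is not for commercial use; it is intended only for study, reference, and discussion. To repost it, please contact the author.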

import numpy as np

import pandas as pd

from sklearn.metrics import accuracy_score, confusion_matrix

import matplotlib.pyplot as plt

import seaborn as sns

import copy

import time

import torch

import torch.nn as nn

from torch.optim import Adam

import torch.utils.data as Data

from torchvision import transforms

from torchvision.datasets import FashionMNIST

In this chapter we build our own convolutional neural network to classify Fashion-MNIST and then analyze the results visually.

Preparing the image data

We load the data with the FashionMNIST class from the datasets module of torchvision.

# Prepare the training set from the FashionMNIST data
train_data = FashionMNIST(
    root = './data/FashionMNIST',
    train = True,
    transform = transforms.ToTensor(),
    download = False   # expects the data to already exist under root
)

# Define a data loader
train_loader = Data.DataLoader(
    dataset = train_data, # dataset
    batch_size = 64,      # batch size
    shuffle = False,      # do not shuffle the data
    num_workers = 2,      # two worker processes
)

# Number of batches
print(len(train_loader))

938

The block above defines a data loader with a batch size of 64.
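As a quick check on the printed batch count, the number of batches follows from the dataset size and the batch size. The sketch below assumes the 60,000-image training set and that the loader keeps the final partial batch (the DataLoader default, drop_last = False); the variable names are illustrative only.

```python
import math

num_samples = 60000   # size of the Fashion-MNIST training set
batch_size = 64       # batch size used by train_loader

# With drop_last = False the final, smaller batch is kept
num_batches = math.ceil(num_samples / batch_size)
last_batch_size = num_samples - (num_batches - 1) * batch_size

print(num_batches)      # 938
print(last_batch_size)  # 32
```

So 937 batches hold 64 images each and the last one holds the remaining 32.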

Next we visualize the data: we convert the tensors to NumPy arrays and display them with imshow.

# Grab one batch (the loop stops at step 1, so b_x and b_y hold the second batch)
for step, (b_x, b_y) in enumerate(train_loader):
    if step > 0:
        break

# Visualize the images in that batch
batch_x = b_x.squeeze().numpy()
batch_y = b_y.numpy()
label = train_data.classes
label[0] = 'T-shirt'   # shorten 'T-shirt/top' for display
plt.figure(figsize = (12, 5))
for i in np.arange(len(batch_y)):
    plt.subplot(4, 16, i + 1)
    plt.imshow(batch_x[i, :, :], cmap = plt.cm.gray)
    plt.title(label[batch_y[i]], size = 9)
    plt.axis('off')
    plt.subplots_adjust(wspace = 0.05)

Next we process the test set, treating all of its samples as a single batch for testing. That is, we load $10000$ images of size $28 \times 28$.

# Prepare the test set
test_data = FashionMNIST(
    root = './data/FashionMNIST',
    train = False, # use the test split, not the training split
    download = False
)

# Add a channel dimension and scale the pixel values to [0, 1]
test_data_x = test_data.data.type(torch.FloatTensor) / 255.0
test_data_x = torch.unsqueeze(test_data_x, dim = 1)
test_data_y = test_data.targets # test-set labels
print(test_data_x.shape)
print(test_data_y.shape)

torch.Size([10000, 1, 28, 28])

torch.Size([10000])

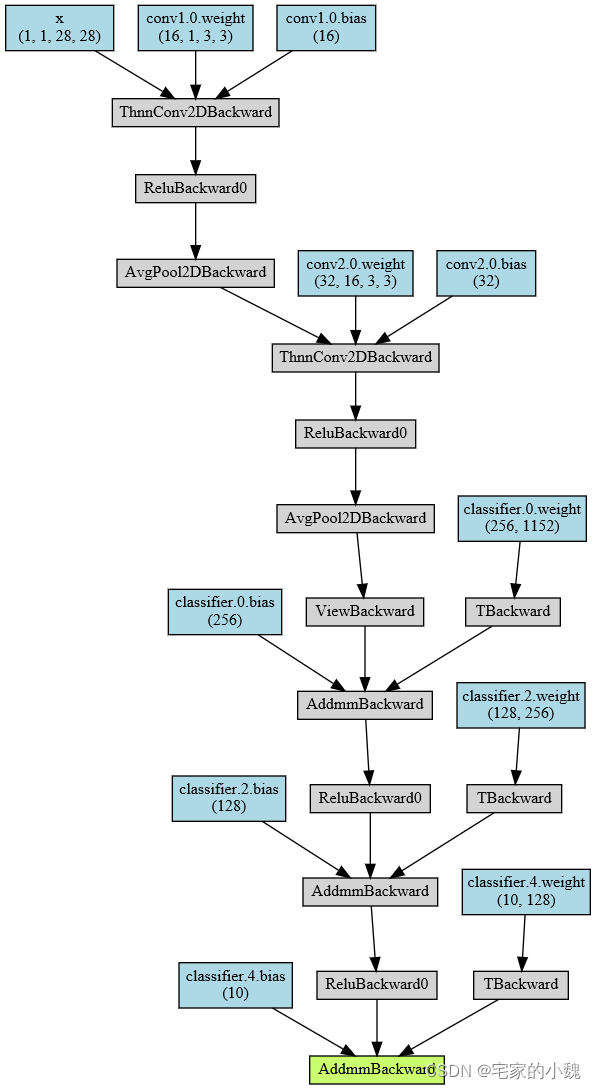

Building the convolutional neural network

First layer: $16$ convolution kernels of size $3 \times 3$, followed by ReLU and AvgPool2d.

Second layer: $32$ convolution kernels of size $3 \times 3$, followed by ReLU and AvgPool2d.

Fully connected layers: $256 - 128 - 10$.
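The layer sizes listed above determine the input width of the first fully connected layer. The sketch below traces the spatial size through the two convolution and pooling stages with the usual output-size formula, assuming a padding of 1 in the first convolution and none in the second, as in the class definition that follows. The helper name `out_size` is ours, not part of PyTorch.

```python
def out_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28                                             # input images are 28x28
size = out_size(size, kernel=3, stride=1, padding=1)  # conv1 -> 28
size = out_size(size, kernel=2, stride=2)             # pool1 -> 14
size = out_size(size, kernel=3, stride=1, padding=0)  # conv2 -> 12
size = out_size(size, kernel=2, stride=2)             # pool2 -> 6

flat_features = 32 * size * size                      # 32 channels after conv2
print(size, flat_features)  # 6 1152
```

This is why the first linear layer takes 32 * 6 * 6 = 1152 input features.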

class MyConvNet(nn.Module):
    def __init__(self):
        super(MyConvNet, self).__init__()
        # First convolutional block
        self.conv1 = nn.Sequential(
            nn.Conv2d(
                in_channels = 1,   # number of input image channels
                out_channels = 16, # number of output feature maps (kernels)
                kernel_size = 3,   # kernel size
                stride = 1,        # convolution stride of 1
                padding = 1,       # padding of 1 on each edge
            ),
            nn.ReLU(),             # activation function
            nn.AvgPool2d(
                kernel_size = 2,   # 2x2 average pooling
                stride = 2,        # pooling stride of 2
            ),
        )
        # Second convolutional block (no padding)
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, 3, 1, 0),
            nn.ReLU(),
            nn.AvgPool2d(2, 2),
        )
        # Fully connected classifier
        self.classifier = nn.Sequential(
            nn.Linear(32 * 6 * 6, 256),
            nn.ReLU(),
            nn.Linear(256, 128),
            nn.ReLU(),
            nn.Linear(128, 10),
        )

    def forward(self, x):
        # Forward pass
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1) # flatten the feature maps
        output = self.classifier(x)
        return output

# Instantiate the network
myconvnet = MyConvNet()

from torchsummary import summary
summary(myconvnet, input_size=(1, 28, 28))

----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Conv2d-1           [-1, 16, 28, 28]             160
              ReLU-2           [-1, 16, 28, 28]               0
         AvgPool2d-3           [-1, 16, 14, 14]               0
            Conv2d-4           [-1, 32, 12, 12]           4,640
              ReLU-5           [-1, 32, 12, 12]               0
         AvgPool2d-6             [-1, 32, 6, 6]               0
            Linear-7                  [-1, 256]         295,168
              ReLU-8                  [-1, 256]               0
            Linear-9                  [-1, 128]          32,896
             ReLU-10                  [-1, 128]               0
           Linear-11                   [-1, 10]           1,290
================================================================
Total params: 334,154
Trainable params: 334,154
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.00
Forward/backward pass size (MB): 0.30
Params size (MB): 1.27
Estimated Total Size (MB): 1.58
----------------------------------------------------------------
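The parameter counts in the summary can be reproduced by hand: each convolution has one kernel per input/output channel pair plus one bias per output channel, and each linear layer has a weight matrix plus a bias vector. The sketch below uses two helper functions of our own (`conv_params` and `linear_params`) and assumes 4-byte float32 parameters for the size estimate.

```python
def conv_params(in_ch, out_ch, kernel):
    # kernel x kernel weights per (input, output) channel pair, plus one bias per output channel
    return in_ch * kernel * kernel * out_ch + out_ch

def linear_params(in_features, out_features):
    # weight matrix plus bias vector
    return in_features * out_features + out_features

counts = {
    'Conv2d-1': conv_params(1, 16, 3),
    'Conv2d-4': conv_params(16, 32, 3),
    'Linear-7': linear_params(32 * 6 * 6, 256),
    'Linear-9': linear_params(256, 128),
    'Linear-11': linear_params(128, 10),
}
total = sum(counts.values())
size_mb = total * 4 / 1024 ** 2   # assuming 4 bytes per float32 parameter

print(counts)
print(total, round(size_mb, 2))   # 334154 1.27
```

Almost 90% of the parameters sit in the first fully connected layer, which is typical for a small network with a flatten step.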

# Visualize the network structure

from torchviz import make_dot

x = torch.randn(1, 1, 28, 28).requires_grad_(True)

y = myconvnet(x)

myCNN_vis = make_dot(y, params=dict(list(myconvnet.named_parameters()) + [('x', x)]))

myCNN_vis

Training the network and making predictions

The training set contains $60000$ images in $938$ batches. We use $80\%$ of the batches to train the model and the remaining $20\%$ to validate it.
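To make the split concrete, the sketch below works out how many batches and images land on each side, assuming the batch size of 64 used earlier and the same rounding that the training function applies. Because the loader was created with shuffle = False, the same batches fall on each side of the split in every epoch.

```python
batch_num = 938       # len(train_loader)
batch_size = 64
num_samples = 60000
train_rate = 0.8

train_batch_num = round(batch_num * train_rate)   # batches used for training
val_batch_num = batch_num - train_batch_num       # batches used for validation

train_samples = train_batch_num * batch_size      # training batches are all full
val_samples = num_samples - train_samples         # includes the final partial batch

print(train_batch_num, val_batch_num)   # 750 188
print(train_samples, val_samples)       # 48000 12000
```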

# Define the training routine
def train_model(model, traindataloader, train_rate, criterion, optimizer, num_epochs = 25):
    '''
    model: the network to train
    traindataloader: training data loader (its batches are split into train/validation)
    train_rate: fraction of the batches used for training
    criterion: loss function
    optimizer: optimizer
    num_epochs: number of training epochs
    '''
    # Number of batches used for training
    batch_num = len(traindataloader)
    train_batch_num = round(batch_num * train_rate)
    # Keep a copy of the model parameters
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0
    train_loss_all = []
    val_loss_all = []
    train_acc_all = []
    val_acc_all = []
    since = time.time()
    # Training loop
    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)
        train_loss = 0.0
        train_corrects = 0
        train_num = 0
        val_loss = 0.0
        val_corrects = 0
        val_num = 0
        for step, (b_x, b_y) in enumerate(traindataloader):
            if step < train_batch_num:
                model.train() # training mode
                output = model(b_x)
                pre_lab = torch.argmax(output, 1)
                loss = criterion(output, b_y) # compute the loss
                optimizer.zero_grad() # clear old gradients
                loss.backward() # backpropagate the loss
                optimizer.step() # update the parameters
                train_loss += loss.item() * b_x.size(0)
                train_corrects += torch.sum(pre_lab == b_y.data)
                train_num += b_x.size(0)
            else:
                model.eval() # evaluation mode
                output = model(b_x)
                pre_lab = torch.argmax(output, 1)
                loss = criterion(output, b_y)
                val_loss += loss.item() * b_x.size(0)
                val_corrects += torch.sum(pre_lab == b_y.data)
                val_num += b_x.size(0)
        # ====================== end of inner loop ========================
        # Loss and accuracy for this epoch on the training and validation parts
        train_loss_all.append(train_loss / train_num)
        train_acc_all.append(train_corrects.double().item() / train_num)
        val_loss_all.append(val_loss / val_num)
        val_acc_all.append(val_corrects.double().item() / val_num)
        print('{} Train Loss: {:.4f} Train Acc: {:.4f}'.format(epoch, train_loss_all[-1], train_acc_all[-1]))
        print('{} Val Loss: {:.4f} Val Acc: {:.4f}'.format(epoch, val_loss_all[-1], val_acc_all[-1]))
        # Copy the parameters whenever the validation accuracy improves
        if val_acc_all[-1] > best_acc:
            best_acc = val_acc_all[-1]
            best_model_wts = copy.deepcopy(model.state_dict())
        time_use = time.time() - since
        print('Train and Val complete in {:.0f}m {:.0f}s'.format(time_use // 60, time_use % 60))
    # =========================== end of outer loop ===========================
    # Restore the best parameters
    model.load_state_dict(best_model_wts)
    train_process = pd.DataFrame(
        data = {'epoch': range(num_epochs),
                'train_loss_all': train_loss_all,
                'val_loss_all': val_loss_all,
                'train_acc_all': train_acc_all,
                'val_acc_all': val_acc_all})
    return model, train_process
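The loop above multiplies each batch loss by the batch size before summing. Assuming the criterion returns the mean loss over a batch (the default reduction of nn.CrossEntropyLoss), this gives the true per-sample average even when the last batch is smaller. A minimal sketch with made-up loss values:

```python
# Hypothetical (mean loss, batch size) pairs; the last batch is smaller
batches = [(0.50, 64), (0.40, 64), (0.10, 32)]

loss_sum = 0.0
num = 0
for mean_loss, size in batches:
    loss_sum += mean_loss * size   # turn the batch mean back into a batch total
    num += size

per_sample = loss_sum / num                                      # weighted by batch size
naive = sum(loss for loss, _ in batches) / len(batches)          # ignores batch size

print(round(per_sample, 4), round(naive, 4))   # 0.38 0.3333
```

The naive mean of batch means gives the small final batch the same weight as a full one, which is why the function accumulates size-weighted totals instead.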

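The function collects the loss and accuracy of every epoch into the table train_process and returns it. It uses copy.deepcopy() to keep the best parameters seen so far in best_model_wts, and once training ends, model.load_state_dict(best_model_wts) assigns those parameters to the final model.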
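The deep copy matters because state_dict() hands back references to the live parameter tensors, so a plain assignment would keep following later updates. The sketch below imitates this with an ordinary dictionary of lists standing in for the state dict; the names `weights`, `alias`, and `snapshot` are illustrative and not part of PyTorch.

```python
import copy

# Stand-in for model.state_dict(): mutable values keyed by parameter name
weights = {'fc.weight': [0.1, 0.2], 'fc.bias': [0.0]}

alias = weights                     # same object: later updates show through
snapshot = copy.deepcopy(weights)   # independent copy of the current values

weights['fc.weight'][0] = 9.9       # simulate an in-place optimizer update

print(alias['fc.weight'][0])      # 9.9
print(snapshot['fc.weight'][0])   # 0.1
```

Only the deep copy still holds the values from the moment it was taken, which is what restoring the best epoch requires.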

Next we define the optimizer and the loss function and train the model.

# Train the model
optimizer = Adam(myconvnet.parameters(), lr = 0.0003) # Adam optimizer
criterion = nn.CrossEntropyLoss() # loss function
myconvnet, train_process = train_model(myconvnet, train_loader, 0.8, criterion, optimizer, num_epochs = 25)

Epoch 0/24

----------

0 Train Loss: 0.8061 Train Acc: 0.7122

0 Val Loss: 0.5649 Val Acc: 0.7820

Train and Val complete in 0m 50s

Epoch 1/24

----------

1 Train Loss: 0.5165 Train Acc: 0.8080

1 Val Loss: 0.4758 Val Acc: 0.8256

Train and Val complete in 1m 39s

Epoch 2/24

----------

2 Train Loss: 0.4468 Train Acc: 0.8371

2 Val Loss: 0.4329 Val Acc: 0.8401

Train and Val complete in 2m 29s

Epoch 3/24

----------

3 Train Loss: 0.4036 Train Acc: 0.8528

3 Val Loss: 0.4026 Val Acc: 0.8516

Train and Val complete in 3m 22s

Epoch 4/24

----------

4 Train Loss: 0.3725 Train Acc: 0.8635

4 Val Loss: 0.3803 Val Acc: 0.8610

Train and Val complete in 4m 20s

Epoch 5/24

----------

5 Train Loss: 0.3490 Train Acc: 0.8719

5 Val Loss: 0.3631 Val Acc: 0.8674

Train and Val complete in 5m 17s

Epoch 6/24

----------

6 Train Loss: 0.3301 Train Acc: 0.8784

6 Val Loss: 0.3479 Val Acc: 0.8750

Train and Val complete in 6m 9s

Epoch 7/24

----------

7 Train Loss: 0.3144 Train Acc: 0.8832

7 Val Loss: 0.3373 Val Acc: 0.8789

Train and Val complete in 7m 14s

Epoch 8/24

----------

8 Train Loss: 0.3013 Train Acc: 0.8886

8 Val Loss: 0.3265 Val Acc: 0.8827

Train and Val complete in 8m 35s

Epoch 9/24

----------

9 Train Loss: 0.2896 Train Acc: 0.8927

9 Val Loss: 0.3172 Val Acc: 0.8868

Train and Val complete in 10m 7s

Epoch 10/24

----------

10 Train Loss: 0.2794 Train Acc: 0.8963

10 Val Loss: 0.3068 Val Acc: 0.8908

Train and Val complete in 10m 56s

Epoch 11/24

----------

11 Train Loss: 0.2701 Train Acc: 0.9002

11 Val Loss: 0.2993 Val Acc: 0.8932

Train and Val complete in 11m 46s

Epoch 12/24

----------

12 Train Loss: 0.2617 Train Acc: 0.9032

12 Val Loss: 0.2907 Val Acc: 0.8963

Train and Val complete in 12m 44s

Epoch 13/24

----------

13 Train Loss: 0.2540 Train Acc: 0.9063

13 Val Loss: 0.2828 Val Acc: 0.8997

Train and Val complete in 13m 33s

Epoch 14/24

----------

14 Train Loss: 0.2468 Train Acc: 0.9089

14 Val Loss: 0.2765 Val Acc: 0.9016

Train and Val complete in 14m 19s

Epoch 15/24

----------

15 Train Loss: 0.2401 Train Acc: 0.9113

15 Val Loss: 0.2708 Val Acc: 0.9036

Train and Val complete in 15m 7s

Epoch 16/24

----------

16 Train Loss: 0.2341 Train Acc: 0.9136

16 Val Loss: 0.2675 Val Acc: 0.9051

Train and Val complete in 15m 53s

Epoch 17/24

----------

17 Train Loss: 0.2278 Train Acc: 0.9157

17 Val Loss: 0.2640 Val Acc: 0.9060

Train and Val complete in 16m 39s

Epoch 18/24

----------

18 Train Loss: 0.2220 Train Acc: 0.9183

18 Val Loss: 0.2601 Val Acc: 0.9076

Train and Val complete in 17m 29s

Epoch 19/24

----------

19 Train Loss: 0.2162 Train Acc: 0.9201

19 Val Loss: 0.2590 Val Acc: 0.9074

Train and Val complete in 18m 21s

Epoch 20/24

----------

20 Train Loss: 0.2106 Train Acc: 0.9223

20 Val Loss: 0.2550 Val Acc: 0.9097

Train and Val complete in 19m 10s

Epoch 21/24

----------

21 Train Loss: 0.2051 Train Acc: 0.9245

21 Val Loss: 0.2538 Val Acc: 0.9101

Train and Val complete in 19m 57s

Epoch 22/24

----------

22 Train Loss: 0.1999 Train Acc: 0.9260

22 Val Loss: 0.2512 Val Acc: 0.9107

Train and Val complete in 20m 45s

Epoch 23/24

----------

23 Train Loss: 0.1947 Train Acc: 0.9279

23 Val Loss: 0.2507 Val Acc: 0.9108

Train and Val complete in 21m 38s

Epoch 24/24

----------

24 Train Loss: 0.1896 Train Acc: 0.9298

24 Val Loss: 0.2512 Val Acc: 0.9114

Train and Val complete in 22m 32s

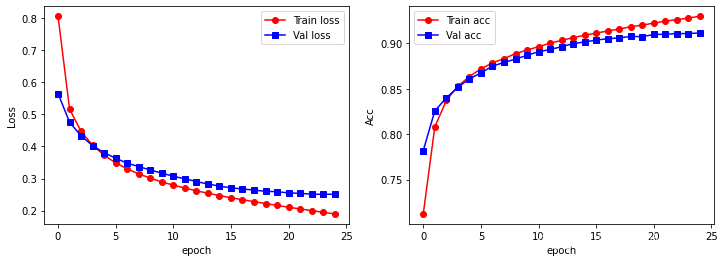

We plot the accuracy and the loss over the course of training as line charts:

# Visualize the training process

plt.figure(figsize = (12, 4))

plt.subplot(1, 2, 1)

plt.plot(train_process.epoch, train_process.train_loss_all, 'ro-', label = 'Train loss')

plt.plot(train_process.epoch, train_process.val_loss_all, 'bs-', label = 'Val loss')

plt.legend()

plt.xlabel('epoch')

plt.ylabel('Loss')

plt.subplot(1, 2, 2)

plt.plot(train_process.epoch, train_process.train_acc_all, 'ro-', label = 'Train acc')

plt.plot(train_process.epoch, train_process.val_acc_all, 'bs-', label = 'Val acc')

plt.legend()

plt.xlabel('epoch')

plt.ylabel('Acc')

plt.show()

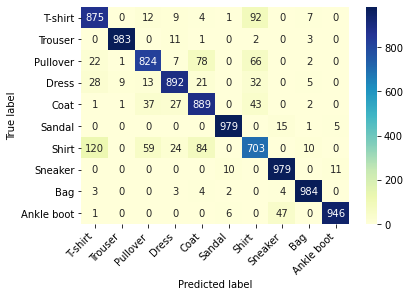

We also measure how well the model generalizes by using the returned model to predict on the test set:

# Predict on the test set and inspect the results
myconvnet.eval()
output = myconvnet(test_data_x)
pre_lab = torch.argmax(output, 1)
acc = accuracy_score(test_data_y, pre_lab)
print(test_data_y)
print(pre_lab)
print('Accuracy on the test set:', acc)

tensor([9, 2, 1, ..., 8, 1, 5])
tensor([9, 2, 1, ..., 8, 1, 5])
Accuracy on the test set: 0.9054

# Compute and visualize the confusion matrix on the test set

conf_mat = confusion_matrix(test_data_y, pre_lab)

df_cm = pd.DataFrame(conf_mat, index = label, columns = label)

heatmap = sns.heatmap(df_cm, annot = True, fmt = 'd', cmap = 'YlGnBu')

heatmap.yaxis.set_ticklabels(heatmap.yaxis.get_ticklabels(), rotation = 0, ha = 'right')

heatmap.xaxis.set_ticklabels(heatmap.xaxis.get_ticklabels(), rotation = 45, ha = 'right')

plt.ylabel('True label')

plt.xlabel('Predicted label')

plt.show()

We find that T-shirt and Shirt are the classes most easily confused: $120$ samples are misclassified as one another.
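Rather than reading the most confused pair off the heatmap, it can also be extracted from the matrix directly. The sketch below is one possible way to do so; the helper `most_confused_pair` is our own, and the small 3-class matrix is made-up toy data, not the result reported above.

```python
def most_confused_pair(conf_mat, labels):
    """Return (label_a, label_b, count) for the pair confused most often in either direction."""
    best = None
    n = len(labels)
    for i in range(n):
        for j in range(i + 1, n):
            # errors in both directions: i predicted as j, and j predicted as i
            count = conf_mat[i][j] + conf_mat[j][i]
            if best is None or count > best[2]:
                best = (labels[i], labels[j], count)
    return best

# Toy confusion matrix (rows: true label, columns: predicted label); values are illustrative
toy_labels = ['T-shirt', 'Trouser', 'Shirt']
toy_conf = [
    [80, 1, 19],
    [2, 97, 1],
    [15, 0, 85],
]

print(most_confused_pair(toy_conf, toy_labels))   # ('T-shirt', 'Shirt', 34)
```

With the real data, one would pass conf_mat.tolist() and label in place of the toy inputs.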