Introduction to Naive Bayes (probabilistic classification)

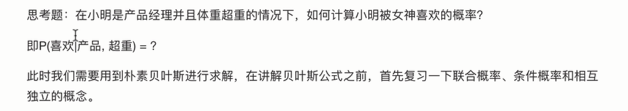

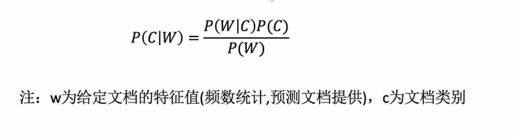

Probability basics

Joint probability, conditional probability, and independence

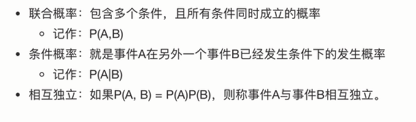
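The sketch below makes the three definitions concrete by estimating them as relative frequencies. The eight (gender, likes_product) records are hypothetical and exist only for illustration. `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

# Hypothetical toy records (not from the original data): (gender, likes_product)
records = [
    ("male", True), ("male", False), ("female", True), ("female", True),
    ("male", True), ("female", False), ("female", True), ("male", False),
]

def prob(event):
    """Estimate P(event) as the fraction of records for which event(record) is True."""
    return Fraction(sum(1 for r in records if event(r)), len(records))

p_female = prob(lambda r: r[0] == "female")
p_like = prob(lambda r: r[1])
# Joint probability P(female, like)
p_joint = prob(lambda r: r[0] == "female" and r[1])
# Conditional probability P(like | female) = P(female, like) / P(female)
p_like_given_female = p_joint / p_female
# A and B are independent iff P(A, B) == P(A) * P(B)
independent = p_joint == p_female * p_like

print(p_joint, p_like_given_female, independent)
```

Here P(female, like) = 3/8 but P(female)·P(like) = 1/2 · 5/8 = 5/16, so in this toy data the two events are not independent.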

Bayes' formula

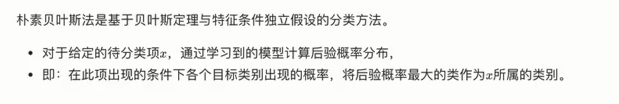
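For reference, here is the standard Bayes formula in LaTeX. C stands for the class and W for the observed features. These symbol names are my choice and may differ from the figure above. The denominator expands by the law of total probability.

```latex
P(C \mid W) = \frac{P(W \mid C)\, P(C)}{P(W)},
\qquad
P(W) = \sum_{c} P(W \mid c)\, P(c)
```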

Introduction

Example

API
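The case study below uses scikit-learn's `sklearn.naive_bayes.MultinomialNB`. Its `alpha` parameter (default 1.0) sets the strength of additive (Laplace) smoothing. Below is a minimal usage sketch. The 4×3 word-count matrix and its labels are made up for illustration.

```python
from sklearn.naive_bayes import MultinomialNB

# Hypothetical word-count matrix: 4 documents x 3 vocabulary words
X = [[2, 1, 0],
     [3, 0, 0],
     [0, 1, 3],
     [0, 0, 2]]
y = ["好评", "好评", "差评", "差评"]

# alpha is the additive smoothing parameter; alpha=1.0 means Laplace smoothing
clf = MultinomialNB(alpha=1.0)
clf.fit(X, y)

pred = clf.predict([[1, 0, 0], [0, 0, 1]])
proba = clf.predict_proba([[1, 0, 0]])  # column order follows clf.classes_
print(clf.classes_, pred, proba)
```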

Sentiment analysis of product reviews

Import dependencies

```python
import pandas as pd
import numpy as np
import jieba  # Chinese word segmentation
import matplotlib.pyplot as plt
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
```

Load the data

```python
# evaluation.csv is GBK-encoded, so the encoding is passed explicitly
data = pd.read_csv("evaluation.csv", encoding="gbk")
data
```

Basic data preprocessing

```python
# Take out the content column for later analysis
content = data["内容"]
content
```

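```python
# Convert the 好评 (positive) / 差评 (negative) ratings to numbers.
# Written to a new "label" column instead of "评论编号" (comment number),
# so an existing ID column is not overwritten.
data.loc[data["评价"] == "好评", "label"] = 1
data.loc[data["评价"] == "差评", "label"] = 0
data
```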
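Here is an alternative sketch for the label conversion, assuming the same `评价` column. `Series.map` with a dictionary converts every label in one vectorized step. Any value missing from the dictionary becomes NaN, which makes typos in the data easy to spot. The three-row DataFrame and the `label` column name are made up for illustration.

```python
import pandas as pd

# Hypothetical stand-in for evaluation.csv, using the same column names
data = pd.DataFrame({"内容": ["质量很好", "太差了", "非常满意"],
                     "评价": ["好评", "差评", "好评"]})

# Map each rating string to an integer label
data["label"] = data["评价"].map({"好评": 1, "差评": 0})
print(data)
```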

```python
# Load the stop-word list (one word per line)
stopwords = []
with open("stopwords.txt", "r", encoding="utf-8") as f:
    lines = f.readlines()
    for tmp in lines:
        line = tmp.strip()
        stopwords.append(line)

# Remove duplicates
stopwords = list(set(stopwords))
print("Stop words:\n", stopwords)
```
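Below is a more compact sketch of the same loading step. A single set comprehension strips whitespace, skips blank lines (the loop above would keep an empty string), and removes duplicates. `io.StringIO` stands in for `stopwords.txt`, and its contents are hypothetical. The result is sorted only so that the output is deterministic.

```python
import io

# Hypothetical stand-in for stopwords.txt: one word per line,
# with a duplicate and a blank line
stopword_file = io.StringIO("的\n了\n的\n\n很\n")

# Strip each line, drop blank lines, deduplicate via a set, then sort
stopwords = sorted({line.strip() for line in stopword_file if line.strip()})
print(stopwords)
```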

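```python
# Convert each comment into a standard format: words separated by commas
comment_list = []
for tmp in content:
    # Segment the sentence into words (precise mode: cut_all=False)
    seg_list = jieba.cut(tmp, cut_all=False)
    seg_str = ",".join(seg_list)
    comment_list.append(seg_str)

comment_list
```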
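One detail is worth checking after segmentation. scikit-learn's `CountVectorizer` tokenizes with its default `token_pattern`, `r"(?u)\b\w\w+\b"`, which only keeps tokens of two or more characters. The sketch below reproduces that regex with Python's `re` on a made-up, already-segmented string, so it runs without jieba. It suggests that single-character Chinese words such as 这 or 很 are silently dropped. If those words matter, one option is to pass `token_pattern=r"(?u)\b\w+\b"` to `CountVectorizer`.

```python
import re

# CountVectorizer's default token_pattern: runs of 2+ word characters
default_pattern = re.compile(r"(?u)\b\w\w+\b")
# A looser pattern that also keeps 1-character words
loose_pattern = re.compile(r"(?u)\b\w+\b")

# A comma-joined string like those built above (example sentence is made up)
seg_str = "这,本书,很,好看"

print(default_pattern.findall(seg_str))
print(loose_pattern.findall(seg_str))
```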

```python
# Count word occurrences per comment, ignoring stop words
con = CountVectorizer(stop_words=stopwords)
X = con.fit_transform(comment_list)  # sparse document-term matrix
X.toarray()
```
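Here is a simplified pure-Python sketch of what `fit_transform` produces. It builds a vocabulary sorted by token, with one column per word, and one row of counts per document. The two comments and the stop-word set are hypothetical. The sketch also skips `CountVectorizer`'s lowercasing and `token_pattern` handling.

```python
from collections import Counter

# Two made-up, already-segmented comments (tokens separated by commas)
docs = ["质量,不错,质量", "物流,很,不错"]
stopwords = {"很"}  # hypothetical stop-word set

# Split each document into tokens and drop stop words
tokenized = [[w for w in d.split(",") if w not in stopwords] for d in docs]
# Sorted vocabulary: one column per distinct token
vocabulary = sorted({w for doc in tokenized for w in doc})
# One row per document: how often each vocabulary word occurs in it
matrix = [[counts[w] for w in vocabulary] for counts in map(Counter, tokenized)]

print(vocabulary)
print(matrix)
```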

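```python
# Prepare the training and test sets: the first 10 rows train, the rest test.
# The string ratings in 评价 are used directly as class labels.
x_train = X.toarray()[:10, :]
y_train = data["评价"][:10]
print("Training features:\n", x_train)
print("Training labels:\n", y_train)

x_test = X.toarray()[10:, :]
y_test = data["评价"][10:]
print("Test features:\n", x_test)
print("Test labels:\n", y_test)
```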
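The split above is positional: the first 10 rows train and the rest test. If `evaluation.csv` happens to be ordered by rating, one class could be missing from the training set. Below is a minimal sketch of a shuffled split with NumPy. `shuffled_split` is a hypothetical helper, and the 13-row data (all positive labels first) is made up.

```python
import numpy as np

def shuffled_split(X, y, n_train, seed=0):
    """Hypothetical helper: permute row indices, then take the first n_train rows for training."""
    X, y = np.asarray(X), np.asarray(y)
    idx = np.random.default_rng(seed).permutation(len(y))
    train, test = idx[:n_train], idx[n_train:]
    # Index X and y with the same permutation so rows and labels stay aligned
    return X[train], X[test], y[train], y[test]

# Made-up data sorted by label, as a sorted CSV might be
X = np.arange(26).reshape(13, 2)
y = np.array(["好评"] * 7 + ["差评"] * 6)
x_train, x_test, y_train, y_test = shuffled_split(X, y, n_train=10)
print(y_train, y_test)
```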

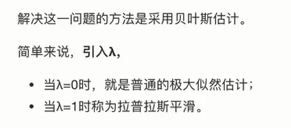

Model training

```python
# alpha=1 applies Laplace (add-one) smoothing
mb = MultinomialNB(alpha=1)
mb.fit(x_train, y_train)
y_pre = mb.predict(x_test)
```
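To show roughly what `fit` and `predict` compute, here is a from-scratch sketch of multinomial naive Bayes in NumPy. `TinyMultinomialNB` is a hypothetical illustrative class, not scikit-learn's implementation. It does use the same smoothed estimate (N_ci + α) / (N_c + α·n), where n is the vocabulary size. The 4×3 count matrix is made up.

```python
import numpy as np

class TinyMultinomialNB:
    """Hypothetical minimal multinomial naive Bayes, for illustration only."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha  # additive smoothing, like MultinomialNB(alpha=...)

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y)
        self.classes_ = np.unique(y)
        # log P(c): relative frequency of each class in the training labels
        self.class_log_prior_ = np.log([np.mean(y == c) for c in self.classes_])
        # Total count of each word per class, shape (n_classes, n_features)
        counts = np.array([X[y == c].sum(axis=0) for c in self.classes_])
        smoothed = counts + self.alpha
        # log P(w_i | c) = log((N_ci + alpha) / (N_c + alpha * n_features))
        self.feature_log_prob_ = np.log(smoothed / smoothed.sum(axis=1, keepdims=True))
        return self

    def predict(self, X):
        # Score = log P(c) + sum_i x_i * log P(w_i | c); P(W) is shared by all classes, so it is dropped
        scores = np.asarray(X, dtype=float) @ self.feature_log_prob_.T + self.class_log_prior_
        return self.classes_[np.argmax(scores, axis=1)]

X = [[2, 1, 0], [3, 0, 0], [0, 1, 3], [0, 0, 2]]
y = ["好评", "好评", "差评", "差评"]
model = TinyMultinomialNB(alpha=1.0).fit(X, y)
print(model.predict([[1, 0, 0], [0, 0, 1]]))
```

Working in log space turns the product of many small probabilities into a sum, which avoids floating-point underflow on long documents.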

Model evaluation

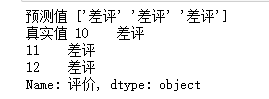

```python
print("Predicted:", y_pre)
print("Actual:", y_test)
# score() returns the mean accuracy on the test set
mb.score(x_test, y_test)
```
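For a classifier, `score` reports mean accuracy, which is the fraction of test samples whose predicted label equals the true label. Here is a plain-Python sketch of that computation. `accuracy` is a hypothetical helper and the labels are made up.

```python
def accuracy(y_pred, y_true):
    """Fraction of positions where the prediction equals the true label."""
    y_pred, y_true = list(y_pred), list(y_true)
    if len(y_pred) != len(y_true):
        raise ValueError("y_pred and y_true must have the same length")
    return sum(p == t for p, t in zip(y_pred, y_true)) / len(y_true)

# 2 of the 3 made-up predictions match
print(accuracy(["好评", "差评", "好评"], ["好评", "好评", "好评"]))
```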

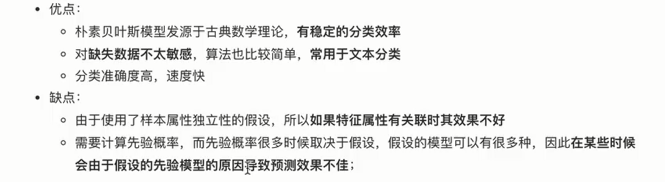

Pros and cons of naive Bayes

Naive Bayes summary

How NB works
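Combining Bayes' formula with the assumption that the features W_1, ..., W_n are conditionally independent given the class gives the standard decision rule. P(W) is the same for every class, so it can be dropped. In practice, implementations usually compare sums of logarithms to avoid numerical underflow.

```latex
\hat{c} = \arg\max_{c}\; P(c) \prod_{i=1}^{n} P(W_i \mid c)
        = \arg\max_{c}\; \Bigl[\log P(c) + \sum_{i=1}^{n} \log P(W_i \mid c)\Bigr]
```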

What is "naive" about naive Bayes?

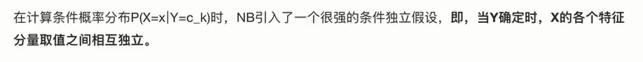

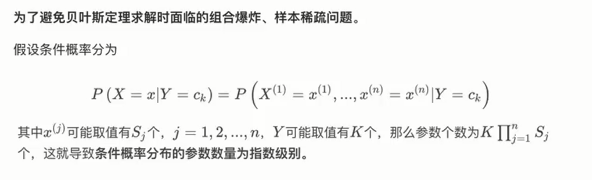
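Stated as a formula, the "naive" part is the conditional independence assumption: given the class C, the joint likelihood of the features factorizes into a product of per-feature terms.

```latex
P(W_1, W_2, \dots, W_n \mid C) = \prod_{i=1}^{n} P(W_i \mid C)
```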

Why introduce the conditional independence assumption?
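A parameter count shows the motivation. Suppose there are K classes and n discrete features, each taking k values. Without the assumption, every combination of feature values needs its own probability per class, giving k^n − 1 free parameters because they sum to 1. With the assumption, each feature gets its own distribution, giving k − 1 free parameters per feature. Most value combinations would also never appear in a finite training set, so the full joint cannot be estimated reliably anyway.

```latex
\begin{aligned}
\text{full joint } P(W_1,\dots,W_n \mid C) &: \; K\,(k^{n}-1) \text{ parameters} \\
\text{with conditional independence} &: \; K\,n\,(k-1) \text{ parameters} \\
K=2,\ n=10,\ k=2 &: \; 2\,(2^{10}-1) = 2046 \ \text{vs.}\ 2 \cdot 10 \cdot 1 = 20
\end{aligned}
```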

What should be done when an estimated conditional probability P(X|Y) is 0?
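A common remedy is additive (Laplace) smoothing, which is what `alpha=1` in `MultinomialNB(alpha=1)` does. The smoothed estimate is P(w | c) = (N_wc + α) / (N_c + α·n). Here N_wc is the count of word w in class c, N_c is the total word count of class c, and n is the vocabulary size. The sketch below uses made-up counts. `smoothed_prob` is a hypothetical helper.

```python
from fractions import Fraction

def smoothed_prob(count_wc, count_c, n_features, alpha=1):
    """Additive estimate of P(w | c): (N_wc + alpha) / (N_c + alpha * n_features)."""
    return Fraction(count_wc + alpha, count_c + alpha * n_features)

# Made-up class: 20 word occurrences over a 5-word vocabulary; the last word never occurs
word_counts = [8, 6, 4, 2, 0]

print(smoothed_prob(0, 20, 5, alpha=0))  # → 0, which would zero out the whole product
print(smoothed_prob(0, 20, 5, alpha=1))  # → 1/25
# The smoothed estimates still form a probability distribution
print(sum(smoothed_prob(c, 20, 5) for c in word_counts))  # → 1
```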

Why does naive Bayes still perform well even though the attribute independence assumption rarely holds in practice?

How does naive Bayes differ from LR (logistic regression)?
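One way to compare the two is to consider two classes. For multinomial naive Bayes, the log-odds is linear in the word counts x_i, where θ_ci = P(W_i | c). The multinomial coefficient depends only on x and cancels. This is the same functional form as logistic regression. The difference lies in how the parameters are obtained. NB is generative: it estimates P(c) and θ by counting, relying on the independence assumption. LR is discriminative: it fits w and b directly by maximizing the conditional likelihood P(y | x), typically with iterative optimization.

```latex
\underbrace{\log\frac{P(c_1 \mid \mathbf{x})}{P(c_0 \mid \mathbf{x})}
  = \log\frac{P(c_1)}{P(c_0)} + \sum_{i=1}^{n} x_i \log\frac{\theta_{1i}}{\theta_{0i}}}_{\text{multinomial NB}}
\qquad
\underbrace{\log\frac{P(y=1 \mid \mathbf{x})}{P(y=0 \mid \mathbf{x})} = b + \mathbf{w}^{\top}\mathbf{x}}_{\text{logistic regression}}
```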