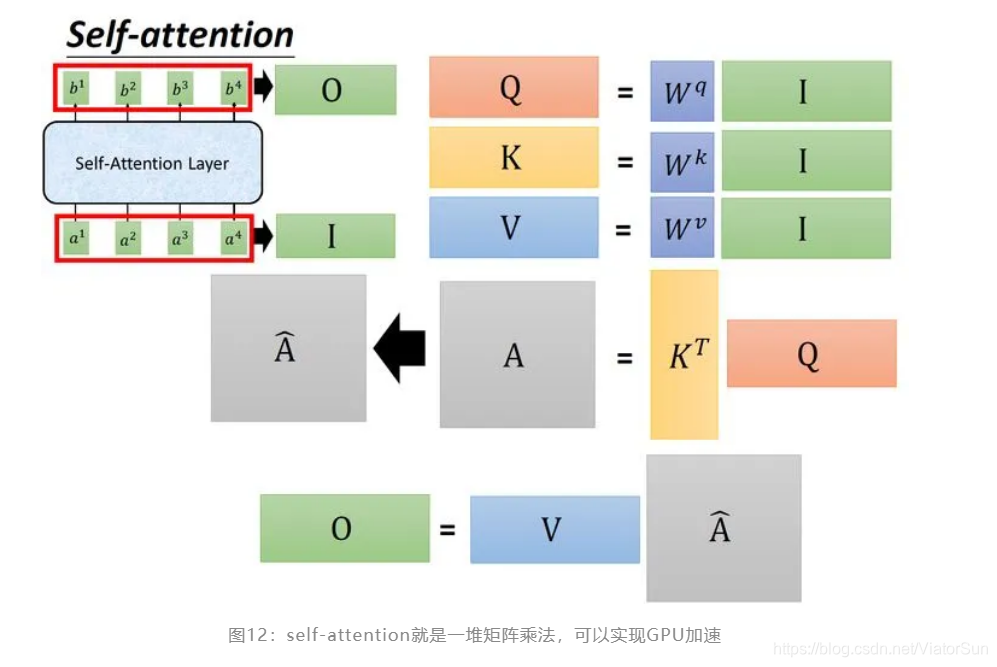

$$\mathrm{Attention}(Q,K,V)=\mathrm{Softmax}\left(\frac{Q\cdot K^T}{\sqrt d}\right)\cdot V$$
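The formula can be sketched for a single head in a few lines. This is a minimal illustration, not the author's implementation: it uses NumPy rather than PyTorch to stay dependency-light, and the helper names `softmax` and `attention`, as well as the sizes (4 tokens, 8 features), are assumptions chosen for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row-wise max before exponentiating for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    # q, k, v: arrays of shape (n, d). Returns (output, weights).
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)        # (n, n) scaled similarity matrix
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ v, weights          # output has shape (n, d)

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((4, 8)) for _ in range(3))  # hypothetical sizes
out, weights = attention(q, k, v)
print(out.shape, weights.shape)
```

Each row of `weights` is a probability distribution over the tokens, so every output row is a weighted average of the rows of `v`.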

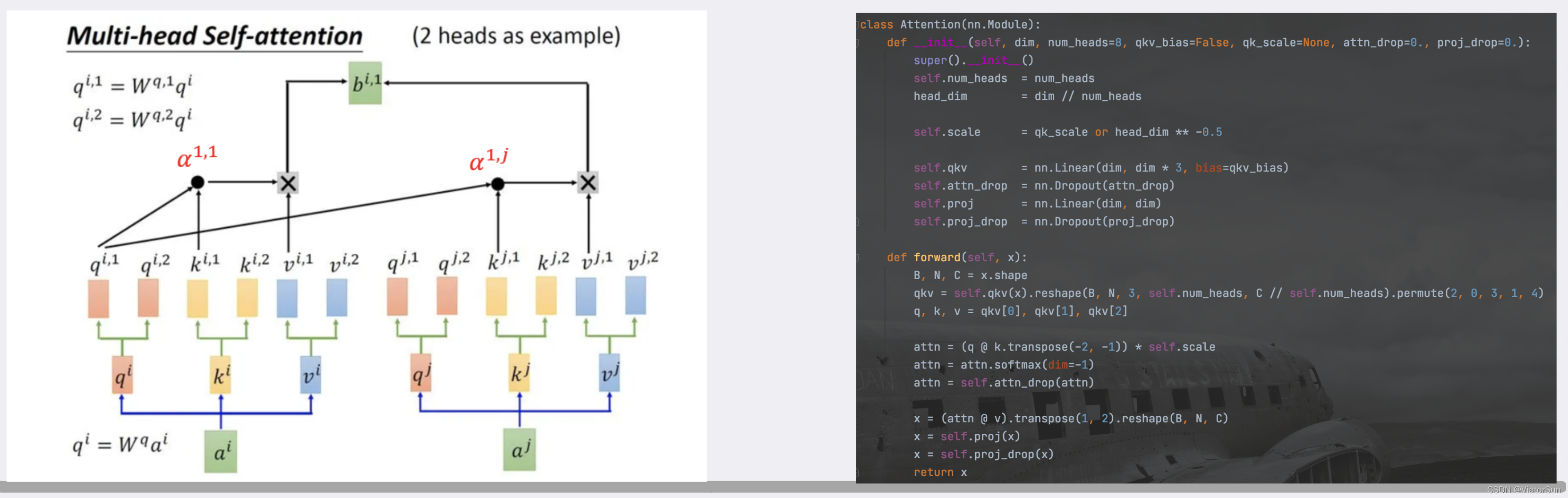

```python
from torch import nn, einsum
from einops import rearrange


class Attention(nn.Module):
    def __init__(self, dim, heads=8, head_dim=64, dropout=0.):
        super().__init__()
        inner_dim = head_dim * heads  # width of the intermediate projection
        # The output projection is skipped only for a single head with head_dim == dim
        project_out = not (heads == 1 and head_dim == dim)

        self.heads = heads
        self.scale = head_dim ** -0.5  # 1 / sqrt(d)
        self.atten = nn.Softmax(dim=-1)
        self.to_qkv = nn.Linear(dim, inner_dim * 3, bias=False)
        self.to_out = nn.Sequential(
            nn.Linear(inner_dim, dim),
            nn.Dropout(dropout),
        ) if project_out else nn.Identity()

    def forward(self, LayerNorX):
        b, n, _, h = *LayerNorX.shape, self.heads
        qkv_ = self.to_qkv(LayerNorX)
        qkv = qkv_.chunk(3, dim=-1)  # chunk splits the tensor along the given dimension
        q, k, v = map(lambda t: rearrange(t, 'b n (h d) -> b h n d', h=h), qkv)
        dots = einsum('b h i d, b h j d -> b h i j', q, k) * self.scale
        atten = self.atten(dots)
        out = einsum('b h i j, b h j d -> b h i d', atten, v)
        out = rearrange(out, 'b h n d -> b n (h d)')
        out = self.to_out(out)
        return out
```

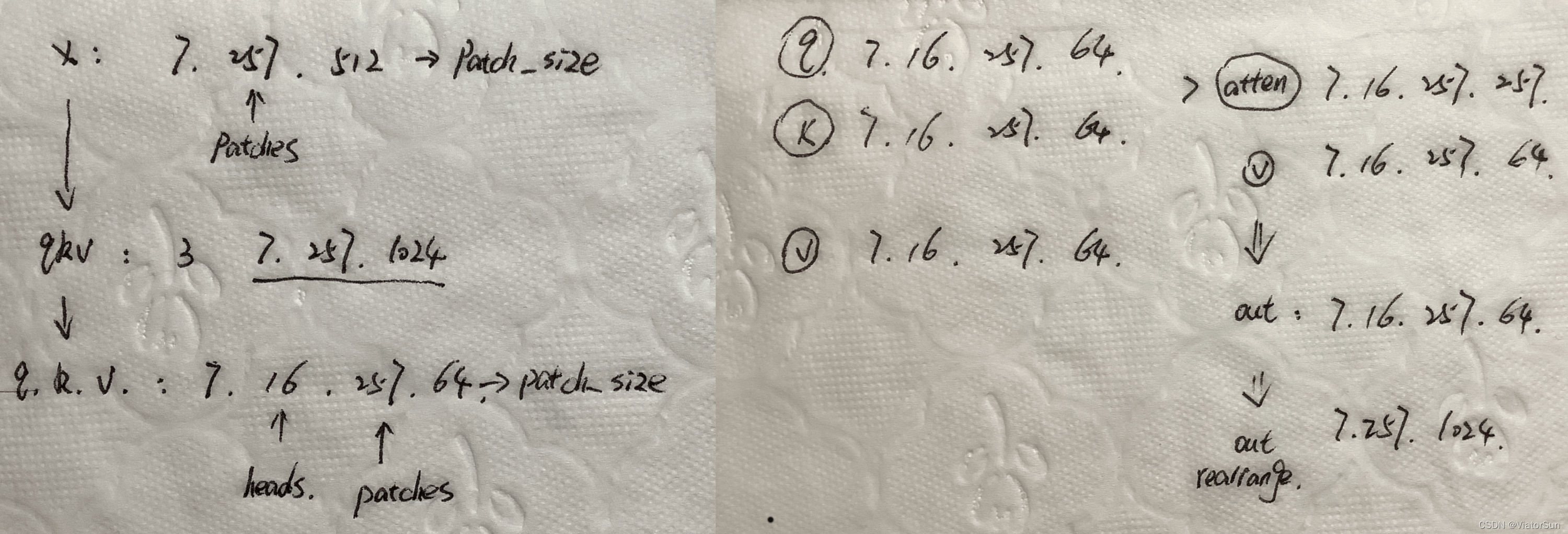

Running with ViT data, the input to Attention has shape $[7, 257, 512]$:

> 7: batch size

> 257: number of patches, plus the class token

> 512: the size of each patch vector
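To make these dimensions concrete, the sketch below traces the tensor shapes through the steps of `forward` for a $[7, 257, 512]$ input. It is an illustration under assumptions: NumPy stands in for PyTorch, `w_qkv` is a random stand-in for the `to_qkv` weights, `split_heads` is a hypothetical helper that mirrors the `rearrange` pattern, and the final `to_out` projection is omitted because it does not change the shape here.

```python
import numpy as np

b, n, dim = 7, 257, 512          # batch, tokens, per-token size (from the text)
heads, head_dim = 8, 64          # defaults of Attention.__init__
inner_dim = heads * head_dim     # 512

rng = np.random.default_rng(0)
x = rng.standard_normal((b, n, dim)).astype(np.float32)
# Random stand-in for the to_qkv weight matrix (assumption, not trained weights).
w_qkv = (rng.standard_normal((dim, inner_dim * 3)) * 0.02).astype(np.float32)

qkv = x @ w_qkv                              # (7, 257, 1536)
q, k, v = np.split(qkv, 3, axis=-1)          # three arrays of shape (7, 257, 512)

def split_heads(t):
    # Same layout as rearrange(t, 'b n (h d) -> b h n d', h=heads).
    return t.reshape(b, n, heads, head_dim).transpose(0, 2, 1, 3)

q, k, v = map(split_heads, (q, k, v))        # each (7, 8, 257, 64)

dots = np.einsum('bhid,bhjd->bhij', q, k) * head_dim ** -0.5   # (7, 8, 257, 257)
dots = dots - dots.max(axis=-1, keepdims=True)                 # stabilise softmax
atten = np.exp(dots)
atten = atten / atten.sum(axis=-1, keepdims=True)

out = np.einsum('bhij,bhjd->bhid', atten, v)                   # (7, 8, 257, 64)
out = out.transpose(0, 2, 1, 3).reshape(b, n, inner_dim)       # (7, 257, 512)

for name, t in [('qkv', qkv), ('q', q), ('atten', atten), ('out', out)]:
    print(name, t.shape)
```

The attention matrix is 257 by 257 per head, so every token (including the class token) attends to every other token, and the output returns to the input shape.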

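The last two dimensions of Q/K/V are (patches, patch_size).

Unrolling them into a matrix reproduces the "original image", so $K^T \times Q$ and $Q^T \times K$ carry the same information: each is the transpose of the other.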
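The relation between $K^T \times Q$ and $Q^T \times K$ can be checked numerically. The sketch below is a small NumPy illustration with hypothetical sizes (5 patches, 3 features), assuming rows are patches as in the (patches, patch_size) layout. It confirms that the two products are transposes of each other, and that in this layout the patch-by-patch score matrix used by the code is $Q \times K^T$.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 5, 3                        # hypothetical sizes: 5 patches, 3 features each
Q = rng.standard_normal((n, d))    # rows are patches
K = rng.standard_normal((n, d))

# General identity: (K^T Q)^T = Q^T K, so both products hold the same numbers.
kt_q = K.T @ Q                     # shape (d, d)
qt_k = Q.T @ K                     # shape (d, d)
transpose_related = np.allclose(kt_q, qt_k.T)

# With rows as patches, the patch-by-patch score matrix is Q K^T, shape (n, n);
# swapping the roles of Q and K transposes it.
scores = Q @ K.T
scores_swapped = K @ Q.T
swap_is_transpose = np.allclose(scores, scores_swapped.T)

print(transpose_related, swap_is_transpose, scores.shape)
```

Because swapping the operands only transposes the result, the softmax axis has to follow the chosen convention: it should normalise over the key index, which is the last axis of `scores` here.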

Multi-head

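For multi-head attention, the approach is: first expand Q/K/V with a linear layer (or some other method), then split the result into heads with `rearrange`.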
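The head split can be written with plain reshape and transpose operations. The sketch below is a NumPy illustration under assumptions: `split_heads` and `merge_heads` are hypothetical helpers that mirror the einops patterns `'b n (h d) -> b h n d'` and `'b h n d -> b n (h d)'`, and the sizes are small made-up values so the result is easy to inspect.

```python
import numpy as np

b, n, heads, head_dim = 2, 5, 4, 3   # hypothetical small sizes
t = np.arange(b * n * heads * head_dim, dtype=np.float32)
t = t.reshape(b, n, heads * head_dim)            # (2, 5, 12): already expanded

def split_heads(t, h):
    # (b, n, h*d) -> (b, h, n, d); h is the outer factor of the last axis.
    b, n, hd = t.shape
    return t.reshape(b, n, h, hd // h).transpose(0, 2, 1, 3)

def merge_heads(t):
    # (b, h, n, d) -> (b, n, h*d); inverse of split_heads.
    b, h, n, d = t.shape
    return t.transpose(0, 2, 1, 3).reshape(b, n, h * d)

per_head = split_heads(t, heads)                 # (2, 4, 5, 3)

# Head k sees the contiguous feature slice k*d:(k+1)*d of every token.
head_1_matches_slice = np.array_equal(per_head[:, 1], t[:, :, 3:6])
round_trip_ok = np.array_equal(merge_heads(per_head), t)
print(per_head.shape, head_1_matches_slice, round_trip_ok)
```

No data is mixed between heads by the split itself: each head simply receives its own slice of the expanded features, and merging restores the original layout.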