[Paper Reading Notes] 2019_SIGIR_An Efficient Adaptive Transfer Neural Network for Social-aware Recommendation

Paper download link: https://doi.org/10.1145/3331184.3331192

Venue: SIGIR

Publication year: 2019

Authors and affiliations:

- Chong Chen1, Min Zhang1*, Chenyang Wang1, Weizhi Ma1, Minming Li2, Yiqun Liu1 and Shaoping Ma1

- 1Department of Computer Science and Technology, Institute for Artificial Intelligence, Beijing National Research Center for Information Science and Technology, Tsinghua University

- 2Department of Computer Science, City University of Hong Kong

- cc17@mails.tsinghua.edu.cn, z-m@tsinghua.edu.cn

Datasets: as introduced in the paper

- Ciao http://www.jiliang.xyz/trust.html

- Epinion http://alchemy.cs.washington.edu/data/epinions/

- Flixster http://www.cs.ubc.ca/jamalim/datasets/

Code: https://github.com/chenchongthu/EATNN

Other:

Articles written by others

Summary of the key innovations: transfer learning + attention + whole-data based strategy. The model is built on MF, and the drawback is equally clear: it is not suitable for models with non-linear prediction layers.

- (1) In this paper, we propose an Efficient Adaptive Transfer Neural Network (EATNN) for social-aware recommendation.

- The two key ingredients of the proposed model are:

- (1) attention-based adaptive transfer learning and

- (2) efficient whole-data based optimization.

- (2) To adaptively capture the interplay between item and social domain for each user, we introduce attention mechanisms [1, 3] to automatically estimate the difference of mutual influence between item domain and social domain. The key idea is to learn two attention-based kernels to model the weights of the outputs coming from different domains.

- (3) Besides, we propose an efficient optimization method to learn from the whole training set without negative sampling, and extend it to support multi-task learning.

- (4) To ensure training efficiency, we accelerate the optimization method by reformulating a commonly used square loss function with rigorous mathematical reasoning. By leveraging sparsity in implicit data, we succeed in updating each parameter in a manageable time complexity without sampling.

- All the datasets are preprocessed to make sure that all items have at least five interactions.

ABSTRACT

- (1) Many previous studies attempt to utilize information from other domains to achieve better recommendation performance. Recently, social information has been shown effective in improving recommendation results with transfer learning frameworks, and the transfer part helps to learn users’ preferences from both item domain and social domain.

- However, two vital issues have not been well-considered in existing methods:

- (1) Usually, a static transfer scheme is adopted to share a user’s common preference between item and social domains, which is not robust in real life where the degrees of sharing and information richness vary across users. Hence a non-personalized transfer scheme may be insufficient and unsuccessful.

- (2) Most previous neural recommendation methods rely on negative sampling in training to increase computational efficiency, which makes them highly sensitive to sampling strategies and hence difficult to achieve optimal results in practical applications.

- (2) To address the above problems, we propose an Efficient Adaptive Transfer Neural Network (EATNN).

- By introducing attention mechanisms, the proposed model automatically assigns a personalized transfer scheme for each user.

- Moreover, we devise an efficient optimization method to learn from the whole training set without negative sampling, and further extend it to support multi-task learning.

- Extensive experiments on three real-world public datasets indicate that our EATNN method consistently outperforms the state-of-the-art methods on Top-K recommendation task, especially for cold-start users who have few item interactions.

- Remarkably, EATNN shows significant advantages in training efficiency, which makes it more practical to be applied in real E-commerce scenarios. The code is available at https://github.com/chenchongthu/EATNN.

CCS CONCEPTS

• Information systems → Recommender systems; • Computing methodologies → Neural networks;

KEYWORDS

Recommender Systems, Adaptive Transfer Learning, Whole-data based Learning, Social Connections, Implicit Feedback

1 INTRODUCTION

- (1) Recommender systems provide essential web services on the Internet to alleviate the information overload problem. Recently, many E-commerce sites, such as Ciao, Epinion, and Flixster, have become popular social platforms in which users can follow other users, discuss, and select items. The social connections in these applications reflect users’ interests or profiles, which are helpful for user modeling and personalized recommendation.

- (2) Traditional Collaborative Filtering (CF) methods [12–14, 28] mainly make use of users’ historical records such as ratings, clicks, and purchases. Although they have shown good results, the performance will degrade significantly when the records matrix is very sparse.

- To address the lack of data, there has been a trend to augment user-item interactions with users’ social connections for recommendation [4, 18, 20, 27, 43].

- Generally, a user’s preferences can not only be inferred from the items he/she bought and clicked, but also be affected by his/her social connections. As a result, social-aware methods can utilize a much larger volume of data to tackle the data sparsity issue, and further improve the performance of recommender systems [4].

- (3) Many social-aware recommendation methods are based on transfer learning [15, 18, 25], as it is a suitable choice for the coordination of user-item interactions and user-user connections. The key concept behind transfer learning is to transfer the shared knowledge from one domain to other domains.

- However, most existing methods simply transfer a fixed proportion of common knowledge between item domain and social domain for each user [15, 18, 31], which is not robust in real life due to:

- (1) the information richness of the two domains usually varies for different users;

- (2) the degrees of the preference sharing between the two domains vary for different users. As shown in Figure 1, user B has similar preferences in item domain and social domain, while A only shares very few preferences between the two domains. Therefore, a non-personalized transfer is insufficient and unsuccessful. To better characterize users’ preferences, recommender systems require adaptive transfer schemes for different users.

- (4) In addition, since implicit data is often a natural byproduct of users’ behavior (e.g., browsing histories, click logs), user interactions that can be observed in both item and social domains are rather limited, and non-observed instances, which are taken as negative examples in model learning, are of a much larger scale.

- To increase computational efficiency, existing neural recommendation methods [4, 10, 33, 34, 40] mainly rely on negative sampling for optimization, which is, however, highly sensitive to the sampling distribution and the number of negative samples [10].

- Moreover, social-aware recommendation usually needs to optimize the loss function in both item and social domains, which is a multi-task problem. Hence for the social-aware problem, it is more difficult for a sampling-based strategy to converge to the optimum performance.

- By contrast, whole-data based strategy computes the gradient on all training data. Thus it can easily converge to a better optimum [12, 41]. Unfortunately, the difficulty in applying whole-data based strategy lies in the expensive computational cost for large-scale data, which makes it less applicable to neural models.

- (5) Motivated by the above observations, in this paper, we propose an Efficient Adaptive Transfer Neural Network (EATNN) for social-aware recommendation.

- To adaptively capture the interplay between item and social domain for each user, we introduce attention mechanisms [1, 3] to automatically estimate the difference of mutual influence between item domain and social domain. The key idea is to learn two attention-based kernels to model the weights of the outputs coming from different domains.

- Besides, we propose an efficient optimization method to learn from the whole training set without negative sampling, and extend it to support multi-task learning.

- To ensure training efficiency, we accelerate the optimization method by reformulating a commonly used square loss function with rigorous mathematical reasoning. By leveraging sparsity in implicit data, we succeed in updating each parameter in a manageable time complexity without sampling.

- (6) To evaluate the recommendation performance and training efficiency of our model, we apply EATNN on three real-world datasets with extensive experiments. The results indicate that our model consistently outperforms the state-of-the-art methods on Top-K personalized recommendation task, especially for cold-start users who have few item interactions.

- Furthermore, EATNN also shows significant advantages in training efficiency, which makes it more practical in real E-commerce scenarios. The main contributions of this work are as follows:

- (1) We propose a novel Efficient Adaptive Transfer Neural Network for social-aware recommendation. By introducing attention mechanisms, the proposed model can adaptively capture the interplay between item domain and social domain for each user.

- (2) We devise an efficient optimization method to avoid negative sampling and achieve more accurate performance. The proposed method is not only suitable for learning from implicit data that only contains positive examples, but also capable of jointly learning multi-task problems.

- (3) Extensive experiments are conducted on three benchmark datasets. The results show that EATNN consistently and significantly outperforms the state-of-the-art models in terms of both recommendation performance and training efficiency.

2 RELATED WORK

2.1 Social-aware Recommendation

- (1) Social-aware recommendation aims at leveraging users’ social connections to improve the performance of recommender systems. It works based on the assumption that users tend to share similar preferences with their friends. In previous work,

- Krohn et al. [15] proposed a Multi-Relational Bayesian Personalized Ranking (MR-BPR) model based on Collective Matrix Factorization (CMF) [31], which predicts both user feedback on items and on social connections.

- Zhao et al. [43] assumed that users are more likely to have seen items consumed by their friends, and extended BPR [28] by changing the negative sampling strategy (SBPR).

- Recently, the authors in [18] proposed to consider the visibility of both items and social relationships, and utilized transfer learning to combine the item and social domains for recommendation (TranSIV).

- (2) Since deep learning has yielded great success in many fields, some researchers also tried to explore different neural network structures for social-aware recommendation task. For instance,

- Sun et al. [33] presented an attentive recurrent network for temporal social-aware recommendation (ARSE).

- Wang et al. [37] enhanced NCF method [10] by combining it with the graph regularization technique to model the cross-domain social relations.

- Recently, Chen et al. [4] proposed a Social Attentional Memory Network (SAMN), which models both aspect- and friend-level differences in social-aware recommendation.

- However, existing neural methods [4, 10, 33, 37] mainly rely on negative sampling for model optimization, which may limit the performance of recommender systems.

- Efficient optimization from all training data without sampling is one of the main concerns of this paper.

2.2 Transfer Learning

- (1) Transfer learning has been adopted in various systems for cross-domain tasks [2, 22, 29, 45]. The key idea of transfer learning is to transfer the common knowledge from the source domain to the target domain. As previously noted [44], social media contains multi-domain information, which provides a bridge for transfer learning. In previous studies,

- Roy et al. [29] utilized transfer learning to deal with multi-relational data representation in social networks, but it did not specifically focus on recommendation tasks.

- Eaton et al. [9] pointed out that parts of the source domain data are inconsistent with the target domain observations, which may affect the construction of the model in the target domain. Based on that, some researchers [18, 19] designed selective latent factor transfer models to better capture the consistency and heterogeneity across domains. However, in these works, the transfer ratio needs to be properly selected through human effort and cannot change dynamically in different scenarios.

- (2) There are also some studies considering the adaptation issue in transfer learning. However, existing methods mainly focus on task adaptation or domain adaptation.

- E.g., based on Gaussian Processes, Cao et al. [2] proposed to adapt the transfer-all and transfer-none schemes by estimating the similarity between a source and a target task.

- Zhang et al. [42] studied domain adaptive transfer learning which assumed that the pre-training and test sets have different distributions.

- The authors in [22] designed a method for completely heterogeneous transfer learning to determine different transferability of source knowledge.

- Our work differs from the above studies as the designed model is not limited to task adaptation or domain adaptation.

- Instead, we propose to adapt each user’s two kinds of information (item interactions and social connections) with a finer granularity, which allows the shared knowledge of each user to be transferred in a personalized manner.

2.3 Model Learning in Recommendation

- (1) There are two strategies to optimize a recommendation model with implicit feedback:

- (1) negative sampling strategy [4, 10, 28] that samples negative instances from missing data;

- (2) whole-data based strategy [7, 12, 17, 18] that sees all the missing data as negative.

- As shown in previous studies [11, 41], both strategies have pros and cons:

- negative sampling strategy is more efficient by reducing negative examples in training, but may decrease the model’s performance;

- whole-data based strategy leverages the full data with a potentially better coverage, but inefficiency can be an issue.

- Existing neural recommendation methods [4, 10, 33, 40] mainly rely on negative sampling for efficient optimization. To retain the model’s fidelity, we persist in whole-data based learning in this paper, and develop a fast optimization method to address the inefficiency issue.

- (2) Some efforts have been devoted to resolving the inefficiency issue of whole-data based strategy. Most of them are based on Alternating Least Squares (ALS) [12].

- E.g., Pilaszy et al. [26] described an approximate solution of ALS.

- He et al. [11] proposed an efficient element-wise ALS with non-uniform missing data.

- Unfortunately, ALS based methods are not applicable to neural models which use Gradient Descent (GD) for optimization.

- Recently, some researchers [39, 41] studied fast Batch Gradient Descent (BGD) methods to learn from all training examples.

- However, they only focus on optimizing traditional non-neural models.

- Distinct from previous studies, we derive a new whole-data based loss function, which is, to the best of our knowledge, the first efficient whole-data based learning strategy tailored for neural recommendation models. The loss function is further extended to jointly learn both item and social domains in our model.

3 PRELIMINARY

We first introduce the key notations used in this work and the whole-data based MF method for learning from implicit data.

3.1 Notations

- (1) Table 1 depicts the notations and key concepts.

- Suppose we have $M$ users and $N$ items in the dataset,

- and we use the index $u$ to denote a user, $t$ to denote another user,

- and $v$ to denote an item.

- There are two kinds of observed interactions:

- user-item interactions $R = [R_{uv}]_{M \times N} \in \{0,1\}$ indicate whether $u$ has purchased or clicked on item $v$,

- and user-user social interactions $X = [X_{ut}]_{M \times M} \in \{0,1\}$ indicate whether $u$ trusts (or is a friend of) $t$ in the social network.

- $\mathcal{R}$ and $\mathcal{X}$ denote the sets of interactions whose values are non-zero for the item domain and the social domain, respectively.

- (2) For user $u$, $u^C$ represents the latent factors shared between the item and social domains;

- $u^I$ and $u^S$ represent user latent factors corresponding to the item and social domains, respectively.

- Vector $q_v$ denotes the latent vector of $v$,

- and $g_t$ denotes the latent vector of $t$ as a friend.

- $\alpha$ and $\beta$ are the parameters for adaptive transfer learning.

- Vectors $p^I_u$ and $p^S_u$ are the representations of user $u$ for item domain and social domain after transferring, respectively.

- More details are introduced in Section 4.

3.2 MF Method for Implicit Feedback

- (1) Matrix Factorization (MF) maps both users and items into a joint latent feature space of dimension $d$ such that interactions are modeled as inner products in that space. Mathematically, each entry $R_{uv}$ of $R$ is estimated as:

- (2) The item recommendation problem is formulated as estimating the scoring function $R_{uv}$, which is used to rank items.

- (3) For implicit data, the observed interactions are rather limited, and non-observed examples are of a much larger scale. To learn model parameters, Hu et al. [12] introduced a weighted regression function, which associates a confidence with each prediction in the implicit feedback matrix $R$:

- where $c_{uv}$ denotes the weight of entry $R_{uv}$. Note that in implicit feedback learning, missing entries are usually assigned a zero $R_{uv}$ value but a non-zero $c_{uv}$ weight.

- (4) As can be seen, the time complexity of computing the loss in Eq.(2) is $O(|U||V|d)$. Clearly, the straightforward way to calculate gradients is generally infeasible, because $|U||V|$ can easily reach billion level or even higher in real life.
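The cost argument above can be made concrete with a small sketch. It assumes the standard forms that the surrounding text describes, namely an inner-product score $\hat{R}_{uv} = p_u^T q_v$ and a weighted squared loss $\sum_{u}\sum_{v} c_{uv}(R_{uv} - \hat{R}_{uv})^2$; the names `predict`, `weighted_loss`, `P`, `Q`, `C` and the toy numbers are illustrative and do not come from the paper.

```python
def predict(p_u, q_v):
    """Inner-product MF score for one (user, item) pair: O(d) work."""
    return sum(a * b for a, b in zip(p_u, q_v))


def weighted_loss(P, Q, R, C):
    """Naive weighted squared loss over every user-item cell.

    Visits all |U| * |V| cells and spends O(d) on each one, which is
    where the O(|U||V|d) cost comes from.
    """
    total = 0.0
    for u, p_u in enumerate(P):
        for v, q_v in enumerate(Q):
            err = R[u][v] - predict(p_u, q_v)
            total += C[u][v] * err * err
    return total


# Toy data (made up): 2 users, 3 items, d = 2.
P = [[1.0, 0.0], [0.5, 0.5]]               # user latent vectors
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # item latent vectors
R = [[1, 0, 0], [0, 1, 1]]                 # implicit feedback
C = [[1.0, 0.1, 0.1], [0.1, 1.0, 1.0]]     # missing entries weighted 0.1
loss = weighted_loss(P, Q, R, C)
```

Even in this toy case the loop touches all six cells, although only three of them are observed interactions; with millions of users and items the same loop becomes the bottleneck the paragraph describes.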

4 EFFICIENT ADAPTIVE TRANSFER NEURAL NETWORK (EATNN)

In this section, we first present a general overview of the EATNN framework, then introduce the two key ingredients of our proposed model in detail, which are:

- (1) attention-based adaptive transfer learning and

- (2) efficient whole-data based optimization.

4.1 Model Overview

- The goal of our model is to make item recommendations based on implicit feedback and social data. The overall model architecture is described in Figure 2. From the figure, we can present a simple high-level overview of our model:

- (1) Users and items are converted to dense vector representations through embeddings. Specifically, as the bridge of transfer learning, each user $u$ has three latent vectors.

- $u^C$ represents the knowledge shared between the item and social domains.

- $u^I$ and $u^S$ represent user $u$’s specific preference corresponding to item domain and social domain, respectively.

- (2) An attention-based adaptive transfer layer is designed to automatically model the domain relationships and learn domain-specific functionalities to leverage shared representations. It allows parameters to be automatically allocated to capture both shared knowledge and domain-specific knowledge, while avoiding the need of adding many new parameters.

- (3) The model is jointly optimized by a newly derived efficient whole-data based training strategy.

4.2 Attention-based Adaptive Transfer

- (1) Attention mechanism has been widely utilized in many fields, such as computer vision [5], machine translation [1], and recommendation [3, 4, 38]. Since attention mechanism has superior ability to assign non-uniform weights according to input instances, it is adopted in our model to achieve personalized adaptive transfer learning.

- Specifically, we apply attention networks for item domain and social domain respectively. Each is a two-layer network with user representations ($u^C$, $u^I$, $u^S$) as the inputs. For a user, if the two domains are less related, then the shared knowledge ($u^C$) will be penalized and the attention network will learn to utilize more domain-specific information ($u^I$ or $u^S$) instead. Formally, the item domain attention and the social domain attention are defined as:

- where $W_\alpha \in \mathbb{R}^{k \times d}$, $b_\alpha \in \mathbb{R}^k$, $h_\alpha \in \mathbb{R}^k$ are parameters of the item domain attention,

- $W_\beta \in \mathbb{R}^{k \times d}$, $b_\beta \in \mathbb{R}^k$, $h_\beta \in \mathbb{R}^k$ are parameters of the social domain attention.

- $d$ is the dimension of embedding vector,

- $k$ is the dimension of the attention network,

- and $\sigma$ is the nonlinear activation function ReLU [23].

- (2) Then, the final attention scores are normalized with a softmax function:

- $\alpha_{(C,u)}$ and $\beta_{(C,u)}$ are the weights of shared knowledge ($u^C$) for item domain and social domain respectively, which determine how much to transfer in each domain.

- (3) After obtaining the above attention weights, the representations of user $u$ for the two domains are calculated as follows:

- $p^I_u$ and $p^S_u$ are two feature vectors, which represent the user’s preferences for items and other users after transferring the common knowledge between the two domains.
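The three steps of the adaptive transfer (score, normalize, combine) can be sketched for the item domain as follows. The sketch assumes the usual two-layer attention form $h_\alpha^T \sigma(W_\alpha x + b_\alpha)$ applied to $x = u^C$ and $x = u^I$, a two-way softmax over the two scores, and a weighted sum $p^I_u = \alpha_{(I,u)} u^I + \alpha_{(C,u)} u^C$; these forms are inferred from the parameter shapes and descriptions in this section rather than copied from the paper, and all function names and numbers are hypothetical.

```python
import math


def relu(x):
    return [max(0.0, a) for a in x]


def matvec(W, x):
    return [sum(w * a for w, a in zip(row, x)) for row in W]


def attention_score(W, bias, h, x):
    """Unnormalized score h^T ReLU(W x + bias) (assumed form)."""
    hidden = relu([s + b for s, b in zip(matvec(W, x), bias)])
    return sum(hi * s for hi, s in zip(h, hidden))


def softmax2(s1, s2):
    """Numerically stable softmax over two scores."""
    m = max(s1, s2)
    e1, e2 = math.exp(s1 - m), math.exp(s2 - m)
    return e1 / (e1 + e2), e2 / (e1 + e2)


def transfer(W, bias, h, u_shared, u_specific):
    """Mix shared and domain-specific vectors with attention weights."""
    w_shared, w_specific = softmax2(
        attention_score(W, bias, h, u_shared),
        attention_score(W, bias, h, u_specific),
    )
    p = [w_shared * c + w_specific * s
         for c, s in zip(u_shared, u_specific)]
    return p, w_shared, w_specific


# Toy item-domain parameters (made up): d = 2, k = 2.
W_alpha = [[1.0, 0.0], [0.0, 1.0]]
b_alpha = [0.0, 0.0]
h_alpha = [1.0, 1.0]
u_C = [1.0, 0.0]   # shared latent vector of the user
u_I = [0.0, 3.0]   # item-specific latent vector of the user
p_I, a_C, a_I = transfer(W_alpha, b_alpha, h_alpha, u_C, u_I)
```

The social domain would reuse `transfer` with its own parameters ($W_\beta$, $b_\beta$, $h_\beta$) and with $u^S$ in place of $u^I$. Because the weights depend on the user's own vectors, each user gets a different transfer ratio, which is the personalization the section argues for.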

- (4) Based on the learnt feature vectors, the prediction part aims to generate a score that indicates a user’s preferences for an item or a friend. The prediction part is built on a neural form of MF [10].

- For each domain task, a specific output layer is employed. The scores of user $u$ for item $v$ and another user $t$ are calculated as follows:

- where $q_v \in \mathbb{R}^d$ and $g_t \in \mathbb{R}^d$ are latent vectors of item $v$ and user $t$ as a friend,

- $\odot$ denotes the element-wise product of vectors,

- and $h_I \in \mathbb{R}^d$ and $h_S \in \mathbb{R}^d$ denote the output layer for item domain and social domain, respectively.

- Then for our target task, recommendation, the candidate items will be ranked in descending order of $\hat{R}_{uv}$ to provide a Top-K item recommendation list.
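As a rough illustration of the prediction and ranking step, the sketch below assumes the score takes the form $\hat{R}_{uv} = h_I^T (p^I_u \odot q_v)$, which matches the symbols defined above but is not quoted from the paper. The helper names `score` and `top_k` and the toy vectors are hypothetical.

```python
def score(h, p, e):
    """h^T (p ⊙ e): output-layer weights applied to the element-wise product."""
    return sum(hi * pi * ei for hi, pi, ei in zip(h, p, e))


def top_k(h_I, p_u, item_vectors, k):
    """Rank items by descending score; ties broken by smaller item index."""
    scored = [(score(h_I, p_u, q_v), v) for v, q_v in enumerate(item_vectors)]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [v for _, v in scored[:k]]


# Toy values (made up): d = 2, four candidate items.
h_I = [1.0, 0.5]
p_u = [1.0, 2.0]
items = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
ranked = top_k(h_I, p_u, items, 2)  # items 0 and 1 tie at 1.0, item 2 scores 2.0
```

The same `score` function would give the social-domain prediction when called with $h_S$, $p^S_u$ and $g_t$; only the item-domain scores are used for the final Top-K list.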

4.3 Efficient Whole-data based Learning

To improve the speed of whole-data based optimization, we derive an efficient loss function for learning from implicit feedback.

4.3.1 Weighting Strategy.

- (1) We frst present the weighting strategy for each entry in matrices

R

R

R and

X

X

X. (我们首先给出矩阵

R

R

R和

X

X

X中每个条目的加权策略)

- There have been many studies on how to assign proper weights to implicit interactions, such as the uniform weighting strategy [12, 26, 36] and the frequency-based weighting strategy [11, 17].

- Since this is not the main concern of our work, we follow the settings of previous work [11]:

- (1) the weight of each positive entry ($c^{I+}_{uv}$ and $c^{S+}_{ut}$) is set to 1;

- (2) the weights of negative instances are calculated as follows, which assigns larger weights to the items and friends with higher frequencies:

- where $m_v$ and $n_t$ denote the frequency of item $v$ and friend $t$ in $R$ and $X$, respectively;

- $\mathcal{R}_v$ and $\mathcal{X}_t$ denote the positive interactions of $v$ and $t$;

- $c^I_0$ and $c^S_0$ determine the overall weight of missing data,

- and $\rho_1$ and $\rho_2$ control the significance level of popular items over unpopular ones.
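To make the frequency-based weighting concrete, here is a small sketch. The exact formula is not reproduced in these notes, so the form used below is an assumption based on the popularity-aware weighting commonly used in this line of work: $m_v = |\mathcal{R}_v| / \sum_j |\mathcal{R}_j|$ and $c^{I-}_v = c^I_0 \, m_v^{\rho_1} / \sum_j m_j^{\rho_1}$. The function name `negative_weights` and the example counts are hypothetical.

```python
import numpy as np

def negative_weights(pos_counts, c0, rho):
    """Popularity-based weights for missing entries (assumed form, see lead-in)."""
    pos_counts = np.asarray(pos_counts, dtype=float)
    freq = pos_counts / pos_counts.sum()   # m_v: relative frequency of each item
    powered = freq ** rho                  # rho controls how much popularity matters
    return c0 * powered / powered.sum()    # weights sum to c0 over all items

# Hypothetical numbers of positive interactions for four items.
item_pos_counts = [50, 10, 5, 1]
w = negative_weights(item_pos_counts, c0=0.5, rho=0.5)
uniform = negative_weights(item_pos_counts, c0=1.0, rho=0.0)
```

Under this assumed form, `rho=0.0` reduces to uniform weighting, and larger `rho` values shift more of the total weight `c0` toward popular items. The social-domain weights would follow the same pattern with $n_t$, $c^S_0$, and $\rho_2$.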

4.3.2 Loss Inference.

- (1) In our method, the loss functions of the item domain and the social domain differ only in their inputs, so we focus on illustrating the inference for the item domain in detail.

- (2) According to Eq.(2), for a batch of users, the loss of the item domain is:

- (3) In implicit data, since $R_{uv} \in \{0, 1\}$ indicates whether $u$ has purchased or clicked on item $v$, it can be replaced by a constant to simplify the equation. Also, the loss of missing data can be expressed as the residual between the loss of all data and that of positive data:

- where const denotes a $\Theta$-invariant constant value,

- and $L^A_I(\Theta)$ denotes the loss for all data.

- Thus, $L_I(\Theta)$ can be seen as a combination of the loss of positive data and the loss of all data.

- The loss of missing data has thus been eliminated, and the new computational bottleneck now lies in $L^A_I(\Theta)$.

- (4) Recalling the prediction of $\hat{R}_{uv}$, we have:

- (5) Based on a decoupling manipulation of the inner product operation, the summation operator and the elements in $p_u$ and $q_v$ can be rearranged.

- (6) By substituting Eq.(11) into $L^A_I(\Theta)$, a nice structure emerges: if we set $c^{I-}_{uv}$ to $c^{I-}_v$ (Eq.(7)), the interaction between $p^I_{u,i}$ and $q_{v,i}$ can be properly separated.

- Thus, $\sum_{v \in V} c^{I-}_v q_{v,i} q_{v,j}$ and $\sum_{u \in B} p^I_{u,i} p^I_{u,j}$ are independent of each other, which means we can achieve a significant speed-up by precomputing the two terms.

- (7) The rearrangement of nested sums in Eq.(12) is the key transformation that allows fast optimization. The computational complexity of $L^A_I(\Theta)$ is reduced from $O(|B||V|d)$ to $O((|B| + |V|)d^2)$.

- (8) By substituting Eq.(12) into Eq.(9) and removing the const part, we get the final efficient whole-data based loss of the item domain as follows:

- where $c^{I+}_{uv}$ is set to 1 and $c^{I-}_{uv}$ is simplified to $c^{I-}_v$, as discussed before.
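The derivation above can be checked numerically. The sketch below is an illustration under stated assumptions, not the authors' code. It assumes a weighted squared loss $\sum_{u}\sum_{v} c_{uv}(R_{uv} - \hat{R}_{uv})^2$ with $c^{I+}_{uv} = 1$, negative weights that depend only on the item, and a linear prediction $\hat{R}_{uv} = \sum_i h_i p_{u,i} q_{v,i}$. Expanding the square then gives a positive-only term $\sum_{(u,v)\in\mathcal{R}_B} \big((1 - c^{I-}_v)\hat{R}_{uv}^2 - 2\hat{R}_{uv}\big)$ plus the all-data term $\sum_i \sum_j (h_i h_j)\big(\sum_u p_{u,i} p_{u,j}\big)\big(\sum_v c^{I-}_v q_{v,i} q_{v,j}\big)$, and the two losses differ only by a constant equal to the number of positive entries. All function and variable names are hypothetical.

```python
import numpy as np

def naive_loss(P, Q, h, R, c_neg):
    """Weighted squared loss over every (user, item) pair: O(|B||V|d)."""
    R_hat = (P * h) @ Q.T                     # R_hat[u, v] = h^T (p_u * q_v)
    C = np.where(R > 0, 1.0, c_neg[None, :])  # c+ = 1; c- depends on the item only
    return np.sum(C * (R - R_hat) ** 2)

def efficient_loss(P, Q, h, R, c_neg):
    """Same loss up to a constant, computed without visiting missing entries."""
    users, items = np.nonzero(R)              # positive interactions only
    r_pos = np.sum(P[users] * Q[items] * h, axis=1)
    pos_term = np.sum((1.0 - c_neg[items]) * r_pos ** 2 - 2.0 * r_pos)
    PtP = P.T @ P                             # sum_u p_{u,i} p_{u,j}, a d x d matrix
    QtCQ = (Q * c_neg[:, None]).T @ Q         # sum_v c_v q_{v,i} q_{v,j}, a d x d matrix
    all_term = np.sum(np.outer(h, h) * PtP * QtCQ)
    return pos_term + all_term

# Toy problem (hypothetical sizes and random values).
rng = np.random.default_rng(0)
num_users, num_items, d = 5, 7, 3
P = rng.normal(size=(num_users, d))
Q = rng.normal(size=(num_items, d))
h = rng.normal(size=d)
R = (rng.random((num_users, num_items)) < 0.3).astype(float)
c_neg = 0.5 * rng.random(num_items)

# The gap is the dropped constant: one unit per positive entry when c+ = 1.
gap = naive_loss(P, Q, h, R, c_neg) - efficient_loss(P, Q, h, R, c_neg)
```

Because the gap does not depend on the parameters, both losses have identical gradients, which is why no approximation is involved. The two $d \times d$ matrices are the terms that can be precomputed.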

4.3.3 Joint Learning.

- (1) Similarly, we can derive the loss function of the social domain:

- (2) After that, we integrate the subtasks of the item domain and the social domain into a unified multi-task learning framework whose objective function is:

- where $\tilde{\mathcal{L}}_I(\Theta)$ is the item domain loss from Eq.(13),

- $\tilde{\mathcal{L}}_S(\Theta)$ is the social domain loss from Eq.(14),

- and $\mu$ is the parameter that adjusts the weight proportion of each term.

- The whole framework can be efficiently trained end-to-end using existing optimizers.

4.3.4 Discussion.

- (1) So far we have derived the efficient whole-data based learning method. Note that the method is not limited to optimizing recommendation models. It has the potential to benefit many other tasks where only positive data is observed, such as word embedding [21] and multi-label classification [35].

- (2) To analyze the time complexity of our optimization method, we exclude the time overhead of adaptive transfer learning in the model.

- In Eq.(15), updating a batch of users in the item domain takes $O((|B| + |V|)d^2 + |\mathcal{R}_B|d)$ time, where $\mathcal{R}_B$ denotes the positive item interactions of this batch of users.

- Similarly, in the social domain it takes $O((|B| + |U|)d^2 + |\mathcal{X}_B|d)$ time.

- Thus, one batch takes $O((2|B| + |U| + |V|)d^2 + (|\mathcal{R}_B| + |\mathcal{X}_B|)d)$ time in total.

- For the original regression loss, it takes $O((|B||V| + |B||U|)d)$ time.

- Since $|\mathcal{R}_B| \ll |B||V|$, $|\mathcal{X}_B| \ll |B||U|$, and $d \ll |B|$ in practice, the computational complexity of our optimization method is reduced by several orders of magnitude.

- This makes it possible to apply a whole-data based optimization strategy to neural models. Moreover, since no approximation is introduced during the derivation, the optimization results are exactly the same as those of the original whole-data based regression loss.

- (3) As fast whole-data based learning is a challenging problem, our current efficient optimization method is still preliminary and has a limitation: it is not suitable for models with non-linear prediction layers, because the rearrangement in Eq.(11) requires the prediction of $\hat{R}_{uv}$ to be linear. We leave the extension of the method as future work.

4.4 Model Training

- (1) To optimize the objective function, we adopt mini-batch Adagrad [8] as the optimizer.

- Its main advantage is that the learning rate is self-adapted during the training phase, which eases the pain of choosing a proper learning rate.

- Specifically, users are first divided into multiple batches. Then, for each batch of users, all positive interactions in both the item domain and the social domain are used to form the training instances.

- (2) Dropout, which randomly drops part of the neurons during training, is an effective solution to prevent deep neural networks from overfitting [32].

- In this work, we employ dropout to improve our model's generalization ability. Specifically, after transferring, we randomly drop $\rho$ percent of $p^I_u$ and $p^S_u$, where $\rho$ is the dropout ratio.
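As a rough illustration of the dropout step on the transferred user vectors, the sketch below applies "inverted" dropout, where the surviving entries are rescaled by $1/(1-\rho)$ so that the expected value is unchanged. The rescaling convention is an assumption (the text only states that $\rho$ percent of the entries are dropped), and the function name `dropout` and the toy batch are hypothetical.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero each entry with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return x                            # dropout is disabled at evaluation time
    keep = rng.random(x.shape) >= rate      # True for entries that survive
    return x * keep / (1.0 - rate)

# Toy batch of transferred user vectors p^I_u (hypothetical values).
rng = np.random.default_rng(0)
p_item = np.ones((1000, 64))
p_item_dropped = dropout(p_item, rate=0.3, rng=rng)
p_item_eval = dropout(p_item, rate=0.3, rng=rng, training=False)
```

The same call would be applied to $p^S_u$ with the same ratio before the vectors enter the prediction layer.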

5 EXPERIMENTS

5.1 Experimental Settings

5.1.1 Datasets.

- (1) We experimented with three publicly accessible datasets: Ciao, Epinion, and Flixster.

- The three datasets are widely used in previous studies [4, 18, 33].

- Each dataset contains users' ratings of the items they have purchased and the social connections between users.

- Among the benchmark datasets, Flixster is the largest and contains more than seven million item interactions from about seventy thousand users.

- (2) All the datasets are preprocessed to make sure that all items have at least five interactions.

- As long as a user–user or user–item interaction exists, the corresponding rating is assigned a value of 1 as implicit feedback. The statistical details of these datasets are summarized in Table 2.

5.1.2 Baselines.

To evaluate the performance of Top-K recommendation, we compare our EATNN with the following methods.

- Bayesian Personalized Ranking (BPR) [28]: This method optimizes MF with the Bayesian Personalized Ranking objective function.

- Exposure MF (ExpoMF) [17]: This is a whole-data based method for item recommendation. It treats all missing interactions as negative and weights them by item popularity.

- Neural Collaborative Filtering (NCF) [10]: This is a state-of-the-art deep learning method that uses users' historical feedback for item ranking. It combines MF with a multilayer perceptron (MLP) model.

- Social Bayesian Personalized Ranking (SBPR) [43]: This is a ranking model which assumes that users tend to assign higher scores to items that their friends prefer.

- Transfer Model with Social and Item Visibilities (TranSIV) [18]: This is a state-of-the-art social-aware recommendation method. It considers the visibility of both items and friend relationships, and utilizes transfer learning to combine the item domain and the social domain for recommendation.

- Social Attentional Memory Network (SAMN) [4]: SAMN is a state-of-the-art deep learning method, which leverages attention mechanisms to model both aspect-level and friend-level differences for social-aware recommendation.

A comparison of EATNN and the baseline methods is given in Table 3.

5.1.3 Evaluation Metrics.

- We adopt Recall@K and NDCG@K to evaluate the performance of all methods.

- The two metrics have been widely used in previous recommendation studies [4, 18, 40].

- Recall@K considers whether the ground truth is ranked among the top K items,

- while NDCG@K is a position-aware ranking metric.
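For reference, the two metrics can be computed per user as in the sketch below. The exact definitions are not spelled out here, so this uses the common binary-relevance form as an assumption: Recall@K is the share of held-out items found in the top K, and NDCG@K discounts each hit by $1/\log_2(\text{position} + 1)$ and normalizes by the best achievable value. The function names and example lists are hypothetical.

```python
import numpy as np

def recall_at_k(ranked, relevant, k):
    """Fraction of the user's held-out items that appear in the top-k list."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)

def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG: DCG of the list divided by the best achievable DCG."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    # pos is 0-based, so the discount for the first position is log2(2) = 1.
    dcg = sum(1.0 / np.log2(pos + 2)
              for pos, item in enumerate(ranked[:k]) if item in relevant)
    ideal = sum(1.0 / np.log2(pos + 2) for pos in range(min(k, len(relevant))))
    return dcg / ideal

# Hypothetical ranked list and held-out items for one user.
ranked_items = [3, 1, 4, 2]
held_out = {1, 2}
recall = recall_at_k(ranked_items, held_out, k=2)
ndcg = ndcg_at_k(ranked_items, held_out, k=2)
```

Reported scores would then be averages of these per-user values over all test users. Unlike Recall@K, NDCG@K rewards placing a hit earlier in the list.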

5.1.4 Experiments Details.

- (1) We randomly split each dataset into training (80%), validation (10%), and test (10%) sets.

- (2) The parameters for all baseline methods were initialized as in the corresponding papers, and were then carefully tuned to achieve optimal performances.

- (3) The learning rate for all models was tuned amongst [0.005, 0.01, 0.02, 0.05].

- (4) To prevent overfitting, we tuned the dropout ratio in [0.1, 0.3, 0.5, 0.7, 0.9].

- (5) The batch size was tested in [128, 256, 512, 1024],

- (6) and the ==dimension of the attention network $k$== and the latent factor number $d$ were tested in [32, 64, 128].

- (7) After the tuning process, the batch size was set to 512,

- and the size of the latent factor dimension $d$ was set to 64.

- (8) For our EATNN model,

- the attention size $k$ was set to 32,

- the learning rate was set to 0.05,

- and the dropout ratio $\rho$ was set to 0.3 for Ciao and Epinion, and 0.7 for Flixster.

- For the optimization objective, we set the weight parameter $\mu = 0.1$.
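The 80/10/10 random split from step (1) might look like the sketch below. Whether the split is done globally over all interactions or separately per user is not stated, so the global split shown here is an assumption, and the function name `split_interactions` and the toy interaction list are hypothetical.

```python
import numpy as np

def split_interactions(interactions, rng, train=0.8, valid=0.1):
    """Randomly partition interaction records into train / validation / test."""
    interactions = np.asarray(interactions)
    order = rng.permutation(len(interactions))   # random order of record indices
    n_train = int(train * len(interactions))
    n_valid = int(valid * len(interactions))
    train_idx = order[:n_train]
    valid_idx = order[n_train:n_train + n_valid]
    test_idx = order[n_train + n_valid:]         # the remainder goes to the test set
    return interactions[train_idx], interactions[valid_idx], interactions[test_idx]

# Hypothetical (user, item) pairs.
pairs = [(u, v) for u in range(10) for v in range(10)]
train_set, valid_set, test_set = split_interactions(pairs, np.random.default_rng(0))
```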

5.2 Comparative Analyses on Overall Performances

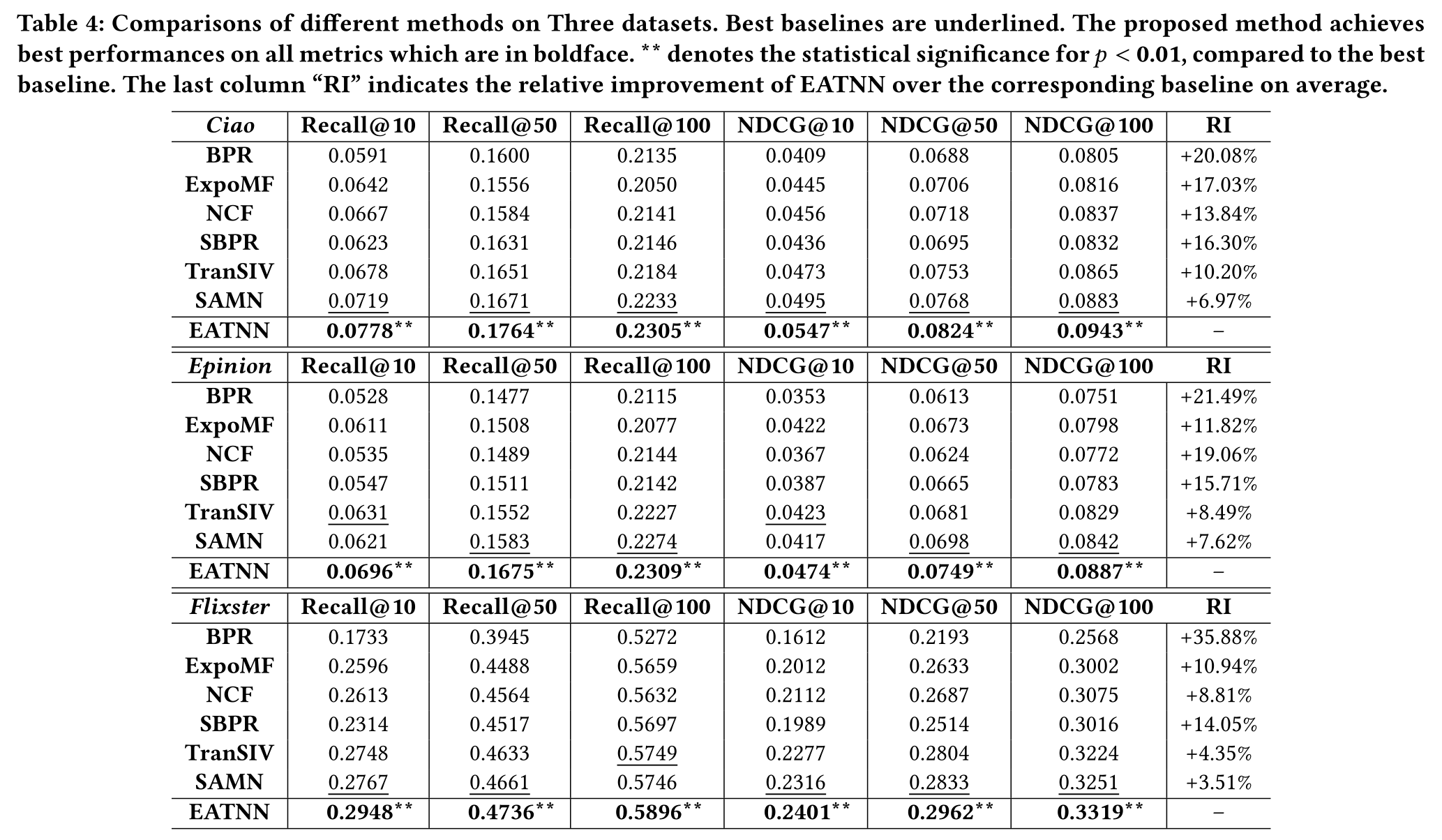

The results of the comparison of different methods on the three datasets are shown in Table 4. To evaluate different recommendation lengths, we set K = 10, 50, and 100 in our experiments. From the results, the following observations can be made:

- (1) First, methods incorporating social information generally perform better than non-social methods. For example, in Table 4, SBPR performs better than BPR, and TranSIV, SAMN, and EATNN outperform BPR, ExpoMF, and NCF. This is consistent with previous work [4, 18, 43], which indicates that social information reflects users' interests and hence is helpful for recommendation.

- (2) Second, our method EATNN achieves the best performance on the three datasets, and significantly outperforms all baseline methods (including the neural models NCF and SAMN) with p-values smaller than 0.01.

- Specifically, compared to SAMN, a recently proposed and very expressive deep learning model, EATNN exhibits average improvements of 6.97%, 7.62%, and 3.51% on the three datasets.

- The substantial improvement of our model over the baselines can be attributed to two reasons:

- (1) our model uses attention mechanisms to adaptively transfer the common knowledge between the item domain and the social domain, which allows the social information to be modeled with a finer granularity;

- (2) the parameters in our model are jointly optimized on the whole data, while sampling-based methods (BPR, NCF, SAMN) only use a fraction of sampled data and may ignore important negative examples.

- (3) Third, considering the performance on each dataset, we find that the improvements of EATNN depend on the sparsity of the item domain data.

- The Flixster dataset is relatively dense in terms of user–item interactions (averaging 114.66 interactions per user, compared with 21.74 and 22.03 for Ciao and Epinion, respectively). User preferences are more difficult to learn from sparse user–item interactions (cold-start data), but can be enriched by the knowledge learnt from the social domain. Thus, the results show that our transfer learning based model is more useful on sparse datasets. For further verification, we conduct experiments with less training data; the results are shown in Section 5.4.

5.3 Efficiency Analyses

- (1) In this section, we conduct experiments to explore the training efficiency of our EATNN and two state-of-the-art social-aware recommendation methods: TranSIV and SAMN.

- (2) We first compared the overall runtime of the three methods. The results of EATNN-E are also added to show the efficiency of our proposed optimization method, where EATNN-E represents the variant of EATNN that uses the original regression loss (Eq.(2)).

- In our experiments, the traditional method TranSIV was trained with 8 threads on a 2.4 GHz Intel Xeon 8-core CPU, while the neural models SAMN, EATNN-E, and EATNN were trained on a single NVIDIA GeForce GTX TITAN X GPU. The runtime results are shown in Table 5.

- We can first observe that the training time of EATNN is much lower than that of EATNN-E, which confirms that our derived loss can be learned more efficiently than the original regression loss.

- Second, the training of EATNN is generally much faster than that of TranSIV, SAMN, and EATNN-E. In particular, for the biggest dataset, Flixster, EATNN needs only 27 hours to achieve its optimal performance, while SAMN and EATNN-E need about 8 and 5 days, respectively. For the other datasets, the results of EATNN are also remarkable. In real E-commerce scenarios, the cost of training time is also an important factor to be considered. Our EATNN model shows significant advantages in training efficiency, which makes it more practical in real life.

- (3) We also investigated the training process of the neural models SAMN and our EATNN (the results of EATNN-E and EATNN are exactly the same). Figure 3 shows the prediction accuracy of the two models with respect to different training epochs. Due to the space limitation, we only show the results on the Ciao and Epinion datasets for the Recall@50 and NDCG@50 metrics. For the Flixster dataset and the other metrics, the observations are similar. From the figure, we can see that EATNN converges much faster than SAMN and consistently achieves better performance. The reason is that EATNN is optimized with a newly derived whole-data based method, while SAMN is based on negative sampling, which can be sub-optimal.

5.4 Handling Cold-Start Issue

- (1) We validated the ability of our model to handle the cold-start problem, where users have few interactions in the item domain. Specifically, the experiments were conducted using different proportions of the training data, including:

- (1) 25% for training, 75% for testing, and

- (2) 50% for training, 50% for testing.

- (2) All of the social information is used in the social-aware algorithms (SBPR, TranSIV, SAMN, and EATNN). The results are similar for all three datasets. Due to the space limitation, we show the results on the Epinion dataset in Table 6. Note that a larger test set contains more positive examples, which may lead to larger values of Recall and NDCG compared to Table 4, where only 10% of the data is used for testing [18]. From Table 6, we have the following observations:

- (1) Firstly, compared with non-social methods, social-aware methods perform much better, and the improvements are larger when less data is used for training. Considering that the social domain and the item domain are correlated, the knowledge learnt from social behavior can compensate for the shortage of user feedback on items. As a result, the use of social information produces great improvement when the training data are scarce.

- (2) Secondly, our EATNN demonstrates significant improvements over the other baselines, including the social-aware methods SBPR, TranSIV, and SAMN. Specifically, the improvements over the best baseline are 8.68% for 25% training and 7.81% for 50% training. This indicates the effectiveness of EATNN in addressing the cold-start issue by leveraging adaptive transfer learning and users' social information.

- (3) Thirdly, TranSIV and EATNN generally achieve greater improvements when the training data are scarce. This observation coincides with previous work [16, 18], which states that transfer learning methods contribute even more when data in the target domain is sparse.

5.5 Ablation Study

- (1) To further understand the effectiveness of social information and of the designed attention-based adaptive transfer learning framework, we conducted experiments with the following variants of EATNN:

- EATNN-S: A variant of EATNN that does not use social information.

- EATNN-A: A variant of EATNN in which the transfer framework is not adaptive. A constant weight (0.5 in our experiments) is assigned to the shared knowledge between the item and social domains for every user.

- (2) Figure 4 shows the performance of the different variants. The results of the state-of-the-art methods NCF (non-social) and SAMN (social-aware) are shown as baselines. Due to the space limitation, we again only show the results on the Ciao and Epinion datasets for the Recall@50 and NDCG@50 metrics. From Figure 4, two observations are made:

- (1) When using social information, EATNN and the variant EATNN-A both perform better than SAMN (p<0.01). When the attention-based adaptive transfer framework is applied, the performance is further improved significantly compared with the constant weight method EATNN-A (p<0.05). This indicates that the shared knowledge between the item and social domains varies and should be adaptively transferred for different users. The better results of EATNN also show that our attention-based adaptive transfer framework can effectively learn the weight of the shared knowledge.

- (2) EATNN-S performs the worst among the variant models since no social interactions are utilized. Nevertheless, when using only item interactions, EATNN-S still significantly outperforms NCF (p<0.01), indicating the effectiveness of whole-data based learning.

5.6 Case Study on Adaptive Transfer

- The attention weights reflect how the model learns and recommends.

- We provide some examples to show the adaptive transfer learning process when making recommendations.

- Table 7 shows some samples in different scenarios from the Epinion dataset. EATNN learns the difference between these two domains and automatically balances the shared and non-shared parameters.

- The first user, in EX1, has rich interactions in both the item and social domains, and the attention weights reflect how the shared knowledge is transferred. 66.3% of $u^C$ is used to predict user preferences for items, while 77.4% of $u^C$ is used to predict user preferences for other users in social networks.

- EX2 is an example in which the user has no item interactions in the training set, and the attention weight of the item-specific vector is around 0. Note that in EX2, both $u^C$ and $u^S$ are trained only on social data after random initialization, thus $\beta_{(C,u)}$ and $\beta_{(S,u)}$ exhibit similar weights. This shows that attention weights also reflect the richness of feedback information.

- The opposite case is shown in EX3, which has no social interactions in the training set, where $\alpha_{(C,u)}$ and $\alpha_{(I,u)}$ are similar.

- EX4 shows that the item feedback information is not rich enough to dominate the recommendation.

6 CONCLUSION AND FUTURE WORK

- (1) In this paper, we propose a novel Efficient Adaptive Transfer Neural Network (EATNN) for social-aware recommendation.

- Specifically, by introducing attention mechanisms, EATNN is able to adaptively assign a personalized scheme to transfer the shared knowledge between the item domain and the social domain.

- We also derive an efficient whole-data based optimization method, whose complexity is reduced significantly.

- Extensive experiments have been conducted on three real-life datasets. The proposed EATNN consistently and significantly outperforms the state-of-the-art recommendation models on different evaluation metrics, especially for cold-start users that have few item interactions.

- Moreover, EATNN shows significant advantages in training efficiency, which makes it more practical to apply in real E-commerce scenarios.

- (2) Our efficient whole-data based strategy has the potential to benefit many other tasks where only positive data is observed. The EATNN model is also not limited to the task in this paper.

- In the future, we are interested in exploring EATNN and our efficient whole-data based strategy in other related tasks such as content recommendation [6], network embedding [21, 30], and multi-domain classification [24]. We will also try to extend our optimization method to make it suitable for learning deep non-linear models.