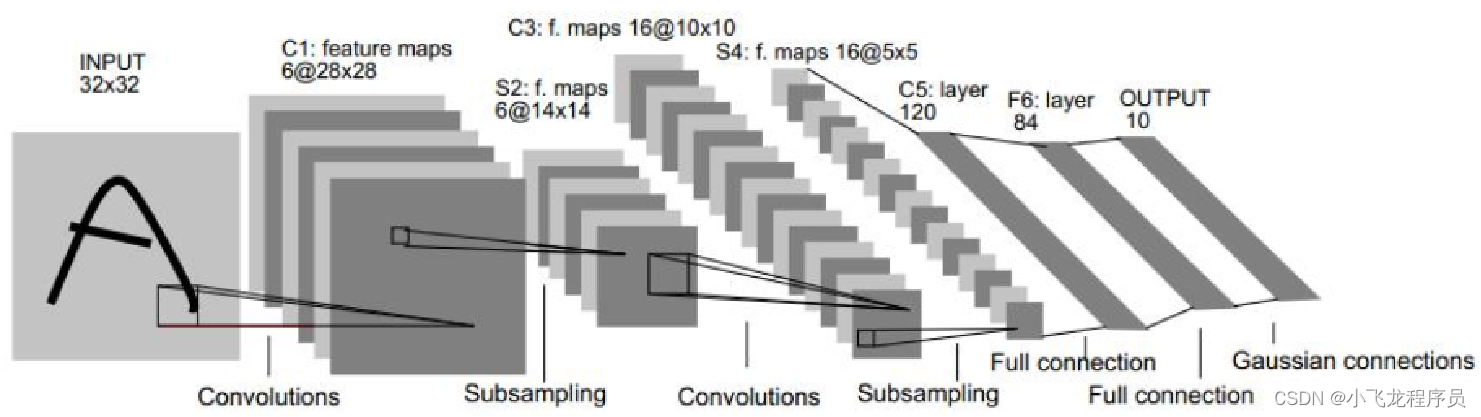

1. LeNet-5 convolutional neural network on the MNIST dataset

Method 1:

from tensorflow.keras import layers,models,metrics,optimizers,activations,losses,utils

from tensorflow.keras.layers import Conv2D,MaxPooling2D,Dense,Dropout,BatchNormalization,Flatten

from tensorflow.keras.utils import to_categorical

from tensorflow.keras.models import Sequential

from tensorflow.keras.datasets import mnist

import tensorflow as tf

# Load MNIST, which is already split into training and test sets

(train_x,train_y),(test_x,test_y)=mnist.load_data()

print(train_x.shape,test_x.shape,train_y.shape,test_y.shape)

train_x=train_x.reshape(60000,784)

test_x=test_x.reshape(10000,784)

# Convert to float32

train_x=train_x.astype('float32')

test_x=test_x.astype('float32')

# Normalize pixel values to [0, 1]

train_x=train_x/255

test_x=test_x/255

# Reshape the images to (samples, height, width, channels) (option 1)

train_x=tf.reshape(train_x,[-1,28,28,1])

test_x=tf.reshape(test_x,[-1,28,28,1])

print(train_x.shape,test_x.shape)

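# Option 2: add the channel axis with np.expand_dims (needs `import numpy as np` and the unflattened (N, 28, 28) arrays)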

#train_x,test_x=np.expand_dims(train_x,axis=3),np.expand_dims(test_x,axis=3)

# One-hot encode the labels

train_y=to_categorical(train_y,10)

test_y=to_categorical(test_y,10)

print(train_y.shape,test_y.shape)
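
The two preprocessing steps above, scaling pixels to [0, 1] and one-hot encoding the labels, can be sketched without TensorFlow. This is only a pure-Python illustration of the idea: `normalize` and `one_hot` are hypothetical helper names, and in the script itself the `/255` division and `to_categorical` do this work on whole arrays.

```python
def normalize(pixels):
    """Scale 8-bit pixel values (0-255) to floats in [0, 1]."""
    return [p / 255 for p in pixels]


def one_hot(label, num_classes=10):
    """Return a list with 1.0 at index `label` and 0.0 everywhere else."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


scaled = normalize([0, 51, 255])
encoded = one_hot(3)
print(scaled)   # [0.0, 0.2, 1.0]
print(encoded)  # 1.0 in position 3, 0.0 elsewhere
```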

def lenet5():
    # LeNet-style Sequential model: two conv/pool stages followed by two dense layers
    model=Sequential()
    # 28*28*1 -> 24*24*32
    model.add(Conv2D(filters=32,kernel_size=(5,5),strides=(1,1),input_shape=(28,28,1),
                     padding='valid',activation='relu',kernel_initializer='uniform'))
    # -> 12*12*32
    model.add(MaxPooling2D(pool_size=(2,2),strides=2))
    # -> 8*8*32
    model.add(Conv2D(filters=32, kernel_size=(5, 5), strides=(1, 1),
                     padding='valid',activation='relu',kernel_initializer='uniform'))
    # -> 4*4*32
    model.add(MaxPooling2D(pool_size=(2,2),strides=2))
    # 4*4*32=512
    model.add(Flatten())
    model.add(Dense(100,activation='relu'))
    model.add(Dense(10,activation='softmax'))
    return model

model=lenet5()

model.compile(optimizer=optimizers.RMSprop(learning_rate=0.01),
              loss=losses.categorical_crossentropy,metrics=['accuracy'])

model.fit(train_x,train_y,validation_data=(test_x,test_y),epochs=20,verbose=1)

print(model.predict(test_x))

score=model.evaluate(test_x,test_y,verbose=0)

print('loss',score[0])

print('accuracy',score[1])
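
To see where the size of the flattened feature vector in this Sequential model comes from, the spatial size can be traced layer by layer. The sketch below assumes the standard output-size formula for 'valid' padding, floor((size - kernel) / stride) + 1; `conv_out` is a hypothetical helper, not a Keras function.

```python
def conv_out(size, kernel, stride=1):
    """Output size along one axis for 'valid' padding (no padding added)."""
    return (size - kernel) // stride + 1


size = 28                     # MNIST images are 28*28
size = conv_out(size, 5)      # Conv2D 5*5, stride 1       -> 24
size = conv_out(size, 2, 2)   # MaxPooling2D 2*2, stride 2 -> 12
size = conv_out(size, 5)      # Conv2D 5*5, stride 1       -> 8
size = conv_out(size, 2, 2)   # MaxPooling2D 2*2, stride 2 -> 4

flat_features = size * size * 32   # 32 filters in the last conv layer
print(size, flat_features)         # 4 512
```

So `Flatten()` hands 512 features to the 100-unit dense layer.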

Method 2:

from tensorflow.keras import layers,models,metrics,optimizers,activations,losses,utils

from tensorflow.keras.layers import Conv2D,MaxPooling2D,Dense,Dropout,BatchNormalization,Flatten

from tensorflow.keras.utils import to_categorical

from tensorflow.keras.models import Sequential,Model

from tensorflow.keras.datasets import mnist

import tensorflow as tf

# Load MNIST, which is already split into training and test sets

(train_x,train_y),(test_x,test_y)=mnist.load_data()

print(train_x.shape,test_x.shape,train_y.shape,test_y.shape)

train_x=train_x.reshape(60000,784)

test_x=test_x.reshape(10000,784)

# Convert to float32

train_x=train_x.astype('float32')

test_x=test_x.astype('float32')

# Normalize pixel values to [0, 1]

train_x=train_x/255

test_x=test_x/255

# Reshape the images to (samples, height, width, channels)

train_x=tf.reshape(train_x,[-1,28,28,1])

test_x=tf.reshape(test_x,[-1,28,28,1])

print(train_x.shape,test_x.shape)

# One-hot encode the labels

train_y=to_categorical(train_y,10)

test_y=to_categorical(test_y,10)

print(train_y.shape,test_y.shape)

class lenet5(Model):
    def __init__(self):
        super(lenet5, self).__init__()  # input: (28,28,1)
        self.c1=Conv2D(6, (5, 5), padding='same', activation='relu')  # (28,28,6)
        self.s2=MaxPooling2D(pool_size=(2, 2), strides=2)  # (14,14,6)
        self.c3=Conv2D(16, (5, 5), padding='same', activation='relu')  # (14,14,16)
        self.s4=MaxPooling2D(pool_size=(2, 2), strides=2)  # (7,7,16)
        self.flat=Flatten()  # 7*7*16=784
        self.fc5=Dense(120, activation='relu')
        self.fc6=Dense(84, activation='relu')
        self.fc7=Dense(10, activation='softmax')

    def call(self, inputs, training=None, mask=None):
        # Forward pass: conv/pool, conv/pool, flatten, three dense layers
        out = self.c1(inputs)
        out = self.s2(out)
        out = self.c3(out)
        out = self.s4(out)
        out = self.flat(out)
        out = self.fc5(out)
        out = self.fc6(out)
        out = self.fc7(out)
        return out

model=lenet5()

model.compile(optimizer=optimizers.RMSprop(learning_rate=0.01),
              loss=losses.categorical_crossentropy,metrics=['accuracy'])

model.fit(train_x,train_y,validation_data=(test_x,test_y),epochs=20,verbose=1)

print(model.predict(test_x))

score=model.evaluate(test_x,test_y,verbose=0)

print('loss',score[0])

print('accuracy',score[1])
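
The layer shapes annotated in the class above also determine how many trainable parameters the model has. The following is a rough hand count, assuming every layer has a bias term (the Keras default); `conv_params` and `dense_params` are hypothetical helpers, and `model.summary()` is the authoritative source once the model is built.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weights plus biases of a square-kernel Conv2D layer."""
    return kernel * kernel * in_channels * out_channels + out_channels


def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units


layer_params = {
    "c1": conv_params(5, 1, 6),             # 156
    "c3": conv_params(5, 6, 16),            # 2416
    "fc5": dense_params(7 * 7 * 16, 120),   # 94200
    "fc6": dense_params(120, 84),           # 10164
    "fc7": dense_params(84, 10),            # 850
}
total_params = sum(layer_params.values())
print(layer_params)
print(total_params)  # 107786
```

Most of the parameters sit in the first dense layer, which is typical for this kind of network.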

Wrapping LeNet-5 (two styles)

Method 1: class wrapper (subclassing Model)

class lenet5(Model):
    def __init__(self):
        super(lenet5, self).__init__()  # input: (28,28,1)
        self.c1=Conv2D(6, (5, 5), padding='same', activation='relu')  # (28,28,6)
        self.s2=MaxPooling2D(pool_size=(2, 2), strides=2)  # (14,14,6)
        self.c3=Conv2D(16, (5, 5), padding='same', activation='relu')  # (14,14,16)
        self.s4=MaxPooling2D(pool_size=(2, 2), strides=2)  # (7,7,16)
        self.flat=Flatten()  # 7*7*16=784
        self.fc5=Dense(120, activation='relu')
        self.fc6=Dense(84, activation='relu')
        self.fc7=Dense(10, activation='softmax')

    def call(self, inputs, training=None, mask=None):
        # Forward pass: conv/pool, conv/pool, flatten, three dense layers
        out = self.c1(inputs)
        out = self.s2(out)
        out = self.c3(out)
        out = self.s4(out)
        out = self.flat(out)
        out = self.fc5(out)
        out = self.fc6(out)
        out = self.fc7(out)
        return out

Method 2: function wrapper (Sequential)

def lenet5():
    # LeNet-style Sequential model: two conv/pool stages followed by two dense layers
    model=Sequential()
    # 28*28*1 -> 24*24*32
    model.add(Conv2D(filters=32,kernel_size=(5,5),strides=(1,1),input_shape=(28,28,1),
                     padding='valid',activation='relu',kernel_initializer='uniform'))
    # -> 12*12*32
    model.add(MaxPooling2D(pool_size=(2,2),strides=2))
    # -> 8*8*32
    model.add(Conv2D(filters=32, kernel_size=(5, 5), strides=(1, 1),
                     padding='valid',activation='relu',kernel_initializer='uniform'))
    # -> 4*4*32
    model.add(MaxPooling2D(pool_size=(2,2),strides=2))
    # 4*4*32=512
    model.add(Flatten())
    model.add(Dense(100,activation='relu'))
    model.add(Dense(10,activation='softmax'))
    return model

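Comparison of the two LeNet-5 styles: one is wrapped in a class, the other in a function. Note that the two versions also differ in architecture: the class version uses 6 and 16 filters with 'same' padding and 120- and 84-unit dense layers, while the function version uses 32 filters per convolution with 'valid' padding and a single 100-unit dense layer.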

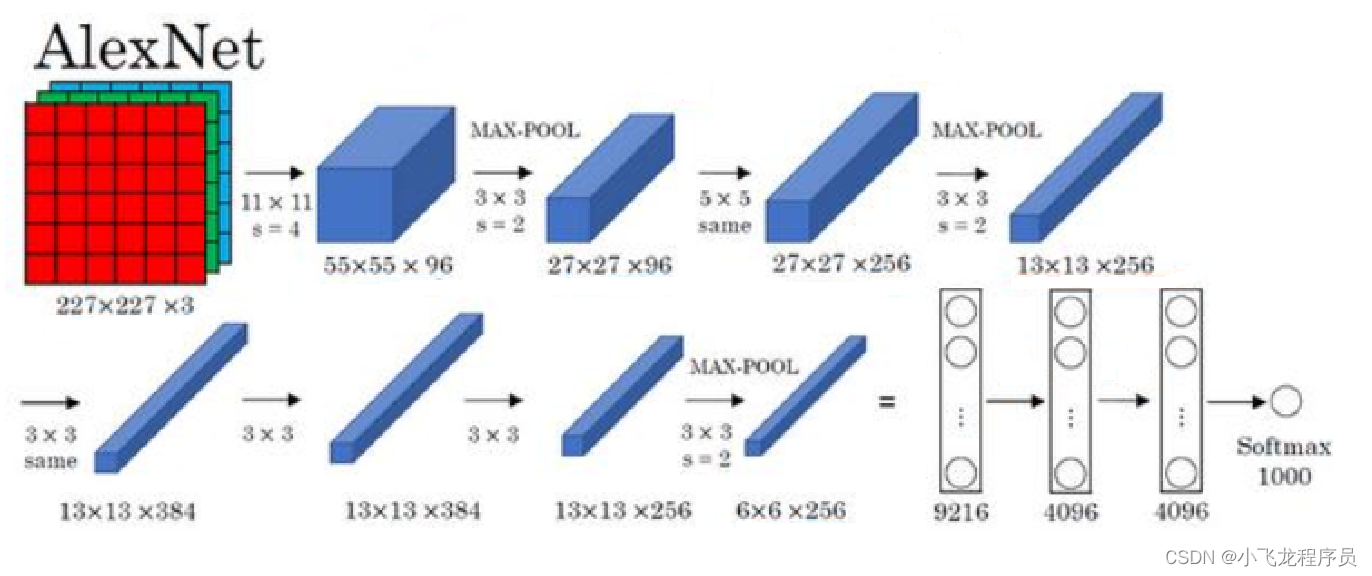

2. AlexNet-8 convolutional neural network

AlexNet has more layers than LeNet and targets a 1000-class classification problem. Its input is a 227*227 color image.

from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D,MaxPooling2D,Dense,Dropout,BatchNormalization,Flatten,Activation

def alexNet_8():
    # Filter counts (32/64/128) are smaller than in the original AlexNet
    model=Sequential()
    # Conv layer 1: 227*227*3 -> 57*57*32
    model.add(Conv2D(filters=32,kernel_size=(11,11),padding='same',strides=(4,4),input_shape=(227,227,3)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 1: -> 28*28*32
    model.add(MaxPooling2D(pool_size=(3,3),strides=(2,2),padding='valid'))
    # Conv layer 2: -> 28*28*64
    model.add(Conv2D(filters=64, kernel_size=(5, 5), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 2: -> 13*13*64
    model.add(MaxPooling2D(pool_size=(3,3), strides=(2, 2), padding='valid'))
    # Conv layer 3: -> 13*13*128
    model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Conv layer 4: 13*13*128
    model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Conv layer 5: 13*13*128
    model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 3: -> 6*6*128
    model.add(MaxPooling2D(pool_size=(3,3), strides=(2, 2), padding='valid'))
    # Flatten: 6*6*128=4608
    model.add(Flatten())
    # Fully connected layer 6
    model.add(Dense(4096,activation='relu'))
    model.add(Dropout(0.5))
    # Fully connected layer 7
    model.add(Dense(4096, activation='relu'))
    model.add(Dropout(0.5))
    # Fully connected layer 8 (1000-class output)
    model.add(Dense(1000, activation='softmax'))
    return model
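
The spatial sizes in this AlexNet variant follow from two rules: with 'same' padding the output size is ceil(size / stride), and with 'valid' padding it is floor((size - kernel) / stride) + 1. The sketch below traces a 227*227 input through the layers under those assumptions; `same_out` and `valid_out` are hypothetical helper names, not Keras functions.

```python
import math


def same_out(size, stride=1):
    """Output size along one axis for 'same' padding."""
    return math.ceil(size / stride)


def valid_out(size, kernel, stride=1):
    """Output size along one axis for 'valid' padding."""
    return (size - kernel) // stride + 1


size = 227
size = same_out(size, 4)       # conv 1: 11*11, stride 4, 'same' -> 57
size = valid_out(size, 3, 2)   # pool 1: 3*3, stride 2, 'valid'  -> 28
size = same_out(size)          # conv 2: stride 1, 'same'        -> 28
size = valid_out(size, 3, 2)   # pool 2                          -> 13
size = same_out(size)          # conv 3-5: stride 1, 'same'      -> 13
size = valid_out(size, 3, 2)   # pool 3                          -> 6

flat_features = size * size * 128   # 128 filters in the last conv layer
print(size, flat_features)          # 6 4608
```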

3. VGGNet-16 convolutional neural network

Method 1:

from tensorflow.keras import layers,utils,optimizers,activations,datasets,models,losses

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv2D,MaxPooling2D,Dense,Dropout,BatchNormalization,Flatten,Activation

from tensorflow.keras.utils import to_categorical

from tensorflow.keras.datasets import mnist,cifar10,cifar100

from tensorflow.keras import losses

import tensorflow as tf

# Load the data (CIFAR-10)

(train_x,train_y),(test_x,test_y)=cifar10.load_data()

print(train_x.shape,train_y.shape,test_x.shape,test_y.shape)

# Convert to float32 and scale pixel values to [0, 1]

train_x=train_x.astype('float32')/255

test_x=test_x.astype('float32')/255

print(train_x.shape,test_x.shape)

# One-hot encode the labels

train_y=to_categorical(train_y,10)

test_y=to_categorical(test_y,10)

def vgg_16():
    model=Sequential()
    # Conv block 1: 32*32*3 -> 32*32*64
    model.add(Conv2D(filters=64,kernel_size=(3,3),padding='same',strides=(1,1),input_shape=(32,32,3)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 32*32*64
    model.add(Conv2D(filters=64,kernel_size=(3,3),padding='same',strides=(1,1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 1: -> 16*16*64
    model.add(MaxPooling2D(pool_size=(2,2),strides=(2,2),padding='valid'))
    # Conv block 2: -> 16*16*128
    model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 16*16*128
    model.add(Conv2D(filters=128, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 2: -> 8*8*128
    model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
    # Conv block 3: -> 8*8*256
    model.add(Conv2D(filters=256, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 8*8*256
    model.add(Conv2D(filters=256, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 8*8*256
    model.add(Conv2D(filters=256, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 3: -> 4*4*256
    model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
    # Conv block 4: -> 4*4*512
    model.add(Conv2D(filters=512, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 4*4*512
    model.add(Conv2D(filters=512, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 4*4*512
    model.add(Conv2D(filters=512, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 4: -> 2*2*512
    model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
    # Conv block 5: 2*2*512
    model.add(Conv2D(filters=512, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 2*2*512
    model.add(Conv2D(filters=512, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # 2*2*512
    model.add(Conv2D(filters=512, kernel_size=(3, 3), padding='same', strides=(1, 1)))
    model.add(Activation('relu'))
    model.add(BatchNormalization())
    model.add(Dropout(0.3))
    # Pooling layer 5: -> 1*1*512
    model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2), padding='valid'))
    # Flatten: 1*1*512=512
    model.add(Flatten())
    # Fully connected layers (layers 14 to 16 of VGG-16)
    model.add(Dense(4096,activation='relu'))
    model.add(Dropout(0.3))
    model.add(Dense(1000, activation='relu'))
    model.add(Dropout(0.3))
    # Output layer: 10 CIFAR-10 classes
    model.add(Dense(10, activation='softmax'))
    return model

model=vgg_16()

model.compile(optimizer=optimizers.RMSprop(learning_rate=0.01),loss=losses.categorical_crossentropy,metrics=['accuracy'])

model.fit(train_x,train_y,epochs=100,validation_data=(test_x,test_y))

model.predict(test_x)

score=model.evaluate(test_x,test_y,batch_size=128,verbose=1)

print('loss',score[0])

print('accuracy',score[1])

Method 2:

import tensorflow as tf

# tf.compat.v1.logging.set_verbosity(40)

from tensorflow.keras import losses, optimizers, models, datasets, Sequential

from tensorflow.keras.layers import Dense, MaxPooling2D, Conv2D, Dropout, Flatten

class VGG16(models.Model):
    def __init__(self, shape):
        super(VGG16, self).__init__()
        self.model = Sequential([
            Conv2D(input_shape=(shape[1], shape[2], shape[3]),
                   filters=64, kernel_size=(3, 3), activation='relu', padding='same', name='block1_conv1'),
            Conv2D(filters=64, kernel_size=(3, 3), activation='relu', padding='same', name='block1_conv2'),
            MaxPooling2D(pool_size=(2, 2), name='block1_pool'),
            Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same', name='block2_conv1'),
            Conv2D(filters=128, kernel_size=(3, 3), activation='relu', padding='same', name='block2_conv2'),
            MaxPooling2D(pool_size=(2, 2), name='block2_pool'),
            Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same', name='block3_conv1'),
            Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same', name='block3_conv2'),
            Conv2D(filters=256, kernel_size=(3, 3), activation='relu', padding='same', name='block3_conv3'),
            MaxPooling2D(pool_size=(2, 2), name='block3_pool'),
            Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same', name='block4_conv1'),
            Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same', name='block4_conv2'),
            Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same', name='block4_conv3'),
            MaxPooling2D(pool_size=(2, 2), name='block4_pool'),
            Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same', name='block5_conv1'),
            Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same', name='block5_conv2'),
            Conv2D(filters=512, kernel_size=(3, 3), activation='relu', padding='same', name='block5_conv3'),
            MaxPooling2D(pool_size=(2, 2), name='block5_pool'),
            Flatten(),
            Dense(4096, activation='relu'),
            Dropout(0.5),
            Dense(1000, activation='relu'),
            Dropout(0.5),
            Dense(10, activation='softmax')
        ])

    def call(self, inputs, training=None, mask=None):
        x = self.model(inputs)
        return x

if __name__ == '__main__':
    (trainx, trainy), (testx, testy) = datasets.cifar10.load_data()
    trainx, testx = trainx.reshape(-1, 32, 32, 3) / 255.0, testx.reshape(-1, 32, 32, 3) / 255.0
    print(trainx.shape)
    model = VGG16(trainx.shape)
    model.build(input_shape=(None, 32, 32, 3))
    # Labels stay as integers here, so the sparse variant of the loss is used
    model.compile(loss=losses.sparse_categorical_crossentropy,
                  optimizer=optimizers.Adam(),
                  metrics=['accuracy'])
    model.summary()
    import datetime
    # Optional TensorBoard logging (uncommenting it also needs `import os`)
    # stamp = datetime.datetime.now().strftime('%Y%m%d-%H%M%S')
    # logdir = os.path.join('data', 'autograph', stamp)
    # writer = tf.summary.create_file_writer(logdir)
    # tensorboard_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)
    model.fit(trainx, trainy, epochs=5, batch_size=64)
    model.evaluate(testx, testy)
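
Method 1 one-hot encodes the labels and uses `categorical_crossentropy`, while Method 2 keeps integer labels and uses `sparse_categorical_crossentropy`. For a single sample both reduce to the negative log of the probability assigned to the true class. The sketch below shows this in plain Python. It is a simplified illustration under that assumption, not the Keras implementation (which works on batches and clips probabilities), and the probability values are made up.

```python
import math


def categorical_crossentropy(one_hot_label, probs):
    """Cross-entropy for a one-hot label vector and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_label, probs))


def sparse_categorical_crossentropy(label, probs):
    """Cross-entropy for an integer class label and predicted probabilities."""
    return -math.log(probs[label])


probs = [0.1, 0.7, 0.2]   # hypothetical softmax output for 3 classes
loss_one_hot = categorical_crossentropy([0.0, 1.0, 0.0], probs)
loss_sparse = sparse_categorical_crossentropy(1, probs)
print(math.isclose(loss_one_hot, loss_sparse))  # True
```

The choice between the two losses is therefore a matter of label format, not of what is being optimized.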

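Summary

LeNet was the first convolutional neural network successfully applied to handwritten character recognition.

AlexNet demonstrated the power of convolutional neural networks and set off an unprecedented wave of interest in them.

ZFNet used visualization to show the function and role of each layer of a convolutional neural network.

VGG replaces large convolution kernels with stacks of small ones. A stack of small-kernel convolutional layers covers the same receptive field as a single large-kernel layer (two 3*3 layers match one 5*5 layer), while making the decision function more discriminative and reducing the parameter count.

GoogLeNet increased the width of convolutional neural networks: it convolves with kernels of several sizes in parallel and then aggregates the results, using 1*1 convolutions to reduce dimensionality and the number of parameters.

ResNet solved the degradation problem of network models, allowing neural networks to be deeper.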
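
The VGG point above can be checked with a little arithmetic. The sketch below assumes stride-1 convolutions with the same number of input and output channels and ignores biases; `stacked_receptive_field` and `conv_weights` are hypothetical helpers, and the channel count of 64 is only an example value.

```python
def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel, channels, num_layers=1):
    """Weight count (no biases) with `channels` input and output channels."""
    return num_layers * kernel * kernel * channels * channels


channels = 64  # example value, not taken from the text above

# Two stacked 3*3 layers see a 5*5 window, three see a 7*7 window
print(stacked_receptive_field(2), stacked_receptive_field(3))     # 5 7

# ...but need fewer weights than the single large kernel they replace
print(conv_weights(3, channels, 2), conv_weights(5, channels))    # 73728 102400
print(conv_weights(3, channels, 3), conv_weights(7, channels))    # 110592 200704
```

Each stacked layer also adds its own nonlinearity, which is where the more discriminative decision function comes from.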