1. Building a DenseBlock

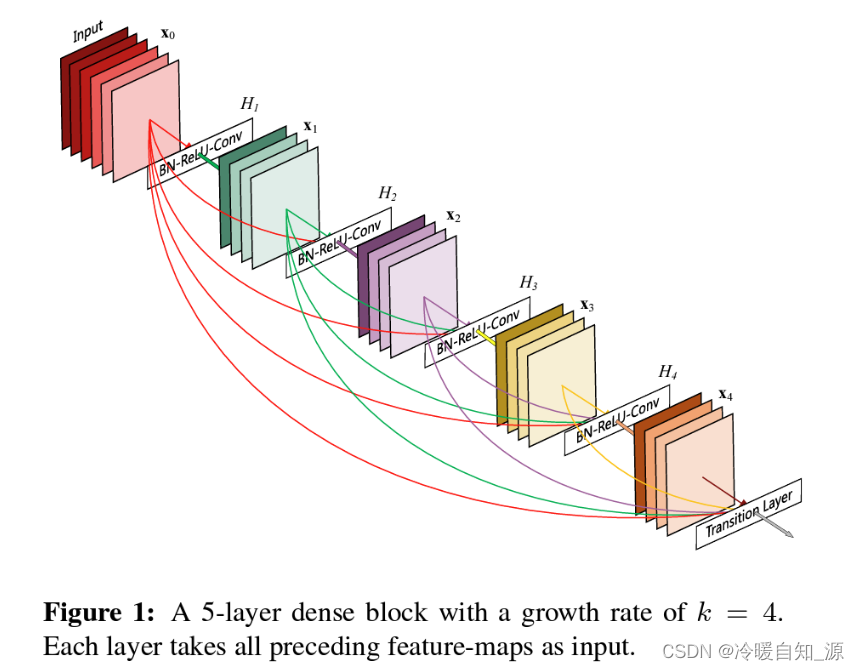

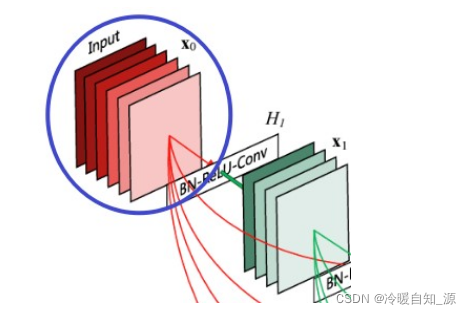

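The figures above show the structure of a DenseBlock.

The figure depicts one DenseBlock containing 5 DenseLayers (densely connected layers) with growth rate growth_rate=4, which means that inside this DenseBlock the input of each successive layer grows by 4 channels.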

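Example:

1. The original input is Input=(batch,5,56,56). After the first DenseLayer, the output is a feature map of size (batch,4,56,56).

2. Dense connectivity concatenates all earlier features, so the input of the next layer is Input=(batch,9,56,56), and so on.

3. With layer_num=5, the concatenated output of the whole DenseBlock is (batch,5+5*4,56,56)=(batch,25,56,56).

4. To reduce dimensionality, the features from step 3 are shrunk before the next DenseBlock to (batch,25//2,28,28)=(batch,12,28,28).

5. A DenseNet usually has 4 DenseBlocks, so the original input of the second DenseBlock is (batch,12,28,28), and so on.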
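The channel arithmetic in this example can be checked with a few lines of plain Python. This is only a sketch: `dense_block_channels` is a hypothetical helper written for illustration, not part of the DenseNet code in this post.

```python
def dense_block_channels(num_input_features, growth_rate, num_layers):
    """Return (input channels seen by each layer, channels after the block)."""
    # Layer i sees the block input plus the outputs of the i layers before it.
    layer_inputs = [num_input_features + i * growth_rate for i in range(num_layers)]
    # The block output concatenates the block input with every layer's output.
    out_channels = num_input_features + num_layers * growth_rate
    return layer_inputs, out_channels


layer_inputs, out_channels = dense_block_channels(5, 4, 5)
print(layer_inputs)        # [5, 9, 13, 17, 21]
print(out_channels)        # 25
print(out_channels // 2)   # 12, the channel count after halving
```

The same helper gives 256 output channels for the first block of densenet121 (64 input channels, growth rate 32, 6 layers).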

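1.1 Building the DenseLayer

The DenseLayer is the basic building block. It does the feature extraction and controls how many feature channels come out of the layer. For example, the first layer takes 5 feature channels as input, and every layer outputs 4.

One thing sets it apart from other networks: although every DenseLayer applies the same sequence of operations, each one takes the concatenation of all earlier features as input, so the input width differs from layer to layer. With growth_rate = 32, for instance, the input of each layer is 32 channels wider than the input of the previous one. The code therefore has to create num_layers layer objects with different input widths, which is the interesting part of this model.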

1.1.1 Implementation steps

1. Create a _DenseLayer class that inherits from nn.Module and takes the parameters it needs

    class _DenseLayer(nn.Module):
        def __init__(
            self,
            block_idx: int,
            layer_idx: int,
            num_input_features: int,
            growth_rate: int,
            bn_size: int,
        ) -> None:
            super(_DenseLayer, self).__init__()

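Parameters:

block_idx: int: for debugging only, records which DenseBlock this layer belongs to

layer_idx: int: for debugging only, records which layer this is inside the block

num_input_features: int: number of input channels of this DenseLayer

growth_rate: int: the growth rate, i.e. the number of output channels of this layer

bn_size: int: multiplier for the intermediate 1x1 convolution, which maps the input to bn_size * growth_rate channels; it is usually 4, which gives 4 * 32 = 128 channels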
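To make the role of bn_size and growth_rate concrete, the sketch below computes the channel sizes and weight counts of the two convolutions in one DenseLayer. The helper names are hypothetical, and the counts assume bias-free convolutions, as in the code of this post.

```python
def dense_layer_conv_shapes(num_input_features, growth_rate=32, bn_size=4):
    """Return (in_channels, out_channels, kernel_size) for conv1 and conv2."""
    bottleneck = bn_size * growth_rate                 # 4 * 32 = 128 by default
    conv1 = (num_input_features, bottleneck, 1)        # 1x1 bottleneck convolution
    conv2 = (bottleneck, growth_rate, 3)               # 3x3 feature extraction
    return conv1, conv2


def conv_weight_count(in_channels, out_channels, kernel_size):
    """Number of weights in a square, bias-free 2D convolution."""
    return in_channels * out_channels * kernel_size * kernel_size


conv1, conv2 = dense_layer_conv_shapes(64)
print(conv1, conv_weight_count(*conv1))   # (64, 128, 1) 8192
print(conv2, conv_weight_count(*conv2))   # (128, 32, 3) 36864
```

Only conv1 depends on the input width, so the 3x3 convolution costs the same in every DenseLayer no matter how many features have been concatenated so far.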

2. Register the submodules and helper function used in the forward pass

            self.block_idx = block_idx
            self.layer_idx = layer_idx
            self.add_module('norm1', nn.BatchNorm2d(num_input_features))
            self.add_module('relu1', nn.ReLU(inplace=True))
            self.add_module('conv1', nn.Conv2d(num_input_features, bn_size * growth_rate,
                                               kernel_size=1, stride=1, bias=False))
            self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate))
            self.add_module('relu2', nn.ReLU(inplace=True))
            self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,
                                               kernel_size=3, stride=1, padding=1,
                                               bias=False))

        def bn_function(self, inputs: List[Tensor]) -> Tensor:
            '''
            1. Image tensors in PyTorch are 4-dimensional: (batch, channel, height, width)
            2. A DenseLayer outputs a 4-dimensional tensor: (batch, 32, height, width)
            3. Features are therefore concatenated along dim 1 (the channel dimension),
               giving (batch, channel + 32, height, width)
            '''
            concated_features = torch.cat(inputs, 1)
            print(f"\nInput of DenseLayer {self.layer_idx+1} in DenseBlock {self.block_idx+1}: {concated_features.size()}")
            # 1x1 convolution: channels become 128, giving (batch, 128, height, width)
            bottleneck_output = self.conv1(self.relu1(self.norm1(concated_features)))
            return bottleneck_output

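Explanation:

1. add_module(name, module) registers a submodule, which can later be accessed as self.name.

2. norm1->relu1->conv1 performs batch normalization, activation, and a 1x1 convolution that changes the channel count and keeps the width under control (128).

3. norm2->relu2->conv2 performs batch normalization, activation, and a 3x3 convolution that extracts features and outputs the specified number of channels (32).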

3. forward(), the forward pass, runs when a _DenseLayer object is called

        def forward(self, input: Union[Tensor, List[Tensor]]) -> Tensor:
            # Wrap a single tensor in a list so that both cases are handled the same way,
            # e.g. the first input (batch,64,56,56) -> [(batch,64,56,56)]
            if isinstance(input, Tensor):
                prev_features = [input]
            else:
                prev_features = input
            # print(f"prev_features shapes: {[tuple(item.shape) for item in prev_features]}")
            # Concatenate the earlier feature maps, then BN1 -> ReLU1 -> Conv1
            bottleneck_output = self.bn_function(prev_features)
            # Feature extraction: spatial size unchanged, channels become 32,
            # giving (batch, 32, height, width)
            new_features = self.conv2(self.relu2(self.norm2(bottleneck_output)))
            # Return the new features, size (batch, 32, height, width)
            return new_features

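Explanation:

1. When a _DenseLayer is called, it checks the type of its input. The input is normally a list of tensors, but a single Tensor is also supported.

2. The input first goes through norm1->relu1->conv1, then through norm2->relu2->conv2, and the layer returns features of size (batch,32,height,width).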

1.1.2 Complete DenseLayer code

    from typing import List, Union

    import torch
    import torch.nn as nn
    from torch import Tensor


    class _DenseLayer(nn.Module):
        def __init__(
            self,
            block_idx: int,
            layer_idx: int,
            num_input_features: int,
            growth_rate: int,
            bn_size: int,
        ) -> None:
            super(_DenseLayer, self).__init__()
            # Kept only for the debug print in bn_function
            self.block_idx = block_idx
            self.layer_idx = layer_idx
            # Bottleneck: BN -> ReLU -> 1x1 conv to bn_size * growth_rate channels
            self.add_module('norm1', nn.BatchNorm2d(num_input_features))
            self.add_module('relu1', nn.ReLU(inplace=True))
            self.add_module('conv1', nn.Conv2d(num_input_features, bn_size * growth_rate,
                                               kernel_size=1, stride=1, bias=False))
            # Feature extraction: BN -> ReLU -> 3x3 conv to growth_rate channels
            self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate))
            self.add_module('relu2', nn.ReLU(inplace=True))
            self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,
                                               kernel_size=3, stride=1, padding=1,
                                               bias=False))

        def bn_function(self, inputs: List[Tensor]) -> Tensor:
            '''
            1. Image tensors in PyTorch are 4-dimensional: (batch, channel, height, width)
            2. A DenseLayer outputs a 4-dimensional tensor: (batch, 32, height, width)
            3. Features are therefore concatenated along dim 1 (the channel dimension),
               giving (batch, channel + 32, height, width)
            '''
            concated_features = torch.cat(inputs, 1)
            print(f"\nInput of DenseLayer {self.layer_idx+1} in DenseBlock {self.block_idx+1}: {concated_features.size()}")
            # 1x1 convolution: channels become 128, giving (batch, 128, height, width)
            bottleneck_output = self.conv1(self.relu1(self.norm1(concated_features)))
            return bottleneck_output

        def forward(self, input: Union[Tensor, List[Tensor]]) -> Tensor:
            # Wrap a single tensor in a list so that both cases are handled the same way,
            # e.g. the first input (batch,64,56,56) -> [(batch,64,56,56)]
            if isinstance(input, Tensor):
                prev_features = [input]
            else:
                prev_features = input
            # print(f"prev_features shapes: {[tuple(item.shape) for item in prev_features]}")
            # Concatenate the earlier feature maps, then BN1 -> ReLU1 -> Conv1
            bottleneck_output = self.bn_function(prev_features)
            # Feature extraction: spatial size unchanged, channels become 32,
            # giving (batch, 32, height, width)
            new_features = self.conv2(self.relu2(self.norm2(bottleneck_output)))
            # Return the new features, size (batch, 32, height, width)
            return new_features

1.2 Building the DenseBlock

1.2.1 Implementation steps for the DenseBlock

1. Create a _DenseBlock class that inherits from nn.ModuleDict

    class _DenseBlock(nn.ModuleDict):
        _version = 2

        def __init__(
            self,
            block_idx: int,
            num_layers: int,
            num_input_features: int,
            bn_size: int,
            growth_rate: int,
        ) -> None:

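Explanation: why does this class inherit from nn.ModuleDict?

An nn.ModuleDict behaves like a dictionary of submodules, so self.items() directly yields the (name, layer) pairs that were registered, in insertion order. The forward pass relies on this to iterate over the layers.

2. Create the DenseLayers, each with a different input width, and register them for later use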

            super(_DenseBlock, self).__init__()
            self.block_idx = block_idx
            # Example: for the first block, num_layers = 6 and the call is
            # _DenseBlock(num_layers=6, num_input_features=64, bn_size=4, growth_rate=32);
            # the 64 input channels come from the initial feature extraction.
            #
            # Every DenseLayer outputs the same number of channels, but its input also
            # contains all earlier features, so the input width grows from layer to layer.
            # The growth per layer equals the layer's output width, called growth_rate.
            for i in range(num_layers):
                layer = _DenseLayer(block_idx=self.block_idx,
                                    layer_idx=i,
                                    num_input_features=num_input_features + i * growth_rate,
                                    growth_rate=growth_rate,
                                    bn_size=bn_size)
                # Each iteration registers one more submodule
                self.add_module('denselayer%d' % (i + 1), layer)

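Explanation:

1. When a DenseBlock object is created, it is told how many DenseLayers it should contain, so that it can create layers with the right input widths.

2. A for loop builds the DenseLayers and registers each one with add_module for later use.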

3. forward() runs when the block object is called

        def forward(self, init_features: Tensor) -> Tensor:
            # Put the initial features in a list, e.g. for the first block
            # (batch,64,56,56) -> features = [(batch,64,56,56)]
            features = [init_features]
            print("\n=============================================\n")
            print(f"init_features shape of DenseBlock {self.block_idx+1}: {init_features.size()}")
            # Iterate over all layers
            for name, layer in self.items():
                # Each layer produces new features of size (batch,32,height,width).
                # Every DenseLayer in a DenseBlock keeps the spatial size unchanged,
                # otherwise the features could not be concatenated.
                new_features = layer(features)
                # After the first layer of the first block:
                #   features = [(batch,64,56,56), (batch,32,56,56)]
                # after the second layer:
                #   features = [(batch,64,56,56), (batch,32,56,56), (batch,32,56,56)]
                # and so on
                features.append(new_features)
                print(f"features list after {name} of DenseBlock_{self.block_idx+1}: {[tuple(item.shape) for item in features]}")
            # A finished DenseBlock returns the concatenation of all features, with
            # num_layers*32 + init_features.shape[1] channels. For the first block this
            # is (batch,256,56,56).
            # The _Transition that follows then reduces it to (batch,128,28,28) to save
            # parameters; that is the input of the second DenseBlock, and so on.
            out = torch.cat(features, 1)
            print(f"\nFinal output shape of DenseBlock_{self.block_idx+1}: {out.size()}")
            return out

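Explanation:

1. The DenseBlock forward pass creates a features list, and the new features produced by each DenseLayer are appended to it.

2. Inside the for loop, every call to a DenseLayer produces one new feature map of size (batch,32,height,width), which is appended to the features list.

3. forward() finally returns the concatenation of all features. During the loop, however, the features list keeps every feature map as a separate tensor.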

1.2.2 Complete DenseBlock code

    class _DenseBlock(nn.ModuleDict):
        _version = 2

        def __init__(
            self,
            block_idx: int,
            num_layers: int,
            num_input_features: int,
            bn_size: int,
            growth_rate: int,
        ) -> None:
            super(_DenseBlock, self).__init__()
            self.block_idx = block_idx
            # Example: for the first block, num_layers = 6 and the call is
            # _DenseBlock(num_layers=6, num_input_features=64, bn_size=4, growth_rate=32);
            # the 64 input channels come from the initial feature extraction.
            #
            # Every DenseLayer outputs the same number of channels, but its input also
            # contains all earlier features, so the input width grows from layer to layer.
            # The growth per layer equals the layer's output width, called growth_rate.
            for i in range(num_layers):
                layer = _DenseLayer(block_idx=self.block_idx,
                                    layer_idx=i,
                                    num_input_features=num_input_features + i * growth_rate,
                                    growth_rate=growth_rate,
                                    bn_size=bn_size)
                # Each iteration registers one more submodule
                self.add_module('denselayer%d' % (i + 1), layer)

        def forward(self, init_features: Tensor) -> Tensor:
            # Put the initial features in a list, e.g. for the first block
            # (batch,64,56,56) -> features = [(batch,64,56,56)]
            features = [init_features]
            print("\n=============================================\n")
            print(f"init_features shape of DenseBlock {self.block_idx+1}: {init_features.size()}")
            # Iterate over all layers
            for name, layer in self.items():
                # Each layer produces new features of size (batch,32,height,width).
                # Every DenseLayer in a DenseBlock keeps the spatial size unchanged,
                # otherwise the features could not be concatenated.
                new_features = layer(features)
                # After the first layer of the first block:
                #   features = [(batch,64,56,56), (batch,32,56,56)]
                # after the second layer:
                #   features = [(batch,64,56,56), (batch,32,56,56), (batch,32,56,56)]
                # and so on
                features.append(new_features)
                print(f"features list after {name} of DenseBlock_{self.block_idx+1}: {[tuple(item.shape) for item in features]}")
            # A finished DenseBlock returns the concatenation of all features, with
            # num_layers*32 + init_features.shape[1] channels. For the first block this
            # is (batch,256,56,56).
            # The _Transition that follows then reduces it to (batch,128,28,28) to save
            # parameters; that is the input of the second DenseBlock, and so on.
            out = torch.cat(features, 1)
            print(f"\nFinal output shape of DenseBlock_{self.block_idx+1}: {out.size()}")
            return out

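2. The _Transition module, used to reduce the feature dimensions and the number of parameters

    class _Transition(nn.Sequential):
        def __init__(self, num_input_features: int, num_output_features: int) -> None:
            super(_Transition, self).__init__()
            self.add_module('norm', nn.BatchNorm2d(num_input_features))
            self.add_module('relu', nn.ReLU(inplace=True))
            self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,
                                              kernel_size=1, stride=1, bias=False))
            self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))

Explanation:

The purpose of this module is to halve the height and width of the feature map (2x2 average pooling with stride 2) and to reduce the number of channels with a 1x1 convolution. DenseNet passes num_output_features = num_input_features // 2, so the channel count is halved as well.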
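As a quick sanity check, the shape change caused by a transition can be written down without running PyTorch. `transition_output_shape` is a hypothetical helper for illustration; it assumes the default of halving the channels and the 2x2, stride-2 average pooling used above.

```python
def transition_output_shape(shape, num_output_features=None):
    """Shape after BN -> ReLU -> 1x1 conv -> 2x2 average pooling with stride 2."""
    batch, channels, height, width = shape
    if num_output_features is None:
        # DenseNet in this post always passes half of the input channels.
        num_output_features = channels // 2
    # The 1x1 convolution changes only the channels; the pooling halves H and W.
    return (batch, num_output_features, height // 2, width // 2)


print(transition_output_shape((8, 256, 56, 56)))   # (8, 128, 28, 28)
print(transition_output_shape((8, 512, 28, 28)))   # (8, 256, 14, 14)
```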

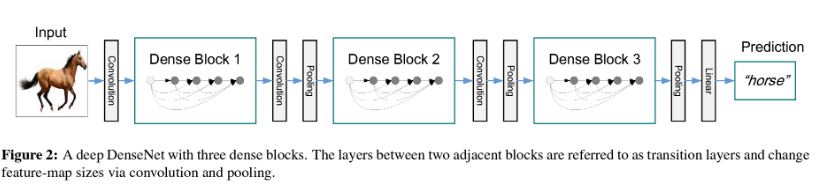

3. Building DenseNet

As the figure below shows, DenseNet consists mainly of several DenseBlocks.


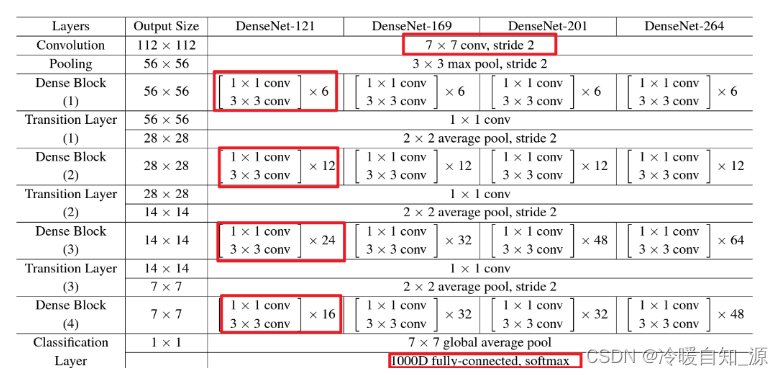

3.1 Parameter details of the DenseNet variants

3.2 Implementation walkthrough

1. Create a DenseNet class that inherits from nn.Module and set up its parameters

    class DenseNet(nn.Module):
        def __init__(
            self,
            growth_rate: int = 32,
            block_config: Tuple[int, int, int, int] = (6, 12, 24, 16),
            num_init_features: int = 64,
            bn_size: int = 4,
            num_classes: int = 1000,
        ) -> None:
            super(DenseNet, self).__init__()

2. Extract initial features from the input image, giving a feature map of size (batch,64,56,56)

            # First convolution: input (3,224,224) -> (64,56,56)
            self.features = nn.Sequential(OrderedDict([
                ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2,
                                    padding=3, bias=False)),
                ('norm0', nn.BatchNorm2d(num_init_features)),
                ('relu0', nn.ReLU(inplace=True)),
                ('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),
            ]))
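The reduction from 224 to 56 follows from the standard output-size formula for convolution and pooling, floor((n + 2p - k) / s) + 1. The sketch below applies it to conv0 and pool0; `conv_out_size` is a hypothetical helper name, and dilation is assumed to be 1.

```python
def conv_out_size(size, kernel_size, stride, padding):
    """Output length along one spatial axis, with dilation 1 and floor rounding."""
    return (size + 2 * padding - kernel_size) // stride + 1


after_conv0 = conv_out_size(224, kernel_size=7, stride=2, padding=3)
after_pool0 = conv_out_size(after_conv0, kernel_size=3, stride=2, padding=1)
print(after_conv0)   # 112
print(after_pool0)   # 56
```

Both layers halve the resolution, which gives the 56x56 feature map that enters the first DenseBlock.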

3. Create the DenseBlocks according to the parameters and add them to the model

            # The first DenseBlock receives num_init_features = 64 channels
            num_features = num_init_features
            # Four DenseBlocks are created, containing 6, 12, 24 and 16 DenseLayers.
            # Each DenseLayer contains two convolutions.
            for i, num_layers in enumerate(block_config):
                # i=0: num_layers=6,  _DenseBlock(num_layers=6,  num_input_features=64,  bn_size=4, growth_rate=32); 64 comes from the initial feature extraction
                # i=1: num_layers=12, _DenseBlock(num_layers=12, num_input_features=128, bn_size=4, growth_rate=32); 128 is the halved width after transition1
                # i=2: num_layers=24, _DenseBlock(num_layers=24, num_input_features=256, bn_size=4, growth_rate=32); 256 is the halved width after transition2
                # i=3: num_layers=16, _DenseBlock(num_layers=16, num_input_features=512, bn_size=4, growth_rate=32); 512 is the halved width after transition3
                block = _DenseBlock(block_idx=i,
                                    num_layers=num_layers,
                                    num_input_features=num_features,
                                    bn_size=bn_size,
                                    growth_rate=growth_rate)
                # Add the block to the model
                self.features.add_module('denseblock%d' % (i + 1), block)

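4. After a DenseBlock the number of channels has changed, so update it

For example, with an input of (batch,64,56,56) and a DenseBlock with num_layers=6, the features leaving the first block on their way to the second one have size (batch,256,56,56).

                num_features = num_features + num_layers * growth_rate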

5. To reduce model complexity, shrink the features produced by the preceding DenseBlock

Continuing the example from step 4, the feature size after _Transition is (batch,128,28,28).

                # No transition is added after the last DenseBlock
                if i != len(block_config) - 1:
                    # i=0: _Transition(num_input_features=256,  num_output_features=128)
                    # i=1: _Transition(num_input_features=512,  num_output_features=256)
                    # i=2: _Transition(num_input_features=1024, num_output_features=512)
                    # i=3: last block, no _Transition is created
                    trans = _Transition(num_input_features=num_features,
                                        num_output_features=num_features // 2)
                    # Register it as a submodule of self.features
                    self.features.add_module('transition%d' % (i + 1), trans)
                    # num_features is halved and becomes the input width of the next block:
                    #   after round 1: num_features = 128
                    #   after round 2: num_features = 256
                    #   after round 3: num_features = 512
                    #   round 4 has no transition, so num_features stays 1024
                    num_features = num_features // 2
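The bookkeeping of num_features across the loop can be replayed in plain Python. This sketch mirrors the update rules shown above; `trace_channels` is a hypothetical helper that only tracks integers and builds no layers.

```python
def trace_channels(block_config=(6, 12, 24, 16), num_init_features=64, growth_rate=32):
    """Return one (block_in, block_out, after_transition) tuple per DenseBlock."""
    trace = []
    num_features = num_init_features
    for i, num_layers in enumerate(block_config):
        block_in = num_features
        # Every DenseLayer contributes growth_rate new channels.
        num_features = num_features + num_layers * growth_rate
        block_out = num_features
        # All blocks except the last are followed by a halving transition.
        if i != len(block_config) - 1:
            num_features = num_features // 2
        trace.append((block_in, block_out, num_features))
    return trace


for row in trace_channels():
    print(row)
# (64, 256, 128)
# (128, 512, 256)
# (256, 1024, 512)
# (512, 1024, 1024)
```

The last row shows that the final block is not followed by a transition, so 1024 channels reach the final batch norm and the classifier.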

6. Finally add the BN layer and the classification layer

            # Final batch norm
            self.features.add_module('norm5', nn.BatchNorm2d(num_features))
            # Linear classification layer
            self.classifier = nn.Linear(num_features, num_classes)

7. The forward pass

        def forward(self, x: Tensor) -> Tensor:
            features = self.features(x)
            out = F.relu(features, inplace=True)
            out = F.adaptive_avg_pool2d(out, (1, 1))
            out = torch.flatten(out, 1)
            out = self.classifier(out)
            return out

3.3 Complete DenseNet code

    from collections import OrderedDict
    from typing import Tuple

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch import Tensor


    class _Transition(nn.Sequential):
        def __init__(self, num_input_features: int, num_output_features: int) -> None:
            super(_Transition, self).__init__()
            self.add_module('norm', nn.BatchNorm2d(num_input_features))
            self.add_module('relu', nn.ReLU(inplace=True))
            self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,
                                              kernel_size=1, stride=1, bias=False))
            self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))


    class DenseNet(nn.Module):
        def __init__(
            self,
            growth_rate: int = 32,
            block_config: Tuple[int, int, int, int] = (6, 12, 24, 16),
            num_init_features: int = 64,
            bn_size: int = 4,
            num_classes: int = 1000,
        ) -> None:
            super(DenseNet, self).__init__()
            # First convolution: input (3,224,224) -> (64,56,56)
            self.features = nn.Sequential(OrderedDict([
                ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2,
                                    padding=3, bias=False)),
                ('norm0', nn.BatchNorm2d(num_init_features)),
                ('relu0', nn.ReLU(inplace=True)),
                ('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),
            ]))
            # The first DenseBlock receives num_init_features = 64 channels
            num_features = num_init_features
            # Four DenseBlocks are created, containing 6, 12, 24 and 16 DenseLayers.
            # Each DenseLayer contains two convolutions.
            for i, num_layers in enumerate(block_config):
                # i=0: num_layers=6,  num_input_features=64  (from the initial feature extraction)
                # i=1: num_layers=12, num_input_features=128 (halved after transition1)
                # i=2: num_layers=24, num_input_features=256 (halved after transition2)
                # i=3: num_layers=16, num_input_features=512 (halved after transition3)
                block = _DenseBlock(block_idx=i,
                                    num_layers=num_layers,
                                    num_input_features=num_features,
                                    bn_size=bn_size,
                                    growth_rate=growth_rate)
                # Add the block to the model
                self.features.add_module('denseblock%d' % (i + 1), block)
                # Channels after the block (spatial size is unchanged inside a block):
                #   i=0: num_features = 64  + 6*32  = 256,  block output (256,56,56)
                #   i=1: num_features = 128 + 12*32 = 512,  block output (512,28,28)
                #   i=2: num_features = 256 + 24*32 = 1024, block output (1024,14,14)
                #   i=3: num_features = 512 + 16*32 = 1024, block output (1024,7,7)
                # The _Transition that follows halves both the channels and the spatial size.
                num_features = num_features + num_layers * growth_rate
                # No transition is added after the last DenseBlock
                if i != len(block_config) - 1:
                    # i=0: _Transition(num_input_features=256,  num_output_features=128)
                    # i=1: _Transition(num_input_features=512,  num_output_features=256)
                    # i=2: _Transition(num_input_features=1024, num_output_features=512)
                    # i=3: last block, no _Transition is created
                    trans = _Transition(num_input_features=num_features,
                                        num_output_features=num_features // 2)
                    # Register it as a submodule of self.features
                    self.features.add_module('transition%d' % (i + 1), trans)
                    # num_features is halved and becomes the input width of the next block:
                    # 128 after round 1, 256 after round 2, 512 after round 3;
                    # round 4 has no transition, so num_features stays 1024
                    num_features = num_features // 2
            # Final batch norm
            self.features.add_module('norm5', nn.BatchNorm2d(num_features))
            # Linear classification layer
            self.classifier = nn.Linear(num_features, num_classes)

        def forward(self, x: Tensor) -> Tensor:
            features = self.features(x)
            out = F.relu(features, inplace=True)
            out = F.adaptive_avg_pool2d(out, (1, 1))
            out = torch.flatten(out, 1)
            out = self.classifier(out)
            return out

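4. Creating and testing the network model

4.1 Creating and printing densenet121

Note: where does the 121 in densenet121 come from?

The parameters specify 4 blocks with num_layers = (6, 12, 24, 16), which gives 58 DenseLayers in total.

The code shows that each DenseLayer contains two convolutions. There are three _Transition layers with one convolution each, one convolution at the very beginning, and one fully connected layer at the end. In total: 58*2+3+1+1=121.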
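The layer count can be reproduced with a small function. `densenet_depth` is a hypothetical helper that only encodes the counting rule described above. The second call uses the block configuration (6, 12, 32, 32), which is the one torchvision uses for densenet169 and is not taken from this post.

```python
def densenet_depth(block_config):
    """Count the weighted layers: convolutions plus the final linear layer."""
    dense_convs = 2 * sum(block_config)        # two convolutions per DenseLayer
    transition_convs = len(block_config) - 1   # one 1x1 conv per transition
    stem_conv = 1                              # conv0
    classifier = 1                             # final fully connected layer
    return dense_convs + transition_convs + stem_conv + classifier


print(densenet_depth((6, 12, 24, 16)))   # 121
print(densenet_depth((6, 12, 32, 32)))   # 169
```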

    model = DenseNet(growth_rate=32, block_config=(6, 12, 24, 16), num_init_features=64)
    print(model)

- The printed model is as follows:

DenseNet(

(features): Sequential(

(conv0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(norm0): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu0): ReLU(inplace=True)

(pool0): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)

(denseblock1): _DenseBlock(

(denselayer1): _DenseLayer(

(norm1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(64, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer2): _DenseLayer(

(norm1): BatchNorm2d(96, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(96, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer3): _DenseLayer(

(norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer4): _DenseLayer(

(norm1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(160, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer5): _DenseLayer(

(norm1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(192, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer6): _DenseLayer(

(norm1): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(224, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

)

(transition1): _Transition(

(norm): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(pool): AvgPool2d(kernel_size=2, stride=2, padding=0)

)

(denseblock2): _DenseBlock(

(denselayer1): _DenseLayer(

(norm1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer2): _DenseLayer(

(norm1): BatchNorm2d(160, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(160, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer3): _DenseLayer(

(norm1): BatchNorm2d(192, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(192, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer4): _DenseLayer(

(norm1): BatchNorm2d(224, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(224, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer5): _DenseLayer(

(norm1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer6): _DenseLayer(

(norm1): BatchNorm2d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(288, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer7): _DenseLayer(

(norm1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(320, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer8): _DenseLayer(

(norm1): BatchNorm2d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(352, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer9): _DenseLayer(

(norm1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(384, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer10): _DenseLayer(

(norm1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(416, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer11): _DenseLayer(

(norm1): BatchNorm2d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(448, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer12): _DenseLayer(

(norm1): BatchNorm2d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(480, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

)

(transition2): _Transition(

(norm): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(pool): AvgPool2d(kernel_size=2, stride=2, padding=0)

)

(denseblock3): _DenseBlock(

(denselayer1): _DenseLayer(

(norm1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer2): _DenseLayer(

(norm1): BatchNorm2d(288, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(288, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer3): _DenseLayer(

(norm1): BatchNorm2d(320, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(320, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer4): _DenseLayer(

(norm1): BatchNorm2d(352, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(352, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer5): _DenseLayer(

(norm1): BatchNorm2d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(384, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer6): _DenseLayer(

(norm1): BatchNorm2d(416, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(416, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer7): _DenseLayer(

(norm1): BatchNorm2d(448, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(448, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer8): _DenseLayer(

(norm1): BatchNorm2d(480, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(480, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer9): _DenseLayer(

(norm1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer10): _DenseLayer(

(norm1): BatchNorm2d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(544, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer11): _DenseLayer(

(norm1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(576, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer12): _DenseLayer(

(norm1): BatchNorm2d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(608, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer13): _DenseLayer(

(norm1): BatchNorm2d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(640, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer14): _DenseLayer(

(norm1): BatchNorm2d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(672, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer15): _DenseLayer(

(norm1): BatchNorm2d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(704, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer16): _DenseLayer(

(norm1): BatchNorm2d(736, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(736, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer17): _DenseLayer(

(norm1): BatchNorm2d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer18): _DenseLayer(

(norm1): BatchNorm2d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(800, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer19): _DenseLayer(

(norm1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer20): _DenseLayer(

(norm1): BatchNorm2d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(864, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer21): _DenseLayer(

(norm1): BatchNorm2d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(896, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer22): _DenseLayer(

(norm1): BatchNorm2d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(928, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer23): _DenseLayer(

(norm1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(960, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer24): _DenseLayer(

(norm1): BatchNorm2d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(992, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

)

(transition3): _Transition(

(norm): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu): ReLU(inplace=True)

(conv): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(pool): AvgPool2d(kernel_size=2, stride=2, padding=0)

)

(denseblock4): _DenseBlock(

(denselayer1): _DenseLayer(

(norm1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer2): _DenseLayer(

(norm1): BatchNorm2d(544, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(544, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer3): _DenseLayer(

(norm1): BatchNorm2d(576, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(576, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer4): _DenseLayer(

(norm1): BatchNorm2d(608, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(608, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer5): _DenseLayer(

(norm1): BatchNorm2d(640, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(640, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer6): _DenseLayer(

(norm1): BatchNorm2d(672, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(672, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer7): _DenseLayer(

(norm1): BatchNorm2d(704, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(704, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer8): _DenseLayer(

(norm1): BatchNorm2d(736, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(736, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer9): _DenseLayer(

(norm1): BatchNorm2d(768, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer10): _DenseLayer(

(norm1): BatchNorm2d(800, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(800, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer11): _DenseLayer(

(norm1): BatchNorm2d(832, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(832, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer12): _DenseLayer(

(norm1): BatchNorm2d(864, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(864, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer13): _DenseLayer(

(norm1): BatchNorm2d(896, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(896, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer14): _DenseLayer(

(norm1): BatchNorm2d(928, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(928, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer15): _DenseLayer(

(norm1): BatchNorm2d(960, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(960, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

(denselayer16): _DenseLayer(

(norm1): BatchNorm2d(992, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu1): ReLU(inplace=True)

(conv1): Conv2d(992, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(norm2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(relu2): ReLU(inplace=True)

(conv2): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

)

)

(norm5): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(classifier): Linear(in_features=1024, out_features=1000, bias=True)

)
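The channel counts in the printed structure above follow one rule: within a block, each layer receives 32 more channels than the previous one, and each transition halves the channel count. The following is a minimal sketch of that bookkeeping in plain Python. It assumes the DenseNet-121 configuration that the printout is consistent with (block sizes 6, 12, 24, 16, growth rate 32, 64 initial features). The function name `trace_channels` is hypothetical and is not part of the original code.

```python
def trace_channels(block_config=(6, 12, 24, 16), growth_rate=32, num_init_features=64):
    """For every dense block, return (block index, input channels of each layer,
    channels leaving the block before any transition)."""
    trace = []
    channels = num_init_features
    for block_idx, num_layers in enumerate(block_config, start=1):
        # Layer i (0-based) sees the block input plus i earlier layer outputs.
        layer_inputs = [channels + i * growth_rate for i in range(num_layers)]
        channels += num_layers * growth_rate
        trace.append((block_idx, layer_inputs, channels))
        if block_idx != len(block_config):
            channels //= 2  # the transition's 1x1 conv halves the channel count
    return trace


for block_idx, layer_inputs, out_channels in trace_channels():
    print(f"denseblock{block_idx}: first layer in={layer_inputs[0]}, "
          f"last layer in={layer_inputs[-1]}, block out={out_channels}")
```

For the third block this gives 992 input channels for `denselayer24` and 1024 channels entering `transition3`, which agrees with the `BatchNorm2d(992, ...)` and `BatchNorm2d(1024, ...)` entries printed above.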

4.2 Printing detailed per-layer information with torchsummary

4.2.1 Code and printed model information

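- Code

# The input_data keyword and the "type:depth-idx" table below appear to match the torch-summary package, which is imported as torchsummary

from torchsummary import summary

summary(model=model, input_data=(3, 224, 224))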

==========================================================================================

Layer (type:depth-idx) Output Shape Param #

==========================================================================================

├─Sequential: 1-1 [-1, 1024, 7, 7] --

| └─Conv2d: 2-1 [-1, 64, 112, 112] 9,408

| └─BatchNorm2d: 2-2 [-1, 64, 112, 112] 128

| └─ReLU: 2-3 [-1, 64, 112, 112] --

| └─MaxPool2d: 2-4 [-1, 64, 56, 56] --

| └─_DenseBlock: 2-5 [-1, 256, 56, 56] --

| | └─_DenseLayer: 3-1 [-1, 32, 56, 56] 45,440

| | └─_DenseLayer: 3-2 [-1, 32, 56, 56] 49,600

| | └─_DenseLayer: 3-3 [-1, 32, 56, 56] 53,760

| | └─_DenseLayer: 3-4 [-1, 32, 56, 56] 57,920

| | └─_DenseLayer: 3-5 [-1, 32, 56, 56] 62,080

| | └─_DenseLayer: 3-6 [-1, 32, 56, 56] 66,240

| └─_Transition: 2-6 [-1, 128, 28, 28] --

| | └─BatchNorm2d: 3-7 [-1, 256, 56, 56] 512

| | └─ReLU: 3-8 [-1, 256, 56, 56] --

| | └─Conv2d: 3-9 [-1, 128, 56, 56] 32,768

| | └─AvgPool2d: 3-10 [-1, 128, 28, 28] --

| └─_DenseBlock: 2-7 [-1, 512, 28, 28] --

| | └─_DenseLayer: 3-11 [-1, 32, 28, 28] 53,760

| | └─_DenseLayer: 3-12 [-1, 32, 28, 28] 57,920

| | └─_DenseLayer: 3-13 [-1, 32, 28, 28] 62,080

| | └─_DenseLayer: 3-14 [-1, 32, 28, 28] 66,240

| | └─_DenseLayer: 3-15 [-1, 32, 28, 28] 70,400

| | └─_DenseLayer: 3-16 [-1, 32, 28, 28] 74,560

| | └─_DenseLayer: 3-17 [-1, 32, 28, 28] 78,720

| | └─_DenseLayer: 3-18 [-1, 32, 28, 28] 82,880

| | └─_DenseLayer: 3-19 [-1, 32, 28, 28] 87,040

| | └─_DenseLayer: 3-20 [-1, 32, 28, 28] 91,200

| | └─_DenseLayer: 3-21 [-1, 32, 28, 28] 95,360

| | └─_DenseLayer: 3-22 [-1, 32, 28, 28] 99,520

| └─_Transition: 2-8 [-1, 256, 14, 14] --

| | └─BatchNorm2d: 3-23 [-1, 512, 28, 28] 1,024

| | └─ReLU: 3-24 [-1, 512, 28, 28] --

| | └─Conv2d: 3-25 [-1, 256, 28, 28] 131,072

| | └─AvgPool2d: 3-26 [-1, 256, 14, 14] --

| └─_DenseBlock: 2-9 [-1, 1024, 14, 14] --

| | └─_DenseLayer: 3-27 [-1, 32, 14, 14] 70,400

| | └─_DenseLayer: 3-28 [-1, 32, 14, 14] 74,560

| | └─_DenseLayer: 3-29 [-1, 32, 14, 14] 78,720

| | └─_DenseLayer: 3-30 [-1, 32, 14, 14] 82,880

| | └─_DenseLayer: 3-31 [-1, 32, 14, 14] 87,040

| | └─_DenseLayer: 3-32 [-1, 32, 14, 14] 91,200

| | └─_DenseLayer: 3-33 [-1, 32, 14, 14] 95,360

| | └─_DenseLayer: 3-34 [-1, 32, 14, 14] 99,520

| | └─_DenseLayer: 3-35 [-1, 32, 14, 14] 103,680

| | └─_DenseLayer: 3-36 [-1, 32, 14, 14] 107,840

| | └─_DenseLayer: 3-37 [-1, 32, 14, 14] 112,000

| | └─_DenseLayer: 3-38 [-1, 32, 14, 14] 116,160

| | └─_DenseLayer: 3-39 [-1, 32, 14, 14] 120,320

| | └─_DenseLayer: 3-40 [-1, 32, 14, 14] 124,480

| | └─_DenseLayer: 3-41 [-1, 32, 14, 14] 128,640

| | └─_DenseLayer: 3-42 [-1, 32, 14, 14] 132,800

| | └─_DenseLayer: 3-43 [-1, 32, 14, 14] 136,960

| | └─_DenseLayer: 3-44 [-1, 32, 14, 14] 141,120

| | └─_DenseLayer: 3-45 [-1, 32, 14, 14] 145,280

| | └─_DenseLayer: 3-46 [-1, 32, 14, 14] 149,440

| | └─_DenseLayer: 3-47 [-1, 32, 14, 14] 153,600

| | └─_DenseLayer: 3-48 [-1, 32, 14, 14] 157,760

| | └─_DenseLayer: 3-49 [-1, 32, 14, 14] 161,920

| | └─_DenseLayer: 3-50 [-1, 32, 14, 14] 166,080

| └─_Transition: 2-10 [-1, 512, 7, 7] --

| | └─BatchNorm2d: 3-51 [-1, 1024, 14, 14] 2,048

| | └─ReLU: 3-52 [-1, 1024, 14, 14] --

| | └─Conv2d: 3-53 [-1, 512, 14, 14] 524,288

| | └─AvgPool2d: 3-54 [-1, 512, 7, 7] --

| └─_DenseBlock: 2-11 [-1, 1024, 7, 7] --

| | └─_DenseLayer: 3-55 [-1, 32, 7, 7] 103,680

| | └─_DenseLayer: 3-56 [-1, 32, 7, 7] 107,840

| | └─_DenseLayer: 3-57 [-1, 32, 7, 7] 112,000

| | └─_DenseLayer: 3-58 [-1, 32, 7, 7] 116,160

| | └─_DenseLayer: 3-59 [-1, 32, 7, 7] 120,320

| | └─_DenseLayer: 3-60 [-1, 32, 7, 7] 124,480

| | └─_DenseLayer: 3-61 [-1, 32, 7, 7] 128,640

| | └─_DenseLayer: 3-62 [-1, 32, 7, 7] 132,800

| | └─_DenseLayer: 3-63 [-1, 32, 7, 7] 136,960

| | └─_DenseLayer: 3-64 [-1, 32, 7, 7] 141,120

| | └─_DenseLayer: 3-65 [-1, 32, 7, 7] 145,280

| | └─_DenseLayer: 3-66 [-1, 32, 7, 7] 149,440

| | └─_DenseLayer: 3-67 [-1, 32, 7, 7] 153,600

| | └─_DenseLayer: 3-68 [-1, 32, 7, 7] 157,760

| | └─_DenseLayer: 3-69 [-1, 32, 7, 7] 161,920

| | └─_DenseLayer: 3-70 [-1, 32, 7, 7] 166,080

| └─BatchNorm2d: 2-12 [-1, 1024, 7, 7] 2,048

├─Linear: 1-2 [-1, 1000] 1,025,000

==========================================================================================

Total params: 7,978,856

Trainable params: 7,978,856

Non-trainable params: 0

Total mult-adds (G): 2.85

==========================================================================================

Input size (MB): 0.57

Forward/backward pass size (MB): 172.18

Params size (MB): 30.44

Estimated Total Size (MB): 203.19

==========================================================================================
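The parameter counts in the table can be checked by hand. The following is a minimal sketch in plain Python. It counts a `BatchNorm2d` as two parameters per channel (weight and bias), and a bias-free convolution as `in * out * k * k`. It assumes `bn_size=4` (inferred from the 128-channel `conv1` with growth rate 32) and a 7x7 stem convolution on 3 input channels (consistent with the 9,408 parameters listed for `Conv2d: 2-1`). The function names are hypothetical.

```python
def dense_layer_params(in_channels, growth_rate=32, bn_size=4):
    """Parameters of one _DenseLayer: norm1 + conv1 (1x1) + norm2 + conv2 (3x3)."""
    mid = bn_size * growth_rate              # 128 channels after the 1x1 conv
    norm1 = 2 * in_channels                  # BatchNorm weight + bias
    conv1 = in_channels * mid                # 1x1 conv, bias=False
    norm2 = 2 * mid
    conv2 = mid * growth_rate * 3 * 3        # 3x3 conv, bias=False
    return norm1 + conv1 + norm2 + conv2


def densenet_params(block_config=(6, 12, 24, 16), growth_rate=32,
                    num_init_features=64, num_classes=1000):
    """Total trainable parameters under the assumptions stated above."""
    total = 3 * num_init_features * 7 * 7 + 2 * num_init_features  # stem conv + BN
    channels = num_init_features
    for idx, num_layers in enumerate(block_config):
        for i in range(num_layers):
            total += dense_layer_params(channels + i * growth_rate, growth_rate)
        channels += num_layers * growth_rate
        if idx != len(block_config) - 1:
            # Transition: BatchNorm + 1x1 conv that halves the channels.
            total += 2 * channels + channels * (channels // 2)
            channels //= 2
    total += 2 * channels                          # final BatchNorm (norm5)
    total += channels * num_classes + num_classes  # linear classifier with bias
    return total


print(dense_layer_params(64))                          # first layer of block 1
print(densenet_params())                               # whole network
print(round(densenet_params() * 4 / 1024 ** 2, 2))     # float32 size in MB
```

Under these assumptions the first dense layer comes to 45,440 parameters and the whole network to 7,978,856, about 30.44 MB in float32. These agree with the `_DenseLayer: 3-1`, `Total params` and `Params size (MB)` rows of the table.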

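4.2.2 Printing custom debug information (key information for understanding how the code runs)

To make each layer's output easy to inspect, I added debug output when building each Block and Layer. This is very helpful for understanding the network. The log produced by a batch of 2 images is shown below.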
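The shapes in the log come from one mechanism: a DenseBlock keeps a list of feature maps, concatenates them along the channel dimension to form each layer's input, and appends each layer's 32-channel output to the list. The following is a minimal sketch that reproduces only this shape bookkeeping in plain Python, without PyTorch. The function name `simulate_dense_block` is hypothetical, and the sketch is not the author's debug code.

```python
def simulate_dense_block(init_shape, num_layers, growth_rate=32):
    """Track shapes only: return (input shape of each layer,
    final list of feature shapes, shape of the concatenated block output)."""
    batch, _, height, width = init_shape
    features = [init_shape]
    layer_inputs = []
    for _ in range(num_layers):
        # Concatenating along dim 1 adds up the channel counts.
        in_channels = sum(shape[1] for shape in features)
        layer_inputs.append((batch, in_channels, height, width))
        # Every layer contributes growth_rate new channels.
        features.append((batch, growth_rate, height, width))
    out_shape = (batch, sum(shape[1] for shape in features), height, width)
    return layer_inputs, features, out_shape


layer_inputs, features, out_shape = simulate_dense_block((2, 64, 56, 56), num_layers=6)
for idx, shape in enumerate(layer_inputs, start=1):
    print(f"DenseBlock 1, DenseLayer {idx} input: {shape}")
print(f"DenseBlock 1 output: {out_shape}")
```

With the first block's values (an initial shape of `(2, 64, 56, 56)` and 6 layers), the sixth layer receives 224 channels and the block returns 256 channels, as the first block's log entries report.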

=============================================

第1个DenseBlock的init_features的shape:torch.Size([2, 64, 56, 56])

第1个DenseBlock第1个DenseLayer的输入:torch.Size([2, 64, 56, 56])

经过DenseBlock_1的denselayer1层之后的features的List输出:[(2, 64, 56, 56), (2, 32, 56, 56)]

第1个DenseBlock第2个DenseLayer的输入:torch.Size([2, 96, 56, 56])

经过DenseBlock_1的denselayer2层之后的features的List输出:[(2, 64, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56)]

第1个DenseBlock第3个DenseLayer的输入:torch.Size([2, 128, 56, 56])

经过DenseBlock_1的denselayer3层之后的features的List输出:[(2, 64, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56)]

第1个DenseBlock第4个DenseLayer的输入:torch.Size([2, 160, 56, 56])

经过DenseBlock_1的denselayer4层之后的features的List输出:[(2, 64, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56)]

第1个DenseBlock第5个DenseLayer的输入:torch.Size([2, 192, 56, 56])

经过DenseBlock_1的denselayer5层之后的features的List输出:[(2, 64, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56)]

第1个DenseBlock第6个DenseLayer的输入:torch.Size([2, 224, 56, 56])

经过DenseBlock_1的denselayer6层之后的features的List输出:[(2, 64, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56), (2, 32, 56, 56)]

经过一个DenseBlock_1最终返回features的shape:torch.Size([2, 256, 56, 56])

=============================================

第2个DenseBlock的init_features的shape:torch.Size([2, 128, 28, 28])

第2个DenseBlock第1个DenseLayer的输入:torch.Size([2, 128, 28, 28])

经过DenseBlock_2的denselayer1层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第2个DenseLayer的输入:torch.Size([2, 160, 28, 28])

经过DenseBlock_2的denselayer2层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第3个DenseLayer的输入:torch.Size([2, 192, 28, 28])

经过DenseBlock_2的denselayer3层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第4个DenseLayer的输入:torch.Size([2, 224, 28, 28])

经过DenseBlock_2的denselayer4层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第5个DenseLayer的输入:torch.Size([2, 256, 28, 28])

经过DenseBlock_2的denselayer5层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第6个DenseLayer的输入:torch.Size([2, 288, 28, 28])

经过DenseBlock_2的denselayer6层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第7个DenseLayer的输入:torch.Size([2, 320, 28, 28])

经过DenseBlock_2的denselayer7层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第8个DenseLayer的输入:torch.Size([2, 352, 28, 28])

经过DenseBlock_2的denselayer8层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第9个DenseLayer的输入:torch.Size([2, 384, 28, 28])

经过DenseBlock_2的denselayer9层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第10个DenseLayer的输入:torch.Size([2, 416, 28, 28])

经过DenseBlock_2的denselayer10层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第11个DenseLayer的输入:torch.Size([2, 448, 28, 28])

经过DenseBlock_2的denselayer11层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

第2个DenseBlock第12个DenseLayer的输入:torch.Size([2, 480, 28, 28])

经过DenseBlock_2的denselayer12层之后的features的List输出:[(2, 128, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28), (2, 32, 28, 28)]

经过一个DenseBlock_2最终返回features的shape:torch.Size([2, 512, 28, 28])

=============================================

第3个DenseBlock的init_features的shape:torch.Size([2, 256, 14, 14])

第3个DenseBlock第1个DenseLayer的输入:torch.Size([2, 256, 14, 14])

经过DenseBlock_3的denselayer1层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第2个DenseLayer的输入:torch.Size([2, 288, 14, 14])

经过DenseBlock_3的denselayer2层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第3个DenseLayer的输入:torch.Size([2, 320, 14, 14])

经过DenseBlock_3的denselayer3层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第4个DenseLayer的输入:torch.Size([2, 352, 14, 14])

经过DenseBlock_3的denselayer4层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第5个DenseLayer的输入:torch.Size([2, 384, 14, 14])

经过DenseBlock_3的denselayer5层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第6个DenseLayer的输入:torch.Size([2, 416, 14, 14])

经过DenseBlock_3的denselayer6层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第7个DenseLayer的输入:torch.Size([2, 448, 14, 14])

经过DenseBlock_3的denselayer7层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第8个DenseLayer的输入:torch.Size([2, 480, 14, 14])

经过DenseBlock_3的denselayer8层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第9个DenseLayer的输入:torch.Size([2, 512, 14, 14])

经过DenseBlock_3的denselayer9层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第10个DenseLayer的输入:torch.Size([2, 544, 14, 14])

经过DenseBlock_3的denselayer10层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第11个DenseLayer的输入:torch.Size([2, 576, 14, 14])

经过DenseBlock_3的denselayer11层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第12个DenseLayer的输入:torch.Size([2, 608, 14, 14])

经过DenseBlock_3的denselayer12层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第13个DenseLayer的输入:torch.Size([2, 640, 14, 14])

经过DenseBlock_3的denselayer13层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第14个DenseLayer的输入:torch.Size([2, 672, 14, 14])

经过DenseBlock_3的denselayer14层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第15个DenseLayer的输入:torch.Size([2, 704, 14, 14])

经过DenseBlock_3的denselayer15层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第16个DenseLayer的输入:torch.Size([2, 736, 14, 14])

经过DenseBlock_3的denselayer16层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第17个DenseLayer的输入:torch.Size([2, 768, 14, 14])

经过DenseBlock_3的denselayer17层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第18个DenseLayer的输入:torch.Size([2, 800, 14, 14])

经过DenseBlock_3的denselayer18层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第19个DenseLayer的输入:torch.Size([2, 832, 14, 14])

经过DenseBlock_3的denselayer19层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第20个DenseLayer的输入:torch.Size([2, 864, 14, 14])

经过DenseBlock_3的denselayer20层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第21个DenseLayer的输入:torch.Size([2, 896, 14, 14])

经过DenseBlock_3的denselayer21层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第22个DenseLayer的输入:torch.Size([2, 928, 14, 14])

经过DenseBlock_3的denselayer22层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第23个DenseLayer的输入:torch.Size([2, 960, 14, 14])

经过DenseBlock_3的denselayer23层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

第3个DenseBlock第24个DenseLayer的输入:torch.Size([2, 992, 14, 14])

经过DenseBlock_3的denselayer24层之后的features的List输出:[(2, 256, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14), (2, 32, 14, 14)]

经过一个DenseBlock_3最终返回features的shape:torch.Size([2, 1024, 14, 14])

=============================================

Shape of init_features for DenseBlock 4: torch.Size([2, 512, 7, 7])

Input to DenseLayer 1 of DenseBlock 4: torch.Size([2, 512, 7, 7])

features list after denselayer1 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 2 of DenseBlock 4: torch.Size([2, 544, 7, 7])

features list after denselayer2 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 3 of DenseBlock 4: torch.Size([2, 576, 7, 7])

features list after denselayer3 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 4 of DenseBlock 4: torch.Size([2, 608, 7, 7])

features list after denselayer4 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 5 of DenseBlock 4: torch.Size([2, 640, 7, 7])

features list after denselayer5 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 6 of DenseBlock 4: torch.Size([2, 672, 7, 7])

features list after denselayer6 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 7 of DenseBlock 4: torch.Size([2, 704, 7, 7])

features list after denselayer7 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 8 of DenseBlock 4: torch.Size([2, 736, 7, 7])

features list after denselayer8 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 9 of DenseBlock 4: torch.Size([2, 768, 7, 7])

features list after denselayer9 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 10 of DenseBlock 4: torch.Size([2, 800, 7, 7])

features list after denselayer10 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 11 of DenseBlock 4: torch.Size([2, 832, 7, 7])

features list after denselayer11 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 12 of DenseBlock 4: torch.Size([2, 864, 7, 7])

features list after denselayer12 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 13 of DenseBlock 4: torch.Size([2, 896, 7, 7])

features list after denselayer13 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 14 of DenseBlock 4: torch.Size([2, 928, 7, 7])

features list after denselayer14 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 15 of DenseBlock 4: torch.Size([2, 960, 7, 7])

features list after denselayer15 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Input to DenseLayer 16 of DenseBlock 4: torch.Size([2, 992, 7, 7])

features list after denselayer16 of DenseBlock_4: [(2, 512, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7), (2, 32, 7, 7)]

Shape of the features finally returned by DenseBlock_4: torch.Size([2, 1024, 7, 7])
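The channel counts in the log can be checked by hand: each DenseLayer appends `growth_rate` channels, so a block with `num_layers` layers turns `C` input channels into `C + num_layers * growth_rate`. The following is a minimal sketch of that arithmetic in plain Python (no PyTorch needed). The helper name `trace_dense_blocks` is hypothetical, and it assumes that a transition follows every block except the last and halves both the channel count and the spatial size, which is what the shapes in the log suggest.

```python
from typing import List, Tuple


def trace_dense_blocks(
    block_config: Tuple[int, ...] = (6, 12, 24, 16),
    growth_rate: int = 32,
    num_init_features: int = 64,
    spatial: int = 56,
) -> List[Tuple[int, int, int, int]]:
    """Return (block number, input channels, output channels, height/width) per block."""
    rows = []
    channels = num_init_features
    for i, num_layers in enumerate(block_config):
        in_channels = channels
        # Every DenseLayer appends growth_rate channels to the running feature list.
        channels = in_channels + num_layers * growth_rate
        rows.append((i + 1, in_channels, channels, spatial))
        # Assumption: a transition follows every block except the last one and
        # halves both the channel count and the spatial size.
        if i != len(block_config) - 1:
            channels //= 2
            spatial //= 2
    return rows


for block, c_in, c_out, size in trace_dense_blocks():
    print(f"DenseBlock {block}: {c_in} -> {c_out} channels at {size}x{size}")
```

With the default arguments, block 3 goes from 256 to 1024 channels at 14x14 and block 4 from 512 to 1024 channels at 7x7, which agrees with the shapes printed above.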

5.Complete code listing and troubleshooting

import re
from collections import OrderedDict
from typing import Any, List, Tuple

import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.utils.checkpoint as cp
from torch import Tensor
from torchsummary import summary

class _DenseLayer(nn.Module):
    def __init__(
        self,
        block_idx: int,
        layer_idx: int,
        num_input_features: int,
        growth_rate: int,
        bn_size: int,
    ) -> None:
        super(_DenseLayer, self).__init__()
        # Stored only for the debug printout: which block and which layer this is.
        self.block_idx = block_idx
        self.layer_idx = layer_idx
        # Bottleneck: BN -> ReLU -> 1x1 conv, producing bn_size * growth_rate channels.
        self.add_module('norm1', nn.BatchNorm2d(num_input_features))
        self.add_module('relu1', nn.ReLU(inplace=True))
        self.add_module('conv1', nn.Conv2d(num_input_features, bn_size *
                                           growth_rate, kernel_size=1, stride=1,
                                           bias=False))
        # Feature extraction: BN -> ReLU -> 3x3 conv, producing growth_rate channels.
        self.add_module('norm2', nn.BatchNorm2d(bn_size * growth_rate))
        self.add_module('relu2', nn.ReLU(inplace=True))
        self.add_module('conv2', nn.Conv2d(bn_size * growth_rate, growth_rate,
                                           kernel_size=3, stride=1, padding=1,
                                           bias=False))

    def bn_function(self, inputs: List[Tensor]) -> Tensor:
        '''
        1. Image tensors in PyTorch are 4-D: (batch, channel, height, width).
        2. Each DenseLayer outputs a 4-D tensor of shape (batch, 32, height, width).
        3. Features are therefore concatenated along dim 1 (the channel dimension),
           giving (batch, channel + 32, height, width).
        '''
        # Concatenate once and reuse the result for both the printout and the forward pass.
        concated_features = torch.cat(inputs, 1)
        print(f"\nInput to DenseLayer {self.layer_idx+1} of DenseBlock {self.block_idx+1}: {concated_features.size()}")
        # 1x1 conv: the channel count becomes bn_size * growth_rate = 128,
        # giving a tensor of shape (batch, 128, height, width).
        bottleneck_output = self.conv1(self.relu1(self.norm1(concated_features)))  # noqa: T484
        return bottleneck_output

    def forward(self, input: Tensor) -> Tensor:  # noqa: F811
        # If a single tensor is passed instead of a list, wrap it in a list so the
        # rest of the code can treat both cases the same way,
        # e.g. for the first layer: (batch, 64, 56, 56) -> [(batch, 64, 56, 56)].
        if isinstance(input, Tensor):
            prev_features = [input]
        else:
            prev_features = input
        # print(f"prev_features shapes: {[tuple(item.shape) for item in prev_features]}")
        # The previous feature maps go through BN1 -> ReLU1 -> Conv1.
        bottleneck_output = self.bn_function(prev_features)
        # Feature extraction: the spatial size is unchanged and the channel count
        # becomes growth_rate = 32, giving (batch, 32, height, width).
        new_features = self.conv2(self.relu2(self.norm2(bottleneck_output)))
        # Return the new features, shape (batch, 32, height, width).
        return new_features

class _DenseBlock(nn.ModuleDict):
    _version = 2

    def __init__(
        self,
        block_idx: int,
        num_layers: int,
        num_input_features: int,
        bn_size: int,
        growth_rate: int,
    ) -> None:
        super(_DenseBlock, self).__init__()
        self.block_idx = block_idx
        # For i = 0 in DenseNet.__init__, num_layers = 6 and the call is
        # _DenseBlock(num_layers=6, num_input_features=64, bn_size=4, growth_rate=32);
        # the 64 input channels come from the initial feature extraction.
        #
        # Every DenseLayer outputs the same number of channels (growth_rate), but its
        # input is the concatenation of all earlier features, so the input width of
        # layer i is num_input_features + i * growth_rate.
        for i in range(num_layers):
            layer = _DenseLayer(
                block_idx=self.block_idx,
                layer_idx=i,
                num_input_features=num_input_features + i * growth_rate,
                growth_rate=growth_rate,
                bn_size=bn_size,
            )
            # Register each layer as a submodule: denselayer1, denselayer2, ...
            self.add_module('denselayer%d' % (i + 1), layer)

    def forward(self, init_features: Tensor) -> Tensor:
        # Wrap the initial features in a list, e.g. for the first block
        # (batch, 64, 56, 56) -> features = [(batch, 64, 56, 56)].
        features = [init_features]
        print("\n=============================================\n")
        print(f"Shape of init_features for DenseBlock {self.block_idx+1}: {init_features.size()}")
        # Iterate over all DenseLayers.
        for name, layer in self.items():
            # Each layer returns new features of shape (batch, 32, height, width).
            # The spatial size must stay the same for every DenseLayer in a
            # DenseBlock, otherwise the features could not be concatenated.
            new_features = layer(features)
            # After the first layer of the first block:
            #   features = [(batch, 64, 56, 56), (batch, 32, 56, 56)]
            # after the second layer:
            #   features = [(batch, 64, 56, 56), (batch, 32, 56, 56), (batch, 32, 56, 56)]
            # and so on.
            features.append(new_features)
            # tuple(item.shape) prints the same shapes as .numpy().shape and also
            # works for tensors that require grad.
            print(f"features list after {name} of DenseBlock_{self.block_idx+1}: {[tuple(item.shape) for item in features]}")
        # At the end of a DenseBlock all features are concatenated into one tensor of
        # shape (batch, init_features.shape[1] + num_layers * 32, height, width).
        # For the first block this is (batch, 256, 56, 56). To reduce the number of
        # parameters, the Transition layer then shrinks it to (batch, 128, 28, 28),
        # which is the input of the second DenseBlock, and so on.
        out = torch.cat(features, 1)
        print(f"\nShape of the features finally returned by DenseBlock_{self.block_idx+1}: {out.size()}")
        return out

class _Transition(nn.Sequential):
    def __init__(self, num_input_features: int, num_output_features: int) -> None:
        super(_Transition, self).__init__()
        self.add_module('norm', nn.BatchNorm2d(num_input_features))
        self.add_module('relu', nn.ReLU(inplace=True))
        # The 1x1 conv changes the channel count to num_output_features.
        self.add_module('conv', nn.Conv2d(num_input_features, num_output_features,
                                          kernel_size=1, stride=1, bias=False))
        # 2x2 average pooling with stride 2 halves the height and width.
        self.add_module('pool', nn.AvgPool2d(kernel_size=2, stride=2))

class DenseNet(nn.Module):
    def __init__(
        self,
        growth_rate: int = 32,
        block_config: Tuple[int, int, int, int] = (6, 12, 24, 16),
        num_init_features: int = 64,
        bn_size: int = 4,
        num_classes: int = 1000,
    ) -> None:
        super(DenseNet, self).__init__()
        # Stem: input (3, 224, 224) -> conv0 -> (64, 112, 112) -> pool0 -> (64, 56, 56).
        self.features = nn.Sequential(OrderedDict([
            ('conv0', nn.Conv2d(3, num_init_features, kernel_size=7, stride=2,
                                padding=3, bias=False)),
            ('norm0', nn.BatchNorm2d(num_init_features)),
            ('relu0', nn.ReLU(inplace=True)),
            ('pool0', nn.MaxPool2d(kernel_size=3, stride=2, padding=1)),
        ]))
        # The first DenseBlock receives num_features = 64 channels.
        num_features = num_init_features
        # Four DenseBlocks are created, with 6, 12, 24 and 16 DenseLayers respectively.
        # Each DenseLayer contains two convolutions.
        for i, num_layers in enumerate(block_config):
            # i = 0: num_layers = 6,  _DenseBlock(num_layers=6,  num_input_features=64,  bn_size=4, growth_rate=32);
            #        the 64 channels come from the stem above.
            # i = 1: num_layers = 12, _DenseBlock(num_layers=12, num_input_features=128, bn_size=4, growth_rate=32);
            #        128 is the 256-channel output of block 1 halved by the transition.
            # i = 2: num_layers = 24, _DenseBlock(num_layers=24, num_input_features=256, bn_size=4, growth_rate=32);
            #        256 is the 512-channel output of block 2 halved by the transition.
            # i = 3: num_layers = 16, _DenseBlock(num_layers=16, num_input_features=512, bn_size=4, growth_rate=32);
            #        512 is the 1024-channel output of block 3 halved by the transition.
            block = _DenseBlock(block_idx=i,
                                num_layers=num_layers,