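```python
import numpy as np
import paddle
import paddle.fluid as fluid

# paddle.to_tensor needs Paddle 2.x, where dynamic-graph (imperative) mode is the default

# Logits for a batch of 4 samples over 4 classes, shape [4, 4]
logit_y = np.array([[1.23, 2.33, 3.33, 2.11],
                    [5.23, 2.33, 3.33, 2.11],
                    [1.23, 8.33, 3.33, 2.11],
                    [1.23, 2.33, 3.33, 2.11]]).astype(np.float32)

# Hard labels: one class index per sample, shape [4, 1], dtype int64
output_y1 = np.array([[3], [0], [1], [1]]).astype(np.int64)

# Soft labels: the same targets written as one-hot rows, shape [4, 4], dtype float32
output_y2 = np.array([[0, 0, 0, 1],
                      [1, 0, 0, 0],
                      [0, 1, 0, 0],
                      [0, 1, 0, 0]]).astype(np.float32)

logit_y = paddle.to_tensor(logit_y)
output_y1 = paddle.to_tensor(output_y1)
output_y2 = paddle.to_tensor(output_y2)

# print(logit_y.shape, output_y1.shape)
# print(logit_y.numpy(), output_y1.numpy())
```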
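The two label arrays above encode the same targets: `output_y1` stores class indices and `output_y2` stores the matching one-hot rows. A minimal NumPy sketch of that correspondence follows; the helper `to_one_hot` is hypothetical and not a Paddle API.

```python
import numpy as np

# Same hard labels as above: class indices, shape [N, 1]
hard_labels = np.array([[3], [0], [1], [1]], dtype=np.int64)
num_classes = 4

def to_one_hot(labels, num_classes):
    """Hypothetical helper: turn [N, 1] class indices into an [N, num_classes] float32 one-hot matrix."""
    # Row k of the identity matrix is the one-hot vector for class k
    return np.eye(num_classes, dtype=np.float32)[labels[:, 0]]

soft_labels = to_one_hot(hard_labels, num_classes)
print(soft_labels)
# [[0. 0. 0. 1.]
#  [1. 0. 0. 0.]
#  [0. 1. 0. 0.]
#  [0. 1. 0. 0.]]
```

The result is exactly the `output_y2` matrix used for the soft-label call.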

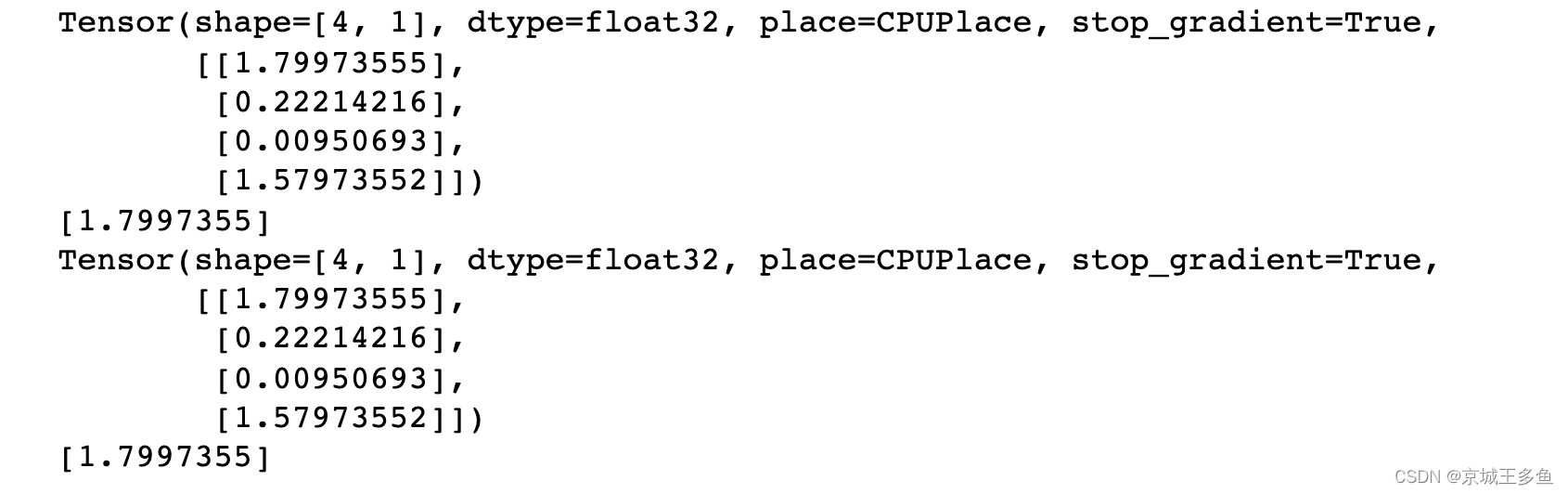

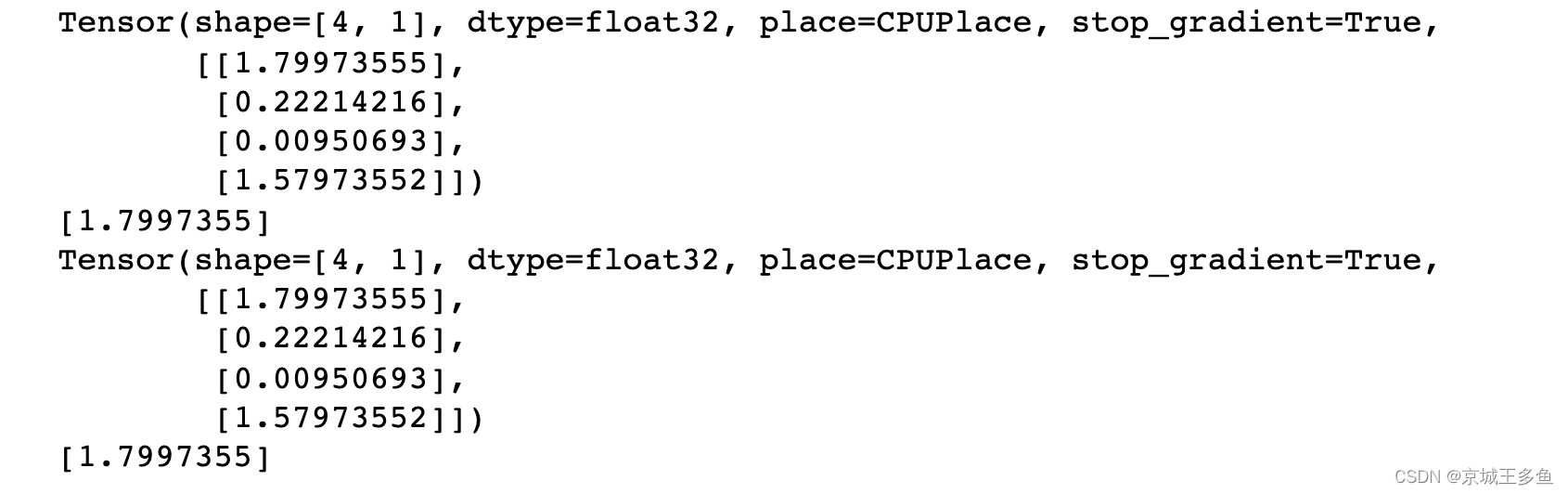

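```python
# Hard labels: label holds class indices (soft_label defaults to False).
# The loss has shape [4, 1], one value per sample.
loss1 = fluid.layers.softmax_with_cross_entropy(logit_y, output_y1)
print(loss1)
print(loss1.numpy()[0])  # loss of the first sample

# Soft labels: label holds a probability distribution per sample
loss2 = fluid.layers.softmax_with_cross_entropy(logit_y, output_y2, soft_label=True)
print(loss2)
print(loss2.numpy()[0])

# Because output_y2 is the one-hot form of output_y1, loss2 should match loss1 up to float error.
# Paddle 2.x also exposes this op as paddle.nn.functional.softmax_with_cross_entropy.
```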
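To sanity-check what the two calls compute, here is a NumPy reference sketch (a hand-written check, not Paddle's implementation) of softmax cross-entropy with hard and with soft labels, using the same logits and labels. With one-hot soft labels both forms reduce to `-log(softmax(logits)[label])`, so the two results should agree.

```python
import numpy as np

logits = np.array([[1.23, 2.33, 3.33, 2.11],
                   [5.23, 2.33, 3.33, 2.11],
                   [1.23, 8.33, 3.33, 2.11],
                   [1.23, 2.33, 3.33, 2.11]], dtype=np.float32)
hard_labels = np.array([[3], [0], [1], [1]], dtype=np.int64)
soft_labels = np.eye(4, dtype=np.float32)[hard_labels[:, 0]]

def log_softmax(x, axis=-1):
    # Subtract the row max before exponentiating so exp() cannot overflow
    shifted = x - np.max(x, axis=axis, keepdims=True)
    return shifted - np.log(np.sum(np.exp(shifted), axis=axis, keepdims=True))

def ce_hard(logits, labels):
    # loss_i = -log_softmax(logits_i)[label_i], shape [N, 1]
    return -np.take_along_axis(log_softmax(logits), labels, axis=-1)

def ce_soft(logits, labels):
    # loss_i = -sum_j labels_ij * log_softmax(logits_i)_j, shape [N, 1]
    return -np.sum(labels * log_softmax(logits), axis=-1, keepdims=True)

loss_hard = ce_hard(logits, hard_labels)
loss_soft = ce_soft(logits, soft_labels)
print(loss_hard.shape)                    # (4, 1)
print(np.allclose(loss_hard, loss_soft))  # True
```

The per-sample values from this sketch can be compared against `loss1.numpy()` and `loss2.numpy()` from the Paddle calls.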

Official documentation (softmax_with_cross_entropy API docs, PaddlePaddle deep learning platform): https://www.paddlepaddle.org.cn/documentation/docs/zh/1.8/api_cn/layers_cn/softmax_with_cross_entropy_cn.html. According to the docs, this OP implements the softmax cross-entropy loss function. It fuses the softmax operation and the cross-entropy loss computation into one step, which yields numerically more stable gradients. Because the operation applies softmax along the `axis` dimension of `logits`, …
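The stability argument for fusing softmax and cross-entropy can be illustrated with a small NumPy sketch. It demonstrates the log-sum-exp trick in general and is not Paddle's kernel; `naive_ce` and `fused_ce` are hypothetical helper names. Computing softmax first and then taking the log breaks down for large logits, while the fused form `logsumexp(logits) - logits[label]` stays finite.

```python
import numpy as np

def naive_ce(logits, label):
    # Two separate steps: softmax, then -log of the picked probability
    probs = np.exp(logits) / np.sum(np.exp(logits))
    return -np.log(probs[label])

def fused_ce(logits, label):
    # Fused form: loss = logsumexp(logits) - logits[label],
    # with the max subtracted so exp() never overflows
    m = np.max(logits)
    return m + np.log(np.sum(np.exp(logits - m))) - logits[label]

logits = np.array([1000.0, 10.0, -5.0])
with np.errstate(over="ignore", invalid="ignore", divide="ignore"):
    print(naive_ce(logits, 0))  # nan: exp(1000) overflows to inf, and inf / inf = nan
    print(naive_ce(logits, 1))  # inf: the probability underflows to 0, and -log(0) = inf
print(fused_ce(logits, 0))      # 0.0
print(fused_ce(logits, 1))      # 990.0
```

The fused form also has a simple gradient with respect to the logits, `softmax(logits) - one_hot(label)`, which avoids dividing by tiny probabilities.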
