Lambda Layer

Use the Lambda layer API provided by keras.layers. First load and normalize the MNIST data:

import tensorflow as tf

# load MNIST and scale the pixel values to [0, 1]
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

A Lambda layer can wrap a lambda expression, for example:

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(128),
    tf.keras.layers.Lambda(lambda x: tf.abs(x)),
    tf.keras.layers.Dense(10, activation='softmax')
])

You can also pass a named function instead of a lambda expression:

from tensorflow.keras import backend as K

def my_relu(x):
    # element-wise max(x, 0), i.e. ReLU
    return K.maximum(x, 0.0)

model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(128),
    tf.keras.layers.Lambda(my_relu),
    tf.keras.layers.Dense(10, activation='softmax')
])

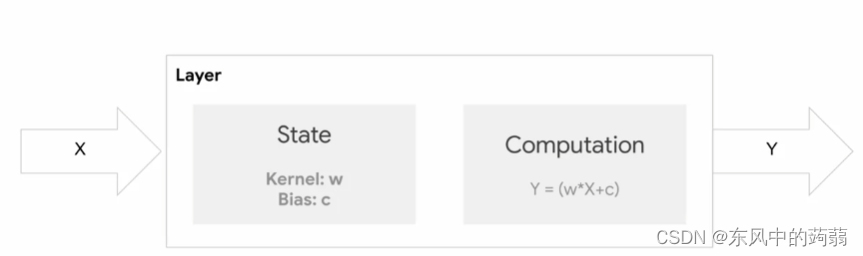

The Lambda layers defined above are not trainable: they have no weights.
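To check this claim, here is a minimal sketch, assuming TensorFlow 2.x is installed. The variable names are illustrative and not from the original post. It calls a Lambda layer and a Dense layer once each and counts their trainable weights:

```python
import tensorflow as tf

# A Lambda layer only wraps a function; a Dense layer owns a kernel and a bias.
lambda_layer = tf.keras.layers.Lambda(lambda x: tf.abs(x))
dense_layer = tf.keras.layers.Dense(4)

x = tf.constant([[-1.0, 2.0, -3.0]])

# Calling each layer once builds it.
lambda_out = lambda_layer(x)
dense_out = dense_layer(x)

n_lambda = len(lambda_layer.trainable_weights)
n_dense = len(dense_layer.trainable_weights)

print("Lambda output:", lambda_out.numpy())
print("Lambda trainable weights:", n_lambda)
print("Dense trainable weights:", n_dense)
```

The Lambda layer reports no trainable weights, while the Dense layer reports two (kernel and bias), so nothing inside a Lambda layer is updated during training.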

A typical layer has two parts: state (its weights) and a computation (the forward pass from inputs to outputs).

Defining a class

We can also create a new layer class by inheriting from the base class Layer.

# inherit from this base class
from tensorflow.keras.layers import Layer

class SimpleDense(Layer):

    def __init__(self, units=32, activation=None):
        '''Initializes the instance attributes'''
        super().__init__()
        self.units = units
        self.activation = tf.keras.activations.get(activation)

    def build(self, input_shape):
        '''Create the state of the layer (weights)'''
        # initialize the weights
        w_init = tf.random_normal_initializer()
        self.w = tf.Variable(name="kernel",
                             initial_value=w_init(shape=(input_shape[-1], self.units),
                                                  dtype='float32'),
                             trainable=True)

        # initialize the biases
        b_init = tf.zeros_initializer()
        self.b = tf.Variable(name="bias",
                             initial_value=b_init(shape=(self.units,), dtype='float32'),
                             trainable=True)

    def call(self, inputs):
        '''Defines the computation from inputs to outputs'''
        return self.activation(tf.matmul(inputs, self.w) + self.b)
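The same layer can also be sketched with `add_weight`, which asks Keras to create and register the weights itself. This variant is an alternative and not the original post's code; the class name `SimpleDenseAddWeight` is hypothetical. Some newer Keras releases may not register raw `tf.Variable` attributes as layer weights, which is an assumption worth verifying against your installed version. The sketch also shows that `build` runs lazily, on the first call:

```python
import tensorflow as tf

class SimpleDenseAddWeight(tf.keras.layers.Layer):
    """Hypothetical variant of SimpleDense that creates its state via add_weight."""

    def __init__(self, units=32, activation=None):
        super().__init__()
        self.units = units
        self.activation = tf.keras.activations.get(activation)

    def build(self, input_shape):
        # add_weight creates the variable and registers it with the layer
        self.w = self.add_weight(name="kernel",
                                 shape=(input_shape[-1], self.units),
                                 initializer="random_normal",
                                 trainable=True)
        self.b = self.add_weight(name="bias",
                                 shape=(self.units,),
                                 initializer="zeros",
                                 trainable=True)

    def call(self, inputs):
        return self.activation(tf.matmul(inputs, self.w) + self.b)

layer = SimpleDenseAddWeight(units=3)
n_before = len(layer.weights)       # build() has not run yet
out = layer(tf.ones((2, 4)))        # first call triggers build() with the input shape
n_after = len(layer.weights)        # kernel and bias now exist

print(n_before, n_after, out.shape)
```

Because the weights are created in `build` and not in `__init__`, the layer does not need to know its input size when it is constructed.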

We do not need to implement any back-propagation in the custom layer. Specifying the forward pass is enough, because TensorFlow derives the gradients automatically.
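To illustrate why only the forward pass is needed, here is a minimal sketch using `tf.GradientTape`. The numbers are made up for illustration. The tape records the same forward computation as `call` (a matmul plus a bias) and produces the gradients without any hand-written backward code:

```python
import tensorflow as tf

# Illustrative values, not taken from the original post.
w = tf.Variable([[2.0]])
b = tf.Variable([0.5])
x = tf.constant([[3.0]])
y_true = tf.constant([[5.0]])

with tf.GradientTape() as tape:
    # forward pass only: y_pred = x @ w + b
    y_pred = tf.matmul(x, w) + b
    loss = tf.reduce_mean(tf.square(y_pred - y_true))

# TensorFlow differentiates the recorded forward pass for us.
grad_w, grad_b = tape.gradient(loss, [w, b])

print("loss:", loss.numpy())
print("dloss/dw:", grad_w.numpy())
print("dloss/db:", grad_b.numpy())
```

Here the prediction is 6.5, so the loss is (6.5 - 5)^2 = 2.25, and the gradients are 2 * 1.5 * 3 = 9 for the kernel and 2 * 1.5 = 3 for the bias. `model.fit` relies on the same mechanism internally.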

import numpy as np

# define the dataset (one feature per sample, so xs has shape (6, 1))
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float).reshape(-1, 1)
ys = np.array([-3.0, -1.0, 1.0, 3.0, 5.0, 7.0], dtype=float)

# use the Sequential API to build a model with our custom layer
my_layer = SimpleDense(units=1)
model = tf.keras.Sequential([my_layer])

# configure and train the model
model.compile(optimizer='sgd', loss='mean_squared_error')
model.fit(xs, ys, epochs=500, verbose=0)

# perform inference
print(model.predict(np.array([[10.0]])))

# see the updated state of the variables
print(my_layer.variables)
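As a sanity check on the training result: the data above follow y = 2x - 1 exactly, so the trained kernel should approach 2, the bias should approach -1, and the prediction for 10.0 should be close to 19. The following NumPy sketch is independent of Keras and recovers that line by ordinary least squares. `np.polyfit` stands in for SGD training here and is not the method used in the post:

```python
import numpy as np

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)
ys = np.array([-3.0, -1.0, 1.0, 3.0, 5.0, 7.0], dtype=float)

# Fit a degree-1 polynomial; polyfit returns the coefficients highest degree first.
slope, intercept = np.polyfit(xs, ys, deg=1)

# Reference value to compare against model.predict for the input 10.0
reference_prediction = slope * 10.0 + intercept

print("slope:", slope)
print("intercept:", intercept)
print("reference prediction at x=10:", reference_prediction)
```

If the values printed by `my_layer.variables` are far from this slope and intercept, the layer's weights are probably not being updated during training.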