I. LeNet

- The pioneering convolutional neural network, and the template for later CNN architectures.

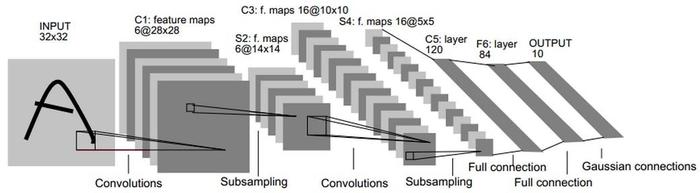

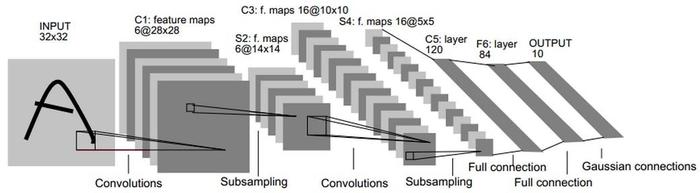

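- Network structure:

- A 7-layer network: two convolutional layers alternating with two pooling layers, followed by three fully connected layers.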

- PyTorch implementation

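```python
import torch
from torch import nn


class LeNet(nn.Module):
    """LeNet-style network. Assumes single-channel 32x32 inputs."""

    def __init__(self):
        super(LeNet, self).__init__()
        # Block 1: 1x32x32 -> conv 5x5 -> 6x28x28 -> pool -> 6x14x14
        self.layer1 = nn.Sequential(
            nn.Conv2d(1, 6, kernel_size=5),
            nn.MaxPool2d(2, 2)
        )
        # Block 2: 6x14x14 -> conv 5x5 -> 16x10x10 -> pool -> 16x5x5
        # (5x5 kernels without padding are what make 16*5*5 below correct;
        # kernel_size=3 with padding=1 would leave 16x8x8 and fail at layer3)
        self.layer2 = nn.Sequential(
            nn.Conv2d(6, 16, kernel_size=5),
            nn.MaxPool2d(2, 2)
        )
        # Classifier: three fully connected layers, 400 -> 120 -> 84 -> 10.
        # The original LeNet-5 also applies a nonlinearity after each layer;
        # this simplified version omits them.
        self.layer3 = nn.Sequential(
            nn.Linear(16 * 5 * 5, 120),
            nn.Linear(120, 84),
            nn.Linear(84, 10)
        )

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 16*5*5)
        x = self.layer3(x)
        return x


if __name__ == '__main__':
    LeNet_model = LeNet()
    # Sanity check with a dummy batch of one 32x32 grayscale image
    print(LeNet_model(torch.randn(1, 1, 32, 32)).shape)  # torch.Size([1, 10])
```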
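
The `16*5*5` input size of the first fully connected layer can be checked by hand. The sketch below assumes the classic LeNet-5 configuration (32×32 input, 5×5 convolutions without padding, 2×2 pooling with stride 2); the helper names `conv_out` and `lenet_trace` are made up for this illustration and are not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet_trace(size=32):
    """Side length of the feature map after each conv/pool stage."""
    sizes = [size]
    # (kernel, stride) for conv1, pool1, conv2, pool2 (assumed LeNet-5 values)
    for kernel, stride in [(5, 1), (2, 2), (5, 1), (2, 2)]:
        size = conv_out(size, kernel, stride)
        sizes.append(size)
    return sizes


trace = lenet_trace(32)
flat_features = 16 * trace[-1] ** 2  # 16 channels leave the second conv layer
print(trace)          # [32, 28, 14, 10, 5]
print(flat_features)  # 400
```

With 3×3 kernels and `padding=1` the convolutions would preserve the spatial size, so a 32×32 input would end at 8×8 rather than 5×5 and the first `nn.Linear` would no longer match.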

II. AlexNet

- Compared with LeNet, AlexNet adopts the ReLU activation function and adds Dropout to the fully connected layers to reduce overfitting.

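- Network structure: five convolutional layers (max pooling follows the first, second, and fifth), then three fully connected layers.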

- Code implementation

```python
from torch import nn


class AlexNet(nn.Module):
    """AlexNet-style network. Assumes 3x224x224 inputs."""

    def __init__(self, num_classes):
        super(AlexNet, self).__init__()
        # Feature extractor: five conv layers, each followed by ReLU,
        # with max pooling after the 1st, 2nd, and 5th
        self.features = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=11, stride=4, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(384, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2)
        )
        # Classifier: Dropout before each of the first two linear layers
        self.classifier = nn.Sequential(
            nn.Dropout(),
            nn.Linear(256 * 6 * 6, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Linear(4096, num_classes)
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), -1)  # flatten to (batch, 256*6*6)
        x = self.classifier(x)
        return x


if __name__ == '__main__':
    # num_classes is a required argument; 1000 is used here only as an example
    AlexNet_module = AlexNet(num_classes=1000)
```
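
To see where the `256*6*6` in the classifier comes from, the spatial size can be traced through `features` layer by layer. This is a rough sketch that assumes a 224×224 input; `out_size`, `ALEXNET_FEATURES`, and `alexnet_trace` are hypothetical names introduced only for this example, with the kernel, stride, and padding values copied from the code above.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output side length of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for every conv/pool layer in `features`
ALEXNET_FEATURES = [
    ("conv1", 11, 4, 2),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]


def alexnet_trace(size=224):
    """Map each layer name to the side length of its output."""
    trace = {}
    for name, kernel, stride, padding in ALEXNET_FEATURES:
        size = out_size(size, kernel, stride, padding)
        trace[name] = size
    return trace


trace = alexnet_trace(224)
flat_features = 256 * trace["pool3"] ** 2  # 256 channels leave conv5
print(trace["conv1"], trace["pool1"], trace["pool2"], trace["pool3"])  # 55 27 13 6
print(flat_features)  # 9216
```

A different input resolution changes the final side length, so the first `nn.Linear` would have to be resized accordingly.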

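
The Dropout used in the classifier can be illustrated without PyTorch. The following is a minimal pure-Python sketch of "inverted" dropout, the scheme `nn.Dropout` follows: during training each value is zeroed with probability `p` and the survivors are scaled by `1 / (1 - p)`, while at evaluation time the input passes through unchanged. The function `dropout` and its parameters are illustrative only, not the library's implementation.

```python
import random


def dropout(values, p=0.5, training=True, rng=random):
    """Inverted dropout over a flat list of floats (illustrative sketch)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(values)  # evaluation mode: identity
    scale = 1.0 / (1.0 - p)  # keeps the expected activation unchanged
    return [v * scale if rng.random() >= p else 0.0 for v in values]


activations = [1.0] * 8
print(dropout(activations, p=0.5, rng=random.Random(0)))  # mix of 0.0 and 2.0
print(dropout(activations, training=False))               # unchanged
```

Because a random subset of units is silenced on every training step, the network cannot rely on any single unit, which is the intuition behind the reduced overfitting.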