????????resnet在深度学习领域的重要性不言而喻,自从15年resnet提出后,被各种深度学习模型大量引用。得益于其残差结构的设计,使得深度学习模型可以训练更深层的网络。常见的resnet有resnet18、resnet34、resnet50、resnet101、resnet152几种结构,resnet残差网络由一个卷积块和四个残差块组成,每个残差块包含多个残差结构。从残差结构上看,resnet18和resnet34结构近似,使用相同的残差结构,仅在残差结构数量上有区别,都是使用两个卷积层加一个残差连接层作为残差结构。

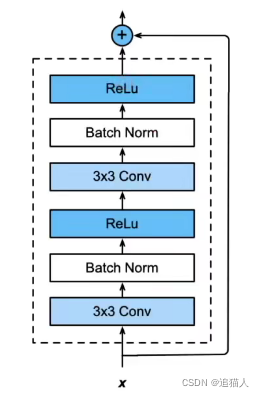

resnet18与resnet34的残差结构:

? ? ? ? 输入特征图x经过两个卷积核大小为3*3的卷积层,使用padding为1的填充保持特征图大小不变,每个卷积层后接BN层和Relu层,完成两次卷积核将结果与输入特征图x直接相加。resnet50到resnet152使用另一种残差结构,这种残差结构使用两个1*1的卷积核加一个3*3的卷积核。

| resnet18 | resnet34 | resnet50 | resnet101 | resnet152 | |

| block1 | 1*conv | 1*conv | 1*conv | 1*conv | 1*conv |

| block2 | 2*res(2*conv) | 3*res(2*conv) | 3*res(3*conv) | 3*res(3*conv) | 3*res(3*conv) |

| block3 | 2*residual(2*conv) | 4*res(2*conv) | 4*res(3*conv) | 4*res(3*conv) | 8*res(3*conv) |

| block4 | 2*residual(2*conv) | 6*res(2*conv) | 6*res(3*conv) | 23*res(3*conv) | 36*res(3*conv) |

| block5 | 2*residual(2*conv) | 3*res(2*conv) | 3*res(3*conv) | 3*res(3*conv) | 3*res(3*conv) |

| FN | linear | linear | linear | linear | linear |

resnet18的模型实现:

import torch.nn as nn

from torch.nn import functional as F

# 残差单元

class Residual(nn.Module):

def __init__(self, input_channel, out_channel, use_conv1x1=False, strides=1):

super().__init__()

self.conv1 = nn.Conv2d(input_channel, out_channel, kernel_size=3, stride=strides, padding=1)

self.conv2 = nn.Conv2d(out_channel, out_channel, kernel_size=3, stride=1, padding=1)

if use_conv1x1:

self.conv3 = nn.Conv2d(input_channel, out_channel, kernel_size=1, stride=strides)

else:

self.conv3 = None

self.bn1 = nn.BatchNorm2d(out_channel)

self.bn2 = nn.BatchNorm2d(out_channel)

self.relu = nn.ReLU(inplace=True)

def forward(self, X):

Y = self.relu(self.bn1(self.conv1(X)))

Y = self.bn2(self.conv2(Y))

if self.conv3:

X = self.conv3(X)

Y += X

return F.relu(Y)

# 多个残差单元组成的残差块

def res_block(input_channel, out_channel, num_residuals, first_block=False):

blk = []

for i in range(num_residuals):

if i == 0 and not first_block:

blk.append(Residual(input_channel, out_channel, use_conv1x1=True, strides=2))

else:

blk.append(Residual(out_channel, out_channel))

return blk

def resnet18(num_channel, classes):

block_1 = nn.Sequential(

nn.Conv2d(num_channel, 64, kernel_size=7, stride=2, padding=3),

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

)

block_2 = nn.Sequential(*res_block(64, 64, 2, first_block=True))

block_3 = nn.Sequential(*res_block(64, 128, 2))

block_4 = nn.Sequential(*res_block(128, 256, 2))

block_5 = nn.Sequential(*res_block(256, 512, 2))

model = nn.Sequential(block_1, block_2, block_3, block_4, block_5,

nn.AdaptiveAvgPool2d((1, 1)),

nn.Flatten(),

nn.Linear(512, classes)

)

return model

?resnet34的实现:

def resnet34(classes):

b1 = nn.Sequential(

nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3), # [3,224,224]-->[1,112,112]

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1) # [1,110,110]-->[1,56,56]

)

b2 = nn.Sequential(*res_block(64, 64, 3, first_block=True))

b3 = nn.Sequential(*res_block(64, 128, 4))

b4 = nn.Sequential(*res_block(128, 256, 6))

b5 = nn.Sequential(*res_block(256, 512, 3))

model = nn.Sequential(b1, b2, b3, b4, b5,

nn.AdaptiveAvgPool2d((1, 1)),

nn.Flatten(),

nn.Linear(512, classes)

)

return model???????其中残差连接1*1的卷积核,在每个残差块第一个残差结构中对输入特征图使用用于改变输入特征图通道数量,对除第一个残差块之外的其他残差块输入特征图进行特征缩放。当输入图像为[3,224,224],经过第一个卷积层,特征图变为[64,56,56]。由于之前的卷积层和池化层已经进行了两次特征缩放,在设计的时候,在第一个残差块没有对特征图做缩放,也没有改变通道数。之后的每个残差块,都会对特征图进行大小减半,通道加倍,因此在后面三个残差块中,第一个残差单元需要对输入通道进行1*1卷积核加步长为2的操作,以改变特征图大小和通道数,同时对其中第一个卷积层使用步长为2,以保证最后相加时特征图大小和通道数一致。

resnet50模型实现:

import torch

import torch.nn as nn

from torch.nn import functional as F

# 残差单元

class Residual(nn.Module):

def __init__(self, input_channel, out_channel, use_conv1x1=False, strides=1):

super().__init__()

self.conv1 = nn.Conv2d(input_channel, int(out_channel / 4), kernel_size=1, stride=strides)

self.conv2 = nn.Conv2d(int(out_channel / 4), int(out_channel / 4), kernel_size=3, stride=1, padding=1)

self.conv3 = nn.Conv2d(int(out_channel / 4), out_channel, kernel_size=1)

if use_conv1x1:

self.conv4 = nn.Conv2d(input_channel, out_channel, kernel_size=1, stride=strides)

else:

self.conv4 = None

self.bn1 = nn.BatchNorm2d(int(out_channel / 4))

self.bn2 = nn.BatchNorm2d(out_channel)

self.relu = nn.ReLU(inplace=True)

def forward(self, X):

Y = self.relu(self.bn1(self.conv1(X)))

Y = self.relu(self.bn1(self.conv2(Y)))

Y = self.bn2(self.conv3(Y))

if self.conv4:

X = self.conv4(X)

Y += X

return F.relu(Y)

# 多个残差单元组成的残差块

def res_block(input_channel, out_channel, num_residuals, first_block=False):

blk = []

for i in range(num_residuals):

if i == 0 and not first_block:

blk.append(Residual(input_channel, out_channel, use_conv1x1=True, strides=2))

input_channel = out_channel

if i == 0 and first_block:

blk.append(Residual(input_channel, out_channel, use_conv1x1=True, strides=1))

input_channel = out_channel

else:

blk.append(Residual(input_channel, out_channel))

return blk

def resnet50(num_channel, classes):

b1 = nn.Sequential(

nn.Conv2d(num_channel, 64, kernel_size=7, stride=2, padding=3),

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

)

b2 = nn.Sequential(*res_block(64, 256, 3, first_block=True))

b3 = nn.Sequential(*res_block(256, 512, 4))

b4 = nn.Sequential(*res_block(512, 1024, 6))

b5 = nn.Sequential(*res_block(1024, 2048, 3))

model = nn.Sequential(b1, b2, b3, b4, b5,

nn.AdaptiveAvgPool2d((1, 1)),

nn.Flatten(),

nn.Linear(2048, classes)

)

return model

net = resnet50(num_channel=3, classes=2)

x = torch.rand(size=(1, 3, 224, 224), dtype=torch.float32)

for layer in net:

print(layer)

x = layer(x)

print(x.shape)

?resnet101实现:

def resnet101(num_channel, classes):

b1 = nn.Sequential(

nn.Conv2d(num_channel, 64, kernel_size=7, stride=2, padding=3), # [3,224,224]-->[64,112,112]

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1) # [1,110,110]-->[1,56,56]

)

b2 = nn.Sequential(*res_block(64, 256, 3, first_block=True))

b3 = nn.Sequential(*res_block(256, 512, 4))

b4 = nn.Sequential(*res_block(512, 1024, 23))

b5 = nn.Sequential(*res_block(1024, 2048, 3))

model = nn.Sequential(b1, b2, b3, b4, b5,

nn.AdaptiveAvgPool2d((1, 1)),

nn.Flatten(),

nn.Linear(2048, classes)

)

return modelresnet152实现:

def resnet152(num_channel, classes):

b1 = nn.Sequential(

nn.Conv2d(num_channel, 64, kernel_size=7, stride=2, padding=3), # [3,224,224]-->[64,112,112]

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2, padding=1) # [64,112,112]-->[64,56,56]

)

b2 = nn.Sequential(*res_block(64, 256, 3, first_block=True))

b3 = nn.Sequential(*res_block(256, 512, 8))

b4 = nn.Sequential(*res_block(512, 1024, 36))

b5 = nn.Sequential(*res_block(1024, 2048, 3))

model = nn.Sequential(b1, b2, b3, b4, b5,

nn.AdaptiveAvgPool2d((1, 1)),

nn.Flatten(),

nn.Linear(2048, classes)

)

return model? ? ? resnet50之后的网络出了采用3个卷结合,在残差结构内部也有一些区别,在残差结构内第一个卷积层和第二个卷积层输出通道为残差块输出通道的四分之一,并且第一个残差块需要对特征图通道进行变换,那么在代码实现上需要对第一个残差块的第一个残差结构使用1*1卷积核进行输入特征图的通道变换。