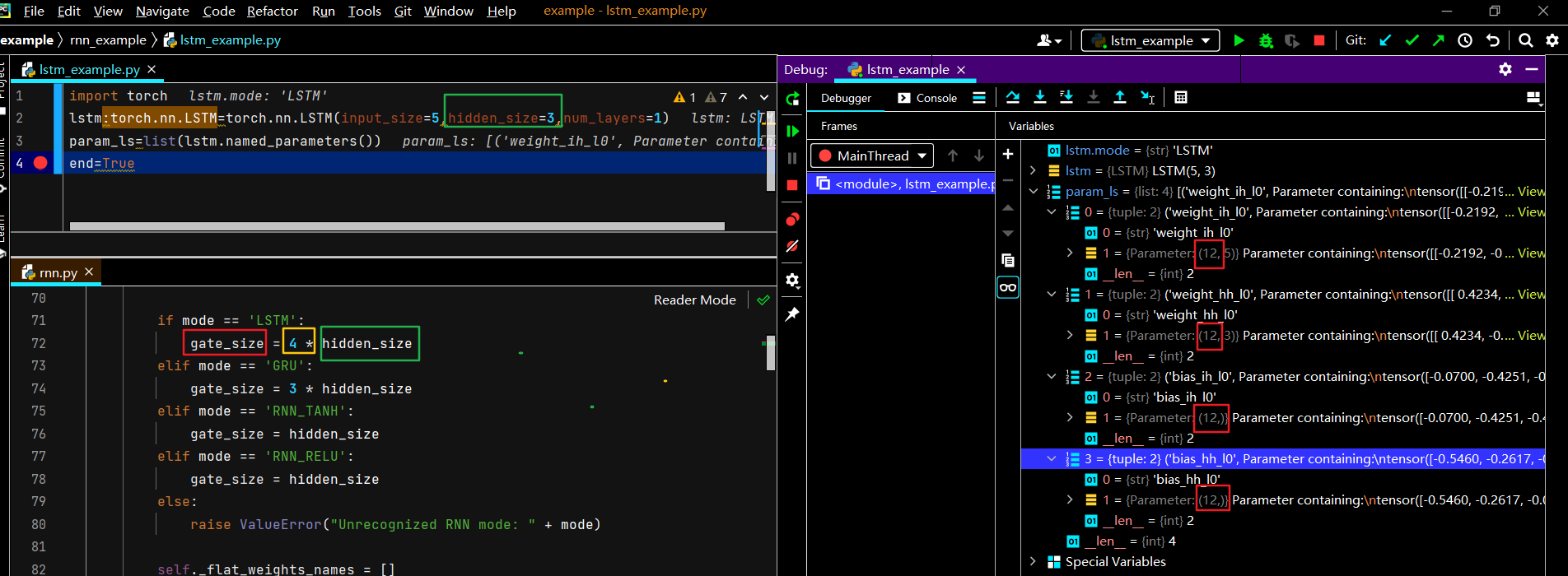

RNN

LSTM

import torch

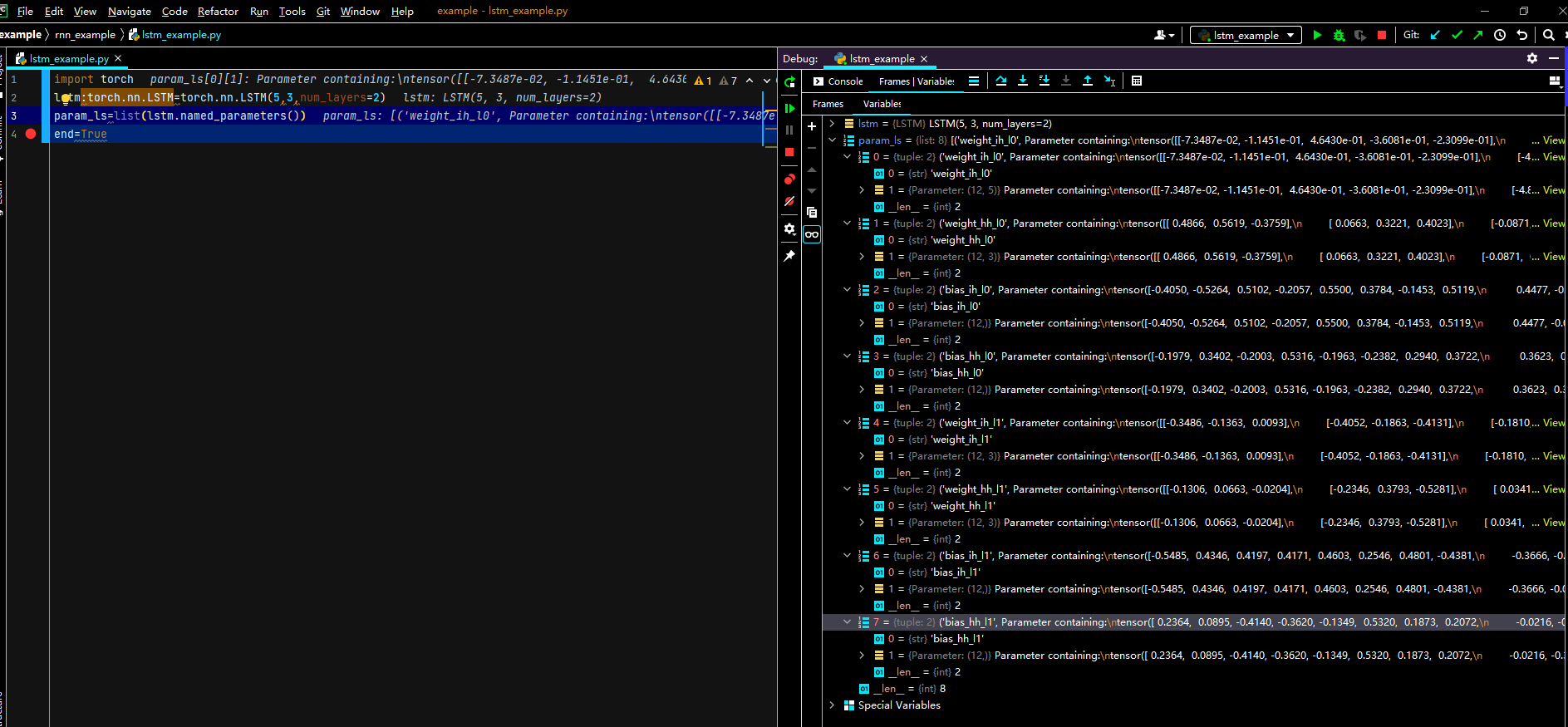

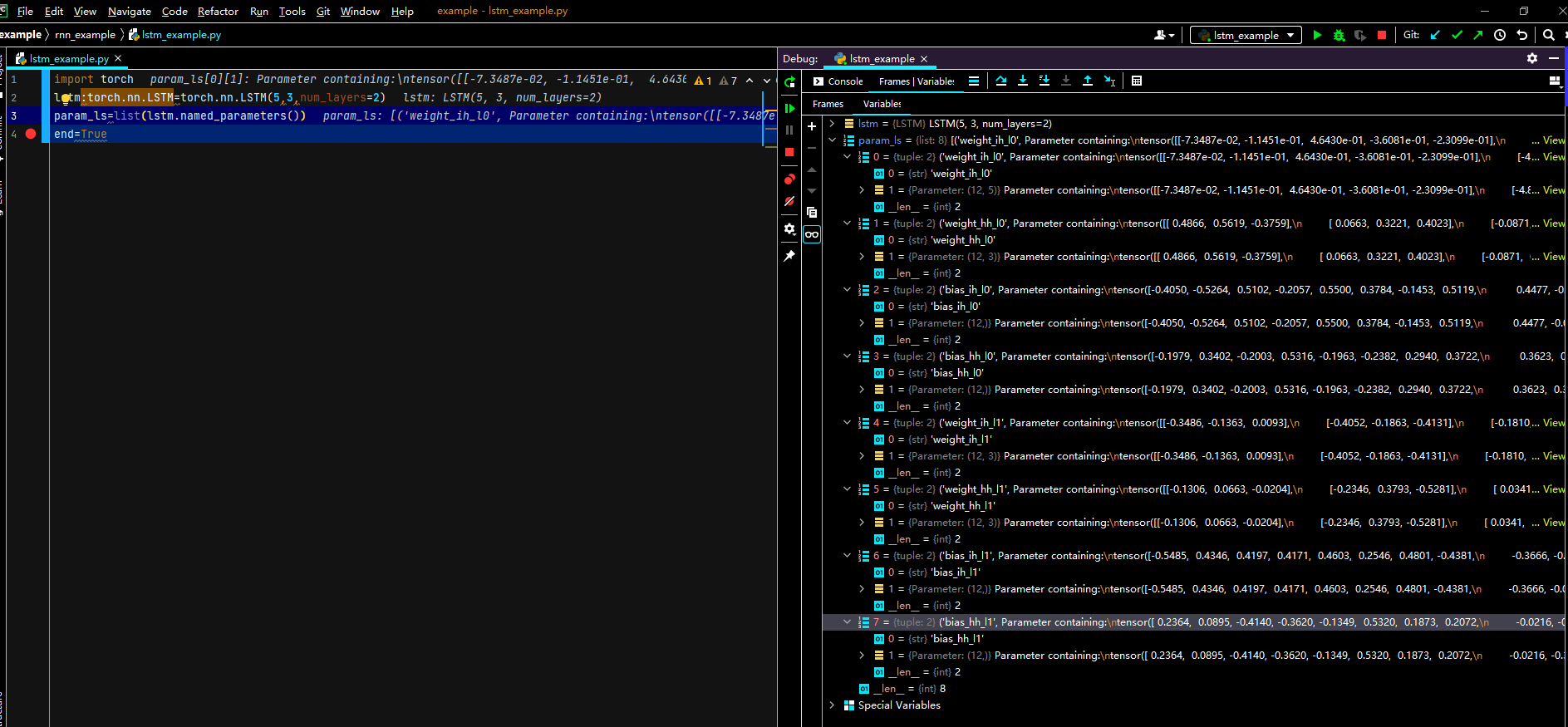

lstm: torch.nn.LSTM = torch.nn.LSTM(input_size=5, hidden_size=3, num_layers=2)

param_ls = list(lstm.named_parameters())

end=True
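To make the contents of `param_ls` easier to inspect, the following minimal sketch prints each parameter name with its shape for the same two-layer LSTM. The dictionary name `param_shapes` is introduced here only for illustration.

```python
import torch

# Same configuration as above: input_size=5, hidden_size=3, two stacked layers.
lstm = torch.nn.LSTM(5, 3, num_layers=2)

# Map each parameter name to its shape (param_shapes is an illustrative name).
param_shapes = {name: tuple(p.shape) for name, p in lstm.named_parameters()}

for name, shape in param_shapes.items():
    print(f"{name}: {shape}")
```

The first dimension of every weight and bias is `4 * hidden_size = 12`, because PyTorch stacks the four gate matrices (input, forget, cell, output) into one tensor. `weight_ih_l0` has shape `(12, 5)` since layer 0 reads the 5-dimensional input, while `weight_ih_l1` has shape `(12, 3)` since layer 1 reads the 3-dimensional hidden output of layer 0.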

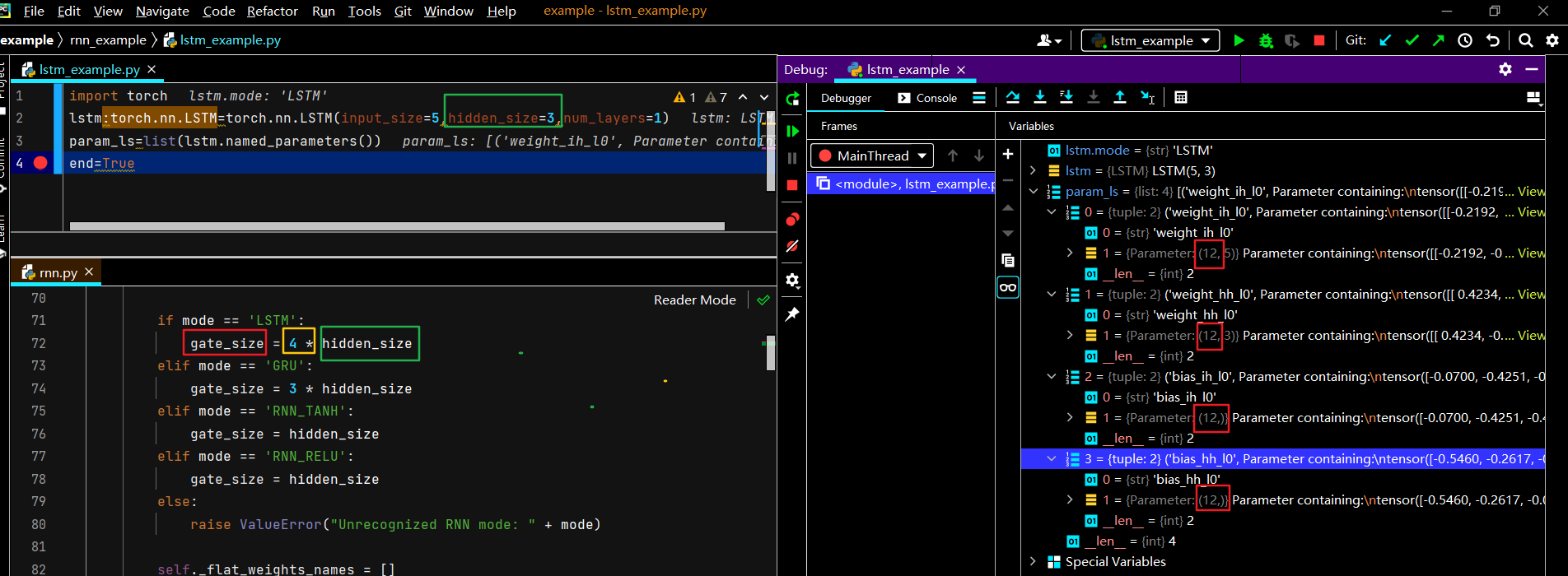

import torch

lstm:torch.nn.LSTM=torch.nn.LSTM(input_size=5,hidden_size=3,num_layers=1)

param_ls=list(lstm.named_parameters())

end=True
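As a quick check on the single-layer case, this sketch counts the trainable parameters and runs one forward pass. The sequence length of 7 and batch size of 2 are arbitrary values chosen for illustration and are not taken from the notes.

```python
import torch

lstm = torch.nn.LSTM(input_size=5, hidden_size=3, num_layers=1)

# Parameter count: 4*3*5 (weight_ih) + 4*3*3 (weight_hh) + 4*3 (bias_ih) + 4*3 (bias_hh)
param_cnt = sum(p.numel() for p in lstm.parameters())

# Illustrative input; batch_first defaults to False, so the layout is
# (seq_len, batch, input_size).
seq_len, batch_size = 7, 2
x = torch.randn(seq_len, batch_size, 5)

# When (h0, c0) is omitted, both default to zeros.
out, (h_n, c_n) = lstm(x)

print(param_cnt)                          # 120
print(out.shape, h_n.shape, c_n.shape)    # (7, 2, 3), (1, 2, 3), (1, 2, 3)

# For a single-layer, unidirectional LSTM the last time step of `out`
# is the final hidden state.
print(torch.allclose(out[-1], h_n[0]))    # True
```

`out` holds the hidden state at every time step, whereas `h_n` and `c_n` hold only the final hidden and cell states, one slice per layer.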

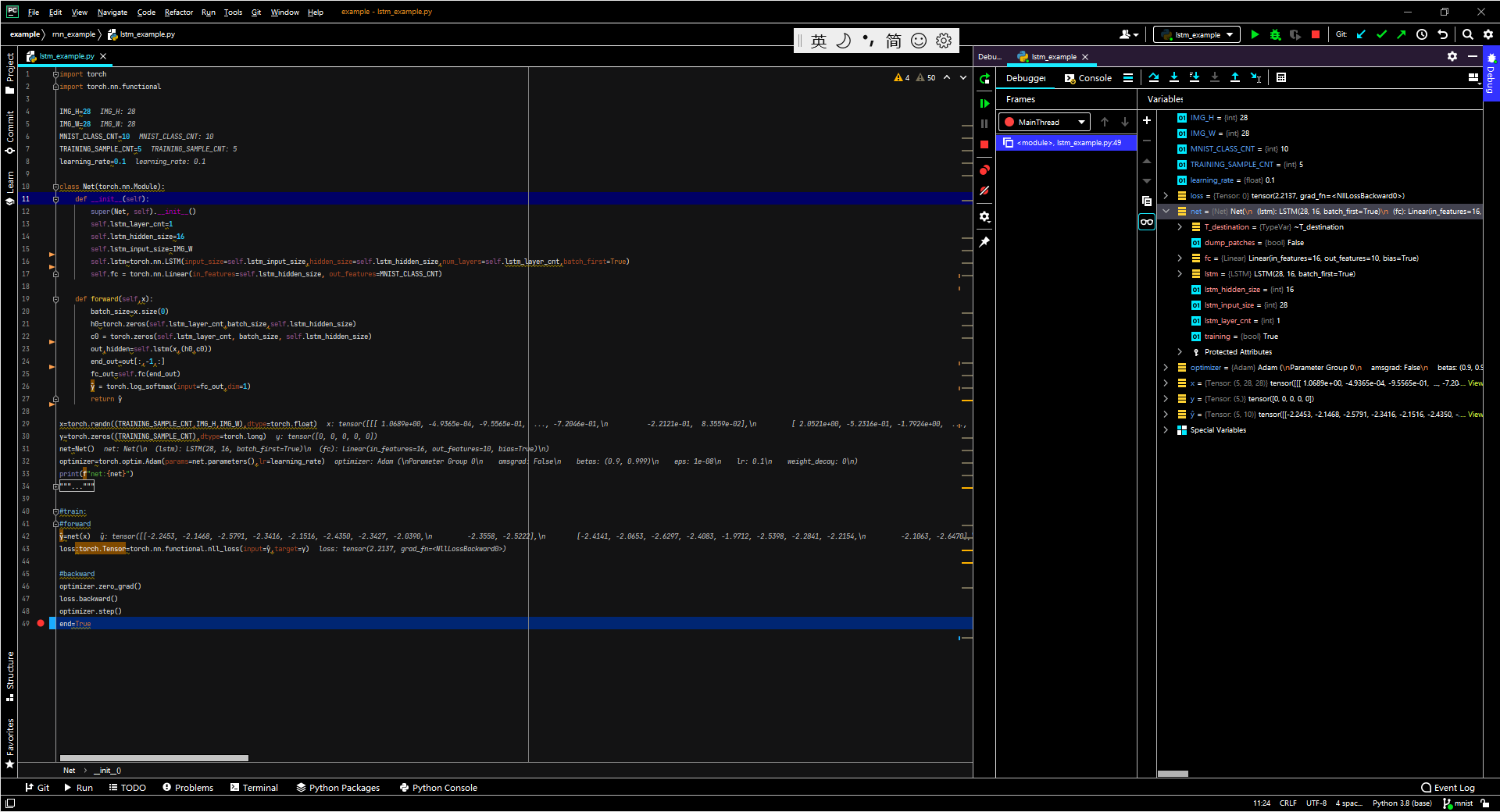

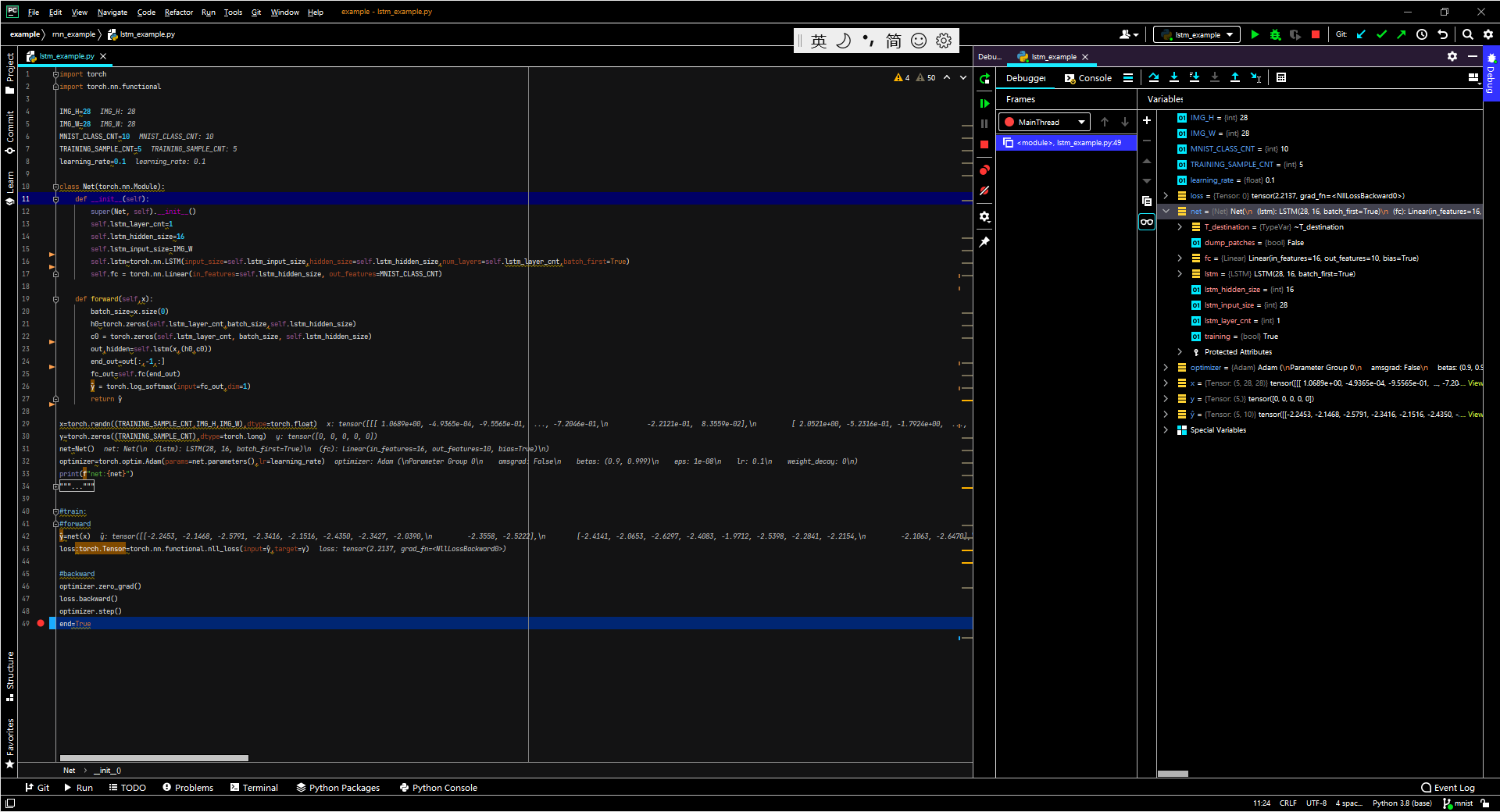

MNIST LSTM

import torch

import torch.nn.functional

IMG_H=28

IMG_W=28

MNIST_CLASS_CNT=10

TRAINING_SAMPLE_CNT=5

learning_rate=0.1

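class Net(torch.nn.Module):

    def __init__(self):

        super(Net, self).__init__()

        self.lstm_layer_cnt=1

        self.lstm_hidden_size=16

        # Each image row (IMG_W pixels) is one time step; the IMG_H rows form the sequence.

        self.lstm_input_size=IMG_W

        self.lstm=torch.nn.LSTM(input_size=self.lstm_input_size,hidden_size=self.lstm_hidden_size,num_layers=self.lstm_layer_cnt,batch_first=True)

        self.fc = torch.nn.Linear(in_features=self.lstm_hidden_size, out_features=MNIST_CLASS_CNT)

    def forward(self,x):

        # x: (batch, IMG_H, IMG_W) because batch_first=True

        batch_size=x.size(0)

        # Zero initial hidden and cell states, created on the same device and dtype as x.

        h0=torch.zeros(self.lstm_layer_cnt,batch_size,self.lstm_hidden_size,device=x.device,dtype=x.dtype)

        c0 = torch.zeros(self.lstm_layer_cnt, batch_size, self.lstm_hidden_size,device=x.device,dtype=x.dtype)

        out,hidden=self.lstm(x,(h0,c0))

        # Keep only the output of the last time step: (batch, hidden_size)

        end_out=out[:,-1,:]

        fc_out=self.fc(end_out)

        # Log-probabilities over the classes, as expected by nll_loss.

        y_hat = torch.log_softmax(input=fc_out,dim=1)

        return y_hat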

x=torch.randn((TRAINING_SAMPLE_CNT,IMG_H,IMG_W),dtype=torch.float)

y=torch.zeros((TRAINING_SAMPLE_CNT),dtype=torch.long)

net=Net()

optimizer=torch.optim.Adam(params=net.parameters(),lr=learning_rate)

print(f"net:{net}")

"""

Net(

(lstm): LSTM(28, 16, batch_first=True)

(fc): Linear(in_features=16, out_features=10, bias=True)

)"""

y_hat=net(x)

loss:torch.Tensor=torch.nn.functional.nll_loss(input=y_hat,target=y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

end=True
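The script above performs a single optimization step. The sketch below repeats that step in a loop and then reads off predictions with `argmax`. It is a self-contained illustration, not the notes' own code: `TinyNet` is a compact stand-in for `Net`, and the fixed seed, the 20 iterations, and the smaller learning rate of 0.01 are assumptions made here.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # assumption: fixed seed only to make the sketch repeatable


class TinyNet(torch.nn.Module):
    """Compact stand-in for Net: an LSTM over image rows, then a linear classifier."""

    def __init__(self, input_size=28, hidden_size=16, class_cnt=10):
        super().__init__()
        self.lstm = torch.nn.LSTM(input_size, hidden_size, batch_first=True)
        self.fc = torch.nn.Linear(hidden_size, class_cnt)

    def forward(self, x):
        out, _ = self.lstm(x)  # initial states default to zeros
        return torch.log_softmax(self.fc(out[:, -1, :]), dim=1)


# Random stand-in data with the same shapes as above: 5 "images" of 28x28, all labelled 0.
x = torch.randn(5, 28, 28)
y = torch.zeros(5, dtype=torch.long)

net = TinyNet()
optimizer = torch.optim.Adam(net.parameters(), lr=0.01)  # assumption: lr chosen for this sketch

losses = []
for step in range(20):
    y_hat = net(x)                # log-probabilities, shape (5, 10)
    loss = F.nll_loss(y_hat, y)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

# Predicted class = index of the largest log-probability.
with torch.no_grad():
    pred = net(x).argmax(dim=1)

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
print(pred)
```

With real MNIST data the random tensors would be replaced by batches from a data loader; the loop body itself would stay the same.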

GRU

Attention
