LightGBM

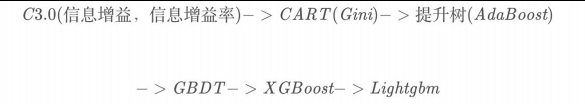

Evolution of LightGBM

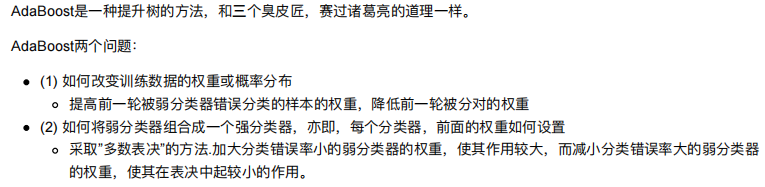

The AdaBoost algorithm

The GBDT algorithm and its pros and cons

Getting started with LightGBM

LightGBM is a new gradient boosting framework that Microsoft open-sourced on GitHub in January 2017.

Introduction link

About the HIGGS dataset: this is a classification problem that distinguishes signal processes which produce Higgs bosons from processes which do not.

Dataset link

How LightGBM works

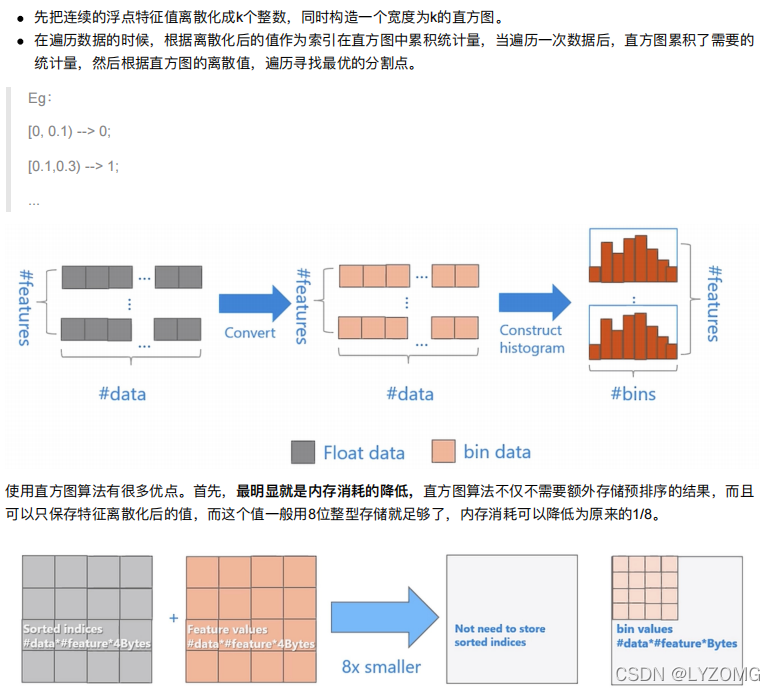

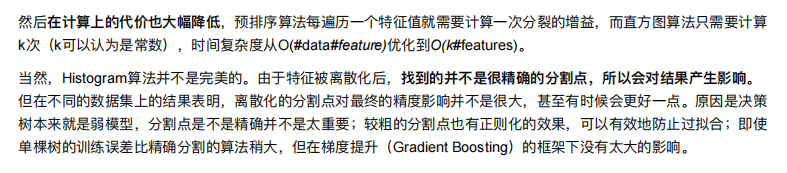

Histogram-based decision tree algorithm

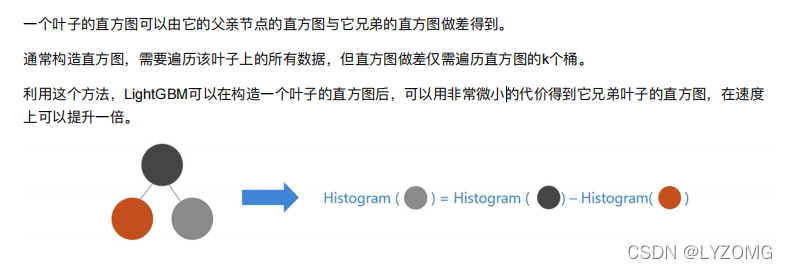
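The histogram idea can be sketched in a few lines of NumPy. This is a simplified illustration, not LightGBM's implementation: the quantile-based binning, the function names `build_histogram` and `best_bin_split`, and the regularization term `lam` (playing the role of `lambda_l2`) are assumptions made for the example. Each sample is mapped to a bin, gradients and hessians are summed per bin, and split candidates are then scanned over `num_bins - 1` bin boundaries instead of every distinct feature value.

```python
import numpy as np

def build_histogram(x, grad, hess, num_bins):
    """Bucket x into num_bins bins and sum gradients/hessians per bin."""
    # Interior bin edges at equal-frequency quantiles (a simplification of LightGBM's binning)
    edges = np.quantile(x, np.linspace(0, 1, num_bins + 1)[1:-1])
    bins = np.searchsorted(edges, x, side="right")  # bin index in [0, num_bins)
    g_hist = np.bincount(bins, weights=grad, minlength=num_bins)
    h_hist = np.bincount(bins, weights=hess, minlength=num_bins)
    return bins, g_hist, h_hist

def best_bin_split(g_hist, h_hist, lam=1.0):
    """Scan the num_bins - 1 bin boundaries; the left child gets bins 0..k."""
    G, H = g_hist.sum(), h_hist.sum()
    gl, hl = np.cumsum(g_hist)[:-1], np.cumsum(h_hist)[:-1]
    gr, hr = G - gl, H - hl
    # Second-order split gain (constant factors and min_gain_to_split omitted)
    gain = gl**2 / (hl + lam) + gr**2 / (hr + lam) - G**2 / (H + lam)
    k = int(np.argmax(gain))
    return k, float(gain[k])

# Toy data: squared loss with all predictions at 0, so grad = -y and hess = 1
x = np.linspace(0, 1, 1000)
y = (x > 0.5).astype(float)
bins, g_hist, h_hist = build_histogram(x, -y, np.ones_like(x), num_bins=16)
k, gain = best_bin_split(g_hist, h_hist)
print(k, gain)  # the left child gets bins 0..k
```

Only 15 boundaries are evaluated here instead of 999 candidate thresholds, which is where the speed and memory savings come from.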

Histogram subtraction speedup in LightGBM

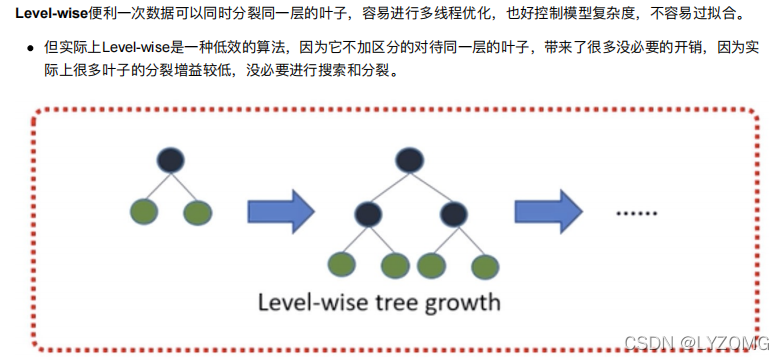

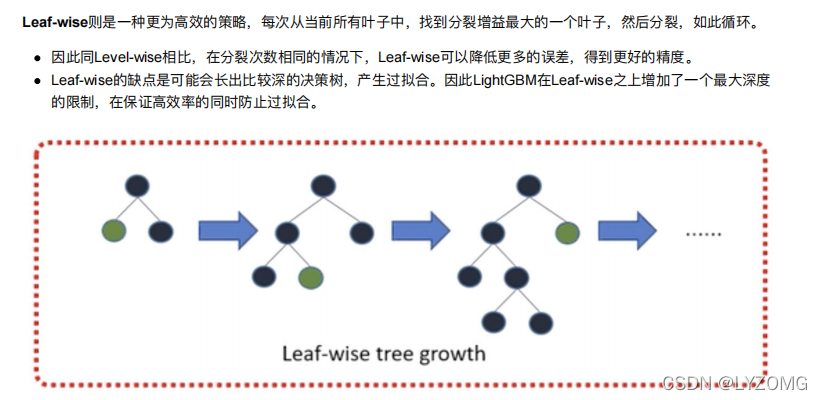
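Histogram subtraction rests on the fact that a parent's per-bin sums are exactly the sums of its two children. A minimal NumPy sketch under that assumption (the pre-binned data and the split are random and made up for illustration): build the histogram only for the smaller child and obtain the larger sibling as `parent - small`, which costs O(#bins) instead of another pass over the data.

```python
import numpy as np

rng = np.random.default_rng(42)
num_bins, n = 8, 500
bins = rng.integers(0, num_bins, size=n)   # feature values already mapped to bins
grad = rng.normal(size=n)                  # per-sample gradients
goes_left = rng.random(n) < 0.3            # a made-up split sending ~30% of rows left

def grad_histogram(mask):
    """Sum gradients per bin over the rows selected by mask (one pass over the data)."""
    return np.bincount(bins[mask], weights=grad[mask], minlength=num_bins)

parent = grad_histogram(np.ones(n, dtype=bool))  # already available from the previous level
small = grad_histogram(goes_left)                # build only the smaller child...
large = parent - small                           # ...and derive its sibling by subtraction
```

The same subtraction applies to the hessian histograms.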

Leaf-wise leaf growth strategy with a depth limit

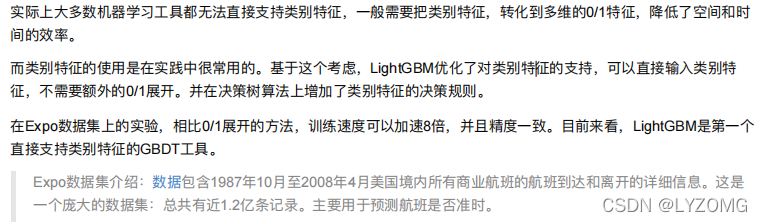
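To make the leaf-wise idea concrete, here is a toy regression-tree grower on a single feature. It is a sketch, not LightGBM's code: `best_split`, `grow_leaf_wise`, and the squared-error gain are assumptions for illustration, with `num_leaves` and `max_depth` mirroring the roles of LightGBM's parameters of the same names. A max-heap holds every splittable leaf keyed by its best gain; each step splits whichever leaf has the largest gain until `num_leaves` is reached, and leaves already at `max_depth` are frozen, which is how the depth limit curbs overly deep trees. A level-wise grower would instead split every leaf at the current depth.

```python
import heapq
import numpy as np

def best_split(x, y, min_gain=1e-12):
    """Best threshold on one feature by reduction in squared error; (gain, None) if none."""
    order = np.argsort(x)
    xs, ys = x[order], y[order]
    n, csum = len(ys), np.cumsum(ys)
    total = csum[-1]
    best_gain, best_thr = min_gain, None
    for i in range(1, n):
        if xs[i] == xs[i - 1]:
            continue  # no threshold separates equal values
        sl, sr = csum[i - 1], total - csum[i - 1]
        gain = sl**2 / i + sr**2 / (n - i) - total**2 / n
        if gain > best_gain:
            best_gain, best_thr = gain, (xs[i - 1] + xs[i]) / 2
    return best_gain, best_thr

def grow_leaf_wise(x, y, num_leaves, max_depth):
    """Return the final leaves as (depth, row indices) pairs."""
    frozen, heap, tie = [], [], 0

    def add_leaf(idx, depth):
        nonlocal tie
        gain, thr = best_split(x[idx], y[idx]) if len(idx) > 1 else (0.0, None)
        if thr is None or depth >= max_depth:
            frozen.append((depth, idx))  # cannot or may not be split further
        else:
            # Max-heap via -gain; the tie counter keeps arrays out of tuple comparisons
            heapq.heappush(heap, (-gain, tie, depth, idx, thr))
            tie += 1

    add_leaf(np.arange(len(x)), 0)
    # Each split replaces one leaf with two, so the leaf count grows by exactly one
    while heap and len(frozen) + len(heap) < num_leaves:
        _, _, depth, idx, thr = heapq.heappop(heap)  # leaf with the largest gain
        add_leaf(idx[x[idx] <= thr], depth + 1)
        add_leaf(idx[x[idx] > thr], depth + 1)
    return frozen + [(d, idx) for _, _, d, idx, _ in heap]

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, 200)
y = np.where(x < 3, 0.0, 1.0) + np.where(x > 8, 2.0, 0.0)
leaves = grow_leaf_wise(x, y, num_leaves=3, max_depth=2)
print([(d, len(idx)) for d, idx in leaves])
```

With `max_depth=1` the same call can never produce more than two leaves, whatever `num_leaves` is.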

Native support for categorical features

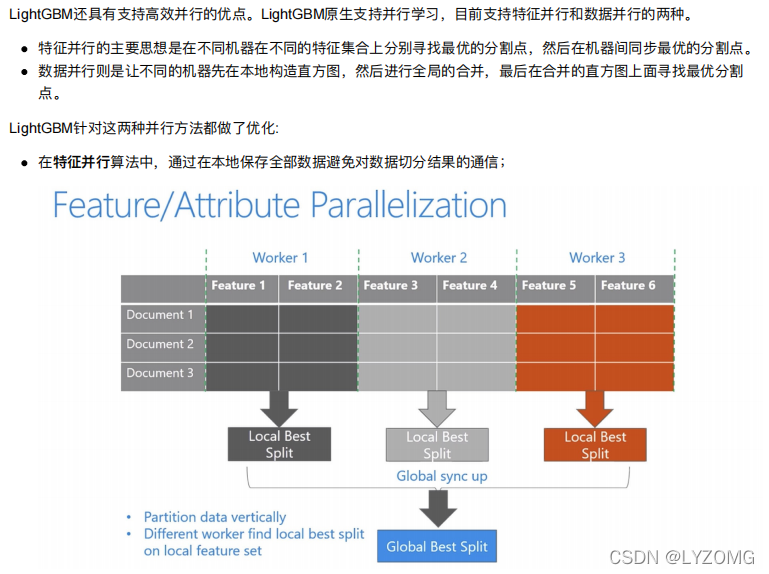

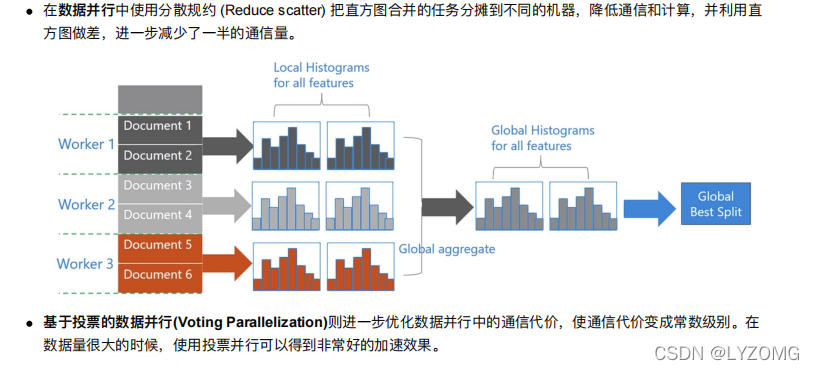

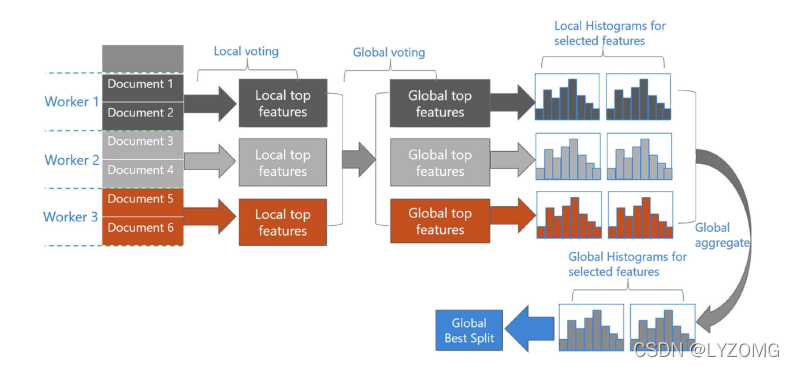
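For a categorical feature, LightGBM's documentation describes sorting the categories by their accumulated `sum_gradient / sum_hessian` and then searching for the best split on that sorted order, which yields a many-vs-many partition without one-hot encoding. The sketch below follows that description in simplified form. `best_categorical_split` is a hypothetical name, and the real implementation adds regularization and other constraints (for example, it falls back to one-vs-rest splits when a feature has few categories, controlled by `max_cat_to_onehot`).

```python
import numpy as np

def best_categorical_split(codes, grad, hess):
    """Return (gain, categories sent left) for a many-vs-many split on one feature."""
    cats = np.unique(codes)
    g = np.array([grad[codes == c].sum() for c in cats])  # per-category gradient sums
    h = np.array([hess[codes == c].sum() for c in cats])  # per-category hessian sums
    order = np.argsort(g / h, kind="stable")              # sort categories by G/H
    G, H = g.sum(), h.sum()
    best_gain, best_left = -np.inf, None
    gl = hl = 0.0
    for k in range(len(order) - 1):  # keep at least one category on each side
        gl += g[order[k]]
        hl += h[order[k]]
        gain = gl**2 / hl + (G - gl)**2 / (H - hl) - G**2 / H
        if gain > best_gain:
            best_gain, best_left = gain, cats[order[: k + 1]]
    return best_gain, best_left

# Made-up data: categories 0 and 2 have negative gradients, 1 and 3 positive
codes = np.array([0, 0, 1, 1, 2, 2, 3, 3])
grad = np.array([-1.0, -1.0, 1.0, 1.0, -1.0, -1.0, 1.0, 1.0])
hess = np.ones(8)
gain, left = best_categorical_split(codes, grad, hess)
print(gain, left)  # 8.0 [0 2]: categories 0 and 2 go left together
```

On this toy data the best one-vs-rest split only reaches a gain of 8/3, because no single category can separate the two gradient groups.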

Native support for efficient parallel training
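One way to see why histograms make parallel training cheap: in data-parallel mode each worker holds a subset of rows and builds histograms locally, and the global histogram is just their element-wise sum, so only O(#bins) numbers per feature need to be communicated rather than the rows themselves. The sketch simulates four workers in one process and is illustrative only. LightGBM's docs describe using Reduce-Scatter so that different workers merge histograms for different features, and the parallel mode is chosen with the `tree_learner` parameter (`"feature"`, `"data"` or `"voting"`).

```python
import numpy as np

def local_histogram(bins, grad, num_bins):
    """Per-bin gradient sums for the rows one worker holds."""
    return np.bincount(bins, weights=grad, minlength=num_bins)

rng = np.random.default_rng(7)
n, num_bins, num_workers = 1000, 16, 4
bins = rng.integers(0, num_bins, size=n)  # one pre-binned feature
grad = rng.normal(size=n)

# Each simulated worker only sees its own shard of rows
shards = np.array_split(np.arange(n), num_workers)
local = [local_histogram(bins[s], grad[s], num_bins) for s in shards]

# The "all-reduce" step: summing local histograms gives the global histogram
merged = np.sum(local, axis=0)
```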

LightGBM API

Installation

pip3 install lightgbm

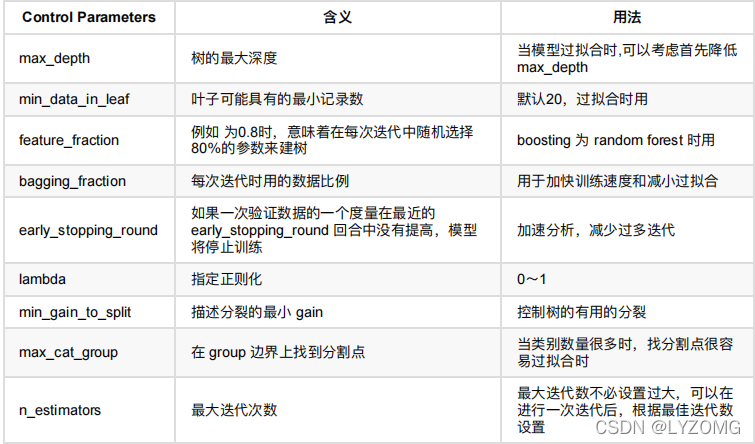

LightGBM parameters: Control Parameters

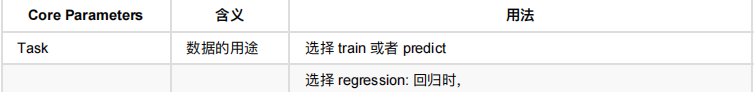

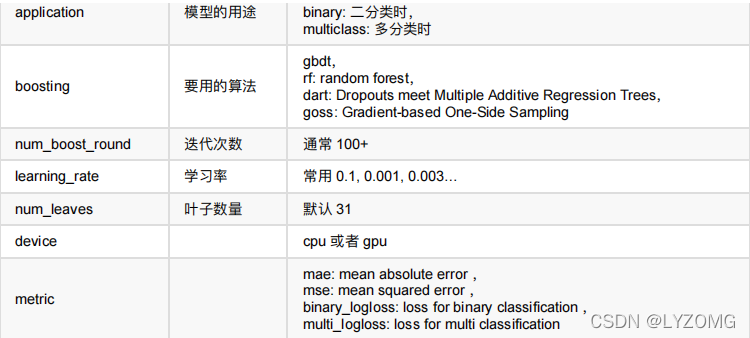

LightGBM parameters: Core Parameters

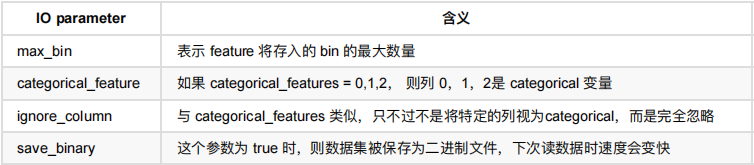

LightGBM parameters: IO Parameters

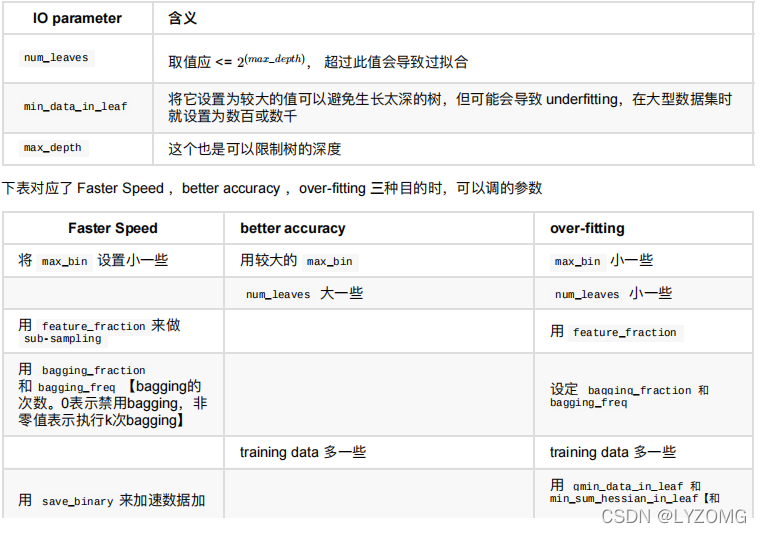

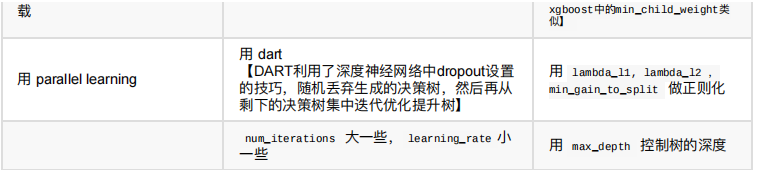
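As a compact reference, the sketch below groups a few commonly used parameters the way the parameter tables do (core, learning control, IO/dataset) and pairs them with the scikit-learn wrapper names used later in these notes (`n_estimators`, `min_child_samples`, ...). The values are illustrative, not tuned recommendations. Such a dict would be passed to the native API as `lgb.train(params, lgb.Dataset(X, y))`, where `X` and `y` are placeholders for your data.

```python
# Native-API parameter names, grouped as in the LightGBM parameter docs (values are illustrative)
params = {
    # Core parameters
    "objective": "regression",
    "boosting": "gbdt",
    "num_iterations": 100,
    "learning_rate": 0.1,
    "num_leaves": 31,
    # Learning control parameters
    "max_depth": -1,          # <= 0 means no depth limit
    "min_data_in_leaf": 20,
    "feature_fraction": 0.8,  # fraction of features sampled per tree
    "bagging_fraction": 0.8,  # fraction of rows sampled...
    "bagging_freq": 5,        # ...re-sampled every 5 iterations (0 disables bagging)
    "lambda_l2": 0.0,
    # IO (dataset) parameters
    "max_bin": 255,           # maximum number of histogram bins per feature
}

# Corresponding keyword names in the scikit-learn wrapper (lgb.LGBMRegressor / lgb.LGBMClassifier)
sklearn_names = {
    "boosting": "boosting_type",
    "num_iterations": "n_estimators",
    "min_data_in_leaf": "min_child_samples",
    "feature_fraction": "colsample_bytree",
    "bagging_fraction": "subsample",
    "bagging_freq": "subsample_freq",
    "lambda_l2": "reg_lambda",
}
```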

Tuning tips

LightGBM case study 1: Iris

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import mean_squared_error

import lightgbm as lgb

Load the data

iris = load_iris()

data = iris.data

target = iris.target

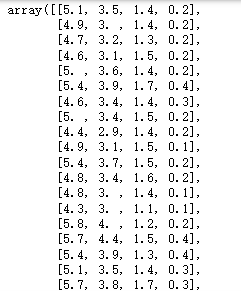

data

target

Basic data preprocessing

x_train,x_test,y_train,y_test = train_test_split(data,target,test_size=0.2)

Model training

Basic training

# Basic training

gbm = lgb.LGBMRegressor(objective="regression",learning_rate=0.05,n_estimators=20)

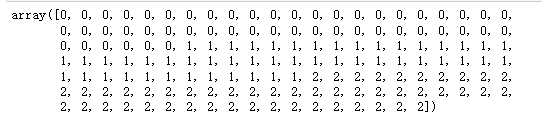

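# Early stopping is passed as a callback; fit() no longer accepts early_stopping_rounds in LightGBM 4.x
gbm.fit(x_train, y_train, eval_set=[(x_test, y_test)], eval_metric="l1", callbacks=[lgb.early_stopping(stopping_rounds=3)])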

gbm.score(x_test,y_test)
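Early stopping (here with a patience of 3 rounds) stops boosting once the validation metric (`l1`) has not improved for that many rounds, and `best_iteration_` records the round with the best score. A plain-Python sketch of that bookkeeping with made-up validation scores (lower is better); `early_stop` is a hypothetical helper that mirrors the idea, not LightGBM's callback code:

```python
def early_stop(val_scores, stopping_rounds):
    """Return (best_iteration, best_score); iterations are 1-based like best_iteration_."""
    best_iter, best_score = 0, float("inf")
    for it, score in enumerate(val_scores, start=1):
        if score < best_score:  # improvement: remember this round
            best_iter, best_score = it, score
        elif it - best_iter >= stopping_rounds:
            break  # no improvement for stopping_rounds rounds
    return best_iter, best_score

# Made-up validation L1 values for successive boosting rounds
scores = [0.50, 0.40, 0.35, 0.36, 0.37, 0.38, 0.30]
print(early_stop(scores, stopping_rounds=3))  # (3, 0.35): round 7 is never reached
```

A larger patience would have reached the better score at round 7, which is the usual trade-off when choosing the number of stopping rounds.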

Training with grid search

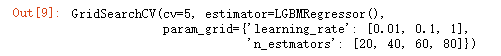

estimators = lgb.LGBMRegressor(num_leaves=31)

param_grid = {

"learning_rate":[0.01,0.1,1],

"n_estimators":[20,40,60,80]

}

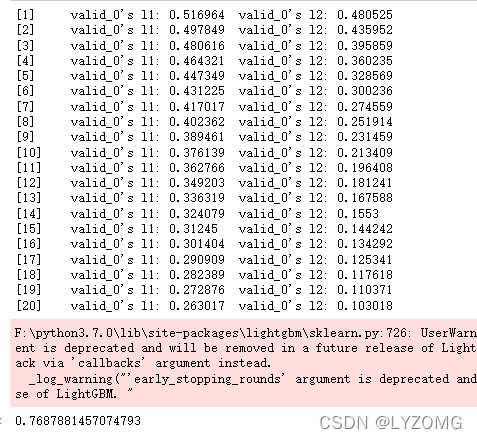

gbm = GridSearchCV(estimator=estimators,param_grid=param_grid,cv=5)

gbm.fit(x_train,y_train)
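`GridSearchCV` tries every combination in `param_grid` (the Cartesian product of the value lists) and cross-validates each one. The enumeration can be reproduced with `itertools.product`, which also shows the cost: 3 learning rates × 4 values of `n_estimators` = 12 candidates, each fitted 5 times with `cv=5` (plus one final refit on the whole training set under the default `refit=True`).

```python
from itertools import product

param_grid = {"learning_rate": [0.01, 0.1, 1], "n_estimators": [20, 40, 60, 80]}

# One dict per grid point, in the same spirit as sklearn's ParameterGrid
candidates = [dict(zip(param_grid, values)) for values in product(*param_grid.values())]
cv = 5
print(len(candidates), len(candidates) * cv)  # 12 60
print(candidates[0])                          # {'learning_rate': 0.01, 'n_estimators': 20}
```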

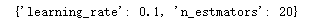

gbm.best_params_

gbm = lgb.LGBMRegressor(objective="regression",learning_rate=0.1,n_estimators=20)

gbm.fit(x_train, y_train, eval_set=[(x_test, y_test)], eval_metric="l1", callbacks=[lgb.early_stopping(stopping_rounds=3)])

gbm.score(x_test,y_test)

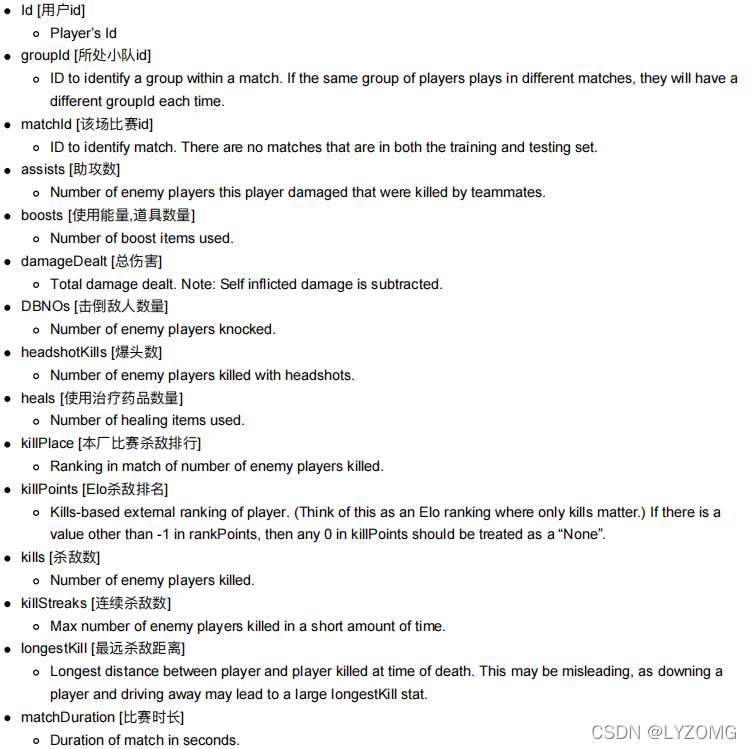

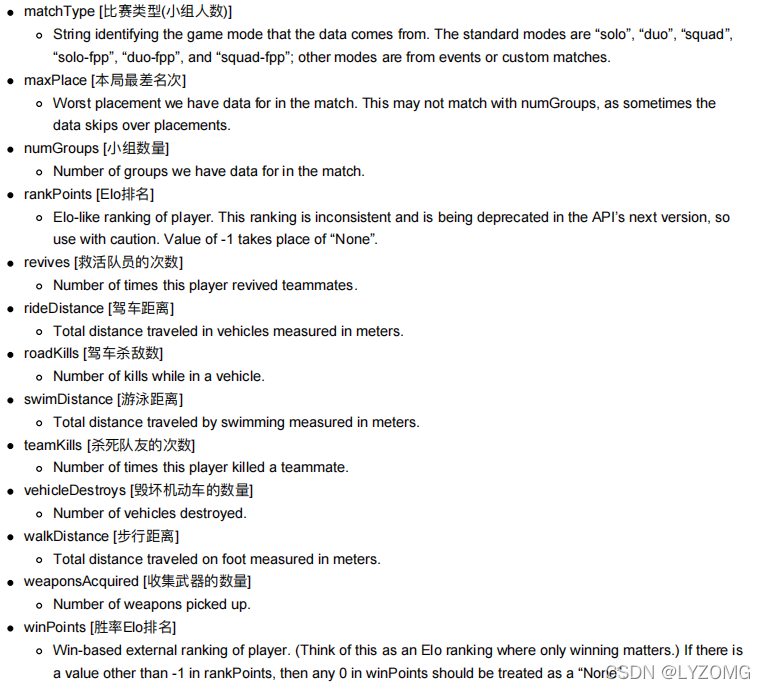

LightGBM case study 2: PUBG player placement prediction

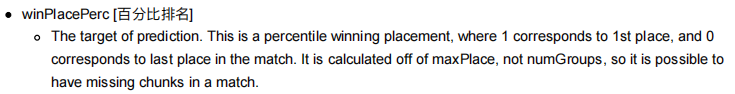

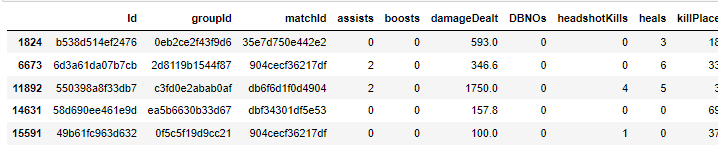

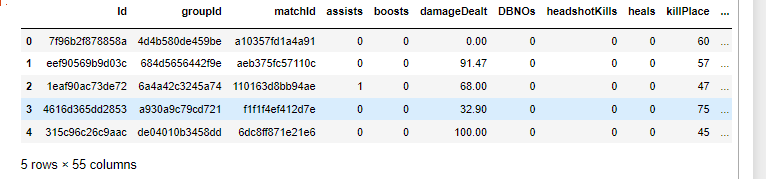

Dataset fields

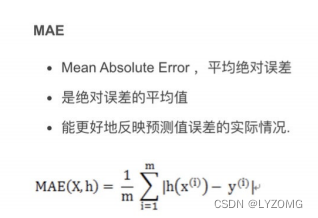

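Build a model that predicts each player's finishing placement from their final match statistics, on a scale from 1 (first place) to 0 (last place). Results are evaluated by the mean absolute error (MAE) between the predicted winPlacePerc and the true winPlacePerc.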

MAE

sklearn.metrics.mean_absolute_error
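MAE averages the absolute differences between targets and predictions: MAE = (1/n) · Σ |y_i − ŷ_i|. A hand-written NumPy version (the name `mae` is ours) with made-up winPlacePerc values shows the arithmetic that `sklearn.metrics.mean_absolute_error` performs:

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error: the average of |y_true - y_pred|."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

y_true = [1.0, 0.5, 0.0]  # made-up winPlacePerc values
y_pred = [0.9, 0.7, 0.1]
print(mae(y_true, y_pred))  # (0.1 + 0.2 + 0.1) / 3 ≈ 0.1333
```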

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

import seaborn as sns

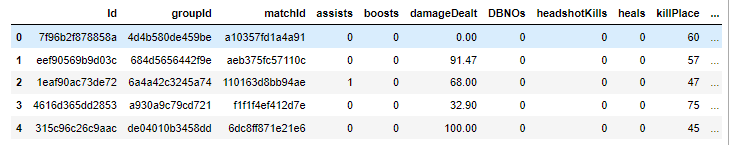

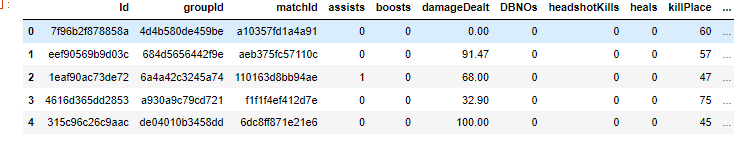

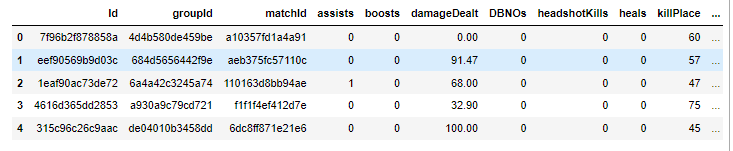

Load the data and inspect basic information

train = pd.read_csv("./data/train_V2.csv")

train.head()

# Total number of rows

train.shape #(4446966, 29)

# Number of matches

np.unique(train["matchId"]).shape #(47965,)

# Number of teams (groups)

np.unique(train["groupId"]).shape #(2026745,)

Basic data preprocessing

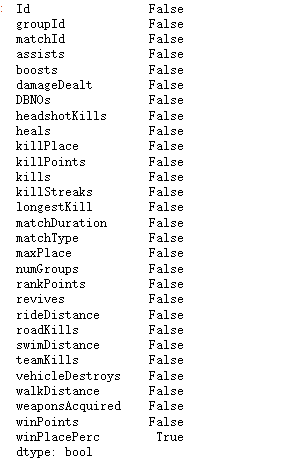

# Handle missing values: winPlacePerc has missing values

np.any(train.isnull(),axis=0)

# Locate the missing value

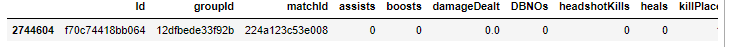

train[train["winPlacePerc"].isnull()]

# Only one row is affected, so simply drop it

train.drop(2744604, inplace=True)  # inplace=True returns None, so do not reassign the result

train.shape #(4446965, 29)

# Feature normalization

# Number of players in each match

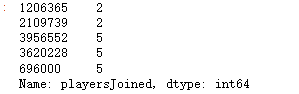

count = train.groupby("matchId")["matchId"].transform("count")

train["playersJoined"] = count

train.head()

train["playersJoined"].sort_values().head()
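`transform("count")` is what makes the `playersJoined` column possible: unlike `groupby(...).count()`, which returns one row per match, it broadcasts each group's size back to every row of that group, so the result can be assigned directly as a new column. A tiny example with made-up match ids:

```python
import pandas as pd

df = pd.DataFrame({"matchId": ["m1", "m1", "m2", "m1", "m2"]})
# Each row receives the number of rows (players) in its match
df["playersJoined"] = df.groupby("matchId")["matchId"].transform("count")
print(df["playersJoined"].tolist())  # [3, 3, 2, 3, 2]
```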

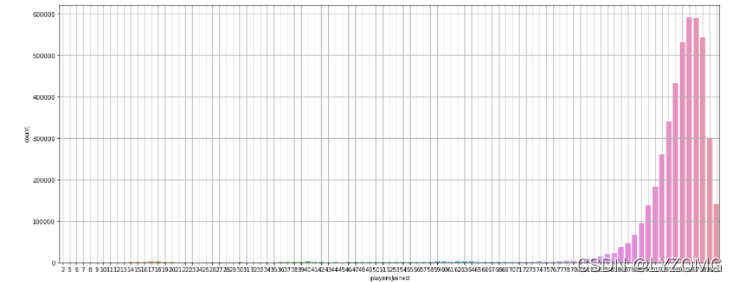

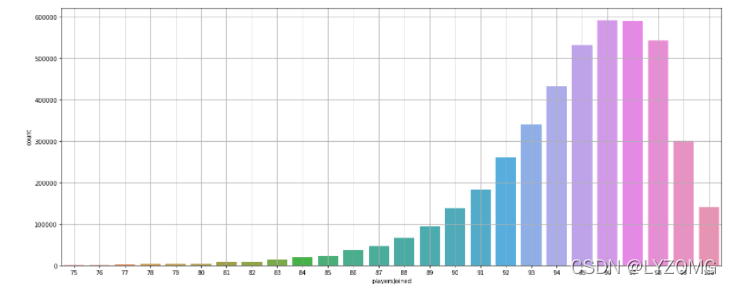

plt.figure(figsize=(20,8))

sns.countplot(x=train["playersJoined"])

plt.grid()

plt.show()

plt.figure(figsize=(20,8))

sns.countplot(x=train[train["playersJoined"] >= 75]["playersJoined"])

plt.grid()

plt.show()

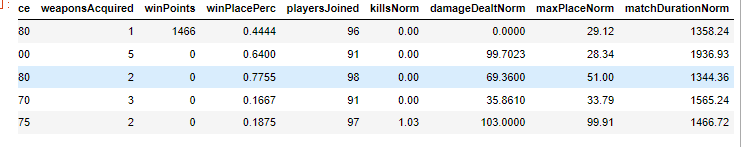

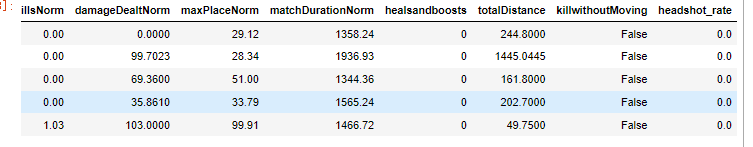

# Normalize selected features by the number of players in the match

train["killsNorm"] = train["kills"] * ((100-train["playersJoined"])/100+1)

train["damageDealtNorm"] = train["damageDealt"] * ((100-train["playersJoined"])/100+1)

train["maxPlaceNorm"] = train["maxPlace"] * ((100-train["playersJoined"])/100+1)

train["matchDurationNorm"] = train["matchDuration"] * ((100-train["playersJoined"])/100+1)

train.head()
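The scaling factor `(100 - playersJoined) / 100 + 1` equals 1 for a full 100-player match and grows as the lobby shrinks, so the same number of kills (or damage, etc.) counts for more in a smaller match. A worked example of the arithmetic (the helper name `norm_factor` is ours):

```python
def norm_factor(players_joined):
    """Multiplier applied to kills, damageDealt, maxPlace and matchDuration above."""
    return (100 - players_joined) / 100 + 1

for players in (100, 90, 50):
    print(players, norm_factor(players))  # factors 1.0, 1.1, 1.5
print(3 * norm_factor(50))                # 3 kills in a 50-player match -> 4.5
```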

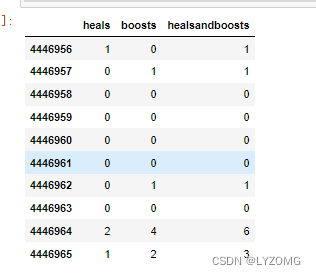

# Combine related features

train["healsandboosts"] = train["heals"] + train["boosts"]

train[["heals", "boosts", "healsandboosts"]].tail(10)

# Outliers: remove players who have kills but did not move at all

train["totalDistance"] = train["rideDistance"] + train["walkDistance"] + train["swimDistance"]

train.head()

train["killwithoutMoving"] = (train["kills"] > 0) & (train["totalDistance"] == 0)

train[train["killwithoutMoving"] == True].head()

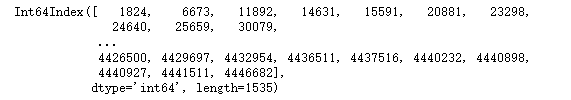

train[train["killwithoutMoving"] == True].shape #(1535, 37)

train[train["killwithoutMoving"] == True].index

train.drop(train[train["killwithoutMoving"] == True].index, inplace=True)

train.shape #(4445430, 37)

# Outliers: remove abnormal road-kill counts

train.drop(train[train["roadKills"] > 10].index, inplace=True)

train.shape #(4445426, 37)

# Outliers: remove players with more than 30 kills in a single match

train.drop(train[train["kills"] > 30].index, inplace=True)

train.shape #(4445331, 37)

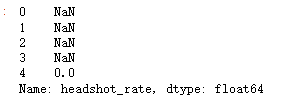

# Outliers: remove abnormal headshot rates

train["headshot_rate"] = train["headshotKills"]/train["kills"]

train["headshot_rate"].head()

train["headshot_rate"] = train["headshot_rate"].fillna(0)

train.head()

train[(train["headshot_rate"] == 1) & (train["kills"] > 9)].head()

train.drop(train[(train["headshot_rate"] == 1) & (train["kills"] > 9)].index, inplace=True)

train.shape #(4445307, 38)
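Players with zero kills produce `0 / 0` in the headshot-rate division, which pandas turns into `NaN`; that is why `fillna(0)` is applied before filtering. A small example of the same steps on made-up rows:

```python
import pandas as pd

df = pd.DataFrame({"kills": [0, 4, 10], "headshotKills": [0, 2, 10]})
rate = df["headshotKills"] / df["kills"]  # 0/0 -> NaN for the first player
print(rate.isna().tolist())               # [True, False, False]
rate = rate.fillna(0)
# Same rule as above: every kill a headshot and more than 9 kills
suspicious = (rate == 1) & (df["kills"] > 9)
print(suspicious.tolist())                # [False, False, True]
```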

# Outliers: remove abnormal longest-kill distances

train.drop(train[train["longestKill"] >=1000].index, inplace=True)

train.shape #(4445287, 38)

# Outliers: remove abnormal travel distances

# Walking

train.drop(train[train["walkDistance"] >=10000].index, inplace=True)

train.shape #(4445068, 38)

# Vehicles

train.drop(train[train["rideDistance"] >=20000].index, inplace=True)

train.shape #(4444918, 38)

# Swimming

train.drop(train[train["swimDistance"] >=20000].index, inplace=True)

train.shape #(4444918, 38)

# Outliers: remove abnormal weapon pickup counts

train.drop(train[train["weaponsAcquired"] >=80].index, inplace=True)

train.shape #(4444899, 38)

# Outliers: remove abnormal healing-item usage

train.drop(train[train["heals"] >=80].index, inplace=True)

train.shape #(4444898, 38)

# Categorical features

# One-hot encode matchType

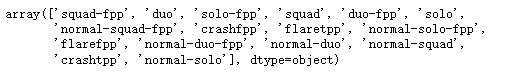

train["matchType"].unique()

train = pd.get_dummies(train, columns=["matchType"])

train.head()

train.shape #(4444898, 53)
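`pd.get_dummies(..., columns=["matchType"])` drops the original column and appends one indicator column per distinct value, named `matchType_<value>`. The jump from 38 to 53 columns above therefore implies 16 distinct match types (38 − 1 + 16 = 53). A minimal example with made-up rows:

```python
import pandas as pd

df = pd.DataFrame({"kills": [1, 0, 3], "matchType": ["solo", "duo", "solo"]})
out = pd.get_dummies(df, columns=["matchType"])
print(list(out.columns))  # ['kills', 'matchType_duo', 'matchType_solo']
# Indicator dtype is bool in recent pandas (uint8 in older versions), so cast for display
print(out["matchType_solo"].astype(int).tolist())  # [1, 0, 1]
```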

# Encode groupId, matchId and similar id columns

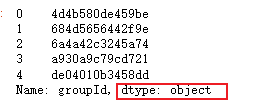

train["groupId"].head()

train["groupId"] = train["groupId"].astype("category")

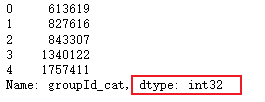

train["groupId_cat"] = train["groupId"].cat.codes

train["groupId_cat"].head()

train["matchId"] = train["matchId"].astype("category")

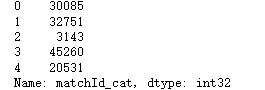

train["matchId_cat"] = train["matchId"].cat.codes

train["matchId_cat"].head()

train.head()

train.drop(["groupId", "matchId"], axis=1, inplace=True)
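Converting `groupId`/`matchId` to the `category` dtype and taking `.cat.codes` replaces each string id with an integer code. The categories are sorted, so the codes follow that sorted order; they only act as identifiers and their numeric order carries no meaning. A toy example with made-up ids:

```python
import pandas as pd

ids = pd.Series(["g2", "g1", "g2", "g3"]).astype("category")
print(list(ids.cat.categories))  # ['g1', 'g2', 'g3']
print(ids.cat.codes.tolist())    # [1, 0, 1, 2]
```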

# Subsample: work with 100,000 rows

df_sample = train.sample(100000)

df_sample.shape #(100000, 53)

# Define the features and the target

df = df_sample.drop(["winPlacePerc", "Id"], axis=1)

y = df_sample["winPlacePerc"]

df.shape #(100000, 51)

y.shape #(100000,)

# Split into training and validation sets

from sklearn.model_selection import train_test_split

X_train, X_valid, y_train, y_valid = train_test_split(df, y, test_size=0.2)

X_train.shape #(80000, 51)

y_train.shape #(80000,)

Model training / evaluation

First pass: random forest

from sklearn.ensemble import RandomForestRegressor

from sklearn.metrics import mean_absolute_error

m1 = RandomForestRegressor(n_estimators=40,

min_samples_leaf=3,

max_features='sqrt',

n_jobs=-1)

m1.fit(X_train, y_train)

y_pre = m1.predict(X_valid)

m1.score(X_valid, y_valid)

mean_absolute_error(y_valid, y_pre)

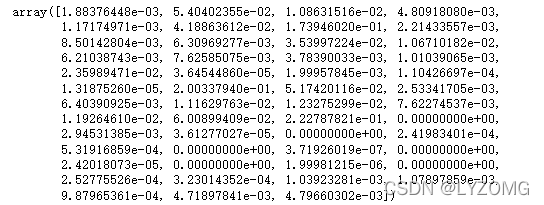

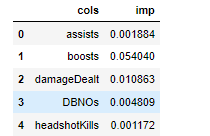

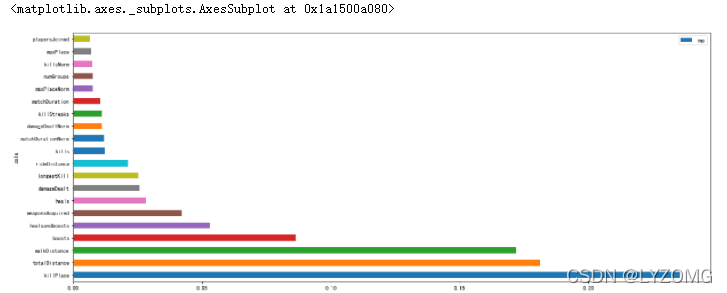

Random forest again, with unimportant features removed

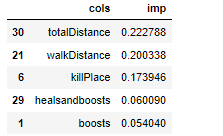

m1.feature_importances_

imp_df = pd.DataFrame({"cols":df.columns, "imp":m1.feature_importances_})

imp_df.head()

imp_df = imp_df.sort_values("imp", ascending=False)

imp_df.head()

imp_df[:20].plot("cols", "imp", figsize=(20, 8), kind="barh")

to_keep = imp_df[imp_df.imp > 0.005].cols

to_keep.shape #(20,)

df_keep = df[to_keep]

X_train, X_valid, y_train, y_valid = train_test_split(df_keep, y, test_size=0.2)

X_train.shape #(80000, 20)

m2 = RandomForestRegressor(n_estimators=40,

min_samples_leaf=3,

max_features='sqrt',

n_jobs=-1)

m2.fit(X_train, y_train)

y_pre = m2.predict(X_valid)

m2.score(X_valid, y_valid) #0.9125654968172906

mean_absolute_error(y_valid, y_pre) #0.06408683094647326

Training with LightGBM

X_train, X_valid, y_train, y_valid = train_test_split(df, y, test_size=0.2)

X_train.shape #(80000, 51)

import lightgbm as lgb

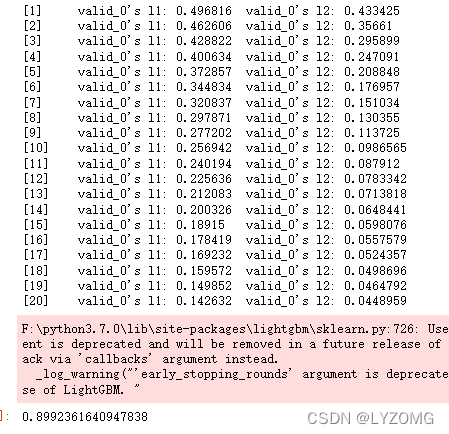

gbm = lgb.LGBMRegressor(objective="regression", num_leaves=31, learning_rate=0.05, n_estimators=20)

gbm.fit(X_train, y_train, eval_set=[(X_valid, y_valid)], eval_metric="l1", callbacks=[lgb.early_stopping(stopping_rounds=5)])

y_pre = gbm.predict(X_valid, num_iteration=gbm.best_iteration_)

mean_absolute_error(y_valid, y_pre) #0.12331524150224461

Tuning LightGBM with GridSearchCV

from sklearn.model_selection import GridSearchCV

estimator = lgb.LGBMRegressor(num_leaves=31)

param_grid = {

"learning_rate":[0.01, 0.1, 1],

"n_estimators":[40, 60, 80, 100, 200, 300]

}

gbm = GridSearchCV(estimator, param_grid, cv=5, n_jobs=-1)

gbm.fit(X_train, y_train)

y_pre = gbm.predict(X_valid)

mean_absolute_error(y_valid, y_pre) #0.05685004010605751

gbm.best_params_ #{'learning_rate': 0.1, 'n_estimators': 300}

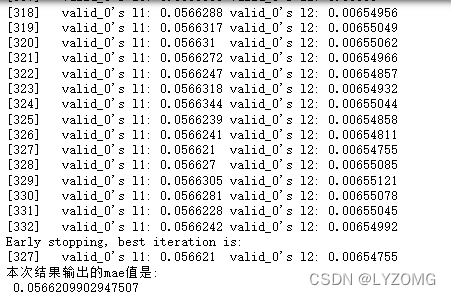

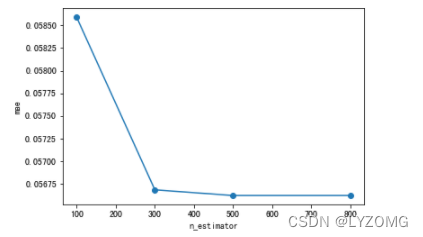

Third round of tuning

# n_estimators

scores = []

n_estimators = [100, 300, 500, 800]

for nes in n_estimators:
    lgbm = lgb.LGBMRegressor(boosting_type='gbdt',
                             num_leaves=31,
                             max_depth=5,
                             learning_rate=0.1,
                             n_estimators=nes,
                             min_child_samples=20,
                             n_jobs=-1)
    lgbm.fit(X_train, y_train, eval_set=[(X_valid, y_valid)], eval_metric="l1",
             callbacks=[lgb.early_stopping(stopping_rounds=5)])
    y_pre = lgbm.predict(X_valid)
    mae = mean_absolute_error(y_valid, y_pre)
    scores.append(mae)
    print("MAE for this run:\n", mae)

plt.plot(n_estimators,scores,'o-')

plt.ylabel("mae")

plt.xlabel("n_estimator")

print("best n_estimator {}".format(n_estimators[np.argmin(scores)]))

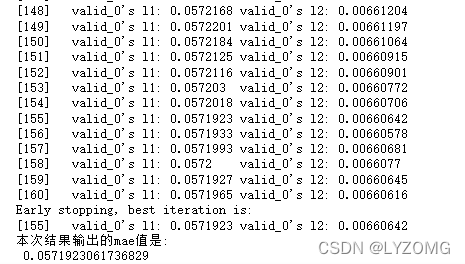

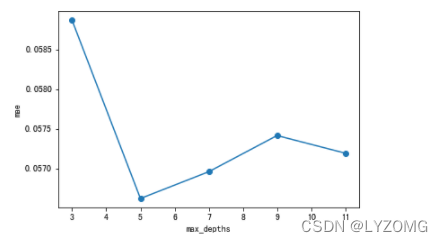

# max_depth

scores = []

max_depth = [3, 5, 7, 9, 11]

for md in max_depth:
    lgbm = lgb.LGBMRegressor(boosting_type='gbdt',
                             num_leaves=31,
                             max_depth=md,
                             learning_rate=0.1,
                             n_estimators=500,
                             min_child_samples=20,
                             n_jobs=-1)
    lgbm.fit(X_train, y_train, eval_set=[(X_valid, y_valid)], eval_metric="l1",
             callbacks=[lgb.early_stopping(stopping_rounds=5)])
    y_pre = lgbm.predict(X_valid)
    mae = mean_absolute_error(y_valid, y_pre)
    scores.append(mae)
    print("MAE for this run:\n", mae)

plt.plot(max_depth,scores,'o-')

plt.ylabel("mae")

plt.xlabel("max_depths")

print("best max_depths {}".format(max_depth[np.argmin(scores)]))

scores1