1. Saving and loading a model (weights only, the simple approach)

- Save the state dict

import torch

from torch import nn

import torchvision.models as models

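# Download a pretrained VGG16 model from torchvision

# (torchvision 0.13 and later deprecate pretrained=True in favor of the weights= argument)

mymodel = models.vgg16(pretrained=True)

# Save the model's weights (its state_dict)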

torch.save(mymodel.state_dict(), "model_weights.pth")

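- Create a model instance to load the weights into

# Create a new instance with the same architecture as the saved model

new_model = models.vgg16()

- Load the saved weights into the new model

# Load the saved weights into the new model

new_model.load_state_dict(torch.load("model_weights.pth"))

# Put the model in inference (evaluation) mode; this changes the behavior of dropout and batchnorm layers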

new_model.eval()
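The same save/load round trip can be checked without downloading VGG16. The following is a minimal sketch, assuming a small hypothetical model (`make_model`) and a temporary file in place of the article's VGG16 and "model_weights.pth"; `map_location="cpu"` is an optional `torch.load` argument that lets weights saved on a GPU be loaded on a CPU-only machine.

```python
import os
import tempfile

import torch
from torch import nn


def make_model():
    # Hypothetical stand-in for VGG16: small, but it contains a Dropout layer
    return nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Dropout(p=0.5), nn.Linear(8, 2))


torch.manual_seed(0)
source = make_model()

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tiny_weights.pth")
    torch.save(source.state_dict(), path)

    restored = make_model()  # starts with different random weights
    state = torch.load(path, map_location="cpu")
    restored.load_state_dict(state)

# Both models must be in evaluation mode, otherwise Dropout randomizes the outputs
source.eval()
restored.eval()

x = torch.randn(3, 4)
with torch.no_grad():
    same_output = torch.equal(source(x), restored(x))
print(same_output)
```

Because only the state dict is stored, the loading side has to rebuild the architecture itself (here by calling `make_model()` again) before `load_state_dict` can be applied.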

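2. Saving a checkpoint

This approach saves not only the model weights but also the other state needed to resume training, such as the optimizer state, the epoch, and the loss.

(1) Import all the necessary libraries

(2) Define and initialize the neural network

(3) Initialize the optimizer

(4) Save a general checkpoint

(5) Load a general checkpoint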

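2.1 Import all the required libraries

import torch

import torch.nn as nn

import torch.optim as optim

# relu and the other functional ops live in torch.nn.functional, not torch.functional

import torch.nn.functional as F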

2.2 Define and initialize the neural network

# Define the neural network

# The layer sizes assume 3-channel 32x32 inputs, which leave 16 x 5 x 5 features after the second pooling step

class Net(nn.Module):

    def __init__(self):

        super(Net, self).__init__()

        self.conv1 = nn.Conv2d(3, 6, 5)

        self.pool = nn.MaxPool2d(2, 2)

        self.conv2 = nn.Conv2d(6, 16, 5)

        self.fc1 = nn.Linear(16 * 5 * 5, 120)

        self.fc2 = nn.Linear(120, 84)

        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):

        x = self.pool(F.relu(self.conv1(x)))

        x = self.pool(F.relu(self.conv2(x)))

        # Flatten the feature maps before the fully connected layers

        x = x.view(-1, 16 * 5 * 5)

        x = F.relu(self.fc1(x))

        x = F.relu(self.fc2(x))

        x = self.fc3(x)

        return x

# Instantiate the neural network

net = Net()

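2.3 Initialize the optimizer

Initialize the optimizer, pass it the network's parameters, and set the hyperparameters:

- lr: the learning rate

- momentum: the momentum factor

# Instantiate the optimizer and set its hyperparameters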

optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
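An optimizer carries state of its own, which is why a checkpoint stores it next to the model weights. The sketch below, using a hypothetical single `nn.Linear` layer in place of `Net`, inspects `optimizer.state_dict()` before and after one update step: the hyperparameters sit under `param_groups`, and with momentum enabled SGD creates a momentum buffer per parameter under `state` on the first step.

```python
import torch
from torch import nn, optim

layer = nn.Linear(3, 1)  # hypothetical stand-in for the article's Net
opt = optim.SGD(layer.parameters(), lr=0.001, momentum=0.9)

before = opt.state_dict()
keys = sorted(before.keys())
state_before = len(before["state"])  # per-parameter state before any step

# One update step so that the momentum buffers get created
loss = layer(torch.randn(5, 3)).sum()
loss.backward()
opt.step()

after = opt.state_dict()
state_after = len(after["state"])  # per-parameter state after one step
lr = after["param_groups"][0]["lr"]
has_momentum_buffer = "momentum_buffer" in after["state"][0]
print(keys, state_before, state_after, lr, has_momentum_buffer)
```

If only the weights were restored, these momentum buffers would start again from scratch, so resumed training would not continue exactly where it stopped.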

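2.4 Save a general checkpoint

# Additional information to store alongside the weights

# Epoch reached when the checkpoint is taken

EPOCH = 5

# Path of the checkpoint file

PATH = "model.pt"

# Loss value at that point

LOSS = 0.4

# Save all of the training state together in a single dictionary

torch.save({

    'epoch': EPOCH,

    'model_state_dict': net.state_dict(),

    'optimizer_state_dict': optimizer.state_dict(),

    'loss': LOSS,

}, PATH)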

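2.5 Load a general checkpoint

# Instantiate the model

model = Net()

# Instantiate the optimizer on the new model's parameters (not on the old net)

optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

# Load the saved model.pt

checkpoint = torch.load(PATH)

# Restore the model weights

model.load_state_dict(checkpoint['model_state_dict'])

# Restore the optimizer state

optimizer.load_state_dict(checkpoint['optimizer_state_dict'])

# Restore the epoch

epoch = checkpoint['epoch']

# Restore the loss

loss = checkpoint['loss']

# Put the model in inference (evaluation) mode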

model.eval()

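Note: before running inference, you must call model.eval() to put the dropout and batch normalization layers into evaluation mode. Otherwise the inference results will be inconsistent. To resume training instead, call model.train().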
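When the checkpoint is used to continue training rather than for inference, the flow looks slightly different. The following is a sketch under stated assumptions: `build` and `train_one_epoch` are hypothetical helpers, the data is random, and a temporary file stands in for "model.pt". It saves after three epochs, restores into freshly built objects, and continues from the next epoch in training mode.

```python
import os
import tempfile

import torch
from torch import nn, optim


def build():
    # Hypothetical small model and optimizer standing in for Net and SGD above
    model = nn.Linear(4, 2)
    optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
    return model, optimizer


def train_one_epoch(model, optimizer, data, target):
    model.train()  # training mode, as opposed to model.eval() for inference
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(data), target)
    loss.backward()
    optimizer.step()
    return loss.item()


torch.manual_seed(0)
data, target = torch.randn(16, 4), torch.randn(16, 2)

with tempfile.TemporaryDirectory() as tmp:
    ckpt_path = os.path.join(tmp, "ckpt.pt")

    model, optimizer = build()
    for epoch in range(3):
        loss = train_one_epoch(model, optimizer, data, target)
    torch.save({
        "epoch": epoch,
        "model_state_dict": model.state_dict(),
        "optimizer_state_dict": optimizer.state_dict(),
        "loss": loss,
    }, ckpt_path)

    # Later, possibly in a new process: rebuild the objects, then restore their state
    resumed_model, resumed_optimizer = build()
    checkpoint = torch.load(ckpt_path, map_location="cpu")
    resumed_model.load_state_dict(checkpoint["model_state_dict"])
    resumed_optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
    start_epoch = checkpoint["epoch"] + 1

# Continue with the epoch after the one stored in the checkpoint
for epoch in range(start_epoch, start_epoch + 2):
    loss = train_one_epoch(resumed_model, resumed_optimizer, data, target)
print(start_epoch, epoch, loss)
```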

3. The to operation

Calling model.to(torch.double) changes the dtype of the model's parameters.

# 1. Import the required libraries

import torch

from torch import nn

# 2. Define the model

class MyTest(nn.Module):

    def __init__(self):

        super(MyTest, self).__init__()

        self.linear1 = nn.Linear(2, 3)

        self.linear2 = nn.Linear(3, 4)

        self.batchnorm = nn.BatchNorm2d(4)

# 3. Instantiate the network

mymodel = MyTest()

# 4. Print the dtype of linear1's weight: linear1.weight.dtype = torch.float32

print(mymodel._modules["linear1"].weight.dtype)

# 5. Convert the model's parameters to torch.double

mymodel.to(torch.double)

# 6. Print the dtype of linear1's weight again: linear1.weight.dtype = torch.float64

print(mymodel._modules["linear1"].weight.dtype)

# The conversion succeeded: torch.float32 -> torch.float64
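Two details of `to` are easy to miss, and the short sketch below illustrates them on a standalone `nn.BatchNorm2d` (chosen here only because it has both floating-point and integer buffers). First, a module is converted in place and `to` returns the same object, whereas a tensor is left untouched and a converted tensor is returned. Second, a dtype conversion applies to floating-point parameters and buffers only, so the integer buffer `num_batches_tracked` keeps its dtype.

```python
import torch
from torch import nn

bn = nn.BatchNorm2d(4)
returned = bn.to(torch.double)  # converts the module in place and returns it

same_object = returned is bn
weight_dtype = bn.weight.dtype                # floating-point parameter
mean_dtype = bn.running_mean.dtype            # floating-point buffer
counter_dtype = bn.num_batches_tracked.dtype  # integer buffer

# Tensors behave differently: the original tensor keeps its dtype
t = torch.zeros(2)
t64 = t.to(torch.double)
print(same_object, weight_dtype, mean_dtype, counter_dtype, t.dtype, t64.dtype)
```

This is also why `mymodel.to(torch.double)` in the example above works without assigning the result back to `mymodel`.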

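4. The difference between _parameters and parameters

- _parameters: holds only the nn.Parameter objects registered directly on the current module; the parameters of child modules are not included

- parameters(): iterates over the parameters of the current module and, recursively, over those of all its child modules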

# 1. Import the required libraries

import torch

from torch import nn

# 2. Define the model

class MyTest(nn.Module):

    def __init__(self):

        super(MyTest, self).__init__()

        self.linear1 = nn.Linear(2, 3)

        self.linear2 = nn.Linear(3, 4)

        self.batchnorm = nn.BatchNorm2d(4)

# 3. Instantiate the network

mymodel = MyTest()

# 4. Print the dtype of linear1's weight: linear1.weight.dtype = torch.float32

print(mymodel._modules["linear1"].weight.dtype)

# 5. Convert the model's parameters to torch.double

mymodel.to(torch.double)

# 6. Print the dtype of linear1's weight again: linear1.weight.dtype = torch.float64

print(mymodel._modules["linear1"].weight.dtype)

# The conversion succeeded: torch.float32 -> torch.float64

# _parameters holds only the parameters registered directly on this module, not those of its child modules,

# so here mymodel._parameters=OrderedDict()

print(f"mymodel._parameters={mymodel._parameters}")

# The same applies to _buffers

print(f"mymodel._buffers={mymodel._buffers}")

# Iterate over all parameters of the model, child modules included

for pa in mymodel.parameters():

    print(f"mymodel.parameters={pa}")
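In the example above `_parameters` and `_buffers` are empty because MyTest registers nothing on itself; everything lives in its child modules. As a contrasting sketch, the hypothetical class `WithOwnParameter` below registers one `nn.Parameter` and one buffer directly, so both private dictionaries become non-empty while the child's parameters still appear only through `named_parameters()`.

```python
import torch
from torch import nn


class WithOwnParameter(nn.Module):  # hypothetical class, for illustration only
    def __init__(self):
        super().__init__()
        self.scale = nn.Parameter(torch.ones(1))        # registered on this module
        self.register_buffer("offset", torch.zeros(1))  # buffer on this module
        self.linear = nn.Linear(2, 3)                   # its parameters belong to the child


m = WithOwnParameter()
own_params = list(m._parameters.keys())
own_buffers = list(m._buffers.keys())
all_params = [name for name, _ in m.named_parameters()]
print(own_params, own_buffers, all_params)
```

Since `_parameters` and `_buffers` are private attributes, the public `parameters()`, `named_parameters()`, and `buffers()` methods are usually the safer choice outside of debugging.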

5. state_dict

- Purpose: collects the model's parameters and persistent buffers so that they can be saved

# Excerpt from the source of torch.nn.Module

def _save_to_state_dict(self, destination, prefix, keep_vars):

    r"""Saves module state to `destination` dictionary, containing a state

    of the module, but not its descendants. This is called on every

    submodule in :meth:`~torch.nn.Module.state_dict`.

    In rare cases, subclasses can achieve class-specific behavior by

    overriding this method with custom logic.

    Args:

        destination (dict): a dict where state will be stored

        prefix (str): the prefix for parameters and buffers used in this

            module

    """

    for name, param in self._parameters.items():

        if param is not None:

            destination[prefix + name] = param if keep_vars else param.detach()

    for name, buf in self._buffers.items():

        if buf is not None and name not in self._non_persistent_buffers_set:

            destination[prefix + name] = buf if keep_vars else buf.detach()

# The user can pass an optional arbitrary mappable object to `state_dict`, in which case `state_dict` returns

# back that same object. But if they pass nothing, an `OrderedDict` is created and returned.

T_destination = TypeVar('T_destination', bound=Mapping[str, Tensor])

def state_dict(self, destination=None, prefix='', keep_vars=False):

    r"""Returns a dictionary containing a whole state of the module.

    Both parameters and persistent buffers (e.g. running averages) are

    included. Keys are corresponding parameter and buffer names.

    Returns:

        dict:

            a dictionary containing a whole state of the module

    Example::

        >>> module.state_dict().keys()

        ['bias', 'weight']

    """

    if destination is None:

        destination = OrderedDict()

        destination._metadata = OrderedDict()

    destination._metadata[prefix[:-1]] = local_metadata = dict(version=self._version)

    # Save the current module's own parameters and buffers

    self._save_to_state_dict(destination, prefix, keep_vars)

    # Recurse into the child modules, extending the key prefix with each child's name

    for name, module in self._modules.items():

        if module is not None:

            module.state_dict(destination, prefix + name + '.', keep_vars=keep_vars)

    for hook in self._state_dict_hooks.values():

        hook_result = hook(self, destination, prefix, local_metadata)

        if hook_result is not None:

            destination = hook_result

    return destination

- Example code

# 1. Import the required libraries

import torch

from torch import nn

# 2. Define the model

class MyTest(nn.Module):

    def __init__(self):

        super(MyTest, self).__init__()

        self.linear1 = nn.Linear(2, 3)

        self.linear2 = nn.Linear(3, 4)

        self.batchnorm = nn.BatchNorm2d(4)

# 3. Instantiate the network

mymodel = MyTest()

print(f"mymodel.state_dict()={mymodel.state_dict()}")

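- Output

Note: the result is simply an ordered dictionary that holds the parameters and persistent buffers of all submodules. The dtype=torch.float64 in the output below suggests it was captured after the model had been converted with mymodel.to(torch.double), as in section 3; a freshly constructed model holds torch.float32 tensors.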

mymodel.state_dict()=OrderedDict([('linear1.weight', tensor([[ 0.2309, 0.1947],

[ 0.1572, -0.5997],

[ 0.4253, 0.1184]], dtype=torch.float64)), ('linear1.bias', tensor([ 0.0010, -0.1031, -0.2801], dtype=torch.float64)), ('linear2.weight', tensor([[-0.4427, 0.0356, 0.0527],

[-0.1414, 0.4508, 0.5320],

[ 0.3816, 0.3372, 0.3967],

[-0.1054, 0.1467, -0.5630]], dtype=torch.float64)), ('linear2.bias', tensor([ 0.3134, -0.3881, 0.2067, -0.1626], dtype=torch.float64)), ('batchnorm.weight', tensor([1., 1., 1., 1.], dtype=torch.float64)), ('batchnorm.bias', tensor([0., 0., 0., 0.], dtype=torch.float64)), ('batchnorm.running_mean', tensor([0., 0., 0., 0.], dtype=torch.float64)), ('batchnorm.running_var', tensor([1., 1., 1., 1.], dtype=torch.float64)), ('batchnorm.num_batches_tracked', tensor(0))])

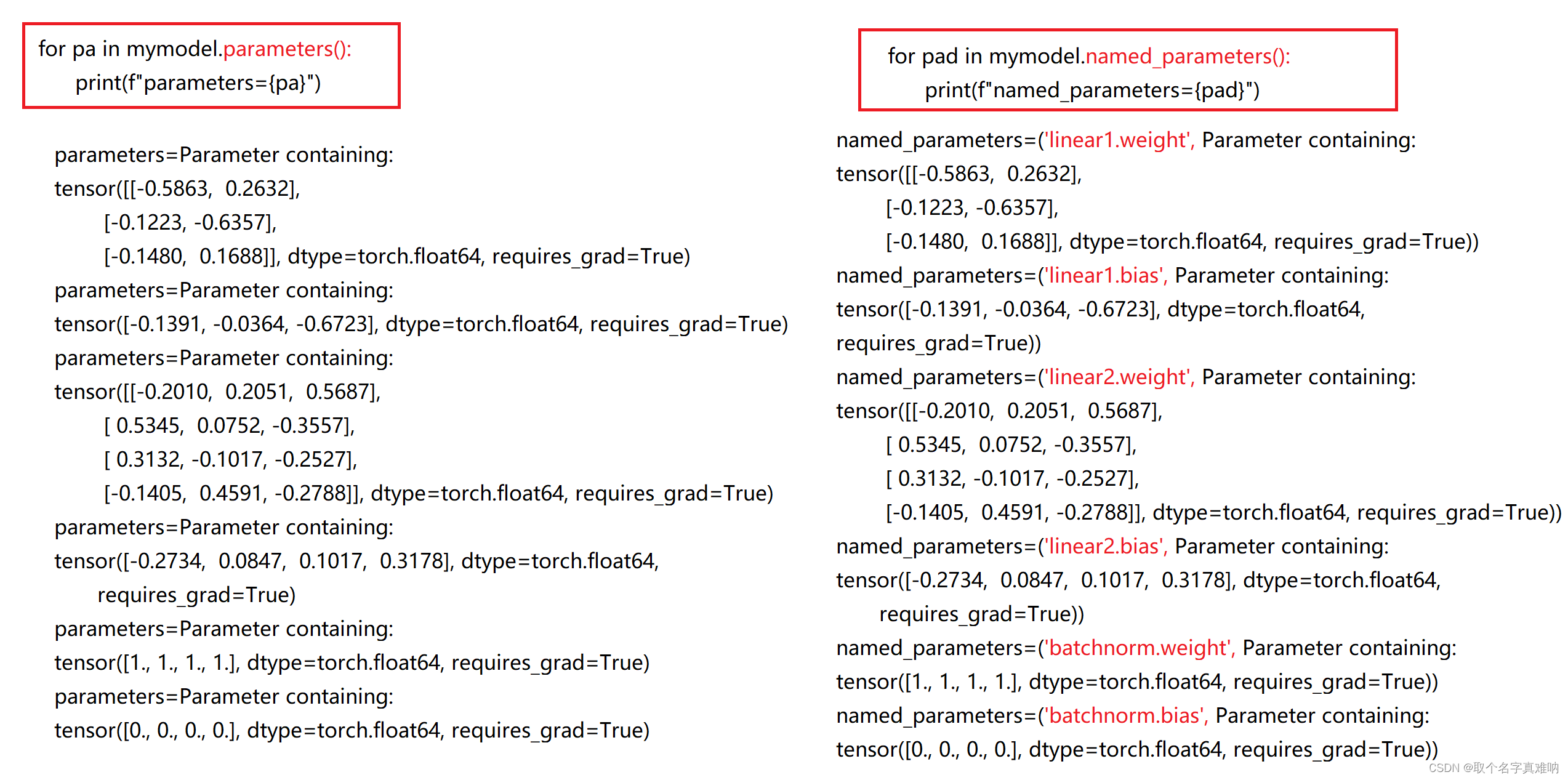
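The key names in a state dict follow directly from the prefix logic in the `state_dict` source shown earlier in this section: each module first stores its own parameters and buffers, then recurses into its children with the child's name appended to the prefix. The sketch below uses two hypothetical classes, `Block` and `Outer`, to make the nesting visible; it also registers one buffer with `persistent=False`, which `_save_to_state_dict` skips.

```python
import torch
from torch import nn


class Block(nn.Module):  # hypothetical inner module
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(2, 2)
        self.register_buffer("running_total", torch.zeros(1))
        # Non-persistent buffers are excluded from the state dict
        self.register_buffer("scratch", torch.zeros(1), persistent=False)


class Outer(nn.Module):  # hypothetical outer module
    def __init__(self):
        super().__init__()
        self.block = Block()
        self.head = nn.Linear(2, 1)


state = Outer().state_dict()
keys = list(state.keys())
# With the default keep_vars=False the stored tensors are detached from autograd
detached = not state["head.weight"].requires_grad
print(keys, detached)
```

Note that `block.running_total` is listed before `block.fc.weight`: a module's own entries are written before those of its children, regardless of the order in which the attributes were assigned.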

6. parameters & named_parameters

- parameters(): returns the parameter tensors

- named_parameters(): returns each parameter's name together with its tensor

# 1. Import the required libraries

import torch

from torch import nn

# 2. Define the model

class MyTest(nn.Module):

    def __init__(self):

        super(MyTest, self).__init__()

        self.linear1 = nn.Linear(2, 3)

        self.linear2 = nn.Linear(3, 4)

        self.batchnorm = nn.BatchNorm2d(4)

# 3. Instantiate the network

mymodel = MyTest()

# Iterate over the parameter tensors only

for pa in mymodel.parameters():

    print(f"parameters={pa}")

# Iterate over (name, tensor) pairs

for pad in mymodel.named_parameters():

    print(f"named_parameters={pad}")

parameters=Parameter containing:

tensor([[ 0.3966, -0.1722],

[-0.6319, 0.4421],

[ 0.1774, 0.5560]], requires_grad=True)

parameters=Parameter containing:

tensor([ 0.6004, 0.4914, -0.6790], requires_grad=True)

parameters=Parameter containing:

tensor([[ 0.5584, 0.4561, 0.3161],

[-0.2900, 0.4303, 0.4115],

[ 0.4425, -0.1321, -0.1889],

[-0.4999, -0.3429, -0.2785]], requires_grad=True)

parameters=Parameter containing:

tensor([-0.4464, -0.3374, -0.0186, -0.1464], requires_grad=True)

parameters=Parameter containing:

tensor([1., 1., 1., 1.], requires_grad=True)

parameters=Parameter containing:

tensor([0., 0., 0., 0.], requires_grad=True)

named_parameters=('linear1.weight', Parameter containing:

tensor([[ 0.3966, -0.1722],

[-0.6319, 0.4421],

[ 0.1774, 0.5560]], requires_grad=True))

named_parameters=('linear1.bias', Parameter containing:

tensor([ 0.6004, 0.4914, -0.6790], requires_grad=True))

named_parameters=('linear2.weight', Parameter containing:

tensor([[ 0.5584, 0.4561, 0.3161],

[-0.2900, 0.4303, 0.4115],

[ 0.4425, -0.1321, -0.1889],

[-0.4999, -0.3429, -0.2785]], requires_grad=True))

named_parameters=('linear2.bias', Parameter containing:

tensor([-0.4464, -0.3374, -0.0186, -0.1464], requires_grad=True))

named_parameters=('batchnorm.weight', Parameter containing:

tensor([1., 1., 1., 1.], requires_grad=True))

named_parameters=('batchnorm.bias', Parameter containing:

tensor([0., 0., 0., 0.], requires_grad=True))
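A common practical use of the two iterators is selecting parameters by name and counting them. The following sketch assumes a hypothetical two-layer `nn.Sequential` model with the same layer sizes as `linear1` and `linear2` above: it freezes every bias via `named_parameters()` and then counts total and trainable elements via `parameters()`.

```python
import torch
from torch import nn

# Hypothetical model with the same layer sizes as linear1 and linear2 above
model = nn.Sequential(nn.Linear(2, 3), nn.Linear(3, 4))

# Select parameters by name: freeze every bias
for name, param in model.named_parameters():
    if name.endswith("bias"):
        param.requires_grad_(False)

# Names are not needed for counting, so parameters() is enough here
total = sum(p.numel() for p in model.parameters())
trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
frozen_names = [n for n, p in model.named_parameters() if not p.requires_grad]
print(total, trainable, frozen_names)
```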

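7. _modules & named_modules

- _modules: returns the direct child modules of the model

- named_modules(): returns the model itself and all of its submodules, each with its name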

# 1. Import the required libraries

import torch

from torch import nn

# 2. Define the model

class MyTest(nn.Module):

    def __init__(self):

        super(MyTest, self).__init__()

        self.linear1 = nn.Linear(2, 3)

        self.linear2 = nn.Linear(3, 4)

        self.batchnorm = nn.BatchNorm2d(4)

# 3. Instantiate the network

mymodel = MyTest()

print(f"mymodel._modules={mymodel._modules}")

print("*" * 10)

for named_modules in mymodel.named_modules():

    print(f"named_modules={named_modules}")

mymodel._modules=OrderedDict([('linear1', Linear(in_features=2, out_features=3, bias=True)), ('linear2', Linear(in_features=3, out_features=4, bias=True)), ('batchnorm', BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True))])

**********

named_modules=('', MyTest(

(linear1): Linear(in_features=2, out_features=3, bias=True)

(linear2): Linear(in_features=3, out_features=4, bias=True)

(batchnorm): BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

))

named_modules=('linear1', Linear(in_features=2, out_features=3, bias=True))

named_modules=('linear2', Linear(in_features=3, out_features=4, bias=True))

named_modules=('batchnorm', BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True))
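MyTest has only one level of children, so the two results above differ just by the entry for the model itself. With nested modules the difference becomes clearer. The sketch below, built on the hypothetical classes `Inner` and `Outer`, compares `_modules` (direct children only), its public counterpart `named_children()`, and `named_modules()` (the module itself plus all descendants, with dotted names).

```python
from torch import nn


class Inner(nn.Module):  # hypothetical nested module
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(2, 2)


class Outer(nn.Module):  # hypothetical top-level module
    def __init__(self):
        super().__init__()
        self.inner = Inner()
        self.out = nn.Linear(2, 1)


net = Outer()
direct = list(net._modules.keys())                        # direct children only
children = [name for name, _ in net.named_children()]     # public equivalent
recursive = [name for name, _ in net.named_modules()]     # self ('') and all descendants
print(direct, children, recursive)
```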