The HiddenLayer library provides a `build_graph()` function that makes it very easy to visualize deep learning networks.

1. AlexNet visualization

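```python
import torch
from torchvision.models.alexnet import AlexNet
import hiddenlayer as hl

model = AlexNet()
print(model)  # print the layer-by-layer structure

# Trace the model with a dummy input (N=3, C=3, H=W=224); only the shape matters
hl_graph = hl.build_graph(model, torch.zeros([3, 3, 224, 224]))
# Use a copy of the built-in 'blue' theme so the shared theme dict is not modified
hl_graph.theme = hl.graph.THEMES['blue'].copy()
# Render the graph to ./AlexNet.png
hl_graph.save('./AlexNet.png', format='png')

print('Have done!')
```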

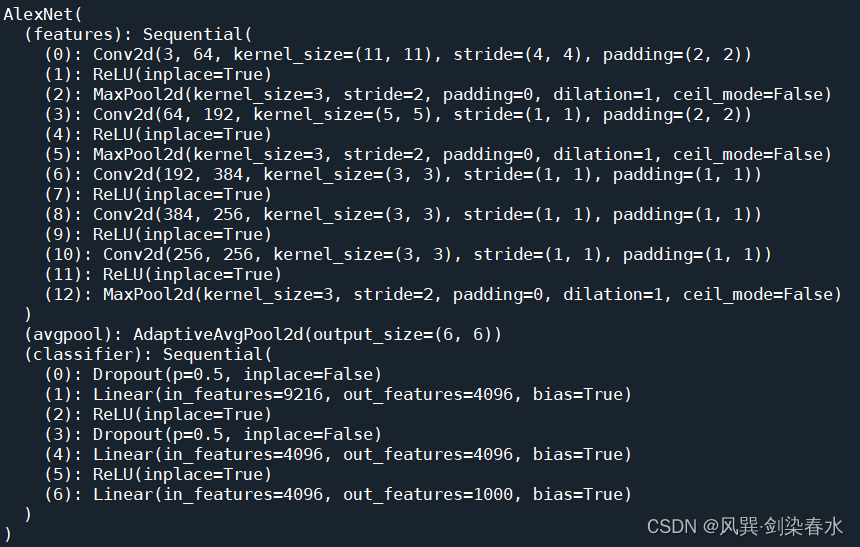

AlexNet structure:

Visualization:

2. ResNet18 visualization

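```python
import torch
from torchvision.models.resnet import resnet18
import hiddenlayer as hl

model = resnet18()
print(model)  # print the layer-by-layer structure

# Trace the model with a dummy input (N=3, C=3, H=W=224); only the shape matters
hl_graph = hl.build_graph(model, torch.zeros([3, 3, 224, 224]))
# Use a copy of the built-in 'blue' theme so the shared theme dict is not modified
hl_graph.theme = hl.graph.THEMES['blue'].copy()
# Render the graph to ./resnet18.png
hl_graph.save('./resnet18.png', format='png')

print('Have done!')
```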

Visualization:

3. ResNeSt visualization

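As the network gets deeper, the visualization becomes very dense. The smallest ResNeSt model is 50 layers deep, so to make the graph less cluttered and the structure easier to read, I defined a custom ResNeSt8 network by changing ResNeSt50's block configuration from [3,4,6,3] to [2,2,2,2].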
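As a rough check on the naming, here is a small sketch that counts weighted layers using the usual ResNet naming convention: one stem convolution, the convolutions inside every residual block, and the final fully connected layer. The helper `count_layers` is hypothetical, and treating ResNeSt's deeper stem as a single layer follows the naming convention rather than the literal number of stem convolutions.

```python
def count_layers(blocks_per_stage, convs_per_block):
    """Depth by the usual ResNet naming convention:
    1 stem conv + convs in all residual blocks + 1 fully connected layer."""
    return 1 + convs_per_block * sum(blocks_per_stage) + 1


# BasicBlock (ResNet18/34) has 2 convs; Bottleneck (ResNet50+/ResNeSt) has 3 (1x1, 3x3, 1x1)
resnet18_depth = count_layers([2, 2, 2, 2], convs_per_block=2)
resnest50_depth = count_layers([3, 4, 6, 3], convs_per_block=3)
custom_depth = count_layers([2, 2, 2, 2], convs_per_block=3)
num_blocks = sum([2, 2, 2, 2])

print(resnet18_depth, resnest50_depth, custom_depth, num_blocks)  # 18 50 26 8
```

By this convention the [2,2,2,2] Bottleneck variant would be a 26-layer network; the "8" in ResNeSt8 presumably counts its 8 Bottleneck blocks, which matches the 8 blocks that show up in its visualization.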

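```python
import torch
# resnest8 is the custom [2,2,2,2] variant described above; it is not part of
# the official resnest package, whose smallest model is resnest50
from resnest.torch import resnest8
import hiddenlayer as hl

model = resnest8()
print(model)  # print the layer-by-layer structure

# Trace the model with a dummy input (N=3, C=3, H=W=224); only the shape matters
hl_graph = hl.build_graph(model, torch.zeros([3, 3, 224, 224]))
# Use a copy of the built-in 'blue' theme so the shared theme dict is not modified
hl_graph.theme = hl.graph.THEMES['blue'].copy()
# Render the graph to ./resnest8.png
hl_graph.save('./resnest8.png', format='png')

print('Have done!')
```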

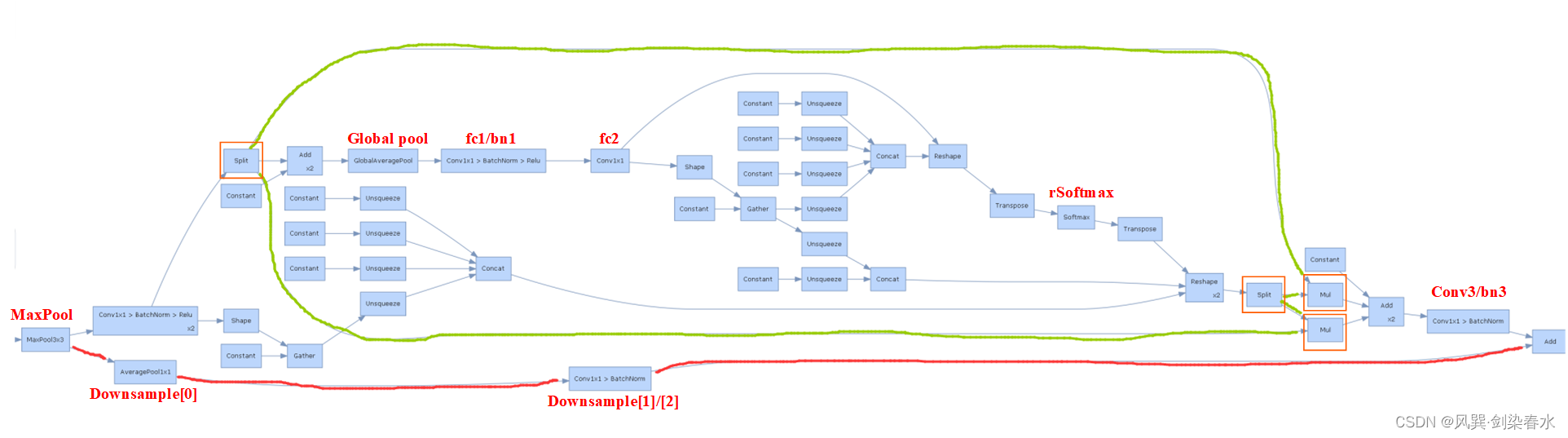

Visualization: the graph shows 8 blocks overall.

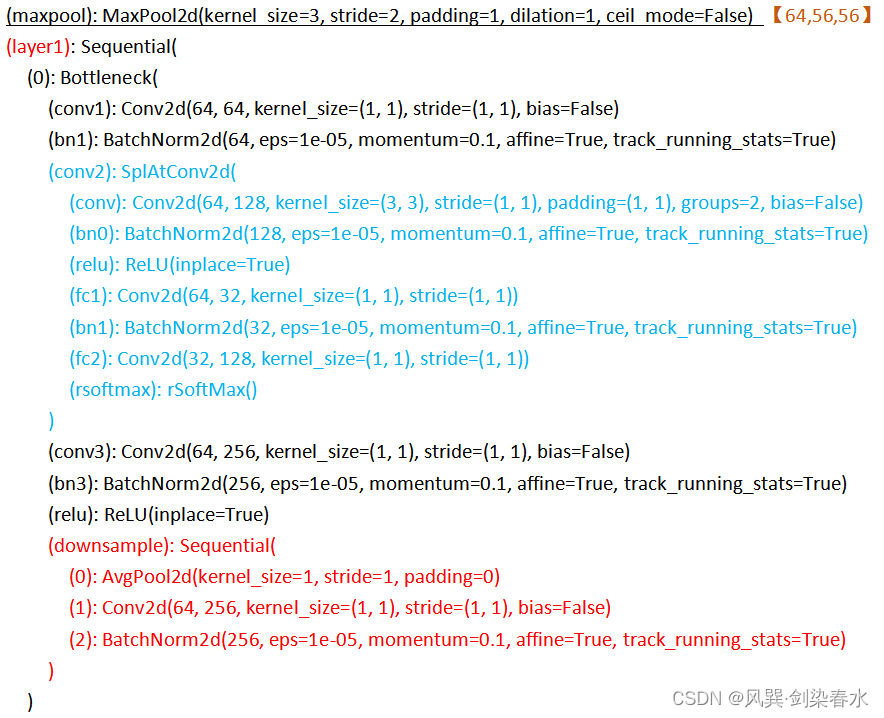

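The ResNeSt network has 4 Layers in total. In each Layer, only the first Bottleneck has a Downsample branch; the remaining Bottlenecks use plain identity residual connections. Because the stem (its stride-2 convolution plus the MaxPool layer before Layer1) has already downsampled the input by a factor of 4, the Downsample in Layer1's first Bottleneck does not actually reduce the spatial resolution; it only projects the shortcut from 64 to 256 channels so it can be added to the block output.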
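The downsampling argument can be checked with the standard output-size formula for convolutions and pooling, ⌊(n + 2p − k)/s⌋ + 1. The sketch below assumes a 224×224 input, a stem modeled as torchvision ResNet's 7×7 stride-2 convolution (ResNeSt uses a deeper stem of 3×3 convolutions, but its overall stride is also 2) followed by a 3×3 stride-2 MaxPool, stage strides of [1, 2, 2, 2], and a Bottleneck expansion of 4. The function names `conv_out` and `trace_stages` are hypothetical.

```python
def conv_out(n, k, s, p):
    """Spatial output size of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1


def trace_stages(size=224, in_ch=64, widths=(64, 128, 256, 512),
                 strides=(1, 2, 2, 2), expansion=4):
    # Stem: stride-2 conv (7x7, pad 3, as in torchvision ResNet) + 3x3 stride-2 max pool
    size = conv_out(size, k=7, s=2, p=3)   # 224 -> 112
    size = conv_out(size, k=3, s=2, p=1)   # 112 -> 56
    stages = []
    for i, (width, s) in enumerate(zip(widths, strides), start=1):
        out_ch = width * expansion
        # The first block of a stage needs a Downsample (shortcut projection)
        # if either the resolution or the channel count changes
        needs_downsample = s != 1 or in_ch != out_ch
        # Stride modeled on the 3x3 step (ResNeSt's avd uses a 3x3 avg pool
        # with the same kernel/stride/padding, so the output size is the same)
        size = conv_out(size, k=3, s=s, p=1)
        stages.append((f"layer{i}", s, in_ch, out_ch, size, needs_downsample))
        in_ch = out_ch
    return stages


for name, stride, cin, cout, size, ds in trace_stages():
    print(f"{name}: stride={stride}, {cin}->{cout} ch, {size}x{size}, downsample={ds}")
```

Layer1 keeps the 56×56 resolution produced by the stem, so its first Downsample branch is purely a channel projection (64 to 256), while Layers 2 to 4 each halve the resolution (28, 14, 7).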

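Zooming in on the first Bottleneck of Layer1: the red lines mark the Downsample branch and the green lines mark the SplAtConv2d module, and the overall structure basically matches the figure in the paper. As for all those `constant` nodes in the graph, well... they puzzle me too. Pointers from anyone who knows are welcome.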

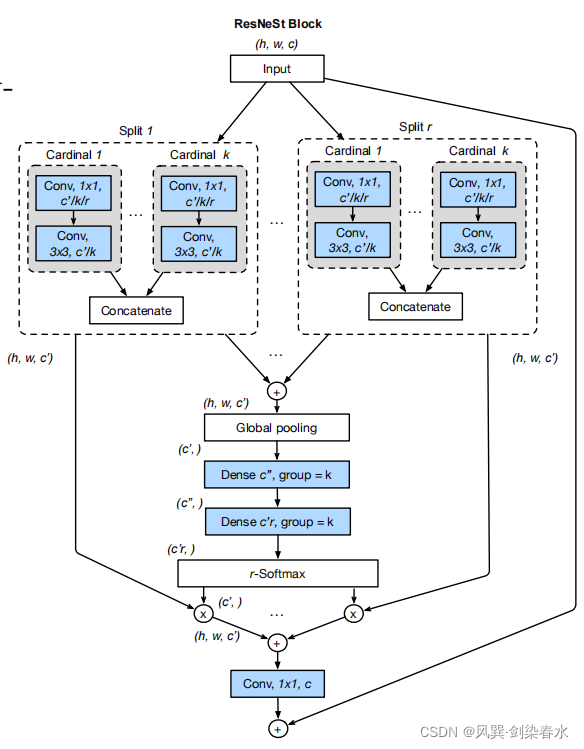
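To make the green SplAtConv2d part easier to read, here is a simplified, pure-Python sketch of the split-attention idea from the ResNeSt paper. It is not the SplAtConv2d implementation: it assumes cardinality 1, omits the grouped convolution that produces the splits, and uses a single hypothetical linear map (`weights`) in place of the paper's two fully connected layers with BN/ReLU. The function name `split_attention` is also hypothetical.

```python
import math


def split_attention(splits, weights):
    """Simplified split attention (radix r, cardinality 1).

    splits:  r feature groups; each is a list of C channels, each channel a
             flat list of H*W activations.
    weights: r hypothetical C x C matrices mapping the pooled descriptor to
             per-channel attention logits for each split.
    """
    r, C = len(splits), len(splits[0])
    hw = len(splits[0][0])
    # 1) Fuse the splits by element-wise sum, then global-average-pool each channel
    pooled = [sum(splits[i][c][p] for i in range(r) for p in range(hw)) / hw
              for c in range(C)]
    # 2) Per-split, per-channel logits from the pooled descriptor
    logits = [[sum(w * x for w, x in zip(weights[i][c], pooled)) for c in range(C)]
              for i in range(r)]
    out = []
    for c in range(C):
        # 3) Softmax across the r splits for this channel (max-subtracted for stability)
        m = max(logits[i][c] for i in range(r))
        exps = [math.exp(logits[i][c] - m) for i in range(r)]
        total = sum(exps)
        attn = [e / total for e in exps]
        # 4) Output channel = attention-weighted sum of the splits
        out.append([sum(attn[i] * splits[i][c][p] for i in range(r)) for p in range(hw)])
    return out


# Two splits (r=2), one channel, two spatial positions.
# Zero weights give uniform attention, so the output is the mean of the splits.
splits = [[[1.0, 3.0]], [[3.0, 5.0]]]
print(split_attention(splits, [[[0.0]], [[0.0]]]))  # [[2.0, 4.0]]
```

The key design point is step 3: the softmax runs across the splits for each channel, so every output channel is a learned convex combination of the corresponding channels from the r splits.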

Figure from the original paper: