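I've been optimizing an LSTM recently, but GPU memory utilization kept fluctuating, so I wanted to see how much GPU memory the LSTM actually uses. After searching around I found a nice open-source tool, so I'm writing it down here to share.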

First, the project URL: https://github.com/Oldpan/Pytorch-Memory-Utils

The author also wrote a blog post about it: https://oldpan.me/archives/pytorch-gpu-memory-usage-track

The repository contains just two Python files, modelsize_estimate.py and gpu_mem_track.py. Copy both of them into your own project directory first.

The author also provides a usage example:

```python
import torch
from torchvision import models

from gpu_mem_track import MemTracker  # gpu_mem_track.py copied from the repository

device = torch.device('cuda:0')

gpu_tracker = MemTracker()  # define a GPU tracker

gpu_tracker.track()  # call track() around the lines that use the GPU
cnn = models.vgg19(pretrained=True).features.to(device).eval()  # newer torchvision prefers weights=models.VGG19_Weights.DEFAULT
gpu_tracker.track()

dummy_tensor_1 = torch.randn(30, 3, 512, 512).float().to(device)  # 30*3*512*512*4/1024/1024 = 90.00M
dummy_tensor_2 = torch.randn(40, 3, 512, 512).float().to(device)  # 40*3*512*512*4/1024/1024 = 120.00M
dummy_tensor_3 = torch.randn(60, 3, 512, 512).float().to(device)  # 60*3*512*512*4/1024/1024 = 180.00M

gpu_tracker.track()

dummy_tensor_4 = torch.randn(120, 3, 512, 512).float().to(device)  # 120*3*512*512*4/1024/1024 = 360.00M
dummy_tensor_5 = torch.randn(80, 3, 512, 512).float().to(device)  # 80*3*512*512*4/1024/1024 = 240.00M

gpu_tracker.track()

# Move two tensors back to the CPU, then release the cached GPU blocks
dummy_tensor_4 = dummy_tensor_4.cpu()
dummy_tensor_2 = dummy_tensor_2.cpu()
gpu_tracker.clear_cache()  # or torch.cuda.empty_cache()

gpu_tracker.track()
```

Usage is simple: add `gpu_tracker.track()` before and after the code whose GPU memory usage you want to inspect.
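The size comments in the example are just element count × 4 bytes (float32) ÷ 1024². The small pure-Python helper below reproduces them, so you can predict what `track()` should report for a new tensor before running anything on the GPU. The name `tensor_mib` is hypothetical and not part of Pytorch-Memory-Utils.

```python
from math import prod

BYTES_PER_FLOAT32 = 4  # torch.float32 stores 4 bytes per element


def tensor_mib(shape, bytes_per_elem=BYTES_PER_FLOAT32):
    """Memory of one dense tensor with the given shape, in MiB (1024 * 1024 bytes)."""
    return prod(shape) * bytes_per_elem / 1024 / 1024


# Shapes of the dummy tensors used in the example above
dummy_shapes = {
    "dummy_tensor_1": (30, 3, 512, 512),
    "dummy_tensor_2": (40, 3, 512, 512),
    "dummy_tensor_3": (60, 3, 512, 512),
    "dummy_tensor_4": (120, 3, 512, 512),
    "dummy_tensor_5": (80, 3, 512, 512),
}

for name, shape in dummy_shapes.items():
    print(f"{name}: {tensor_mib(shape):.2f} MiB")
```

For dtypes other than float32, pass the bytes per element explicitly; on a real tensor, `torch.Tensor.element_size()` returns that value.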

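```python
gpu_tracker.track()
cnn = models.vgg19(pretrained=True).to(device)  # load VGG19 and move its weights into GPU memory
gpu_tracker.track()
```

You can then see how GPU memory changes while the program runs (the first line is the memory before loading, the last line the memory after loading):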

```text
At __main__ <module>: line 13 Total Used Memory:472.2 Mb
+ | 1 * Size:(128, 64, 3, 3) | Memory: 0.2949 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(256, 128, 3, 3) | Memory: 1.1796 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(64, 64, 3, 3) | Memory: 0.1474 M | <class 'torch.nn.parameter.Parameter'>
+ | 2 * Size:(4096,) | Memory: 0.0327 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(512, 256, 3, 3) | Memory: 4.7185 M | <class 'torch.nn.parameter.Parameter'>
+ | 2 * Size:(128,) | Memory: 0.0010 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(1000, 4096) | Memory: 16.384 M | <class 'torch.nn.parameter.Parameter'>
+ | 6 * Size:(512,) | Memory: 0.0122 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(64, 3, 3, 3) | Memory: 0.0069 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(4096, 25088) | Memory: 411.04 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(4096, 4096) | Memory: 67.108 M | <class 'torch.nn.parameter.Parameter'>
+ | 5 * Size:(512, 512, 3, 3) | Memory: 47.185 M | <class 'torch.nn.parameter.Parameter'>
+ | 2 * Size:(64,) | Memory: 0.0005 M | <class 'torch.nn.parameter.Parameter'>
+ | 3 * Size:(256,) | Memory: 0.0030 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(128, 128, 3, 3) | Memory: 0.5898 M | <class 'torch.nn.parameter.Parameter'>
+ | 2 * Size:(256, 256, 3, 3) | Memory: 4.7185 M | <class 'torch.nn.parameter.Parameter'>
+ | 1 * Size:(1000,) | Memory: 0.004 M | <class 'torch.nn.parameter.Parameter'>
At __main__ <module>: line 15 Total Used Memory:1387.5 Mb
```

1387.5 - 472.2 = 915.3 MB is the memory taken by loading the model. Anyone familiar with VGG19 will know that the weights of all its layers add up to roughly 548 MB, yet 915.3 MB is used here. Adding up the Memory column of the report above gives about 553 MB, which is in line with the size of the model itself. So why is the difference between the two printouts so much larger?
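The three numbers in that paragraph can be checked without a GPU. The sketch below is plain Python: `VGG19_CFG` is the standard VGG19 layer layout (sixteen 3x3 convolutions plus a 25088 → 4096 → 4096 → 1000 classifier), and the helper names are hypothetical. The report column is copied as printed; judging from `(128, 64, 3, 3) -> 0.2949`, its unit appears to be 10^6 bytes, while the model size is computed in MiB.

```python
# "Memory" column of the VGG19 report, copied as printed
REPORT_MEMORY = [
    0.2949, 1.1796, 0.1474, 0.0327, 4.7185, 0.0010, 16.384, 0.0122, 0.0069,
    411.04, 67.108, 47.185, 0.0005, 0.0030, 0.5898, 4.7185, 0.004,
]

# Standard VGG19 conv output channels; "M" is a max-pool layer (no parameters)
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def conv3x3_params(c_in, c_out):
    """Weights plus bias of a 3x3 convolution."""
    return c_in * c_out * 3 * 3 + c_out


def linear_params(n_in, n_out):
    """Weights plus bias of a fully connected layer."""
    return n_in * n_out + n_out


def vgg19_param_count():
    total, c_in = 0, 3  # RGB input
    for v in VGG19_CFG:
        if v == "M":
            continue
        total += conv3x3_params(c_in, v)
        c_in = v
    # Classifier: 512 * 7 * 7 = 25088 -> 4096 -> 4096 -> 1000
    total += linear_params(512 * 7 * 7, 4096)
    total += linear_params(4096, 4096)
    total += linear_params(4096, 1000)
    return total


n_params = vgg19_param_count()
model_mib = n_params * 4 / 1024 / 1024  # float32: 4 bytes per parameter
report_total = sum(REPORT_MEMORY)
delta = 1387.5 - 472.2  # growth between the two track() readings

print(f"VGG19 parameters: {n_params:,} ({model_mib:.1f} MiB as float32)")
print(f"sum of the report's Memory column: {report_total:.1f} M")
print(f"growth between the two track() calls: {delta:.1f} Mb")
```

Both tensor-based figures land around 550, while the observed growth is about 915, leaving roughly 360 MB that the listed tensors do not account for.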

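The author's explanation is that PyTorch needs some extra GPU memory when the program starts running, and this overhead is unrelated to the size of the model weights we actually use.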

That more or less makes sense.

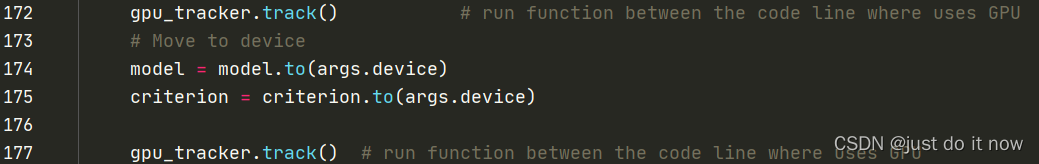

Below is my own test, with a two-layer LSTM model.

The printed output:

```text
At train-mul.py line 172: main Total Tensor Used Memory:0.0 Mb Total Allocated Memory:0.0 Mb
+ | 2 * Size:(180, 60) | Memory: 0.0823 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 1 * Size:(352504, 300) | Memory: 403.40 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 4 * Size:(240,) | Memory: 0.0036 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 4 * Size:(180,) | Memory: 0.0027 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 1 * Size:(11, 120) | Memory: 0.0050 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 2 * Size:(180, 160) | Memory: 0.2197 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 2 * Size:(1, 256) | Memory: 0.0019 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 2 * Size:(240, 80) | Memory: 0.1464 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 2 * Size:(256,) | Memory: 0.0019 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 1 * Size:(256, 160) | Memory: 0.1562 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 1 * Size:(11,) | Memory: 4.1961 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 2 * Size:(240, 300) | Memory: 0.5493 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
+ | 1 * Size:(256, 120) | Memory: 0.1171 M | <class 'torch.nn.parameter.Parameter'> | torch.float32
At train-mul.py line 177: main Total Tensor Used Memory:404.7 Mb Total Allocated Memory:405.3 Mb
```

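Adding up the Memory column as printed gives about 408.88 M, while the difference between the two printouts is 405.3M - 0M = 405.3M. At first this looks strange, since the difference should be a little larger than the tensor total, not smaller.

The culprit is the (11,) entry. An 11-element float32 tensor is only 44 bytes, about 4.1961e-05 M, not 4.1961 M; the printed number appears to have lost its exponent. Without that spurious 4.2 M, the tensors add up to about 404.7 M, which matches the report's own "Total Tensor Used Memory:404.7 Mb", and the 405.3 M difference is then slightly larger than the tensor total, as expected.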
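To double-check this, the sketch below recomputes every row of the LSTM report from its shape rather than from the printed Memory value, then reproduces how "4.1961" can come out of a 44-byte tensor. `LSTM_REPORT` and `mib` are illustrative names. The six-character truncation of `str()` is an assumption inferred from the printed values (it also explains why `0.08239...` is shown as `0.0823` rather than rounded), not something confirmed from the tool's source.

```python
from math import prod

# (count, shape) pairs from the two-layer LSTM report; every tensor is float32
LSTM_REPORT = [
    (2, (180, 60)), (1, (352504, 300)), (4, (240,)), (4, (180,)),
    (1, (11, 120)), (2, (180, 160)), (2, (1, 256)), (2, (240, 80)),
    (2, (256,)), (1, (256, 160)), (1, (11,)), (2, (240, 300)), (1, (256, 120)),
]


def mib(count, shape, bytes_per_elem=4):
    """Memory of `count` dense tensors of `shape`, in MiB."""
    return count * prod(shape) * bytes_per_elem / 1024 / 1024


total = sum(mib(c, s) for c, s in LSTM_REPORT)

# The (11,) parameter is 44 bytes, i.e. about 4.1961e-05 MiB. Keeping only the
# first six characters of str() (assumed formatting) drops the exponent.
tiny = mib(1, (11,))
print(f"{tiny!r} -> {str(tiny)[:6]}")

# The same truncation matches other rows, e.g. (180, 60) x2 is printed as 0.0823
print(str(mib(2, (180, 60)))[:6])

print(f"sum of all tensors: {total:.1f} MiB")  # compare with 404.7 Mb in the report
```

Computing sizes from shapes like this is a cheap sanity check whenever a printed total looks inconsistent with the per-tensor lines.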