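I used to think FFmpeg was far more capable than OpenCV and didn't want to touch OpenCV. Later I found that OpenCV does have its uses, mainly in image processing, for example face detection.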

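Today I cut a 10-second clip from the TV series 美人心计 (Schemes of a Beauty) and read it frame by frame. Each frame is first converted from YUV420 to RGB. OpenCV then finds the position, width and height of every face in the RGB image, and the drawbox filter draws a box around each face. The result is shown in the screenshot below.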

Later I plan to add more attributes, such as gender and age, inside the red box.
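The YUV420 to RGB step relies on the I420 (YUV420P) memory layout: a full-resolution Y plane followed by quarter-size U and V planes, so one frame occupies width × height × 3/2 bytes, and OpenCV's `COLOR_YUV2RGB_I420` expects those planes stacked into one single-channel image that is height × 3/2 rows tall. A decoded `AVFrame` may pad each row (`linesize` can exceed the width), so the planes have to be packed first. The following is a minimal plain-C++ sketch of that packing, assuming even frame dimensions and 8-bit samples; the `Plane` struct and the `I420FrameSize`, `CopyPlane` and `PackI420` names are illustrative and not part of FFmpeg or OpenCV.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical view of one decoded plane: base pointer plus bytes per row
// (mirrors AVFrame::data[i] and AVFrame::linesize[i]).
struct Plane {
    const uint8_t* data;
    int linesize;
};

// Size in bytes of one tightly packed I420 frame (Y + U + V).
inline size_t I420FrameSize(int width, int height) {
    assert(width % 2 == 0 && height % 2 == 0);  // sketch assumes even dimensions
    return static_cast<size_t>(width) * height * 3 / 2;
}

// Copies `rows` rows of `rowBytes` bytes from a (possibly padded) plane into dst,
// dropping the padding, and returns the pointer just past the copied bytes.
static uint8_t* CopyPlane(uint8_t* dst, const Plane& src, int rowBytes, int rows) {
    for (int r = 0; r < rows; ++r) {
        std::memcpy(dst, src.data + static_cast<std::ptrdiff_t>(r) * src.linesize, rowBytes);
        dst += rowBytes;
    }
    return dst;
}

// Packs Y, U and V into one contiguous I420 buffer, the layout that
// cv::COLOR_YUV2RGB_I420 expects in a (height * 3 / 2) x width CV_8UC1 Mat.
std::vector<uint8_t> PackI420(const Plane& y, const Plane& u, const Plane& v,
                              int width, int height) {
    std::vector<uint8_t> out(I420FrameSize(width, height));
    uint8_t* p = out.data();
    p = CopyPlane(p, y, width, height);          // full-resolution luma
    p = CopyPlane(p, u, width / 2, height / 2);  // quarter-size chroma (U)
    CopyPlane(p, v, width / 2, height / 2);      // quarter-size chroma (V)
    return out;
}
```

For a 1920x1080 frame this gives 3,110,400 bytes, which is also what `av_image_get_buffer_size(AV_PIX_FMT_YUV420P, 1920, 1080, 1)` reports; the per-plane loops in `VideoARead` and the stacking in `CopyYUVToImage` below do the same job.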

Some brief notes:

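1. I converted the video to 10 fps beforehand; the code hard-codes a 1/10 time base for both the filter input and the encoder.

2. I connected the filters with avfilter_link. With many filters, wiring them one link at a time gets tedious, and avfilter_graph_parse_ptr, which builds the chain from a single text description, may be more convenient.

3. The code draws boxes for at most 10 faces per frame.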
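Since `avfilter_graph_parse_ptr` parses a textual filtergraph, the whole chain of drawbox instances can be written as one comma-separated description instead of one `avfilter_link` call per pair. The sketch below only builds that string; `FaceBox` and `BuildDrawBoxChain` are hypothetical names, and the default cap of 10 mirrors note 3. When no face is found it emits a single drawbox disabled through the generic timeline option `enable=0`, because drawbox treats `w=0`/`h=0` as the full input size and would outline the whole frame. Connecting the result to the buffer source and buffersink (through `AVFilterInOut` lists) is not shown.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical face rectangle (same fields as cv::Rect).
struct FaceBox {
    int x, y, width, height;
};

// Builds a filter description such as
// "drawbox=x=10:y=20:w=30:h=40:c=red,drawbox=..." with at most maxFaces boxes.
std::string BuildDrawBoxChain(const std::vector<FaceBox>& faces, size_t maxFaces = 10) {
    const size_t count = std::min(faces.size(), maxFaces);
    if (count == 0) {
        // Keep the chain non-empty, but disable its only drawbox for this frame.
        return "drawbox=enable=0";
    }
    std::string desc;
    for (size_t i = 0; i < count; ++i) {
        const FaceBox& f = faces[i];
        assert(f.width > 0 && f.height > 0);  // detector rectangles are never empty
        if (!desc.empty()) desc += ',';       // ',' chains filters in a filtergraph string
        desc += "drawbox=x=" + std::to_string(f.x) + ":y=" + std::to_string(f.y) +
                ":w=" + std::to_string(f.width) + ":h=" + std::to_string(f.height) +
                ":c=red";
    }
    return desc;
}
```

One text description also means the number of boxes can change from frame to frame without touching any linking code.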

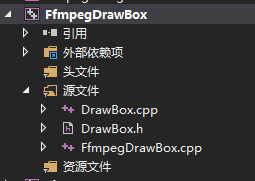

The project consists of three files: FfmpegDrawBox.cpp (the entry point), DrawBox.h and DrawBox.cpp.

FfmpegDrawBox.cpp:

```cpp
#include <iostream>
#include <vector>
#include "DrawBox.h"

// Link the FFmpeg import libraries (MSVC only)
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "avutil.lib")
#pragma comment(lib, "avdevice.lib")
#pragma comment(lib, "avfilter.lib")
#pragma comment(lib, "postproc.lib")
#pragma comment(lib, "swresample.lib")
#pragma comment(lib, "swscale.lib")

// Converts a string from the system ANSI code page (CP_ACP) to UTF-8.
// Despite its name the input is not Unicode. main() does not call it.
std::string Unicode_to_Utf8(const std::string &str)
{
    int nwLen = ::MultiByteToWideChar(CP_ACP, 0, str.c_str(), -1, NULL, 0);
    wchar_t *pwBuf = new wchar_t[nwLen + 1]; // the +1 is required, otherwise garbage trails the string
    ZeroMemory(pwBuf, (nwLen + 1) * sizeof(wchar_t));
    ::MultiByteToWideChar(CP_ACP, 0, str.c_str(), (int)str.length(), pwBuf, nwLen);

    int nLen = ::WideCharToMultiByte(CP_UTF8, 0, pwBuf, -1, NULL, 0, NULL, NULL);
    char *pBuf = new char[nLen + 1];
    ZeroMemory(pBuf, nLen + 1);
    ::WideCharToMultiByte(CP_UTF8, 0, pwBuf, nwLen, pBuf, nLen, NULL, NULL);

    std::string retStr(pBuf);
    delete[] pwBuf;
    delete[] pBuf;
    return retStr;
}

int main()
{
    CDrawBox cCDrawBox;
    const char *pFileA = "E:\\learn\\ffmpeg\\convert\\04_10framerate.mp4";
    const char *pFileOut = "E:\\learn\\ffmpeg\\convert\\04_10framerate_result.mp4";

    if (cCDrawBox.StartDrawBox(pFileA, pFileOut) != 0)
    {
        std::cout << "StartDrawBox failed" << std::endl;
        return -1;
    }
    cCDrawBox.WaitFinish();
    return 0;
}
```

DrawBox.h:

```cpp
#pragma once

#include <Windows.h>
#include <string>
#include <vector>

#ifdef __cplusplus
extern "C"
{
#endif
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
#include "libswresample/swresample.h"
#include "libavdevice/avdevice.h"
#include "libavutil/audio_fifo.h"
#include "libavutil/avutil.h"
#include "libavutil/fifo.h"
#include "libavutil/frame.h"
#include "libavutil/imgutils.h"
#include "libavutil/opt.h"
#include "libavfilter/avfilter.h"
#include "libavfilter/buffersink.h"
#include "libavfilter/buffersrc.h"
#ifdef __cplusplus
}
#endif

#include "opencv2/objdetect/objdetect.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"

using namespace std;
using namespace cv;

class CDrawBox
{
public:
    CDrawBox();
    ~CDrawBox();

public:
    // Opens input and output, then starts the reader and draw threads
    int StartDrawBox(const char *pFileA, const char *pFileOut);
    // Blocks until both threads have finished
    int WaitFinish();

private:
    int OpenFileA(const char *pFileA);
    int OpenOutPut(const char *pFileOut);
    // Builds a buffer -> drawbox x N -> buffersink graph for one frame
    int InitFilter(std::vector<Rect> &facesRect);
    void UnInitFilter();

private:
    // Reader thread: demux + decode, pushes YUV420P frames into m_pVideoAFifo
    static DWORD WINAPI VideoAReadProc(LPVOID lpParam);
    void VideoARead();
    // Draw thread: face detection + drawbox filtering + encoding
    static DWORD WINAPI VideoDrawBoxProc(LPVOID lpParam);
    void VideoDrawBox();

private:
    AVFormatContext *m_pFormatCtx_FileA = NULL;
    AVCodecContext *m_pReadCodecCtx_VideoA = NULL;
    AVCodec *m_pReadCodec_VideoA = NULL;
    AVCodecContext *m_pCodecEncodeCtx_Video = NULL;
    AVFormatContext *m_pFormatCtx_Out = NULL;
    AVFifoBuffer *m_pVideoAFifo = NULL;     // decoded frames waiting to be processed

    int m_iVideoWidth = 1920;
    int m_iVideoHeight = 1080;
    int m_iYuv420FrameSize = 0;             // bytes in one packed YUV420P frame
    int m_iFaceCount = 0;                   // faces boxed in the current frame (at most 10)

private:
    AVFilterGraph *m_pFilterGraph = NULL;
    AVFilterContext *m_pFilterCtxSrcVideoA = NULL;
    AVFilterContext *m_pFilterCtxDrawBox[10] = { NULL }; // one drawbox per face, at most 10
    AVFilterContext *m_pFilterCtxSink = NULL;

private:
    CRITICAL_SECTION m_csVideoASection;     // guards m_pVideoAFifo
    HANDLE m_hVideoAReadThread = NULL;
    HANDLE m_hVideoDrawTextThread = NULL;

private:
    CascadeClassifier m_cpufaceCascade;
};
```
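The two threads hand frames over through a 30-frame `AVFifoBuffer` guarded by a `CRITICAL_SECTION`, and both sides poll with `Sleep()`. As an alternative to that design (not what this post's code does), the same bounded producer/consumer hand-off can be written with condition variables so that neither thread polls. This is a sketch in standard C++11 only; `BoundedFrameQueue` is a hypothetical name, and each frame is modelled as a byte vector holding one packed YUV420P picture.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical bounded queue of packed YUV420P frames shared by a reader
// (producer) thread and a draw/encode (consumer) thread.
class BoundedFrameQueue {
public:
    explicit BoundedFrameQueue(size_t capacity) : capacity_(capacity) {
        assert(capacity_ > 0);  // a zero-capacity queue would block Push forever
    }

    // Blocks while the queue is full. Returns false if the queue has been closed.
    bool Push(std::vector<uint8_t> frame) {
        std::unique_lock<std::mutex> lock(mutex_);
        notFull_.wait(lock, [this] { return closed_ || frames_.size() < capacity_; });
        if (closed_) return false;
        frames_.push_back(std::move(frame));
        notEmpty_.notify_one();
        return true;
    }

    // Blocks until a frame is available. Returns false only when the queue is
    // closed and fully drained, which tells the consumer to stop.
    bool Pop(std::vector<uint8_t>& frame) {
        std::unique_lock<std::mutex> lock(mutex_);
        notEmpty_.wait(lock, [this] { return closed_ || !frames_.empty(); });
        if (frames_.empty()) return false;
        frame = std::move(frames_.front());
        frames_.pop_front();
        notFull_.notify_one();
        return true;
    }

    // The producer calls this after its last Push; the consumer may call it on
    // error so that a producer blocked in Push wakes up and gives up.
    void Close() {
        std::lock_guard<std::mutex> lock(mutex_);
        closed_ = true;
        notEmpty_.notify_all();
        notFull_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable notFull_, notEmpty_;
    std::deque<std::vector<uint8_t>> frames_;
    const size_t capacity_;
    bool closed_ = false;
};
```

With `BoundedFrameQueue queue(30)`, the reader would call `Push` once per decoded frame and `Close` at end of input, and the draw thread would loop on `Pop` until it returns false.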

DrawBox.cpp:

```cpp
#include "DrawBox.h"

// Upper bound on boxed faces per frame; must match the size of m_pFilterCtxDrawBox in DrawBox.h
static const int kMaxFaceCount = 10;

// Stacks the Y, U and V planes of one YUV420P frame into a single buffer
// (the I420 layout that COLOR_YUV2RGB_I420 expects)
static void CopyYUVToImage(uchar *dst, uint8_t *pY, uint8_t *pU, uint8_t *pV, int width, int height)
{
    uint32_t size = width * height;
    memcpy(dst, pY, size);
    memcpy(dst + size, pU, size / 4);
    memcpy(dst + size + size / 4, pV, size / 4);
}

// Sends one frame to the encoder (NULL flushes it) and writes every packet it returns.
// Packet timestamps are converted from the encoder time base to the stream time base.
static int EncodeAndWrite(AVCodecContext *pEncCtx, AVFormatContext *pOutCtx, AVFrame *pFrame)
{
    int ret = avcodec_send_frame(pEncCtx, pFrame);
    if (ret < 0)
        return ret;

    AVPacket *pPacket = av_packet_alloc();
    if (pPacket == NULL)
        return AVERROR(ENOMEM);
    while ((ret = avcodec_receive_packet(pEncCtx, pPacket)) >= 0)
    {
        av_packet_rescale_ts(pPacket, pEncCtx->time_base, pOutCtx->streams[0]->time_base);
        pPacket->stream_index = 0;
        ret = av_write_frame(pOutCtx, pPacket);
        av_packet_unref(pPacket);
        if (ret < 0)
            break;
    }
    av_packet_free(&pPacket);
    // EAGAIN (encoder needs more input) and EOF (fully flushed) are normal outcomes
    return (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) ? 0 : ret;
}

CDrawBox::CDrawBox()
{
    InitializeCriticalSection(&m_csVideoASection);
}

CDrawBox::~CDrawBox()
{
    WaitFinish();
    UnInitFilter();
    if (m_pVideoAFifo != NULL)
    {
        av_fifo_free(m_pVideoAFifo);
        m_pVideoAFifo = NULL;
    }
    avcodec_free_context(&m_pReadCodecCtx_VideoA);
    avformat_close_input(&m_pFormatCtx_FileA);
    avcodec_free_context(&m_pCodecEncodeCtx_Video);
    if (m_pFormatCtx_Out != NULL)
    {
        if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
            avio_closep(&m_pFormatCtx_Out->pb);
        avformat_free_context(m_pFormatCtx_Out);
        m_pFormatCtx_Out = NULL;
    }
    DeleteCriticalSection(&m_csVideoASection);
}

int CDrawBox::StartDrawBox(const char *pFileA, const char *pFileOut)
{
    int ret = -1;
    do
    {
        // Profile-face cascade; haarcascade_frontalface_alt.xml detects frontal faces instead
        //const string path = "D:\\opencv\\build\\etc\\haarcascades\\haarcascade_frontalface_alt.xml";
        const string path = "D:\\opencv\\build\\etc\\haarcascades\\haarcascade_profileface.xml";
        if (!m_cpufaceCascade.load(path))
            break;

        ret = OpenFileA(pFileA);
        if (ret != 0)
            break;

        ret = OpenOutPut(pFileOut);
        if (ret != 0)
            break;

        m_iYuv420FrameSize = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, m_iVideoWidth, m_iVideoHeight, 1);

        // FIFO large enough to buffer 30 decoded frames
        m_pVideoAFifo = av_fifo_alloc(30 * m_iYuv420FrameSize);
        if (m_pVideoAFifo == NULL)
        {
            ret = AVERROR(ENOMEM);
            break;
        }

        m_hVideoAReadThread = CreateThread(NULL, 0, VideoAReadProc, this, 0, NULL);
        m_hVideoDrawTextThread = CreateThread(NULL, 0, VideoDrawBoxProc, this, 0, NULL);
    } while (0);
    return ret;
}

int CDrawBox::WaitFinish()
{
    // The draw thread exits only after the reader has exited and the FIFO is drained,
    // and it polls the reader handle, so join it first and close the reader handle last
    if (m_hVideoDrawTextThread != NULL)
    {
        WaitForSingleObject(m_hVideoDrawTextThread, INFINITE);
        CloseHandle(m_hVideoDrawTextThread);
        m_hVideoDrawTextThread = NULL;
    }
    if (m_hVideoAReadThread != NULL)
    {
        WaitForSingleObject(m_hVideoAReadThread, INFINITE);
        CloseHandle(m_hVideoAReadThread);
        m_hVideoAReadThread = NULL;
    }
    return 0;
}

int CDrawBox::OpenFileA(const char *pFileA)
{
    int ret = -1;
    do
    {
        if ((ret = avformat_open_input(&m_pFormatCtx_FileA, pFileA, NULL, NULL)) < 0)
        {
            printf("Could not open input file.\n");
            break;
        }
        if ((ret = avformat_find_stream_info(m_pFormatCtx_FileA, NULL)) < 0)
        {
            printf("Failed to retrieve input stream information.\n");
            break;
        }

        // ret holds a success value here; every failure below must report -1
        ret = -1;

        // The code assumes the video is stream 0
        if (m_pFormatCtx_FileA->nb_streams == 0 ||
            m_pFormatCtx_FileA->streams[0]->codecpar->codec_type != AVMEDIA_TYPE_VIDEO)
            break;
        AVStream *pStream = m_pFormatCtx_FileA->streams[0];

        m_pReadCodec_VideoA = (AVCodec *)avcodec_find_decoder(pStream->codecpar->codec_id);
        if (m_pReadCodec_VideoA == NULL)
            break;
        m_pReadCodecCtx_VideoA = avcodec_alloc_context3(m_pReadCodec_VideoA);
        if (m_pReadCodecCtx_VideoA == NULL)
            break;
        if (avcodec_parameters_to_context(m_pReadCodecCtx_VideoA, pStream->codecpar) < 0)
            break;

        // The Y/U/V copy and the FIFO frame size assume 8-bit 4:2:0 planar input
        if (m_pReadCodecCtx_VideoA->pix_fmt != AV_PIX_FMT_YUV420P &&
            m_pReadCodecCtx_VideoA->pix_fmt != AV_PIX_FMT_YUVJ420P)
        {
            printf("Only yuv420p input is supported.\n");
            break;
        }

        m_iVideoWidth = m_pReadCodecCtx_VideoA->width;
        m_iVideoHeight = m_pReadCodecCtx_VideoA->height;
        m_pReadCodecCtx_VideoA->framerate = pStream->r_frame_rate;

        if (avcodec_open2(m_pReadCodecCtx_VideoA, m_pReadCodec_VideoA, NULL) < 0)
            break;

        ret = 0;
    } while (0);
    return ret;
}

int CDrawBox::OpenOutPut(const char *pFileOut)
{
    int iRet = -1;
    AVStream *pVideoStream = NULL;
    do
    {
        avformat_alloc_output_context2(&m_pFormatCtx_Out, NULL, NULL, pFileOut);
        if (m_pFormatCtx_Out == NULL)
            break;

        // Default video codec of the container (H.264 for .mp4 when libx264 is available)
        AVCodec *pCodecEncode_Video = (AVCodec *)avcodec_find_encoder(m_pFormatCtx_Out->oformat->video_codec);
        if (pCodecEncode_Video == NULL)
            break;
        m_pCodecEncodeCtx_Video = avcodec_alloc_context3(pCodecEncode_Video);
        if (!m_pCodecEncodeCtx_Video)
            break;
        pVideoStream = avformat_new_stream(m_pFormatCtx_Out, pCodecEncode_Video);
        if (!pVideoStream)
            break;

        int frameRate = 10; // the input was converted to 10 fps beforehand
        m_pCodecEncodeCtx_Video->flags |= AV_CODEC_FLAG_QSCALE;
        m_pCodecEncodeCtx_Video->bit_rate = 4000000;
        m_pCodecEncodeCtx_Video->rc_min_rate = 4000000;
        m_pCodecEncodeCtx_Video->rc_max_rate = 4000000;
        m_pCodecEncodeCtx_Video->bit_rate_tolerance = 4000000;
        m_pCodecEncodeCtx_Video->time_base.num = 1;
        m_pCodecEncodeCtx_Video->time_base.den = frameRate;
        m_pCodecEncodeCtx_Video->width = m_iVideoWidth;
        m_pCodecEncodeCtx_Video->height = m_iVideoHeight;
        m_pCodecEncodeCtx_Video->gop_size = 12;
        m_pCodecEncodeCtx_Video->max_b_frames = 0;
        m_pCodecEncodeCtx_Video->thread_count = 4;
        m_pCodecEncodeCtx_Video->pix_fmt = AV_PIX_FMT_YUV420P;

        // libx264 private options
        av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "b-pyramid", "none", 0);
        av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "preset", "superfast", 0);
        av_opt_set(m_pCodecEncodeCtx_Video->priv_data, "tune", "zerolatency", 0);

        if (m_pFormatCtx_Out->oformat->flags & AVFMT_GLOBALHEADER)
            m_pCodecEncodeCtx_Video->flags |= AV_CODEC_FLAG_GLOBAL_HEADER;

        if (avcodec_open2(m_pCodecEncodeCtx_Video, pCodecEncode_Video, NULL) < 0)
            break; // failed to open the encoder

        if (avcodec_parameters_from_context(pVideoStream->codecpar, m_pCodecEncodeCtx_Video) < 0)
            break;
        // Only a hint: avformat_write_header() may replace the stream time base
        pVideoStream->time_base = m_pCodecEncodeCtx_Video->time_base;

        if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
        {
            if (avio_open(&m_pFormatCtx_Out->pb, pFileOut, AVIO_FLAG_WRITE) < 0)
                break;
        }
        if (avformat_write_header(m_pFormatCtx_Out, NULL) < 0)
            break;

        iRet = 0;
    } while (0);

    if (iRet != 0)
    {
        avcodec_free_context(&m_pCodecEncodeCtx_Video);
        if (m_pFormatCtx_Out != NULL)
        {
            if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
                avio_closep(&m_pFormatCtx_Out->pb);
            avformat_free_context(m_pFormatCtx_Out);
            m_pFormatCtx_Out = NULL;
        }
    }
    return iRet;
}

DWORD WINAPI CDrawBox::VideoAReadProc(LPVOID lpParam)
{
    CDrawBox *pDrawBox = (CDrawBox *)lpParam;
    if (pDrawBox != NULL)
        pDrawBox->VideoARead();
    return 0;
}

void CDrawBox::VideoARead()
{
    AVFrame *pFrame = av_frame_alloc();
    AVPacket *pPacket = av_packet_alloc();
    int y_size = m_iVideoWidth * m_iVideoHeight;
    uint8_t *pY = new uint8_t[y_size];
    uint8_t *pU = new uint8_t[y_size / 4];
    uint8_t *pV = new uint8_t[y_size / 4];
    bool bFlushing = false;
    int ret = 0;

    while (1)
    {
        ret = av_read_frame(m_pFormatCtx_FileA, pPacket);
        if (ret == AVERROR(EAGAIN))
            continue;
        if (ret < 0)
        {
            // End of file (or read error): a NULL packet makes the decoder return its buffered frames
            bFlushing = true;
            ret = avcodec_send_packet(m_pReadCodecCtx_VideoA, NULL);
        }
        else if (pPacket->stream_index != 0)
        {
            // Skip audio and any other non-video packets
            av_packet_unref(pPacket);
            continue;
        }
        else
        {
            ret = avcodec_send_packet(m_pReadCodecCtx_VideoA, pPacket);
            av_packet_unref(pPacket);
        }
        if (ret < 0)
            break;

        // One packet can yield zero or more frames
        while ((ret = avcodec_receive_frame(m_pReadCodecCtx_VideoA, pFrame)) >= 0)
        {
            // Copy row by row: linesize may be larger than the visible width
            for (int i = 0; i < m_iVideoHeight; i++)
                memcpy(pY + i * m_iVideoWidth, pFrame->data[0] + i * pFrame->linesize[0], m_iVideoWidth);
            for (int i = 0; i < m_iVideoHeight / 2; i++)
            {
                memcpy(pU + i * (m_iVideoWidth / 2), pFrame->data[1] + i * pFrame->linesize[1], m_iVideoWidth / 2);
                memcpy(pV + i * (m_iVideoWidth / 2), pFrame->data[2] + i * pFrame->linesize[2], m_iVideoWidth / 2);
            }
            av_frame_unref(pFrame);

            // Wait until the FIFO has room for a whole frame, then append Y, U and V
            while (1)
            {
                EnterCriticalSection(&m_csVideoASection);
                bool bHasSpace = av_fifo_space(m_pVideoAFifo) >= m_iYuv420FrameSize;
                if (bHasSpace)
                {
                    av_fifo_generic_write(m_pVideoAFifo, pY, y_size, NULL);
                    av_fifo_generic_write(m_pVideoAFifo, pU, y_size / 4, NULL);
                    av_fifo_generic_write(m_pVideoAFifo, pV, y_size / 4, NULL);
                }
                LeaveCriticalSection(&m_csVideoASection);
                if (bHasSpace)
                    break;
                Sleep(100);
            }
        }
        // EAGAIN means the decoder needs another packet; EOF after flushing or an error ends reading
        if (ret != AVERROR(EAGAIN) || bFlushing)
            break;
    }

    av_packet_free(&pPacket);
    av_frame_free(&pFrame);
    delete[] pY;
    delete[] pU;
    delete[] pV;
}

DWORD WINAPI CDrawBox::VideoDrawBoxProc(LPVOID lpParam)
{
    CDrawBox *pDrawBox = (CDrawBox *)lpParam;
    if (pDrawBox != NULL)
        pDrawBox->VideoDrawBox();
    return 0;
}

void CDrawBox::VideoDrawBox()
{
    int ret = 0;
    bool bFailed = false;
    int64_t iPicCount = 0;

    // Frame that wraps one YUV420P picture read from the FIFO
    AVFrame *pFrameVideoA = av_frame_alloc();
    uint8_t *videoA_buffer_yuv420 = (uint8_t *)av_malloc(m_iYuv420FrameSize);
    av_image_fill_arrays(pFrameVideoA->data, pFrameVideoA->linesize, videoA_buffer_yuv420, AV_PIX_FMT_YUV420P, m_iVideoWidth, m_iVideoHeight, 1);
    pFrameVideoA->width = m_iVideoWidth;
    pFrameVideoA->height = m_iVideoHeight;
    pFrameVideoA->format = AV_PIX_FMT_YUV420P;

    // Receives filtered frames; the buffersink attaches its own reference-counted buffers
    AVFrame *pFrame_out = av_frame_alloc();

    // I420 image for OpenCV: height * 3/2 rows, one byte per sample (Y, then U, then V)
    Mat mainYuvImage(m_iVideoHeight * 3 / 2, m_iVideoWidth, CV_8UC1);
    Mat mainRgbImage;

    while (1)
    {
        bool bGotFrame = false;
        EnterCriticalSection(&m_csVideoASection);
        if (av_fifo_size(m_pVideoAFifo) >= m_iYuv420FrameSize)
        {
            av_fifo_generic_read(m_pVideoAFifo, videoA_buffer_yuv420, m_iYuv420FrameSize, NULL);
            bGotFrame = true;
        }
        LeaveCriticalSection(&m_csVideoASection);

        if (!bGotFrame)
        {
            // Finish only when the reader thread has exited and nothing is left in the FIFO
            if (m_hVideoAReadThread == NULL || WaitForSingleObject(m_hVideoAReadThread, 0) == WAIT_OBJECT_0)
            {
                EnterCriticalSection(&m_csVideoASection);
                bool bDrained = av_fifo_size(m_pVideoAFifo) < m_iYuv420FrameSize;
                LeaveCriticalSection(&m_csVideoASection);
                if (bDrained)
                    break;
            }
            else
            {
                Sleep(1);
            }
            continue;
        }
        if (bFailed)
            continue; // after an error keep draining so the reader never blocks on a full FIFO

        // YUV420P -> RGB for OpenCV, then detect faces
        CopyYUVToImage(mainYuvImage.data, pFrameVideoA->data[0], pFrameVideoA->data[1], pFrameVideoA->data[2], m_iVideoWidth, m_iVideoHeight);
        cvtColor(mainYuvImage, mainRgbImage, COLOR_YUV2RGB_I420);
        vector<Rect> faces;
        m_cpufaceCascade.detectMultiScale(mainRgbImage, faces, 1.1, 3, 0);

        // Build a graph with one drawbox per face for this frame and run the frame through it
        pFrameVideoA->pts = iPicCount; // 1/10 time base, shared by the buffer source and the encoder
        ret = InitFilter(faces);
        if (ret >= 0)
            ret = av_buffersrc_add_frame(m_pFilterCtxSrcVideoA, pFrameVideoA);
        if (ret >= 0)
            ret = av_buffersink_get_frame(m_pFilterCtxSink, pFrame_out);
        UnInitFilter();

        if (ret >= 0)
        {
            pFrame_out->pts = iPicCount;
            ret = EncodeAndWrite(m_pCodecEncodeCtx_Video, m_pFormatCtx_Out, pFrame_out);
            av_frame_unref(pFrame_out); // release the buffer handed out by the sink
        }
        if (ret < 0)
        {
            printf("Failed to process frame %lld.\n", (long long)iPicCount);
            bFailed = true;
            continue;
        }
        iPicCount++;
    }

    // Drain the frames still buffered in the encoder, then finalize and close the file
    EncodeAndWrite(m_pCodecEncodeCtx_Video, m_pFormatCtx_Out, NULL);
    av_write_trailer(m_pFormatCtx_Out);
    if (!(m_pFormatCtx_Out->oformat->flags & AVFMT_NOFILE))
        avio_closep(&m_pFormatCtx_Out->pb);

    av_frame_free(&pFrame_out);
    av_frame_free(&pFrameVideoA);
    av_free(videoA_buffer_yuv420);
}

int CDrawBox::InitFilter(std::vector<Rect> &facesRect)
{
    // Box at most kMaxFaceCount faces, one drawbox filter instance each
    m_iFaceCount = (int)facesRect.size();
    if (m_iFaceCount > kMaxFaceCount)
        m_iFaceCount = kMaxFaceCount;

    int ret = 0;
    char args_videoA[512];
    const AVFilter *filter_src_videoA = avfilter_get_by_name("buffer");
    const AVFilter *filter_sink = avfilter_get_by_name("buffersink");
    const AVFilter *filter_drawbox = avfilter_get_by_name("drawbox");

    m_pFilterGraph = avfilter_graph_alloc();
    if (m_pFilterGraph == NULL)
        return AVERROR(ENOMEM);

    // Buffer source: raw YUV420P frames in a 1/10 time base (the video is 10 fps)
    snprintf(args_videoA, sizeof(args_videoA),
        "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
        m_iVideoWidth, m_iVideoHeight, AV_PIX_FMT_YUV420P, 1, 10, 0, 1);

    // One drawbox option string per face, e.g. "x=100:y=80:w=64:h=64:c=red"
    std::string strFilterDesc[kMaxFaceCount];
    for (int i = 0; i < m_iFaceCount; i++)
    {
        char szFilter[128] = { 0 };
        snprintf(szFilter, sizeof(szFilter), "x=%d:y=%d:w=%d:h=%d:c=red",
            facesRect[i].x, facesRect[i].y, facesRect[i].width, facesRect[i].height);
        strFilterDesc[i] = szFilter;
    }
    // No face: keep one drawbox in the chain but disable it. drawbox treats w=0/h=0
    // as the full input size, so "x=0:y=0:w=0:h=0" would outline the whole frame.
    int iBoxCount = m_iFaceCount;
    if (iBoxCount == 0)
    {
        strFilterDesc[0] = "enable=0";
        iBoxCount = 1;
    }

    do
    {
        ret = avfilter_graph_create_filter(&m_pFilterCtxSrcVideoA, filter_src_videoA, "in0", args_videoA, NULL, m_pFilterGraph);
        if (ret < 0)
            break;

        for (int i = 0; i < iBoxCount; i++)
        {
            char szName[32];
            snprintf(szName, sizeof(szName), "drawbox%d", i);
            ret = avfilter_graph_create_filter(&m_pFilterCtxDrawBox[i], filter_drawbox, szName, strFilterDesc[i].c_str(), NULL, m_pFilterGraph);
            if (ret < 0)
                break;
        }
        if (ret < 0)
            break; // propagate a failure from inside the loop

        ret = avfilter_graph_create_filter(&m_pFilterCtxSink, filter_sink, "out", NULL, NULL, m_pFilterGraph);
        if (ret < 0)
            break;
        // Make the sink output the encoder's pixel format
        ret = av_opt_set_bin(m_pFilterCtxSink, "pix_fmts", (uint8_t *)&m_pCodecEncodeCtx_Video->pix_fmt, sizeof(m_pCodecEncodeCtx_Video->pix_fmt), AV_OPT_SEARCH_CHILDREN);
        if (ret < 0)
            break;

        // buffer -> drawbox0 -> drawbox1 -> ... -> buffersink
        ret = avfilter_link(m_pFilterCtxSrcVideoA, 0, m_pFilterCtxDrawBox[0], 0);
        for (int i = 0; ret >= 0 && i < iBoxCount - 1; i++)
            ret = avfilter_link(m_pFilterCtxDrawBox[i], 0, m_pFilterCtxDrawBox[i + 1], 0);
        if (ret >= 0)
            ret = avfilter_link(m_pFilterCtxDrawBox[iBoxCount - 1], 0, m_pFilterCtxSink, 0);
        if (ret < 0)
            break;

        ret = avfilter_graph_config(m_pFilterGraph, NULL);
        if (ret < 0)
            break;

        ret = 0;
    } while (0);

    return ret;
}

void CDrawBox::UnInitFilter()
{
    // Freeing the graph also frees every filter context created in it, and sets the pointer to NULL
    avfilter_graph_free(&m_pFilterGraph);
    m_pFilterCtxSrcVideoA = NULL;
    for (int i = 0; i < kMaxFaceCount; i++)
        m_pFilterCtxDrawBox[i] = NULL;
    m_pFilterCtxSink = NULL;
}
```
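The encoder is configured with a 1/10 time base (one tick per frame at 10 fps), but the MP4 muxer may choose a different stream time base in `avformat_write_header`, so timestamps have to be converted exactly once between the two bases before packets are written (FFmpeg provides `av_rescale_q` and, for packets, `av_packet_rescale_ts`). The arithmetic is ts × from / to with rounding. The sketch below reproduces it in plain C++ purely for illustration: `Rational` and `RescaleNearest` are hypothetical names, it is not FFmpeg's implementation, and unlike FFmpeg it does not guard against overflow.

```cpp
#include <cassert>
#include <cstdint>

// Minimal stand-in for AVRational.
struct Rational {
    int64_t num;
    int64_t den;
};

// Converts ts from time base `from` to time base `to`:
// ts * from.num / from.den * to.den / to.num, rounded to the nearest integer
// with halfway cases away from zero. Small illustrative values only (no overflow checks).
int64_t RescaleNearest(int64_t ts, Rational from, Rational to) {
    assert(from.den > 0 && to.num > 0);
    const int64_t numer = ts * from.num * to.den;
    const int64_t denom = from.den * to.num;
    const int64_t half = denom / 2;
    return numer >= 0 ? (numer + half) / denom : -((-numer + half) / denom);
}
```

For example, with an assumed stream time base of 1/12800, frame 3 (pts 3 in the 1/10 base) maps to 3 × 12800 / 10 = 3840.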