文章目录

TrackMonocular中的核心步骤

我们在第一部分中给出了TrackMonocular函数,其中最重要的一步莫过于GrabImageMonocular,其代码如下所示:

cv::Mat Tracking::GrabImageMonocular(const cv::Mat &im, const double ×tamp)

{

mImGray = im;

// 基于图像进行一些简单的处理

if(mImGray.channels()==3)

{

if(mbRGB)

cvtColor(mImGray,mImGray,CV_RGB2GRAY);

else

cvtColor(mImGray,mImGray,CV_BGR2GRAY);

}

else if(mImGray.channels()==4)

{

if(mbRGB)

cvtColor(mImGray,mImGray,CV_RGBA2GRAY);

else

cvtColor(mImGray,mImGray,CV_BGRA2GRAY);

}

// 看看有没有初始化完了

if(mState==NOT_INITIALIZED || mState==NO_IMAGES_YET)

mCurrentFrame = Frame(mImGray,timestamp,mpIniORBextractor,mpORBVocabulary,mK,mDistCoef,mbf,mThDepth);

else

mCurrentFrame = Frame(mImGray,timestamp,mpORBextractorLeft,mpORBVocabulary,mK,mDistCoef,mbf,mThDepth);

// 进入跟踪!

Track();

return mCurrentFrame.mTcw.clone();

}

接下来,我们进入Tracking过程:

void Tracking::Track()

{

if(mState==NO_IMAGES_YET)

{

mState = NOT_INITIALIZED;

}

mLastProcessedState=mState;

// Get Map Mutex -> Map cannot be changed

unique_lock<mutex> lock(mpMap->mMutexMapUpdate);

if(mState==NOT_INITIALIZED)

{

if(mSensor==System::STEREO || mSensor==System::RGBD)

StereoInitialization();

else

MonocularInitialization();

mpFrameDrawer->Update(this);

if(mState!=OK)

return;

}

else

{

}

}

我们首先不给出Tracking的全貌,可以看到,截止目前,我们有了两个内容需要去熟悉:

- 在没初始化的时候,代码将图搞了个Frame个类,那么这个类是什么?

- 在没初始化的时候,一进入Tracking就被迫初始化,那这个初始化是个什么东西?

Frame中包含的信息

普通帧管好自己,关键帧服务优化与回环。

首先是存储了相机的一些基础信息,比如相机的内参、基线等等,这从配置文件中读入,在构造函数中为其赋值。

mvKeys 和 mvKeysRight分别是左目特征点描述子和右目特征点描述子。

mvKeysUn和mvuRight分别是畸变矫正之后的特征点描述子。

mvKeys、mvKeyRIght、mvKeysUn的类型是vector<cv::KeyPoint>

mvuRight的类型是vector<float>,记录右图中对应特征点的横坐标.我们认为双目匹配上的特征点,纵坐标是一样的。

KeyPoints的属性有这些.

// Stereo

Frame::Frame(const cv::Mat &imLeft, const cv::Mat &imRight, const double &timeStamp, ORBextractor* extractorLeft, ORBextractor* extractorRight, ORBVocabulary* voc, cv::Mat &K, cv::Mat &distCoef, const float &bf, const float &thDepth)

:mpORBvocabulary(voc),mpORBextractorLeft(extractorLeft),mpORBextractorRight(extractorRight), mTimeStamp(timeStamp), mK(K.clone()),mDistCoef(distCoef.clone()), mbf(bf), mThDepth(thDepth),

mpReferenceKF(static_cast<KeyFrame*>(NULL))

{

// ORB extraction

thread threadLeft(&Frame::ExtractORB,this,0,imLeft);

thread threadRight(&Frame::ExtractORB,this,1,imRight);

threadLeft.join();

threadRight.join();

N = mvKeys.size();

if(mvKeys.empty())

return;

UndistortKeyPoints();

ComputeStereoMatches();

mvpMapPoints = vector<MapPoint*>(N,static_cast<MapPoint*>(NULL));

mvbOutlier = vector<bool>(N,false);

// This is done only for the first Frame (or after a change in the calibration)

if(mbInitialComputations)

{

ComputeImageBounds(imLeft);

mfGridElementWidthInv=static_cast<float>(FRAME_GRID_COLS)/(mnMaxX-mnMinX);

mfGridElementHeightInv=static_cast<float>(FRAME_GRID_ROWS)/(mnMaxY-mnMinY);

fx = K.at<float>(0,0);

fy = K.at<float>(1,1);

cx = K.at<float>(0,2);

cy = K.at<float>(1,2);

invfx = 1.0f/fx;

invfy = 1.0f/fy;

mbInitialComputations=false;

}

mb = mbf/fx;

AssignFeaturesToGrid();

}

// RGBD

Frame::Frame(const cv::Mat &imGray, const cv::Mat &imDepth, const double &timeStamp, ORBextractor* extractor,ORBVocabulary* voc, cv::Mat &K, cv::Mat &distCoef, const float &bf, const float &thDepth)

:mpORBvocabulary(voc),mpORBextractorLeft(extractor),mpORBextractorRight(static_cast<ORBextractor*>(NULL)),

mTimeStamp(timeStamp), mK(K.clone()),mDistCoef(distCoef.clone()), mbf(bf), mThDepth(thDepth)

{

// ORB extraction

ExtractORB(0,imGray);

N = mvKeys.size();

if(mvKeys.empty())

return;

UndistortKeyPoints();

ComputeStereoFromRGBD(imDepth);

mvpMapPoints = vector<MapPoint*>(N,static_cast<MapPoint*>(NULL));

mvbOutlier = vector<bool>(N,false);

// This is done only for the first Frame (or after a change in the calibration)

if(mbInitialComputations)

{

ComputeImageBounds(imGray);

mfGridElementWidthInv=static_cast<float>(FRAME_GRID_COLS)/static_cast<float>(mnMaxX-mnMinX);

mfGridElementHeightInv=static_cast<float>(FRAME_GRID_ROWS)/static_cast<float>(mnMaxY-mnMinY);

fx = K.at<float>(0,0);

fy = K.at<float>(1,1);

cx = K.at<float>(0,2);

cy = K.at<float>(1,2);

invfx = 1.0f/fx;

invfy = 1.0f/fy;

mbInitialComputations=false;

}

mb = mbf/fx;

AssignFeaturesToGrid();

}

// Monocular

Frame::Frame(const cv::Mat &imGray, const double &timeStamp, ORBextractor* extractor,ORBVocabulary* voc, cv::Mat &K, cv::Mat &distCoef, const float &bf, const float &thDepth)

:mpORBvocabulary(voc),mpORBextractorLeft(extractor),mpORBextractorRight(static_cast<ORBextractor*>(NULL)),

mTimeStamp(timeStamp), mK(K.clone()),mDistCoef(distCoef.clone()), mbf(bf), mThDepth(thDepth)

{

// ORB extraction

ExtractORB(0,imGray);

N = mvKeys.size();

if(mvKeys.empty())

return;

UndistortKeyPoints();

// Set no stereo information

mvuRight = vector<float>(N,-1);

mvDepth = vector<float>(N,-1);

mvpMapPoints = vector<MapPoint*>(N,static_cast<MapPoint*>(NULL));

mvbOutlier = vector<bool>(N,false);

// This is done only for the first Frame (or after a change in the calibration)

if(mbInitialComputations)

{

ComputeImageBounds(imGray);

mfGridElementWidthInv=static_cast<float>(FRAME_GRID_COLS)/static_cast<float>(mnMaxX-mnMinX);

mfGridElementHeightInv=static_cast<float>(FRAME_GRID_ROWS)/static_cast<float>(mnMaxY-mnMinY);

fx = K.at<float>(0,0);

fy = K.at<float>(1,1);

cx = K.at<float>(0,2);

cy = K.at<float>(1,2);

invfx = 1.0f/fx;

invfy = 1.0f/fy;

mbInitialComputations=false;

}

mb = mbf/fx;

AssignFeaturesToGrid();

}

畸变矫正UndistorKeyPoints和ComputeImageBounds

畸变矫正只对单目和RGBD的输入图像有效,双目相机的畸变矫正参数均为0,因为在数据集发布之前就预先做了矫正。

双目矫正之后,将两个相机的成像平面矫正到同一平面上,两个相机的极线平行,极点在无穷远处,这是我们在函数ComputerStereoMatches()中做极线搜索的理论基础。

关于双目矫正,可以参考这份文档。总而言之,双目矫正不仅仅是对畸变进行了矫正,还使得极线得到了平行。

void Frame::UndistortKeyPoints()

{

// 如果输入的图像是双目图像,则已经做好了双目矫正,其畸变参数为0

if(mDistCoef.at<float>(0)==0.0)

{

mvKeysUn=mvKeys;

return;

}

// 将特征点坐标转变为undistortPoints要求的格式

cv::Mat mat(N,2,CV_32F);

for(int i=0; i<N; i++)

{

mat.at<float>(i,0)=mvKeys[i].pt.x;

mat.at<float>(i,1)=mvKeys[i].pt.y;

}

// 进行畸变矫正

mat=mat.reshape(2);

// 原图像,目标图像,相机外参

cv::undistortPoints(mat,mat,mK,mDistCoef,cv::Mat(),mK);

mat=mat.reshape(1);

// 记录矫正后的特征点

mvKeysUn.resize(N);

for(int i=0; i<N; i++)

{

cv::KeyPoint kp = mvKeys[i];

kp.pt.x=mat.at<float>(i,0);

kp.pt.y=mat.at<float>(i,1);

mvKeysUn[i]=kp;

}

}

对于每次的第一张图像,都会进行以下计算

// 通过计算图片顶点畸变矫正后的坐标来计算畸变矫正后图片的有效范围

void Frame::ComputeImageBounds(const cv::Mat &imLeft)

{

if(mDistCoef.at<float>(0)!=0.0)

{

cv::Mat mat(4,2,CV_32F);

mat.at<float>(0,0)=0.0; mat.at<float>(0,1)=0.0;

mat.at<float>(1,0)=imLeft.cols; mat.at<float>(1,1)=0.0;

mat.at<float>(2,0)=0.0; mat.at<float>(2,1)=imLeft.rows;

mat.at<float>(3,0)=imLeft.cols; mat.at<float>(3,1)=imLeft.rows;

// Undistort corners

mat=mat.reshape(2);

cv::undistortPoints(mat,mat,mK,mDistCoef,cv::Mat(),mK);

mat=mat.reshape(1);

mnMinX = min(mat.at<float>(0,0),mat.at<float>(2,0));

mnMaxX = max(mat.at<float>(1,0),mat.at<float>(3,0));

mnMinY = min(mat.at<float>(0,1),mat.at<float>(1,1));

mnMaxY = max(mat.at<float>(2,1),mat.at<float>(3,1));

}

else

{

mnMinX = 0.0f;

mnMaxX = imLeft.cols;

mnMinY = 0.0f;

mnMaxY = imLeft.rows;

}

}

对双目/RGB-D特征点的预处理

双目/RGBD相机中可以得到特征点的立体信息,包括右目特征点信息以及特征点深度信息:

- 双目相机通过双目特征点匹配关系计算特征点的深度值;

- RGBD相机通过特征点深度构造虚拟的右目图像特征点。

通过这种后处理,后续就不再区分双目特征点和RGBD特征点,都是以双目特征点的形式被处理。

双目特征点的处理ComputeSteroMatches

首先分别对两个帧进行特征点提取和描述后,接下来进入特征点匹配。其步骤为:

- 粗匹配:根据描述子距离(汉明距离)和金字塔层级判断。粗匹配的关系是按行寻找的,对于左目图像中的每个特征点,在右目图像对应行寻找匹配特征点;是在其上下几行里的特征点找这个点。

- 精匹配:根据特征点周围窗口内容的相似度进行匹配;

- 记录右目匹配和深度信息;

- 离群点筛选:以平均相似度2.1倍为标准,筛选离群点。

oid Frame::ComputeStereoMatches()

{

mvuRight = vector<float>(N,-1.0f);

mvDepth = vector<float>(N,-1.0f);

const int thOrbDist = (ORBmatcher::TH_HIGH+ORBmatcher::TH_LOW)/2;

const int nRows = mpORBextractorLeft->mvImagePyramid[0].rows;

//Assign keypoints to row table

// 右目图像特征点逐行统计;将右目图像中的每个特征点注册到附近几行

vector<vector<size_t> > vRowIndices(nRows,vector<size_t>());

for(int i=0; i<nRows; i++)

vRowIndices[i].reserve(200);

const int Nr = mvKeysRight.size();

for(int iR=0; iR<Nr; iR++)

{

const cv::KeyPoint &kp = mvKeysRight[iR];

const float &kpY = kp.pt.y;

const float r = 2.0f*mvScaleFactors[mvKeysRight[iR].octave];

const int maxr = ceil(kpY+r);

const int minr = floor(kpY-r);

for(int yi=minr;yi<=maxr;yi++)

vRowIndices[yi].push_back(iR);

}

// Set limits for search

// 粗匹配+精匹配

const float minZ = mb;

const float minD = 0;

const float maxD = mbf/minZ;

// For each left keypoint search a match in the right image

vector<pair<int, int> > vDistIdx;

vDistIdx.reserve(N);

for(int iL=0; iL<N; iL++)

{

const cv::KeyPoint &kpL = mvKeys[iL];

const int &levelL = kpL.octave;

const float &vL = kpL.pt.y;

const float &uL = kpL.pt.x;

const vector<size_t> &vCandidates = vRowIndices[vL];

if(vCandidates.empty())

continue;

const float minU = uL-maxD;

const float maxU = uL-minD;

if(maxU<0)

continue;

// step1 粗匹配

int bestDist = ORBmatcher::TH_HIGH;

size_t bestIdxR = 0;

const cv::Mat &dL = mDescriptors.row(iL);

// Compare descriptor to right keypoints

for(size_t iC=0; iC<vCandidates.size(); iC++)

{

const size_t iR = vCandidates[iC];

const cv::KeyPoint &kpR = mvKeysRight[iR];

if(kpR.octave<levelL-1 || kpR.octave>levelL+1)

continue;

const float &uR = kpR.pt.x;

if(uR>=minU && uR<=maxU)

{

const cv::Mat &dR = mDescriptorsRight.row(iR);

// 关键!比较两个特征点的海明距离,dL和dR来自于mDescriptors及其Right在ORBExtractor中作为参数

const int dist = ORBmatcher::DescriptorDistance(dL,dR);

if(dist<bestDist)

{

bestDist = dist;

bestIdxR = iR;

}

}

}

// Subpixel match by correlation

// step2 精匹配

if(bestDist<thOrbDist)

{

// coordinates in image pyramid at keypoint scale

const float uR0 = mvKeysRight[bestIdxR].pt.x;

const float scaleFactor = mvInvScaleFactors[kpL.octave];

const float scaleduL = round(kpL.pt.x*scaleFactor);

const float scaledvL = round(kpL.pt.y*scaleFactor);

const float scaleduR0 = round(uR0*scaleFactor);

// sliding window search

const int w = 5;

cv::Mat IL = mpORBextractorLeft->mvImagePyramid[kpL.octave].rowRange(scaledvL-w,scaledvL+w+1).colRange(scaleduL-w,scaleduL+w+1);

IL.convertTo(IL,CV_32F);

IL = IL - IL.at<float>(w,w) *cv::Mat::ones(IL.rows,IL.cols,CV_32F);

int bestDist = INT_MAX;

int bestincR = 0;

const int L = 5;

vector<float> vDists;

vDists.resize(2*L+1);

const float iniu = scaleduR0+L-w;

const float endu = scaleduR0+L+w+1;

if(iniu<0 || endu >= mpORBextractorRight->mvImagePyramid[kpL.octave].cols)

continue;

for(int incR=-L; incR<=+L; incR++)

{

cv::Mat IR = mpORBextractorRight->mvImagePyramid[kpL.octave].rowRange(scaledvL-w,scaledvL+w+1).colRange(scaleduR0+incR-w,scaleduR0+incR+w+1);

IR.convertTo(IR,CV_32F);

IR = IR - IR.at<float>(w,w) *cv::Mat::ones(IR.rows,IR.cols,CV_32F);

float dist = cv::norm(IL,IR,cv::NORM_L1);

if(dist<bestDist)

{

bestDist = dist;

bestincR = incR;

}

vDists[L+incR] = dist;

}

if(bestincR==-L || bestincR==L)

continue;

// Sub-pixel match (Parabola fitting)

// step3 亚像素插值:将特征点匹配距离拟合成二次曲线,寻找二次曲线最低点作为最优匹配点坐标

const float dist1 = vDists[L+bestincR-1];

const float dist2 = vDists[L+bestincR];

const float dist3 = vDists[L+bestincR+1];

const float deltaR = (dist1-dist3)/(2.0f*(dist1+dist3-2.0f*dist2));

if(deltaR<-1 || deltaR>1)

continue;

// Re-scaled coordinate

// step4 记录特征点的右目和深度信息

float bestuR = mvScaleFactors[kpL.octave]*((float)scaleduR0+(float)bestincR+deltaR);

float disparity = (uL-bestuR); // uL左特征点横坐标值,这里算的应该是视差

if(disparity>=minD && disparity<maxD)

{

if(disparity<=0)

{

disparity=0.01;

bestuR = uL-0.01;

}

mvDepth[iL]=mbf/disparity; // 深度信息 mbf相机基线和焦距的乘积处以视差

mvuRight[iL] = bestuR; // 右目特征点信息

vDistIdx.push_back(pair<int,int>(bestDist,iL));

}

}

}

// step5 删除离群点

sort(vDistIdx.begin(),vDistIdx.end());

const float median = vDistIdx[vDistIdx.size()/2].first;

const float thDist = 1.5f*1.4f*median;

for(int i=vDistIdx.size()-1;i>=0;i--)

{

if(vDistIdx[i].first<thDist)

break;

else

{

mvuRight[vDistIdx[i].second]=-1;

mvDepth[vDistIdx[i].second]=-1;

}

}

}

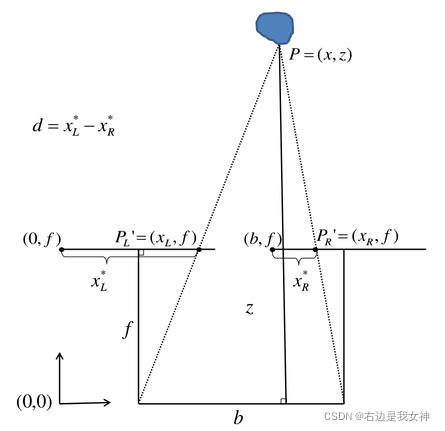

深度计算公式原理

z是深度又称为观测距离,基线是b(两个相机间距),焦距是f,视差为d,根据该三角形的相似性,又根据等比定理有:

z

b

=

z

?

f

b

?

d

=

f

d

\frac{z}{b}=\frac{z-f}{b-d}=\frac{f}{d}

bz?=b?dz?f?=df?

于是

z

=

f

?

b

d

z=\frac{f\cdot b}{d}

z=df?b?

RGB特征点的处理ComputeStereoFromRGBD

根据上一节的示意图计算出虚假的右目特征点。

void Frame::ComputeStereoFromRGBD(const cv::Mat &imDepth)

{

mvuRight = vector<float>(N,-1);

mvDepth = vector<float>(N,-1);

for(int i=0; i<N; i++)

{

const cv::KeyPoint &kp = mvKeys[i];

const cv::KeyPoint &kpU = mvKeysUn[i];

const float &v = kp.pt.y;

const float &u = kp.pt.x;

const float d = imDepth.at<float>(v,u);

if(d>0)

{

mvDepth[i] = d;

mvuRight[i] = kpU.pt.x-mbf/d;

}

}

}

最后得到的应该是mvKeysUn、mvuRight和mvDepth,这是RGBD和Stereo的结晶。

特征点分配

这是Frame构造函数的最后一步。

在对特征点进行处理后,将其分配到48行64列的网格中以加速匹配。

每个网格是这个特征点的索引。

void Frame::AssignFeaturesToGrid()

{

int nReserve = 0.5f*N/(FRAME_GRID_COLS*FRAME_GRID_ROWS);

for(unsigned int i=0; i<FRAME_GRID_COLS;i++)

for (unsigned int j=0; j<FRAME_GRID_ROWS;j++)

mGrid[i][j].reserve(nReserve);

for(int i=0;i<N;i++)

{ // 遍历特征点,将每个特征点索引加入到对应的网格中去

const cv::KeyPoint &kp = mvKeysUn[i];

int nGridPosX, nGridPosY;

if(PosInGrid(kp,nGridPosX,nGridPosY))

mGrid[nGridPosX][nGridPosY].push_back(i);

}

}

单目相机的初始化

在单目相机的构造函数中缺失了匹配这一步,虽然也有mvuRight和mvDepth但是都是空的,其以另一种方式处理。而双目和RGBD则没有这种特别的处理方式。

在代码中,需要注意的是1表示参考帧,2表示当前帧。

MonocularInitialization

void Tracking::MonocularInitialization()

{

// step1 如果单目初始化还没创建,之后又发现这一帧里的特征点数量够了,则创建初始化器

if(!mpInitializer)

{

// Set Reference Frame

if(mCurrentFrame.mvKeys.size()>100)

{

mInitialFrame = Frame(mCurrentFrame);

mLastFrame = Frame(mCurrentFrame);

mvbPrevMatched.resize(mCurrentFrame.mvKeysUn.size());

for(size_t i=0; i<mCurrentFrame.mvKeysUn.size(); i++)

mvbPrevMatched[i]=mCurrentFrame.mvKeysUn[i].pt;

if(mpInitializer)

delete mpInitializer;

mpInitializer = new Initializer(mCurrentFrame,1.0,200); // 核心

fill(mvIniMatches.begin(),mvIniMatches.end(),-1);

return;

}

}

else

{

// step2 如果上一帧特征点足够了,但当前帧特征太少,则匹配失败,删除初始化器

// Try to initialize

if((int)mCurrentFrame.mvKeys.size()<=100)

{

delete mpInitializer;

mpInitializer = static_cast<Initializer*>(NULL);

fill(mvIniMatches.begin(),mvIniMatches.end(),-1);

return;

}

// step3 在mInitialFrame间进行匹配搜索

// Find correspondences

ORBmatcher matcher(0.9,true);

int nmatches = matcher.SearchForInitialization(mInitialFrame,mCurrentFrame,mvbPrevMatched,mvIniMatches,100);

// step4 匹配的特征点数目太少,则匹配失败,删除初始化器。

// Check if there are enough correspondences

if(nmatches<100)

{

delete mpInitializer;

mpInitializer = static_cast<Initializer*>(NULL);

return;

}

// step5 进行单目初始化

cv::Mat Rcw; // Current Camera Rotation

cv::Mat tcw; // Current Camera Translation

vector<bool> vbTriangulated; // Triangulated Correspondences (mvIniMatches)

if(mpInitializer->Initialize(mCurrentFrame, mvIniMatches, Rcw, tcw, mvIniP3D, vbTriangulated))

{

// step6 单目初始化成功后删除未成功三角化的匹配点对

for(size_t i=0, iend=mvIniMatches.size(); i<iend;i++)

{

if(mvIniMatches[i]>=0 && !vbTriangulated[i])

{

mvIniMatches[i]=-1;

nmatches--;

}

}

// step7 创建初始化地图

// Set Frame Poses

mInitialFrame.SetPose(cv::Mat::eye(4,4,CV_32F));

cv::Mat Tcw = cv::Mat::eye(4,4,CV_32F);

Rcw.copyTo(Tcw.rowRange(0,3).colRange(0,3));

tcw.copyTo(Tcw.rowRange(0,3).col(3));

mCurrentFrame.SetPose(Tcw);

CreateInitialMapMonocular();

}

}

}

上述的含义是得有连续两帧合格,一旦有不合格的,删了初始化器重来。第一帧作为参考帧,第二帧作为当前帧,

初始化类中的初始化方法Initialize()

初始化器的创建需要初始化类。该类的代码为:

bool Initializer::Initialize(const Frame &CurrentFrame, const vector<int> &vMatches12, cv::Mat &R21, cv::Mat &t21,

vector<cv::Point3f> &vP3D, vector<bool> &vbTriangulated)

{

// 初始化器Initializer对象创建时就已经指定了mvKeys1,调用本函数只需指定mvKeys2即可

// Fill structures with current keypoints and matches with reference frame

// Reference Frame: 1, Current Frame: 2

mvKeys2 = CurrentFrame.mvKeysUn;

mvMatches12.clear();

mvMatches12.reserve(mvKeys2.size());

mvbMatched1.resize(mvKeys1.size());

// step1 将vMatches12拷贝到mvMatches12,mvMatches12只保存匹配上的特征点对。

for(size_t i=0, iend=vMatches12.size();i<iend; i++)

{

if(vMatches12[i]>=0)

{

mvMatches12.push_back(make_pair(i,vMatches12[i]));

mvbMatched1[i]=true;

}

else

mvbMatched1[i]=false;

}

// step2 准备RANSAC运算中需要的特征点对

const int N = mvMatches12.size();

// Indices for minimum set selection

vector<size_t> vAllIndices;

vAllIndices.reserve(N);

vector<size_t> vAvailableIndices;

for(int i=0; i<N; i++)

{

vAllIndices.push_back(i);

}

// Generate sets of 8 points for each RANSAC iteration

mvSets = vector< vector<size_t> >(mMaxIterations,vector<size_t>(8,0));

DUtils::Random::SeedRandOnce(0);

for(int it=0; it<mMaxIterations; it++)

{

vAvailableIndices = vAllIndices;

// Select a minimum set

for(size_t j=0; j<8; j++)

{

int randi = DUtils::Random::RandomInt(0,vAvailableIndices.size()-1);

int idx = vAvailableIndices[randi];

mvSets[it][j] = idx;

vAvailableIndices[randi] = vAvailableIndices.back();

vAvailableIndices.pop_back();

}

}

// step3 计算F矩阵和H矩阵及其置信度

// Launch threads to compute in parallel a fundamental matrix and a homography

vector<bool> vbMatchesInliersH, vbMatchesInliersF;

float SH, SF;

cv::Mat H, F;

thread threadH(&Initializer::FindHomography,this,ref(vbMatchesInliersH), ref(SH), ref(H));

thread threadF(&Initializer::FindFundamental,this,ref(vbMatchesInliersF), ref(SF), ref(F));

// Wait until both threads have finished

threadH.join();

threadF.join();

// step4 根据比分计算使用哪个矩阵恢复运动

// Compute ratio of scores

float RH = SH/(SH+SF);

// Try to reconstruct from homography or fundamental depending on the ratio (0.40-0.45)

if(RH>0.40)

return ReconstructH(vbMatchesInliersH,H,mK,R21,t21,vP3D,vbTriangulated,1.0,50);

else //if(pF_HF>0.6)

return ReconstructF(vbMatchesInliersF,F,mK,R21,t21,vP3D,vbTriangulated,1.0,50);

return false;

}

计算基础矩阵F和单应矩阵H

RANSAC算法

少数离群点回极大地影响计算结果的准确度。随着采样数量的增加,离群点数量也会增加。这是一种系统误差,难以通过增加采样点来解决。

RANSAC算法的思路是少量多次重复实验,每次实验仅使用尽可能少的点来计算,并统计本次计算中的内点数,只要尝试的次数足够多,总能找到一个包含所有内点的解。

理论上来说,计算F只要7对匹配点,计算H只要4对匹配点,ORB_SLAM2为了编程方便,每次迭代使用8对匹配点计算。

关于计算F和H的算法在这不多介绍。但是整体流程为:

- 特征点坐标归一化(使用均值和一阶中心矩归一化,目的是为了增强计算的稳定性);

- 在RANSAC的循环下计算F/H;

- 卡方检验。

卡方检验,以检测H矩阵为例

float Initializer::CheckHomography(const cv::Mat &H21, const cv::Mat &H12, vector<bool> &vbMatchesInliers, float sigma)

{

const int N = mvMatches12.size();

const float h11 = H21.at<float>(0,0);

const float h12 = H21.at<float>(0,1);

const float h13 = H21.at<float>(0,2);

const float h21 = H21.at<float>(1,0);

const float h22 = H21.at<float>(1,1);

const float h23 = H21.at<float>(1,2);

const float h31 = H21.at<float>(2,0);

const float h32 = H21.at<float>(2,1);

const float h33 = H21.at<float>(2,2);

const float h11inv = H12.at<float>(0,0);

const float h12inv = H12.at<float>(0,1);

const float h13inv = H12.at<float>(0,2);

const float h21inv = H12.at<float>(1,0);

const float h22inv = H12.at<float>(1,1);

const float h23inv = H12.at<float>(1,2);

const float h31inv = H12.at<float>(2,0);

const float h32inv = H12.at<float>(2,1);

const float h33inv = H12.at<float>(2,2);

vbMatchesInliers.resize(N); // 标记是否为内点

float score = 0; // 置信度得分

const float th = 5.991; // 自由度为2,显著性水平为0.05的卡方分布对应的临界阈值

const float invSigmaSquare = 1.0/(sigma*sigma); // 信息矩阵,方差平方的倒数

// 双向投影,计算加权投影误差

for(int i=0; i<N; i++)

{

bool bIn = true;

// step1 提取特征点

const cv::KeyPoint &kp1 = mvKeys1[mvMatches12[i].first];

const cv::KeyPoint &kp2 = mvKeys2[mvMatches12[i].second];

const float u1 = kp1.pt.x;

const float v1 = kp1.pt.y;

const float u2 = kp2.pt.x;

const float v2 = kp2.pt.y;

// Reprojection error in first image

// x2in1 = H12*x2

// step2 计算img2到img1的重投影误差

const float w2in1inv = 1.0/(h31inv*u2+h32inv*v2+h33inv);

const float u2in1 = (h11inv*u2+h12inv*v2+h13inv)*w2in1inv;

const float v2in1 = (h21inv*u2+h22inv*v2+h23inv)*w2in1inv;

const float squareDist1 = (u1-u2in1)*(u1-u2in1)+(v1-v2in1)*(v1-v2in1);

const float chiSquare1 = squareDist1*invSigmaSquare;

// step3 离群点标记上,非离群点累加计算得分

if(chiSquare1>th)

bIn = false;

else

score += th - chiSquare1;

// Reprojection error in second image

// x1in2 = H21*x1

// step4 计算img1到img2的重投影误差

const float w1in2inv = 1.0/(h31*u1+h32*v1+h33);

const float u1in2 = (h11*u1+h12*v1+h13)*w1in2inv;

const float v1in2 = (h21*u1+h22*v1+h23)*w1in2inv;

const float squareDist2 = (u2-u1in2)*(u2-u1in2)+(v2-v1in2)*(v2-v1in2);

const float chiSquare2 = squareDist2*invSigmaSquare;

// step5 离群点标记上,非离群点累加计算得分

if(chiSquare2>th)

bIn = false;

else

score += th - chiSquare2;

if(bIn)

vbMatchesInliers[i]=true;

else

vbMatchesInliers[i]=false;

}

return score;

}

之后采用F和H分解得到R、t即可。