Using PyTorch: Implementing LeNet

1. LeNet network architecture diagram

2. Building the network

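import torch
import torch.nn as nn
import torch.nn.functional as F

# Build the neural network
class LeNet(nn.Module):
    def __init__(self):
        super(LeNet, self).__init__()
        # Two convolutional layers: 1 -> 6 -> 16 channels, 5x5 kernels
        self.conv1 = nn.Conv2d(1, 6, (5, 5))
        self.conv2 = nn.Conv2d(6, 16, (5, 5))
        # Three fully connected layers; 16*5*5 is the flattened conv output for a 32x32 input
        self.fc1 = nn.Linear(16*5*5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        # Convolution -> activation -> pooling
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        # The second stage must use conv2, not conv1
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        # Flatten everything except the batch dimension
        x = x.view(x.size()[0], -1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x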

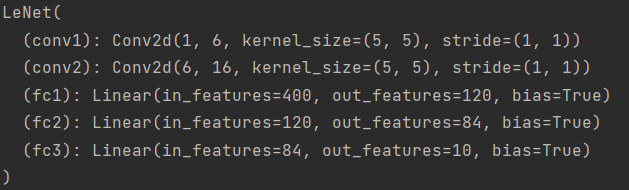

net = LeNet()

print(net)
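The input size `16*5*5` of `fc1` follows from the layer arithmetic. As a minimal sketch, assuming a 32x32 single-channel input and standalone copies of the two convolution layers (the names `conv1`, `conv2`, and `shapes` here are illustrative, not part of the model above), the shapes can be traced step by step:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Standalone layers with the same configuration as the LeNet convolutions
conv1 = nn.Conv2d(1, 6, 5)
conv2 = nn.Conv2d(6, 16, 5)

x = torch.randn(1, 1, 32, 32)           # (batch, channels, height, width)
shapes = [tuple(x.shape)]

# 5x5 conv without padding: 32 -> 28; 2x2 max pooling: 28 -> 14
x = F.max_pool2d(F.relu(conv1(x)), 2)
shapes.append(tuple(x.shape))

# 5x5 conv without padding: 14 -> 10; 2x2 max pooling: 10 -> 5
x = F.max_pool2d(F.relu(conv2(x)), 2)
shapes.append(tuple(x.shape))

# Flatten all dimensions except the batch dimension: 16 * 5 * 5 = 400
flat = x.view(x.size(0), -1)
shapes.append(tuple(flat.shape))

for s in shapes:
    print(s)
```

An input of a different spatial size would produce a different flattened length, so `fc1` would need to be adjusted accordingly.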

The network's learnable parameters are returned by net.parameters(); net.named_parameters() returns each learnable parameter together with its name.

net = LeNet()

print(len(list(net.parameters())))

for name, para in net.named_parameters():
    print(name, ":", para.size())

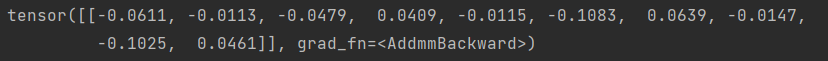
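To see where the parameter sizes come from, the following sketch counts the elements of each layer. It assumes standalone layers configured like those in the LeNet class; the `layers` and `counts` dictionaries are hypothetical helpers for illustration only.

```python
import torch.nn as nn

# Standalone layers mirroring the LeNet definition
layers = {
    "conv1": nn.Conv2d(1, 6, 5),
    "conv2": nn.Conv2d(6, 16, 5),
    "fc1": nn.Linear(16 * 5 * 5, 120),
    "fc2": nn.Linear(120, 84),
    "fc3": nn.Linear(84, 10),
}

# Each layer holds a weight tensor and a bias tensor;
# numel() gives the number of scalar entries in a tensor.
counts = {
    name: sum(p.numel() for p in layer.parameters())
    for name, layer in layers.items()
}
total = sum(counts.values())

for name, n in counts.items():
    print(name, n)
print("total", total)
```

For example, conv1 has 6 output channels, each with a 1x5x5 kernel plus one bias, giving 6*25 + 6 = 156 parameters.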

# Test the output
input = torch.randn(1, 1, 32, 32)
out = net(input)
print(out)

# Zero the gradients, then backpropagate
net.zero_grad()
out.backward(torch.ones_like(out))

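3. Defining the loss function

nn implements most of the loss functions used in neural networks. For example, nn.MSELoss computes the mean squared error, and nn.CrossEntropyLoss computes the cross-entropy loss.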

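# Test the output
input = torch.randn(1, 1, 32, 32)
out = net(input)

# Define the loss function
criterion = nn.MSELoss()

# Randomly generate a target with the same shape as the output
target = torch.randn(10)
target = target.view(1, -1)
loss = criterion(out, target)
print(loss)

# Zero the gradients, then backpropagate.
# backward() is called only once: a second call on the same graph raises an error.
net.zero_grad()
loss.backward()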
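The example above uses nn.MSELoss. For classification, nn.CrossEntropyLoss is the more common choice; it takes raw scores (logits) and integer class indices rather than a float target tensor. The following is a minimal sketch, assuming a random `logits` tensor as a stand-in for the network output and made-up `labels`:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Stand-in for a batch of 4 network outputs over 10 classes (assumption)
logits = torch.randn(4, 10, requires_grad=True)
# One integer class index per sample (made-up labels)
labels = torch.tensor([3, 0, 7, 9])

criterion = nn.CrossEntropyLoss()
loss = criterion(logits, labels)
loss.backward()

# Manual check: mean negative log-softmax of the correct class
log_probs = torch.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(4), labels].mean()

print(loss.item(), manual.item())
```

Because the softmax is applied inside the loss, the network's last layer should output raw scores, as fc3 does in the model above.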

4. Defining the optimizer

To update the network parameters, choose an optimization algorithm. Here we use SGD.

# Manual SGD implementation
learning_rate = 0.01
for f in net.parameters():
    # weight = weight - learning_rate * gradient
    f.data.sub_(f.grad.data * learning_rate)
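The manual update rule is what plain SGD (no momentum, no weight decay) does in torch.optim. The sketch below checks this on a small stand-in model; `model_a`, `model_b`, and the random data are assumptions for illustration, and the manual update is written with torch.no_grad() instead of `.data`, which is one common way to do the same thing.

```python
import copy
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Two identical copies of a small stand-in model
model_a = nn.Linear(4, 2)
model_b = copy.deepcopy(model_a)

x = torch.randn(8, 4)
y = torch.randn(8, 2)
criterion = nn.MSELoss()
lr = 0.01

# Manual SGD step: p <- p - lr * grad
criterion(model_a(x), y).backward()
with torch.no_grad():
    for p in model_a.parameters():
        p.sub_(lr * p.grad)

# The same step using the built-in optimizer
optimizer = optim.SGD(model_b.parameters(), lr=lr)
optimizer.zero_grad()
criterion(model_b(x), y).backward()
optimizer.step()

# Both models should now hold the same parameters
same = all(
    torch.allclose(pa, pb)
    for pa, pb in zip(model_a.parameters(), model_b.parameters())
)
print(same)
```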

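# Using PyTorch's built-in optimizer
import torch.optim as optim

# Initialize the optimizer
optimizer = optim.SGD(net.parameters(), lr=0.01)

# Zero the gradients
optimizer.zero_grad()

# Forward pass
output = net(input)

# Compute the loss on the fresh output and backpropagate
loss = criterion(output, target)
loss.backward()

# Update the parameters
optimizer.step()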
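Putting the pieces together, one training iteration is: zero the gradients, run the forward pass, compute the loss, backpropagate, and update. The following sketch repeats that step on a single random sample; the fixed random `inputs` and `target`, the seed, and the choice of 50 steps are assumptions for illustration, not real training data or a recommended schedule.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim


class LeNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = x.view(x.size(0), -1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


torch.manual_seed(0)
net = LeNet()
criterion = nn.MSELoss()
optimizer = optim.SGD(net.parameters(), lr=0.01)

# A single random sample and random target (illustrative only)
inputs = torch.randn(1, 1, 32, 32)
target = torch.randn(1, 10)

losses = []
for step in range(50):
    optimizer.zero_grad()              # clear gradients from the previous step
    output = net(inputs)               # forward pass
    loss = criterion(output, target)   # compute the loss
    loss.backward()                    # backpropagate
    optimizer.step()                   # update the parameters
    losses.append(loss.item())

print(losses[0], losses[-1])
```

With real data, the loop body would run once per mini-batch drawn from a dataset rather than on the same sample each time.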