以下内容均为个人理解,如有错误,欢迎指正。

VGG16

网络结构

vgg16的网络结构如下所示,16的含义就是说网络中有16个全连接层。

图1没有画出最后一层。

结合这两张图来看,捋一下网络的结构和卷积的过程:

1.第一阶段:

假设输入图片大小为224 x 224 x 3(如图2),先经历两次3 x 3的卷积,得到 224 x 224 x 64,再经历一次最大池化,得到112 x 112 x 128

2.第二阶段:

由阶段一得到112 x 112 x 128的输入,先经历两次3 x 3的卷积,得到112 x 112 x 128,再经历一次最大池化,得到56 x 56 x 256

3.第三阶段:

由阶段二得到56 x 56 x 256的输入,先经历三次3 x 3的卷积,得到56 x 56 x 256,再经历一次最大池化,得到28 x 28 x 512

4.第四阶段:

由阶段三得到28 x 28 x 512 的输入,先经历三次3 x 3的卷积,得到28 x 28 x 512,再经历一次最大池化,得到14 x 14 x 512

5.第五阶段:

由阶段四得到14 x 14 x 512的输入,先经历三次3 x 3的卷积,得到14 x 14 x 512,再经历一次最大池化。得到7 x 7 x 512

6.第六阶段:

由阶段五得到7 x 7 x 512,先经过三个全连接层,得到1 x 1 x 4096,然后最后经历一次1 x 1 的卷积得到最后的输出。

代码实现

使用pytorch实现

import torch

import torch.nn as nn

# A B D E分别对应VGG11/13/16/19

cfg = {

'A' : [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],

'B' : [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],

'D' : [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],

'E' : [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M']

}

class VGG16(nn.Module):

# num_classes=3表明是三分类

def __init__(self, features, num_classes=3, init_weights=True):

super(VGG16, self).__init__()

self.features = features

# 构造序列器

self.classifier = nn.Sequential(

# nn.Linear用于构造全连接层

# 第一个参数512*7*7由网络结构决定,不可变

# 第二个参数表示输出张量的大小,也表示神经元的个数,可以微调

nn.Linear(512 * 7 * 7, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, 4096),

nn.ReLU(True),

nn.Dropout(),

nn.Linear(4096, num_classes)

)

if init_weights:

self._initialize_weights()

# --------------------------------------------------------#

# 关于nn.Linear torch.nn.Linear(in_features, out_features, bias=True)

# nn.Linear用于设置全连接层,其输入输出一般都设置为二维张量,形状为[batch_size,size]

# in_features - size of each input sample,输入的二维张量大小

# out_features - size of each output sample,输出的二维张量大小

# bias - if set to False, the layer will not learn an additive bias

# 从shape的角度来理解,就是相当于输入[batch_size, in_features],输出[batch_size, out_features]

# --------------------------------------------------------#

def forward(self, x): # x为输入的张量

# 输入的张量x经过所有的卷积层,得到的特征层

x = self.features(x)

# 调整x的维度

x = x.view(x.size(0), -1)

# 使用构造的序列器,最终输出num_classes用于最后的类别判断

x = self.classifier(x)

return x

# 初始化权重

def _initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')

if m.bias is not None:

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.BatchNorm2d):

nn.init.constant_(m.weight, 1)

nn.init.constant_(m.bias, 0)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01)

nn.init.constant_(m.bias, 0)

# 构造卷积层的实现函数

def make_layers(cfg, batch_normal=False):

layers = []

# 初始通道数为3,假设输入的图片为RGB图

in_channels = 3

for v in cfg:

# 如果当前是池化层

if v == 'M':

layers += [nn.MaxPool2d(kernel_size=2, stride=2)]

# 如果是卷积层+(BN)+ReLU

else:

# in_channels:输入通道数

# v:输出通道数

# kernel_size:卷积核大小

# padding:边缘补数

conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)

if batch_normal: # 如果要进行批处理规范化,即添加BN层

layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(True)]

else:

layers += [conv2d, nn.ReLU(True)]

# 更新通道数

in_channels = v

# 返回卷积部分的序列器

return nn.Sequential(*layers)

def vgg16(**kwargs):

model = VGG16(make_layers(cfg['D'], batch_normal=False), **kwargs)

return model

UNet

参考讲解:链接

网络结构

顾名思义,UNet就是一个U形结构,通过卷积、下采样、上采样来实现网络结构。

1.卷积

把UNet分成一个个小模块来看,发现每一小模块都有连续的两次卷积

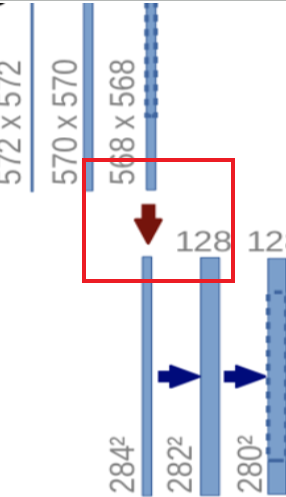

2.下采样

下采样就是一次最大池化操作,再连接两次卷积操作。

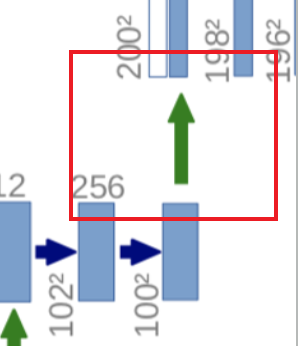

3.上采样

上采样过程包括常规的上采样操作,和特征融合。特征融合就相当于把两个特征层摞在一起,合成一个层。当两个特征层的大小不一致的时候,有两种处理方法:一种是将大的层裁剪小;另一种是将小的层填充大。UNet采用的是填充的方法,即填充尺寸较小的层。

4.最后一次卷积

最后输出的时候,UNet需要根据num_classes整合输出通道,即需要最后进行一次卷积操作。

代码实现

使用pytorch实现

import torch

import torch.nn as nn

from torch.nn import functional as F

# 连续两次卷积模块

class DoubleConv(nn.Module):

def __init__(self, in_channels, out_channels):

super(DoubleConv, self).__init__()

# 构造序列器

self.double_conv = nn.Sequential(

# in_channels:输入通道数

# out_channels:输出通道数

# kernel_size:卷积核大小

# padding:边缘填充,此处是用0填充

nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=0),

# 添加BN层

nn.BatchNorm2d(out_channels),

nn.ReLU(True),

nn.Conv2d(out_channels, out_channels, kernel_size=3, padding=0),

nn.BatchNorm2d(out_channels),

nn.ReLU(True)

)

def forward(self, x):

return self.double_conv(x)

class DownSampling(nn.Module):

def __init__(self, in_channels, out_channels):

super(DownSampling, self).__init__()

# 执行一次最大池化,连接DoubleConv模块

self.maxpool_to_conv = nn.Sequential(

nn.MaxPool2d(2),

DoubleConv(in_channels, out_channels)

)

def forward(self, x):

return self.maxpool_to_conv(x)

class UpSampling(nn.Module):

def __init__(self, in_channels, out_channels, bilinear=True):

super(UpSampling, self).__init__()

if bilinear:

# 采用双线性插值的方法进行上采样

self.up = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True)

else:

# 采用反卷积进行上采样

self.up = nn.ConvTranspose2d(in_channels, in_channels//2, kernel_size=2, stride=2)

self.conv = DoubleConv(in_channels, out_channels)

# inputs1:上采样的数据(对应图中绿色箭头传来的数据)

# inputs2:特征融合的数据(对应图中灰色箭头传来的数据)

def forward(self, inputs1, inputs2):

# 进行一次up操作

inputs1 = self.up(inputs1)

# 进行特征融合

# 首先对较小的层进行填充

diffY = torch.tensor([inputs2.size()[2] - inputs1.size()[2]])

diffX = torch.tensor([inputs2.size()[3] - inputs1.size()[3]])

inputs1 = F.pad(inputs1, [diffX // 2, diffX - diffX // 2,

diffY // 2, diffY - diffY // 2])

# 得到融合后的层

outputs = torch.cat([inputs2, inputs1], dim=1)

# 最后连接DoubleConv模块

return self.conv(outputs)

class LastConv(nn.Module):

def __init__(self, in_channels, out_channels):

super(LastConv, self).__init__()

self.conv = nn.Conv2d(in_channels, out_channels, kernel_size=1)

def forward(self, x):

return self.conv(x)

class UNet(nn.Module):

def __init__(self, in_channels, num_classes, bilinear=False):

super(UNet, self).__init__()

self.in_channels = in_channels

self.num_classes = num_classes

self.bilinear = bilinear

self.inputs = DoubleConv(in_channels, 64)

self.down_1 = DownSampling(64, 128)

self.down_2 = DownSampling(128, 256)

self.down_3 = DownSampling(256, 512)

self.down_4 = DownSampling(512, 1024)

self.up_1 = UpSampling(1024, 512, self.bilinear)

self.up_2 = UpSampling(512, 256, self.bilinear)

self.up_3 = UpSampling(256, 128, self.bilinear)

self.up_4 = UpSampling(128, 64, self.bilinear)

self.outputs = LastConv(64, num_classes)

def forward(self, x):

# down部分

x1 = self.inputs(x) # 输入图像x经过连续两次卷积得到的第一个特征层x1

x2 = self.down_1(x1) # x1进行一次下采样后得到的第二个特征层x2,注意下采样操作包括最大池化和DoubleConv

x3 = self.down_2(x2) # x2进行一次下采样后得到的第三个特征层x3

x4 = self.down_3(x3) # x3进行一次下采样后得到的第四个特征层x4

x5 = self.down_4(x4) # x4进行一次下采样后得到的第五个特征层x5

# up部分

x6 = self.up_1(x5, x4) # 特征层x5,x4进行一次上采样得到层x6,注意上采样操作包括上采样、层融合、卷积

x7 = self.up_2(x6, x3) # x6和x3进行一次上采样得到层x7

x8 = self.up_3(x7, x2) # x7和x2进行一次上采样得到层x8

x = self.up_1(x8, x1) # x8和x1进行一次上采样得到层x

# last_conv部分

outputs = self.outputs(x) # 层x进行一次1x1的卷积得到最后的输出

return outputs

VGG16 + UNet

由于UNet的特征提取部分(对应网络的左半部分)和VGG16的结构相似,可以用VGG16_upsampling的方式来实现UNet,这样可以用预训练的成熟模型来加速整个UNet的训练。(此处可以参考迁移学习)

代码实现

可以通过在函数中添加pretrained参数来设置是否采用预训练模型,网上有训练好的VGG16的权重文件:链接

# 改写函数

def vgg16(**kwargs):

model = VGG16(make_layers(cfg['D'], batch_normal=False), **kwargs)

del model.classifier # UNet中没用到最后的全连接层

return model

class UNet(nn.Module):

def __init__(self, in_channels, num_classes, bilinear=False):

super(UNet, self).__init__()

self.in_channels = in_channels

self.num_classes = num_classes

self.bilinear = bilinear

self.vgg = vgg16()

self.up_1 = UpSampling(1024, 512, self.bilinear)

self.up_2 = UpSampling(512, 256, self.bilinear)

self.up_3 = UpSampling(256, 128, self.bilinear)

self.up_4 = UpSampling(128, 64, self.bilinear)

self.outputs = LastConv(64, num_classes)

def forward(self, x):

# down部分

[x1, x2, x3, x4, x5] = self.vgg.forward(x)

# up部分

x6 = self.up_1(x5, x4) # 特征层x5,x4进行一次上采样得到层x6,注意上采样操作包括上采样、层融合、卷积

x7 = self.up_2(x6, x3) # x6和x3进行一次上采样得到层x7

x8 = self.up_3(x7, x2) # x7和x2进行一次上采样得到层x8

x = self.up_1(x8, x1) # x8和x1进行一次上采样得到层x

# last_conv部分

outputs = self.outputs(x) # 层x进行一次1x1的卷积得到最后的输出

return outputs