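A few words to begin:

- Until now I only skimmed code and never dug in like this, so even after a long time studying deep learning and Python I never developed real programming ability;

- This time I threw myself in completely and worked through every piece of code until I understood it, including the underlying math (still quite shallow at this point) and what each statement does. It helped that the pandemic lockdown kept me in my dorm room: I had a settled mind, plenty of time, and, most importantly, surroundings free of distractions.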

Complete code:

    import matplotlib.pyplot as plt

    # Training data generated by y = 2x
    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    w = 1.0  # initial guess for the weight

    def forward(x):
        # Linear model without bias: y_hat = x * w
        return x * w

    def cost(xs, ys):
        # Mean squared error over the whole dataset
        cost = 0
        for x, y in zip(xs, ys):
            y_hat = forward(x)
            cost += (y_hat - y) ** 2
        return cost / len(xs)

    def gradient(xs, ys):
        # Derivative of the mean squared error with respect to w
        grad = 0
        for x, y in zip(xs, ys):
            grad += 2 * x * (x * w - y)
        return grad / len(xs)

    print('Predict (before training)', 4, forward(4))

    epoch_list = []
    cost_list = []

    for epoch in range(100):
        cost_val = cost(x_data, y_data)
        grad_val = gradient(x_data, y_data)
        w -= 0.01 * grad_val  # learning rate 0.01
        print('Epoch:', epoch, 'w=', w, 'loss=', cost_val)
        cost_list.append(cost_val)
        epoch_list.append(cost_val)  # bug: appends the cost, not the epoch (discussed below)

    print('Predict (after training)', 4, forward(4))

    plt.plot(epoch_list, cost_list)
    plt.xlabel('epoch')
    plt.ylabel('cost')
    plt.show()

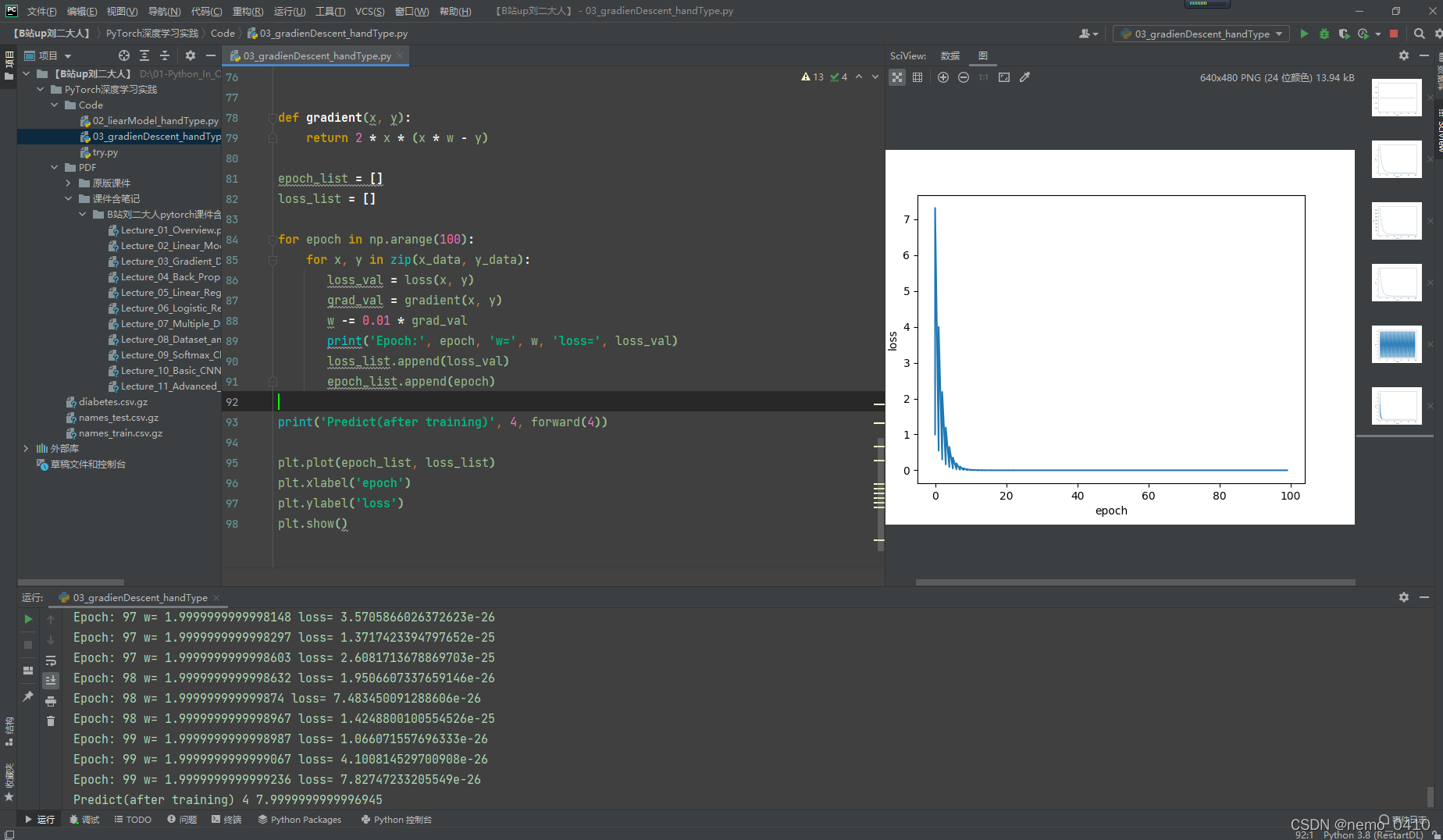

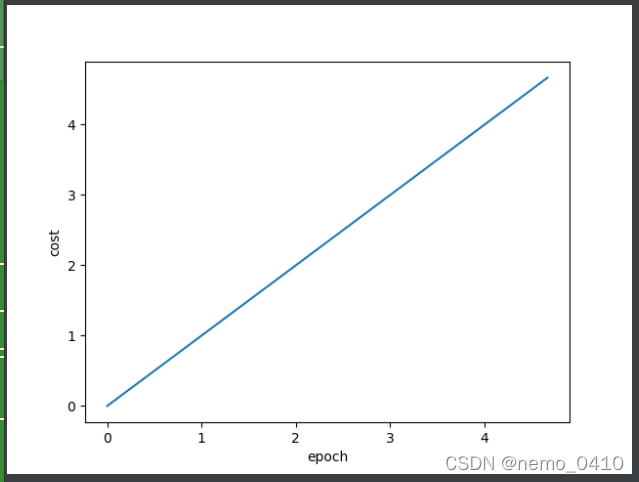

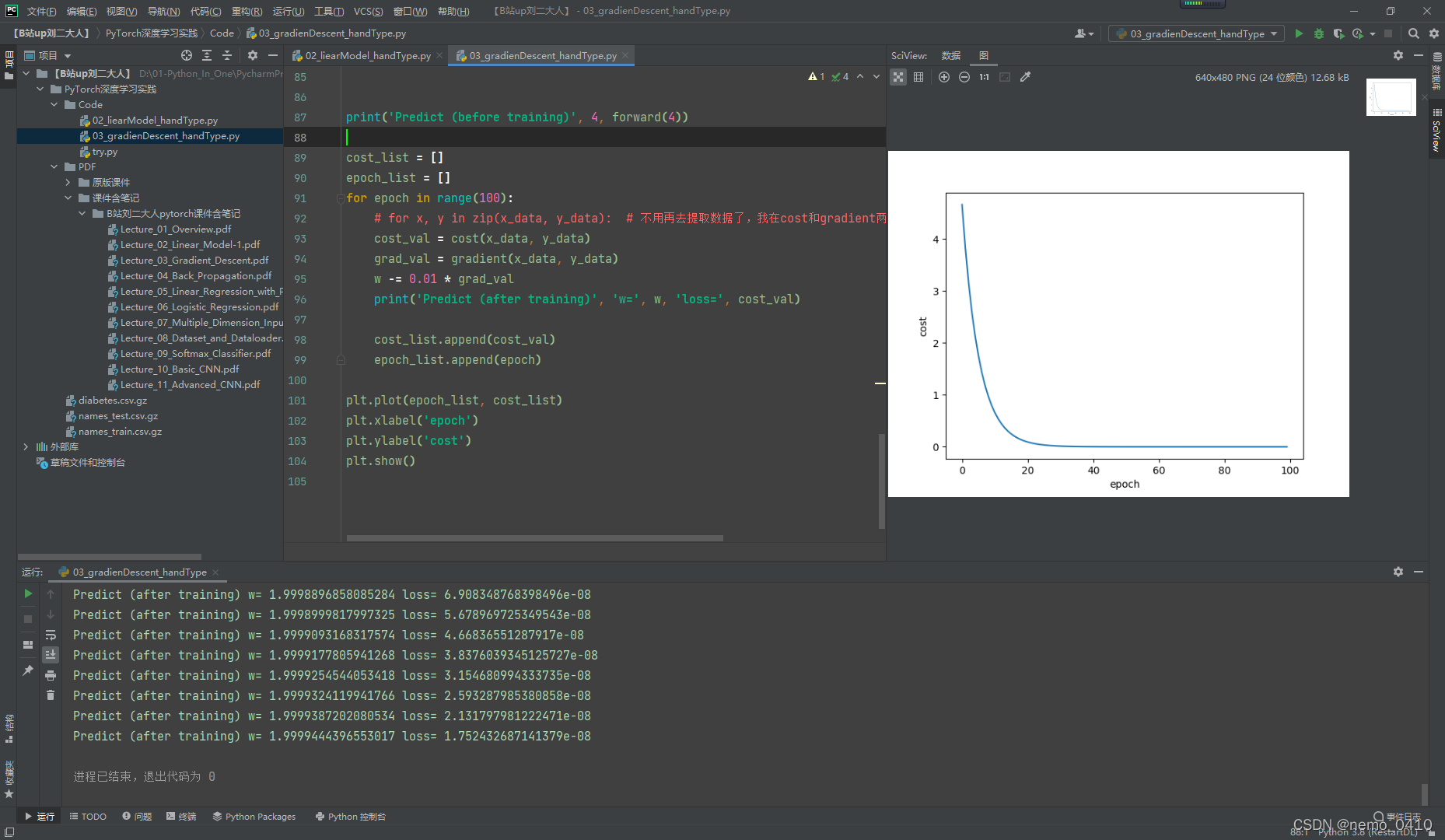

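Run output:

(After comparing later, I found that this figure is wrong: it is not the training-loss curve with epoch on the horizontal axis that I wanted. See the correct result further down.)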
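For reference, the `cost` and `gradient` functions in the code above appear to implement the mean squared error of the model $\hat{y} = x \cdot w$ and its derivative with respect to $w$. The symbols $N$ (number of samples) and $\alpha$ (learning rate, 0.01 in the code) are notation introduced here, not taken from the original post:

$$
\mathrm{cost}(w) = \frac{1}{N}\sum_{n=1}^{N}\left(x_n w - y_n\right)^2,
\qquad
\frac{\partial\,\mathrm{cost}}{\partial w} = \frac{1}{N}\sum_{n=1}^{N} 2\,x_n\left(x_n w - y_n\right),
\qquad
w \leftarrow w - \alpha\,\frac{\partial\,\mathrm{cost}}{\partial w}
$$

As a hand check of the first epoch with $w = 1$: the cost is $(1 + 4 + 9)/3 = 14/3 \approx 4.667$, the gradient is $(-2 - 8 - 18)/3 = -28/3 \approx -9.333$, and the updated weight is $1 + 0.01 \cdot 9.333 \approx 1.0933$.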

Errors in the version I wrote from memory:

    import matplotlib.pyplot as plt

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    w = 1.0

    def forward(x):
        return x * w

    def cost(xs, ys):  # this function and the one below take the whole datasets x_data and y_data, not a single sample as in the previous file
        cost = 0
        for x, y in zip(xs, ys):
            y_hat = forward(x)
            cost += (y_hat - y) ** 2
        return cost / len(xs)

    def gradient(xs, ys):  # this function and the one above take the whole datasets x_data and y_data, not a single sample as in the previous file
        grad = 0
        for x, y in zip(xs, ys):
            grad += 2 * x * (x * w - y)
        return grad / len(xs)

    print('Predict (before training)', 4, forward(4))

    cost_list = []
    grad_list = []

    for epoch in range(100):
        for x, y in zip(x_data, y_data):  # no need to extract samples here; cost and gradient already loop over the dataset
            cost_val = cost(x, y)      # error: passes single values where a dataset is expected
            grad_val = gradient(x, y)  # error: same problem
            w -= 0.01 * grad_val
        print('Predict (after training)', 'w=', w, 'loss=', cost_val)
        cost_list.append(cost_val)
        grad_list.append(grad_val)

    plt.plot(epoch, cost_list)  # error: the x-axis should be a list of epoch values
    plt.xlabel('epoch')
    plt.ylabel('cost')
    plt.show()

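- In the training loop there is no need to extract samples from `x_data` and `y_data` again; just pass in the whole dataset;

- The reason: `cost` and `gradient` already iterate over the dataset internally;

- My reproduction of the teacher's code also had a mistake: the horizontal axis was wrong. It should hold the `epoch` values, not `cost_val` as I originally wrote;

- One mistake is what made my run fail:

  - What I pass to `cost` and `gradient` is no longer a single sample, as in the previous post, but the whole dataset;

- I also got things wrong inside `cost` and `gradient`: I wrote `=` instead of `+=`, which made the curve descend only very gently;

- `+=` is needed because `cost` and `grad` must be accumulated over the samples, not overwritten; I had not fully understood the principle;

- `return grad / len(xs)`: the indentation was wrong here, which made the final curve wrong. It is a very small detail: `return` must sit at the same indentation level as the `for` loop. As the last statement of the function, it needs only one level of indentation under `def`.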
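The last two mistakes in the list can be reproduced in isolation. The sketch below is only an illustration, not the code from the post: the function names `cost_accumulate`, `cost_overwrite`, and `cost_early_return` are hypothetical, and `w` is passed as an argument (instead of being a global) so that the three variants can be compared at the same weight.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def forward(x, w):
    # Same linear model as in the post, with w passed explicitly
    return x * w

def cost_accumulate(xs, ys, w):
    # Correct: += sums the squared errors of all samples
    total = 0.0
    for x, y in zip(xs, ys):
        total += (forward(x, w) - y) ** 2
    return total / len(xs)

def cost_overwrite(xs, ys, w):
    # Mistake 1: = keeps only the squared error of the last sample
    total = 0.0
    for x, y in zip(xs, ys):
        total = (forward(x, w) - y) ** 2
    return total / len(xs)

def cost_early_return(xs, ys, w):
    # Mistake 2: return is indented into the loop,
    # so the function exits after the first sample
    total = 0.0
    for x, y in zip(xs, ys):
        total += (forward(x, w) - y) ** 2
        return total / len(xs)

mse_ok = cost_accumulate(x_data, y_data, 1.0)
mse_overwrite = cost_overwrite(x_data, y_data, 1.0)
mse_early = cost_early_return(x_data, y_data, 1.0)
print(mse_ok, mse_overwrite, mse_early)
```

At `w = 1.0` the squared errors of the three samples are 1, 4, and 9, so the correct version averages all three, the `=` version divides only the last one by 3, and the early-return version divides only the first one by 3. Both broken variants run without raising an error, which is why these mistakes are easy to miss.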

Code after the fixes:

    import matplotlib.pyplot as plt

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    w = 1.0

    def forward(x):
        return x * w

    def cost(xs, ys):
        # Mean squared error over the whole dataset
        cost = 0
        for x, y in zip(xs, ys):
            y_hat = forward(x)
            cost += (y_hat - y) ** 2
        return cost / len(xs)

    def gradient(xs, ys):
        # Gradient of the mean squared error with respect to w
        grad = 0
        for x, y in zip(xs, ys):
            grad += 2 * x * (x * w - y)
        return grad / len(xs)

    print('Predict (before training)', 4, forward(4))

    cost_list = []
    epoch_list = []

    for epoch in range(100):
        # for x, y in zip(x_data, y_data):  # no need to extract samples here; cost and gradient already loop over the dataset
        cost_val = cost(x_data, y_data)
        grad_val = gradient(x_data, y_data)
        w -= 0.01 * grad_val
        print('Epoch:', epoch, 'w=', w, 'loss=', cost_val)
        cost_list.append(cost_val)
        epoch_list.append(epoch)  # x-axis values: the epoch index

    print('Predict (after training)', 4, forward(4))

    plt.plot(epoch_list, cost_list)
    plt.xlabel('epoch')
    plt.ylabel('cost')
    plt.show()

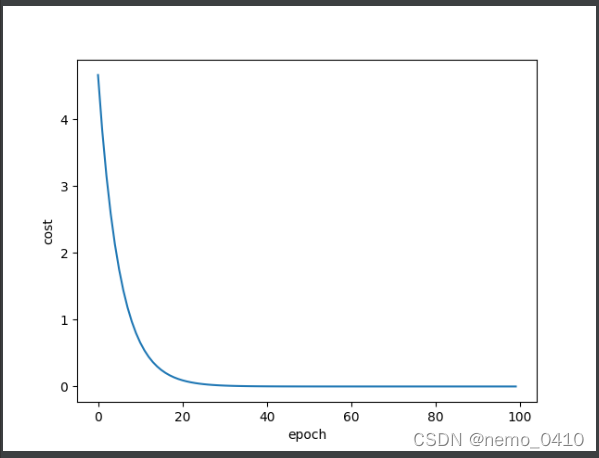

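Result after the fixes:

The code above computes the update over the whole dataset; the version below updates the weight one sample at a time: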

    import numpy as np
    import matplotlib.pyplot as plt

    x_data = [1.0, 2.0, 3.0]
    y_data = [2.0, 4.0, 6.0]

    w = 1.0

    def forward(x):
        return x * w

    def loss(x, y):
        # Squared error of a single sample
        y_hat = forward(x)
        return (y_hat - y) ** 2

    def gradient(x, y):
        # Gradient of the single-sample loss with respect to w
        return 2 * x * (x * w - y)

    epoch_list = []
    loss_list = []

    for epoch in np.arange(100):
        for x, y in zip(x_data, y_data):
            loss_val = loss(x, y)  # only used for plotting; not part of the weight update
            grad_val = gradient(x, y)
            w -= 0.01 * grad_val  # one weight update per sample
        print('Epoch:', epoch, 'w=', w, 'loss=', loss_val)
        loss_list.append(loss_val)
        epoch_list.append(epoch)

    print('Predict(after training)', 4, forward(4))

    plt.plot(epoch_list, loss_list)
    plt.xlabel('epoch')
    plt.ylabel('loss')
    plt.show()

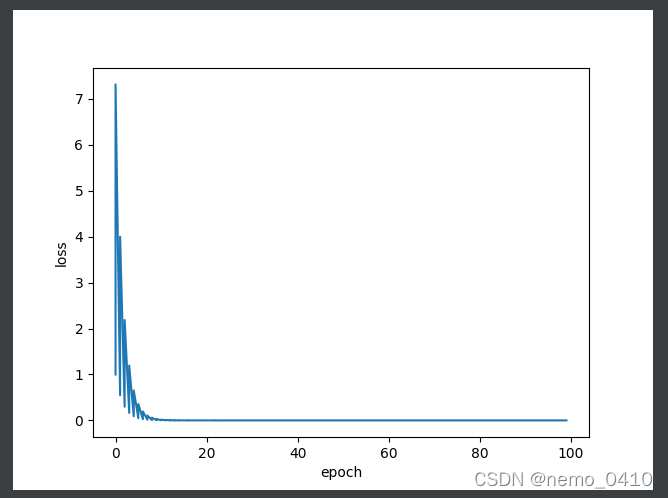

Run output:
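To compare the two update schemes side by side, here is a minimal sketch under a few assumptions: the helper names `batch_epoch` and `per_sample_epoch` are hypothetical, and `w` is passed in and returned instead of being modified as a global. The data and the learning rate 0.01 are the ones used in the post.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
LR = 0.01  # learning rate used throughout the post

def batch_epoch(w, xs, ys, lr=LR):
    # Whole-dataset scheme: average the gradient, then update w once
    grad = sum(2 * x * (x * w - y) for x, y in zip(xs, ys)) / len(xs)
    return w - lr * grad

def per_sample_epoch(w, xs, ys, lr=LR):
    # Per-sample scheme: update w after every sample,
    # so later samples see the already-updated weight
    for x, y in zip(xs, ys):
        w -= lr * 2 * x * (x * w - y)
    return w

# One epoch from the same starting point
w_batch_1 = batch_epoch(1.0, x_data, y_data)
w_sgd_1 = per_sample_epoch(1.0, x_data, y_data)
print('after 1 epoch:', w_batch_1, w_sgd_1)

# 100 epochs, as in the post
w_b = w_s = 1.0
for _ in range(100):
    w_b = batch_epoch(w_b, x_data, y_data)
    w_s = per_sample_epoch(w_s, x_data, y_data)
print('after 100 epochs:', w_b, w_s)
```

After one epoch the whole-dataset scheme has made one step (to roughly 1.093), while the per-sample scheme has made three steps (to roughly 1.261). On this tiny, noise-free dataset both approach the true weight 2 within 100 epochs.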