mmdetection open-source code link:

Chapter 1: Architecture Design and Implementation

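Configuration files:

Each model folder under configs contains a metafile.yml that lists the different implementations within one series (Collections), for example different backbones and necks, together with links to the corresponding weights.

Each model's config can be divided into the following blocks:

model: describes the model structure (backbone, neck, head) and its parameters. The loss is configured inside the head. get_targets (which turns annotations into feature-shaped targets) and decode (which turns features back into the final output form) are both defined directly as methods of the head, and get_targets is called from within the loss method.

schedule: specifies the optimizer and the learning-rate policy.

dataset: train_pipeline and test_pipeline, i.e. the transforms applied at training and test time.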
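As a rough illustration of the three blocks, a config could look like the Python sketch below. The loss entries mirror the CenterNetHead defaults; every other value (backbone depth, channel counts, learning rate, transform arguments) is a placeholder chosen for illustration and is not copied from a specific mmdetection config file.

```python
# Illustrative sketch of the model / schedule / dataset split.
# All concrete values are assumptions, not taken from a real config.

# model: structure and parameters; losses live inside the head.
model = dict(
    type='CenterNet',
    backbone=dict(type='ResNet', depth=18),
    neck=dict(type='CTResNetNeck'),  # remaining neck arguments omitted here
    bbox_head=dict(
        type='CenterNetHead',
        num_classes=80,
        in_channel=64,
        feat_channel=64,
        loss_center_heatmap=dict(type='GaussianFocalLoss', loss_weight=1.0),
        loss_wh=dict(type='L1Loss', loss_weight=0.1),
        loss_offset=dict(type='L1Loss', loss_weight=1.0)))

# schedule: optimizer and learning-rate policy.
optimizer = dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001)
lr_config = dict(policy='step', step=[8, 11])

# dataset: the transforms applied at train and test time.
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True),
    dict(type='RandomFlip', flip_ratio=0.5),
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
]
data = dict(
    train=dict(pipeline=train_pipeline),
    test=dict(pipeline=test_pipeline))
```

Because the config is plain Python dicts, the `type` key of each dict is all that is needed to look up the class to build; the remaining keys become constructor arguments.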

mmdet/models

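Chapter 2: Network Structure Design -- mmdetection/mmdet/models

At the model level, the detectors are the Architecture: they define the overall detection framework. The base class of all detectors is BaseDetector, which defines the interface. This includes the forward entry point (which dispatches to forward_train or forward_test), a few properties (whether the model has a neck, whether the ROI head contains a shared head, whether it has a mask branch, and so on), the extract_feats interface, and the show_result method.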

    class BaseDetector(BaseModule, metaclass=ABCMeta):
        """Base class for detectors."""

        @auto_fp16(apply_to=('img', ))
        def forward(self, img, img_metas, return_loss=True, **kwargs):
            """Calls either :func:`forward_train` or :func:`forward_test` depending
            on whether ``return_loss`` is ``True``.

            Note this setting will change the expected inputs. When
            ``return_loss=True``, img and img_meta are single-nested (i.e. Tensor
            and List[dict]), and when ``return_loss=False``, img and img_meta
            should be double nested (i.e. List[Tensor], List[List[dict]]), with
            the outer list indicating test time augmentations.
            """
            if torch.onnx.is_in_onnx_export():
                assert len(img_metas) == 1
                return self.onnx_export(img[0], img_metas[0])

            if return_loss:
                return self.forward_train(img, img_metas, **kwargs)
            else:
                return self.forward_test(img, img_metas, **kwargs)

        def forward_train(self, imgs, img_metas, **kwargs):
            """
            Args:
                img (Tensor): of shape (N, C, H, W) encoding input images.
                    Typically these should be mean centered and std scaled.
                img_metas (list[dict]): List of image info dict where each dict
                    has: 'img_shape', 'scale_factor', 'flip', and may also contain
                    'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.
                    For details on the values of these keys, see
                    :class:`mmdet.datasets.pipelines.Collect`.
                kwargs (keyword arguments): Specific to concrete implementation.
            """
            # NOTE the batched image size information may be useful, e.g.
            # in DETR, this is needed for the construction of masks, which is
            # then used for the transformer_head.
            batch_input_shape = tuple(imgs[0].size()[-2:])
            for img_meta in img_metas:
                img_meta['batch_input_shape'] = batch_input_shape

        def forward_test(self, imgs, img_metas, **kwargs):
            """
            Args:
                imgs (List[Tensor]): the outer list indicates test-time
                    augmentations and inner Tensor should have a shape NxCxHxW,
                    which contains all images in the batch.
                img_metas (List[List[dict]]): the outer list indicates test-time
                    augs (multiscale, flip, etc.) and the inner list indicates
                    images in a batch.
            """
            for var, name in [(imgs, 'imgs'), (img_metas, 'img_metas')]:
                if not isinstance(var, list):
                    raise TypeError(f'{name} must be a list, but got {type(var)}')

            num_augs = len(imgs)
            if num_augs != len(img_metas):
                raise ValueError(f'num of augmentations ({len(imgs)}) '
                                 f'!= num of image meta ({len(img_metas)})')

            # NOTE the batched image size information may be useful, e.g.
            # in DETR, this is needed for the construction of masks, which is
            # then used for the transformer_head.
            for img, img_meta in zip(imgs, img_metas):
                batch_size = len(img_meta)
                for img_id in range(batch_size):
                    img_meta[img_id]['batch_input_shape'] = tuple(img.size()[-2:])

            if num_augs == 1:
                # proposals (List[List[Tensor]]): the outer list indicates
                # test-time augs (multiscale, flip, etc.) and the inner list
                # indicates images in a batch.
                # The Tensor should have a shape Px4, where P is the number of
                # proposals.
                if 'proposals' in kwargs:
                    kwargs['proposals'] = kwargs['proposals'][0]
                return self.simple_test(imgs[0], img_metas[0], **kwargs)
            else:
                assert imgs[0].size(0) == 1, 'aug test does not support ' \
                                             'inference with batch size ' \
                                             f'{imgs[0].size(0)}'
                # TODO: support test augmentation for predefined proposals
                assert 'proposals' not in kwargs
                return self.aug_test(imgs, img_metas, **kwargs)

Two classes are derived from this base: SingleStageDetector (which calls backbone, neck, and head) and TwoStageDetector (which calls backbone, neck, rpn_head, and roi_head).
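The dispatch in BaseDetector.forward, and the difference between single-nested training inputs and double-nested test inputs, can be tried out with a torch-free toy. `ToyDetector` and its return values are hypothetical stand-ins; only the branching mirrors the excerpt.

```python
class ToyDetector:
    """Stand-in for a detector; mirrors only the train/test dispatch."""

    def forward(self, img, img_metas, return_loss=True, **kwargs):
        if return_loss:
            return self.forward_train(img, img_metas, **kwargs)
        return self.forward_test(img, img_metas, **kwargs)

    def forward_train(self, img, img_metas, **kwargs):
        # Single-nested inputs: one batch plus one list of meta dicts.
        return {'loss_toy': float(len(img_metas))}

    def forward_test(self, imgs, img_metas, **kwargs):
        # Double-nested inputs: the outer list indexes test-time augmentations.
        for var, name in [(imgs, 'imgs'), (img_metas, 'img_metas')]:
            if not isinstance(var, list):
                raise TypeError(f'{name} must be a list, but got {type(var)}')
        if len(imgs) != len(img_metas):
            raise ValueError('num of augmentations != num of image meta')
        mode = 'simple_test' if len(imgs) == 1 else 'aug_test'
        return mode, len(img_metas[0])


det = ToyDetector()
# Training: a batch of two images, metas as a flat list.
train_out = det.forward('batch', [{}, {}])
# Testing without augmentation: everything wrapped in one more list.
test_out = det.forward(['batch'], [[{}, {}]], return_loss=False)
# Testing with two augmentations of a single image.
aug_out = det.forward(['orig', 'flipped'], [[{}], [{}]], return_loss=False)
```

Passing the training-style flat inputs with `return_loss=False` raises a `TypeError`, which is the same guard the real `forward_test` applies.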

SingleStageDetector is as follows:

    @DETECTORS.register_module()
    class SingleStageDetector(BaseDetector):
        """Base class for single-stage detectors.

        Single-stage detectors directly and densely predict bounding boxes on the
        output features of the backbone+neck.
        """

        def __init__(self,
                     backbone,
                     neck=None,
                     bbox_head=None,
                     train_cfg=None,
                     test_cfg=None,
                     pretrained=None,
                     init_cfg=None):
            super(SingleStageDetector, self).__init__(init_cfg)
            if pretrained:
                warnings.warn('DeprecationWarning: pretrained is deprecated, '
                              'please use "init_cfg" instead')
                backbone.pretrained = pretrained
            self.backbone = build_backbone(backbone)
            if neck is not None:
                self.neck = build_neck(neck)
            bbox_head.update(train_cfg=train_cfg)
            bbox_head.update(test_cfg=test_cfg)
            self.bbox_head = build_head(bbox_head)
            self.train_cfg = train_cfg
            self.test_cfg = test_cfg

        def forward_train(self,
                          img,
                          img_metas,
                          gt_bboxes,
                          gt_labels,
                          gt_bboxes_ignore=None):
            """
            Args:
                img (Tensor): Input images of shape (N, C, H, W).
                    Typically these should be mean centered and std scaled.
                img_metas (list[dict]): A List of image info dict where each dict
                    has: 'img_shape', 'scale_factor', 'flip', and may also contain
                    'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.
                    For details on the values of these keys see
                    :class:`mmdet.datasets.pipelines.Collect`.
                gt_bboxes (list[Tensor]): Each item are the truth boxes for each
                    image in [tl_x, tl_y, br_x, br_y] format.
                gt_labels (list[Tensor]): Class indices corresponding to each box
                gt_bboxes_ignore (None | list[Tensor]): Specify which bounding
                    boxes can be ignored when computing the loss.

            Returns:
                dict[str, Tensor]: A dictionary of loss components.
            """
            super(SingleStageDetector, self).forward_train(img, img_metas)
            x = self.extract_feat(img)
            losses = self.bbox_head.forward_train(x, img_metas, gt_bboxes,
                                                  gt_labels, gt_bboxes_ignore)
            return losses

        def simple_test(self, img, img_metas, rescale=False):
            """Test function without test-time augmentation.

            Args:
                img (torch.Tensor): Images with shape (N, C, H, W).
                img_metas (list[dict]): List of image information.
                rescale (bool, optional): Whether to rescale the results.
                    Defaults to False.

            Returns:
                list[list[np.ndarray]]: BBox results of each image and classes.
                    The outer list corresponds to each image. The inner list
                    corresponds to each class.
            """
            feat = self.extract_feat(img)
            results_list = self.bbox_head.simple_test(
                feat, img_metas, rescale=rescale)
            bbox_results = [
                bbox2result(det_bboxes, det_labels, self.bbox_head.num_classes)
                for det_bboxes, det_labels in results_list
            ]
            return bbox_results

TwoStageDetector is as follows:

    @DETECTORS.register_module()
    class TwoStageDetector(BaseDetector):
        """Base class for two-stage detectors.

        Two-stage detectors typically consisting of a region proposal network and a
        task-specific regression head.
        """

        def __init__(self,
                     backbone,
                     neck=None,
                     rpn_head=None,
                     roi_head=None,
                     train_cfg=None,
                     test_cfg=None,
                     pretrained=None,
                     init_cfg=None):
            super(TwoStageDetector, self).__init__(init_cfg)
            if pretrained:
                warnings.warn('DeprecationWarning: pretrained is deprecated, '
                              'please use "init_cfg" instead')
                backbone.pretrained = pretrained
            self.backbone = build_backbone(backbone)

            if neck is not None:
                self.neck = build_neck(neck)

            if rpn_head is not None:
                rpn_train_cfg = train_cfg.rpn if train_cfg is not None else None
                rpn_head_ = rpn_head.copy()
                rpn_head_.update(train_cfg=rpn_train_cfg, test_cfg=test_cfg.rpn)
                self.rpn_head = build_head(rpn_head_)

            if roi_head is not None:
                # update train and test cfg here for now
                # TODO: refactor assigner & sampler
                rcnn_train_cfg = train_cfg.rcnn if train_cfg is not None else None
                roi_head.update(train_cfg=rcnn_train_cfg)
                roi_head.update(test_cfg=test_cfg.rcnn)
                roi_head.pretrained = pretrained
                self.roi_head = build_head(roi_head)

            self.train_cfg = train_cfg
            self.test_cfg = test_cfg

        def forward_train(self,
                          img,
                          img_metas,
                          gt_bboxes,
                          gt_labels,
                          gt_bboxes_ignore=None,
                          gt_masks=None,
                          proposals=None,
                          **kwargs):
            """
            Args:
                img (Tensor): of shape (N, C, H, W) encoding input images.
                    Typically these should be mean centered and std scaled.
                img_metas (list[dict]): list of image info dict where each dict
                    has: 'img_shape', 'scale_factor', 'flip', and may also contain
                    'filename', 'ori_shape', 'pad_shape', and 'img_norm_cfg'.
                    For details on the values of these keys see
                    `mmdet/datasets/pipelines/formatting.py:Collect`.
                gt_bboxes (list[Tensor]): Ground truth bboxes for each image with
                    shape (num_gts, 4) in [tl_x, tl_y, br_x, br_y] format.
                gt_labels (list[Tensor]): class indices corresponding to each box
                gt_bboxes_ignore (None | list[Tensor]): specify which bounding
                    boxes can be ignored when computing the loss.
                gt_masks (None | Tensor) : true segmentation masks for each box
                    used if the architecture supports a segmentation task.
                proposals : override rpn proposals with custom proposals. Use when
                    `with_rpn` is False.

            Returns:
                dict[str, Tensor]: a dictionary of loss components
            """
            x = self.extract_feat(img)

            losses = dict()

            # RPN forward and loss
            if self.with_rpn:
                proposal_cfg = self.train_cfg.get('rpn_proposal',
                                                  self.test_cfg.rpn)
                rpn_losses, proposal_list = self.rpn_head.forward_train(
                    x,
                    img_metas,
                    gt_bboxes,
                    gt_labels=None,
                    gt_bboxes_ignore=gt_bboxes_ignore,
                    proposal_cfg=proposal_cfg,
                    **kwargs)
                losses.update(rpn_losses)
            else:
                proposal_list = proposals

            roi_losses = self.roi_head.forward_train(x, img_metas, proposal_list,
                                                     gt_bboxes, gt_labels,
                                                     gt_bboxes_ignore, gt_masks,
                                                     **kwargs)
            losses.update(roi_losses)

            return losses

        def simple_test(self, img, img_metas, proposals=None, rescale=False):
            """Test without augmentation."""
            assert self.with_bbox, 'Bbox head must be implemented.'
            x = self.extract_feat(img)
            if proposals is None:
                proposal_list = self.rpn_head.simple_test_rpn(x, img_metas)
            else:
                proposal_list = proposals

            return self.roi_head.simple_test(
                x, proposal_list, img_metas, rescale=rescale)

Most other detector classes, such as yolo, centernet, and faster_rcnn, are derived from these two classes.
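The `@DETECTORS.register_module()` decorator and the `build_*` calls follow a registry pattern: a class is stored under its name and later instantiated from a config dict whose `type` key selects it. The sketch below is a toy re-implementation written for illustration; `Registry`, `ToySingleStage`, and `ToyCenterNet` are hypothetical names, and this is not the actual mmcv code.

```python
class Registry:
    """Minimal name -> class registry (toy version for illustration)."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register_module(self):
        def _register(cls):
            self._modules[cls.__name__] = cls
            return cls
        return _register

    def build(self, cfg):
        cfg = dict(cfg)  # copy so the caller's config is not mutated
        cls = self._modules[cfg.pop('type')]
        return cls(**cfg)


DETECTORS = Registry('detector')


class ToySingleStage:
    """Plays the role of the shared single-stage base class."""

    def __init__(self, backbone, bbox_head, neck=None):
        self.backbone = backbone
        self.neck = neck
        self.bbox_head = bbox_head

    @property
    def with_neck(self):
        return self.neck is not None


@DETECTORS.register_module()
class ToyCenterNet(ToySingleStage):
    """Inherits all behaviour; only the registered name is new."""


cfg = dict(type='ToyCenterNet', backbone='resnet18', bbox_head='center_head')
detector = DETECTORS.build(cfg)
```

This is why a concrete detector can be a near-empty subclass: the shared logic lives in the base class, and the subclass mainly provides a name that config files can refer to.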

Chapter 3: Heads

BaseDenseHead provides the loss interface, and the loss is computed inside forward_train:

    class BaseDenseHead(BaseModule, metaclass=ABCMeta):
        """Base class for DenseHeads."""

        @abstractmethod
        def loss(self, **kwargs):
            """Compute losses of the head."""
            pass

        def forward_train(self,
                          x,
                          img_metas,
                          gt_bboxes,
                          gt_labels=None,
                          gt_bboxes_ignore=None,
                          proposal_cfg=None,
                          **kwargs):
            """
            Args:
                x (list[Tensor]): Features from FPN.
                img_metas (list[dict]): Meta information of each image, e.g.,
                    image size, scaling factor, etc.
                gt_bboxes (Tensor): Ground truth bboxes of the image,
                    shape (num_gts, 4).
                gt_labels (Tensor): Ground truth labels of each box,
                    shape (num_gts,).
                gt_bboxes_ignore (Tensor): Ground truth bboxes to be
                    ignored, shape (num_ignored_gts, 4).
                proposal_cfg (mmcv.Config): Test / postprocessing configuration,
                    if None, test_cfg would be used

            Returns:
                tuple:
                    losses: (dict[str, Tensor]): A dictionary of loss components.
                    proposal_list (list[Tensor]): Proposals of each image.
            """
            outs = self(x)
            if gt_labels is None:
                loss_inputs = outs + (gt_bboxes, img_metas)
            else:
                loss_inputs = outs + (gt_bboxes, gt_labels, img_metas)
            losses = self.loss(*loss_inputs, gt_bboxes_ignore=gt_bboxes_ignore)
            if proposal_cfg is None:
                return losses
            else:
                proposal_list = self.get_bboxes(
                    *outs, img_metas=img_metas, cfg=proposal_cfg)
                return losses, proposal_list

        def simple_test(self, feats, img_metas, rescale=False):
            """Test function without test-time augmentation.

            Args:
                feats (tuple[torch.Tensor]): Multi-level features from the
                    upstream network, each is a 4D-tensor.
                img_metas (list[dict]): List of image information.
                rescale (bool, optional): Whether to rescale the results.
                    Defaults to False.

            Returns:
                list[tuple[Tensor, Tensor]]: Each item in result_list is 2-tuple.
                    The first item is ``bboxes`` with shape (n, 5),
                    where 5 represent (tl_x, tl_y, br_x, br_y, score).
                    The shape of the second tensor in the tuple is ``labels``
                    with shape (n, ).
            """
            return self.simple_test_bboxes(feats, img_metas, rescale=rescale)

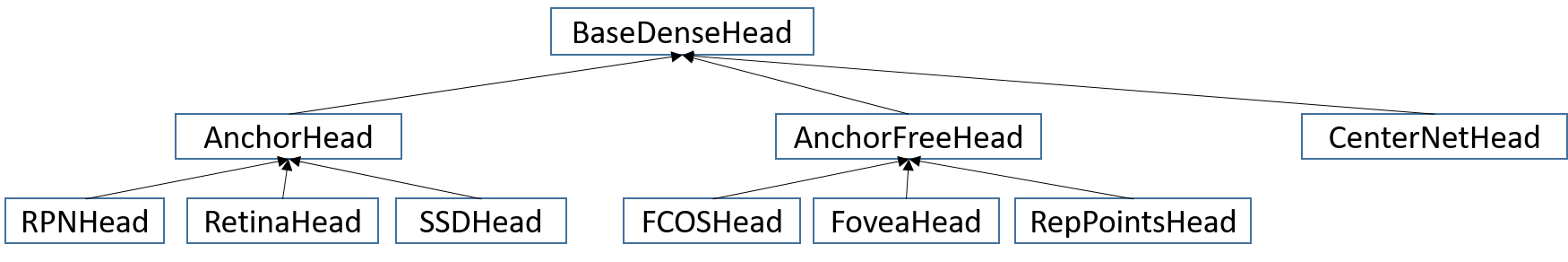

基于该基类,拓展了不同的head,如下图所示:

- ?head的实现中要实现loss,求loss时需要调用实现的get_targert方法获取真值,由真值和推理结果计算loss时不同的loss函数是传参数的。对于anchorhead,在get_target中需要实现box coder,box assigner,box sampler。

- head的实现中还需要实现get_box方法从推理的feature中decode为最终box的结果。
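The division of labour described in the two points above can be sketched with a one-dimensional toy head in plain Python. `ToyPointHead`, `l1_loss`, and the score threshold are hypothetical and exist only to show how loss, get_targets, and get_bboxes relate; real heads operate on tensors and multi-level features.

```python
class ToyPointHead:
    """1-D toy head: one score per cell; targets are 1.0 at ground-truth cells."""

    def __init__(self, loss_fn, score_thr=0.5):
        self.loss_fn = loss_fn      # the loss function is injected as a parameter
        self.score_thr = score_thr

    def get_targets(self, gt_centers, length):
        """Turn annotations (cell indices) into a feature-shaped target."""
        target = [0.0] * length
        for c in gt_centers:
            target[c] = 1.0
        return target

    def loss(self, pred, gt_centers):
        """Build targets first, then compare them with the prediction."""
        target = self.get_targets(gt_centers, len(pred))
        return {'loss_heatmap': self.loss_fn(pred, target)}

    def get_bboxes(self, pred):
        """Decode the prediction into (cell index, score) detections."""
        return [(i, s) for i, s in enumerate(pred) if s >= self.score_thr]


def l1_loss(pred, target):
    """Mean absolute error over all cells."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


head = ToyPointHead(loss_fn=l1_loss)
pred = [0.0, 1.0, 0.0, 0.5]
losses = head.loss(pred, gt_centers=[1])   # training path
detections = head.get_bboxes(pred)         # inference path
```

Swapping `l1_loss` for another callable changes the loss without touching the head, which is the point of passing the loss in as a parameter.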

Taking CenterNet as an example:

    @HEADS.register_module()
    class CenterNetHead(BaseDenseHead, BBoxTestMixin):
        """Objects as Points Head. CenterHead use center_point to indicate object's
        position. Paper link <https://arxiv.org/abs/1904.07850>

        Args:
            in_channel (int): Number of channel in the input feature map.
            feat_channel (int): Number of channel in the intermediate feature map.
            num_classes (int): Number of categories excluding the background
                category.
            loss_center_heatmap (dict | None): Config of center heatmap loss.
                Default: GaussianFocalLoss.
            loss_wh (dict | None): Config of wh loss. Default: L1Loss.
            loss_offset (dict | None): Config of offset loss. Default: L1Loss.
            train_cfg (dict | None): Training config. Useless in CenterNet,
                but we keep this variable for SingleStageDetector. Default: None.
            test_cfg (dict | None): Testing config of CenterNet. Default: None.
            init_cfg (dict or list[dict], optional): Initialization config dict.
                Default: None
        """

        def __init__(self,
                     in_channel,
                     feat_channel,
                     num_classes,
                     loss_center_heatmap=dict(
                         type='GaussianFocalLoss', loss_weight=1.0),
                     loss_wh=dict(type='L1Loss', loss_weight=0.1),
                     loss_offset=dict(type='L1Loss', loss_weight=1.0),
                     train_cfg=None,
                     test_cfg=None,
                     init_cfg=None):
            super(CenterNetHead, self).__init__(init_cfg)
            self.num_classes = num_classes
            self.heatmap_head = self._build_head(in_channel, feat_channel,
                                                 num_classes)
            self.wh_head = self._build_head(in_channel, feat_channel, 2)
            self.offset_head = self._build_head(in_channel, feat_channel, 2)

            self.loss_center_heatmap = build_loss(loss_center_heatmap)
            self.loss_wh = build_loss(loss_wh)
            self.loss_offset = build_loss(loss_offset)

            self.train_cfg = train_cfg
            self.test_cfg = test_cfg
            self.fp16_enabled = False

        def forward(self, feats):
            """Forward features. Notice CenterNet head does not use FPN.

            Args:
                feats (tuple[Tensor]): Features from the upstream network, each is
                    a 4D-tensor.

            Returns:
                center_heatmap_preds (List[Tensor]): center predict heatmaps for
                    all levels, the channels number is num_classes.
                wh_preds (List[Tensor]): wh predicts for all levels, the channels
                    number is 2.
                offset_preds (List[Tensor]): offset predicts for all levels, the
                    channels number is 2.
            """
            return multi_apply(self.forward_single, feats)

        def forward_single(self, feat):
            """Forward feature of a single level.

            Args:
                feat (Tensor): Feature of a single level.

            Returns:
                center_heatmap_pred (Tensor): center predict heatmaps, the
                    channels number is num_classes.
                wh_pred (Tensor): wh predicts, the channels number is 2.
                offset_pred (Tensor): offset predicts, the channels number is 2.
            """
            center_heatmap_pred = self.heatmap_head(feat).sigmoid()
            wh_pred = self.wh_head(feat)
            offset_pred = self.offset_head(feat)
            return center_heatmap_pred, wh_pred, offset_pred

        @force_fp32(apply_to=('center_heatmap_preds', 'wh_preds', 'offset_preds'))
        def loss(self,
                 center_heatmap_preds,
                 wh_preds,
                 offset_preds,
                 gt_bboxes,
                 gt_labels,
                 img_metas,
                 gt_bboxes_ignore=None):
            """Compute losses of the head.

            Args:
                center_heatmap_preds (list[Tensor]): center predict heatmaps for
                    all levels with shape (B, num_classes, H, W).
                wh_preds (list[Tensor]): wh predicts for all levels with
                    shape (B, 2, H, W).
                offset_preds (list[Tensor]): offset predicts for all levels
                    with shape (B, 2, H, W).
                gt_bboxes (list[Tensor]): Ground truth bboxes for each image with
                    shape (num_gts, 4) in [tl_x, tl_y, br_x, br_y] format.
                gt_labels (list[Tensor]): class indices corresponding to each box.
                img_metas (list[dict]): Meta information of each image, e.g.,
                    image size, scaling factor, etc.
                gt_bboxes_ignore (None | list[Tensor]): specify which bounding
                    boxes can be ignored when computing the loss. Default: None

            Returns:
                dict[str, Tensor]: which has components below:
                    - loss_center_heatmap (Tensor): loss of center heatmap.
                    - loss_wh (Tensor): loss of hw heatmap
                    - loss_offset (Tensor): loss of offset heatmap.
            """
            assert len(center_heatmap_preds) == len(wh_preds) == len(
                offset_preds) == 1
            center_heatmap_pred = center_heatmap_preds[0]
            wh_pred = wh_preds[0]
            offset_pred = offset_preds[0]

            target_result, avg_factor = self.get_targets(gt_bboxes, gt_labels,
                                                         center_heatmap_pred.shape,
                                                         img_metas[0]['pad_shape'])

            center_heatmap_target = target_result['center_heatmap_target']
            wh_target = target_result['wh_target']
            offset_target = target_result['offset_target']
            wh_offset_target_weight = target_result['wh_offset_target_weight']

            # Since the channel of wh_target and offset_target is 2, the avg_factor
            # of loss_center_heatmap is always 1/2 of loss_wh and loss_offset.
            loss_center_heatmap = self.loss_center_heatmap(
                center_heatmap_pred, center_heatmap_target, avg_factor=avg_factor)
            loss_wh = self.loss_wh(
                wh_pred,
                wh_target,
                wh_offset_target_weight,
                avg_factor=avg_factor * 2)
            loss_offset = self.loss_offset(
                offset_pred,
                offset_target,
                wh_offset_target_weight,
                avg_factor=avg_factor * 2)
            return dict(
                loss_center_heatmap=loss_center_heatmap,
                loss_wh=loss_wh,
                loss_offset=loss_offset)
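The `avg_factor * 2` passed to loss_wh and loss_offset can be read as averaging per predicted element rather than per object, since each object contributes two values (w and h, or the x and y offsets). The small worked example below assumes that avg_factor counts the ground-truth objects and that the loss is a weighted sum divided by avg_factor; the excerpt shows neither detail, so treat `weighted_l1` as a hypothetical stand-in.

```python
def weighted_l1(pred, target, weight, avg_factor):
    """Sum of weighted absolute errors divided by avg_factor (assumed form)."""
    total = sum(w * abs(p - t) for p, t, w in zip(pred, target, weight))
    return total / avg_factor


# One object at one location, with two regression channels (w, h).
num_objects = 1
wh_pred = [10.0, 6.0]
wh_target = [12.0, 5.0]
weight = [1.0, 1.0]   # 1.0 only where an object centre exists

# Dividing by the object count gives the error summed over both channels.
loss_per_object = weighted_l1(wh_pred, wh_target, weight, num_objects)
# Dividing by 2 * object count gives the mean error per channel.
loss_per_element = weighted_l1(wh_pred, wh_target, weight, num_objects * 2)
```

Here the absolute errors are 2.0 and 1.0, so the per-object value is 3.0 and the per-element value is 1.5.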

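The mmdetection framework organizes this more cleanly, splitting the models into detectors, backbones, necks, dense_heads, roi_heads, and seg_heads. The backbones, necks, dense_heads, roi_heads, and seg_heads act as components: each group has its own base class that defines the interface, and each is extended with structures from classic papers that can be used directly.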