This is no different from before: to build a two-layer network, we simply initialize the parameters of both layers.

1. From-scratch implementation: we set up the parameters, the model, and the rest ourselves.

We reuse the training, prediction, and evaluation functions from the earlier sections; just copy them over.

If you don't have the ***d2l*** library, install it first:

```
!pip install d2l
```

```python
import tensorflow as tf
from d2l import tensorflow as d2l

# Load the dataset in mini-batches
batch_size = 256
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
```

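```python
# Initialize the model parameters of a two-layer network.
# Still using Fashion-MNIST; each layer computes y = X W + b.
# Input images are 28*28, i.e. 784 values once flattened; there are 10 classes,
# so the last layer outputs 10 values.
# The hidden layer has 256 units; picking a power of 2 for layer widths is common practice.
num_inputs, num_outputs, num_hiddens = 784, 10, 256

W1 = tf.Variable(tf.random.normal(shape=(num_inputs, num_hiddens), mean=0, stddev=0.01))
b1 = tf.Variable(tf.zeros(num_hiddens))
W2 = tf.Variable(tf.random.normal(shape=(num_hiddens, num_outputs), mean=0, stddev=0.01))
b2 = tf.Variable(tf.zeros(num_outputs))

# All trainable parameters
params = [W1, b1, W2, b2]
```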
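As a sanity check on these sizes, the number of trainable scalars can be worked out by hand: each layer contributes an (inputs × outputs) weight matrix plus one bias per output. A small plain-Python sketch (the `count` helper is hypothetical, only for illustration):

```python
# Shapes from the text: 784 inputs, 256 hidden units, 10 outputs
num_inputs, num_hiddens, num_outputs = 784, 256, 10

shapes = {
    "W1": (num_inputs, num_hiddens),   # 784 x 256
    "b1": (num_hiddens,),              # 256
    "W2": (num_hiddens, num_outputs),  # 256 x 10
    "b2": (num_outputs,),              # 10
}

def count(shape):
    """Number of scalars in a tensor with the given shape."""
    n = 1
    for d in shape:
        n *= d
    return n

total = sum(count(s) for s in shapes.values())
print(total)  # 203530
```

That is 200704 + 256 + 2560 + 10; almost all of it sits in `W1`.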

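```python
# Without an activation function, a multilayer network collapses into a single linear layer.
# The activation adds nonlinearity to the network; here we use ReLU,
# which typically follows fully connected or convolutional layers.
def relu(X):
    return tf.math.maximum(X, 0)
```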
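Why the activation matters: two stacked linear maps are themselves one linear map, since (X W1 + b1) W2 + b2 = X (W1 W2) + (b1 W2 + b2). A NumPy sketch (NumPy used in place of TensorFlow just to keep it light; the random data is made up) that checks this and shows ReLU breaking the equivalence:

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 784))                      # 5 fake flattened images
W1, b1 = rng.normal(0, 0.01, (784, 256)), rng.normal(size=256)
W2, b2 = rng.normal(0, 0.01, (256, 10)), rng.normal(size=10)

# Two stacked linear layers with no activation in between
two_linear = (X @ W1 + b1) @ W2 + b2

# The same map written as ONE linear layer: W = W1 W2, b = b1 W2 + b2
W, b = W1 @ W2, b1 @ W2 + b2
one_linear = X @ W + b

# Put ReLU between the layers and the collapse no longer holds
with_relu = np.maximum(X @ W1 + b1, 0) @ W2 + b2

print(np.allclose(two_linear, one_linear))  # True
print(np.allclose(with_relu, one_linear))   # False
```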

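```python
# Build the model: a two-layer network
def net(X):
    X = tf.reshape(X, (-1, num_inputs))  # flatten each 28x28 image into a 784-vector
    H = relu(tf.matmul(X, W1) + b1)      # hidden layer
    return tf.matmul(H, W2) + b2         # output layer: raw scores (logits)
```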
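To see how the shapes flow through `net`, here is the same forward pass as a NumPy sketch. It assumes the batch comes in as (batch, 28, 28, 1), which is how the d2l loader is expected to shape images; the random "images" are stand-ins for Fashion-MNIST.

```python
import numpy as np

num_inputs, num_outputs, num_hiddens = 784, 10, 256
rng = np.random.default_rng(0)
W1 = rng.normal(0, 0.01, size=(num_inputs, num_hiddens))
b1 = np.zeros(num_hiddens)
W2 = rng.normal(0, 0.01, size=(num_hiddens, num_outputs))
b2 = np.zeros(num_outputs)

def relu(X):
    return np.maximum(X, 0)

def net(X):
    X = X.reshape(-1, num_inputs)   # (batch, 28, 28, 1) -> (batch, 784)
    H = relu(X @ W1 + b1)           # hidden activations: (batch, 256)
    return H @ W2 + b2              # logits: (batch, 10)

batch = rng.random((4, 28, 28, 1))  # 4 fake grayscale images
logits = net(batch)
print(logits.shape)  # (4, 10)
```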

```python
# Loss function: cross-entropy. from_logits=True because net returns raw scores, not probabilities.
def loss(y_hat, y):
    return tf.losses.sparse_categorical_crossentropy(y, y_hat, from_logits=True)
```
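`from_logits=True` tells TensorFlow that `y_hat` holds raw scores, so the softmax is folded into the loss, and this function form returns one loss value per example. Roughly what that computes, as a NumPy sketch (the function name is hypothetical, and TensorFlow's internal implementation may differ in detail); subtracting the row maximum keeps `exp` from overflowing:

```python
import numpy as np

def sparse_ce_from_logits(logits, labels):
    # Stable log-softmax: shift each row by its max before exponentiating
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Negative log-probability of the true class, one value per example
    return -log_probs[np.arange(len(labels)), labels]

logits = np.array([[2.0, 1.0, 0.1], [0.5, 2.5, 0.0]])
labels = np.array([0, 1])
losses = sparse_ce_from_logits(logits, labels)
print(losses)
```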

The rest of the code is exactly the same as before:

```python
# Classification accuracy: count the correct predictions; accuracy = accuracy(y_hat, y) / len(y)
def accuracy(y_hat, y):
    if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
        y_hat = tf.argmax(y_hat, axis=1)
    cmp = tf.cast(y_hat, y.dtype) == y
    return float(tf.reduce_sum(tf.cast(cmp, y.dtype)))

def evaluate_accuracy(net, data_iter):
    metric = Accumulator(2)  # number of correct predictions, total number of predictions
    for X, y in data_iter:
        metric.add(accuracy(net(X), y), d2l.size(y))
    return metric[0] / metric[1]

class Accumulator:
    """Accumulate running sums over n variables."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]
```
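`evaluate_accuracy` sums correct counts and example counts across batches and divides once at the end, rather than averaging per-batch accuracies. That matters when the last batch is smaller (for example, 10,000 test images with `batch_size=256` would leave a final batch of 16 if the loader keeps the remainder). A NumPy sketch with two made-up batches of different sizes:

```python
import numpy as np

class Accumulator:
    """Running sums of n quantities (same idea as above)."""
    def __init__(self, n):
        self.data = [0.0] * n
    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]
    def __getitem__(self, idx):
        return self.data[idx]

def accuracy(y_hat, y):
    # Number of rows whose highest score matches the label
    return float((y_hat.argmax(axis=1) == y).sum())

batches = [
    (np.array([[0.1, 0.9], [0.8, 0.2]]), np.array([1, 1])),                 # 1 of 2 correct
    (np.array([[0.3, 0.7], [0.6, 0.4], [0.9, 0.1]]), np.array([1, 0, 0])),  # 3 of 3 correct
]
metric = Accumulator(2)  # (correct predictions, total predictions)
for y_hat, y in batches:
    metric.add(accuracy(y_hat, y), len(y))
print(metric[0] / metric[1])  # 0.8
```

Averaging the per-batch accuracies instead would give (0.5 + 1.0) / 2 = 0.75.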

```python
# Training
# updater is the function (or optimizer) that updates the model parameters
def train_epoch_ch3(net, train_iter, loss, updater):
    # Running sums: total training loss, number of correct predictions, number of examples
    metric = Accumulator(3)
    for X, y in train_iter:
        # Compute gradients and update the parameters
        with tf.GradientTape() as tape:
            y_hat = net(X)
            # Keras built-in losses take (labels, predictions), unlike the
            # implementations in this book, which take (predictions, labels),
            # e.g. the cross-entropy defined above
            if isinstance(loss, tf.keras.losses.Loss):
                l = loss(y, y_hat)
            else:
                l = loss(y_hat, y)
        if isinstance(updater, tf.keras.optimizers.Optimizer):
            params = net.trainable_variables
            grads = tape.gradient(l, params)
            updater.apply_gradients(zip(grads, params))
        else:
            updater(X.shape[0], tape.gradient(l, updater.params))
        # Keras losses return the mean loss over the batch by default
        l_sum = l * float(tf.size(y)) if isinstance(
            loss, tf.keras.losses.Loss) else tf.reduce_sum(l)
        metric.add(l_sum, accuracy(y_hat, y), tf.size(y))
    # Return the training loss and training accuracy
    return metric[0] / metric[2], metric[1] / metric[2]
```
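Why `l * float(tf.size(y))` for Keras losses but `tf.reduce_sum(l)` otherwise: a Keras `Loss` object reduces to the batch mean by default, while the hand-written `loss` returns one value per example. Both branches recover the batch *sum*, so dividing by the total example count at the end yields the true per-example average for the epoch. A NumPy sketch with made-up loss values:

```python
import numpy as np

per_example = np.array([0.2, 0.5, 1.1, 0.4])  # hypothetical per-example losses
n = per_example.size

keras_style = per_example.mean()   # what a Keras Loss object returns by default
custom_style = per_example         # what the hand-written loss returns

l_sum_keras = keras_style * float(n)   # undo the averaging
l_sum_custom = custom_style.sum()      # sum the per-example values
print(l_sum_keras, l_sum_custom)
```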

```python
def train_ch3(net, train_iter, test_iter, loss, num_epochs, updater):
    """Train the model (defined in Chapter 3)."""
    for epoch in range(num_epochs):
        train_metrics = train_epoch_ch3(net, train_iter, loss, updater)
        test_acc = evaluate_accuracy(net, test_iter)
        train_loss, train_acc = train_metrics
        print("Epoch %s/%s:" % (epoch, num_epochs) + " train_loss: " + str(train_loss)
              + " train_acc: " + str(train_acc) + " test_acc: " + str(test_acc))
```

```python
class Updater():
    """Update parameters with minibatch stochastic gradient descent."""
    def __init__(self, params, lr):
        self.params = params
        self.lr = lr

    def __call__(self, batch_size, grads):
        d2l.sgd(self.params, grads, self.lr, batch_size)
```
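The hand-written loss returns per-example values, so `tape.gradient` differentiates their *sum*; the update therefore divides by `batch_size` to step along the average gradient. A NumPy sketch of that rule on a one-weight toy problem (the `sgd` function here is an assumption written to mirror what `d2l.sgd` is expected to do, `param -= lr * grad / batch_size`; the data is made up):

```python
import numpy as np

def sgd(params, grads, lr, batch_size):
    # grads come from a SUMMED loss, so divide by batch_size to average them
    for param, grad in zip(params, grads):
        param -= lr * grad / batch_size   # in-place update of each NumPy array

# Toy data: y = 2x, squared loss 0.5 * (w*x - y)^2 summed over the batch
x = np.array([1.0, 2.0, 3.0])
y = np.array([2.0, 4.0, 6.0])
w = np.array([0.0])
lr, batch_size = 0.1, len(x)

for _ in range(50):
    grad_sum = np.array([np.sum((w[0] * x - y) * x)])  # d/dw of the summed loss
    sgd([w], [grad_sum], lr, batch_size)
print(w)  # close to [2.]
```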

```python
num_epochs, lr = 10, 0.1
updater = Updater([W1, W2, b1, b2], lr)
train_ch3(net, train_iter, test_iter, loss, num_epochs, updater)
```

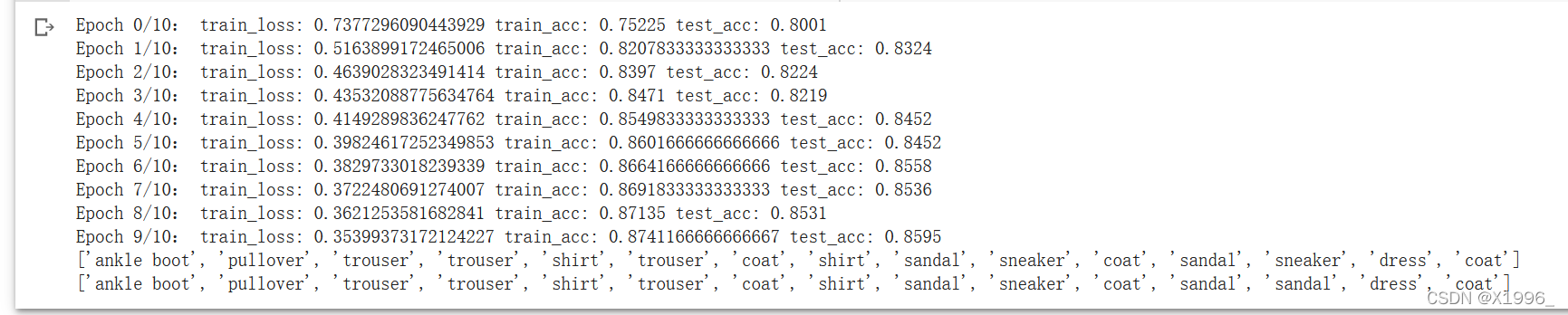

Training results are shown below. Because there is one more layer, training is a bit slower than before.

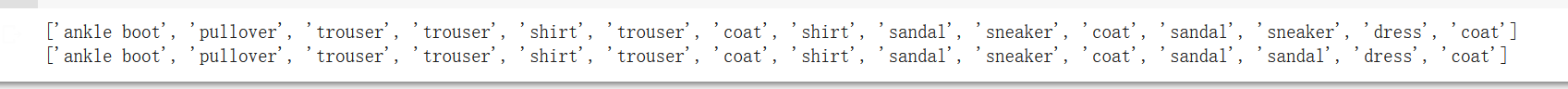

Test results:


```python
def predict_ch3(net, test_iter):
    """Predict labels (defined in Chapter 3)."""
    # Take only the first batch; with batch_size=256, X and y hold 256 examples
    for X, y in test_iter:
        break
    # True labels
    trues = d2l.get_fashion_mnist_labels(y)
    # Predicted labels
    preds = d2l.get_fashion_mnist_labels(tf.argmax(net(X), axis=1))
    # Print the first 15 results
    print(trues[0:15])
    print(preds[0:15])

predict_ch3(net, test_iter)
```
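Scanning two printed lists by eye makes it easy to miss an error. A small sketch that maps class indices to names and reports the mismatching positions; the `to_text` helper and the hand-made logits are hypothetical, and the label order follows Fashion-MNIST's class indices 0 to 9:

```python
import numpy as np

# Fashion-MNIST class names, indexed by class id 0..9
text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
               'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']

def to_text(indices):
    return [text_labels[int(i)] for i in indices]

y_true = np.array([9, 2, 1])
logits = np.zeros((3, 10))
logits[0, 9] = 3.0   # predicts ankle boot (correct)
logits[1, 6] = 3.0   # predicts shirt, but the true class is pullover
logits[2, 1] = 3.0   # predicts trouser (correct)

trues = to_text(y_true)
preds = to_text(logits.argmax(axis=1))
wrong = [i for i, (t, p) in enumerate(zip(trues, preds)) if t != p]
print(wrong)  # [1]
```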

The third-to-last prediction is still wrong.

2. The concise implementation uses predefined APIs to build the model and to set up the loss function, the optimizer, and so on.

```python
import tensorflow as tf
from d2l import tensorflow as d2l

# Load the dataset in mini-batches
batch_size = 256
num_epochs, lr = 10, 0.1
train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)
```

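```python
# Build the two-layer network; the Keras layers create and initialize their own parameters
net = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(),                      # (batch, 28, 28, 1) -> (batch, 784)
    tf.keras.layers.Dense(256, activation='relu'),  # hidden layer with ReLU
    tf.keras.layers.Dense(10)])                     # output layer: logits for 10 classes
```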

```python
# Loss: cross-entropy on logits; optimizer: minibatch SGD
loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
trainer = tf.keras.optimizers.SGD(learning_rate=lr)
```
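Here `trainer` is passed as the `updater`, so `train_epoch_ch3` takes the `apply_gradients` branch. Since the Keras loss averages over the batch, a plain SGD step on it (the default `SGD` has no momentum) should match the from-scratch rule `param -= lr * grad / batch_size` applied to the summed loss. A scalar NumPy sketch of that equivalence with made-up data:

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([1.5, 3.9, 6.2, 8.1])
w, lr = 0.5, 0.1
n = len(x)

# Gradient of the MEAN loss 0.5 * mean((w*x - y)^2): Keras-style mean reduction
grad_mean = np.mean((w * x - y) * x)
# Gradient of the SUMMED loss: what the hand-written loss gives the tape
grad_sum = np.sum((w * x - y) * x)

w_keras = w - lr * grad_mean        # plain SGD step on the mean loss
w_scratch = w - lr * grad_sum / n   # from-scratch Updater rule
print(w_keras, w_scratch)
```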

```python
# Classification accuracy: count the correct predictions; accuracy = accuracy(y_hat, y) / len(y)
def accuracy(y_hat, y):
    if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
        y_hat = tf.argmax(y_hat, axis=1)
    cmp = tf.cast(y_hat, y.dtype) == y
    return float(tf.reduce_sum(tf.cast(cmp, y.dtype)))

def evaluate_accuracy(net, data_iter):
    metric = Accumulator(2)  # number of correct predictions, total number of predictions
    for X, y in data_iter:
        metric.add(accuracy(net(X), y), d2l.size(y))
    return metric[0] / metric[1]

class Accumulator:
    """Accumulate running sums over n variables."""
    def __init__(self, n):
        self.data = [0.0] * n

    def add(self, *args):
        self.data = [a + float(b) for a, b in zip(self.data, args)]

    def reset(self):
        self.data = [0.0] * len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]
```

```python
# Training
# updater is the function (or optimizer) that updates the model parameters
def train_epoch_ch3(net, train_iter, loss, updater):
    # Running sums: total training loss, number of correct predictions, number of examples
    metric = Accumulator(3)
    for X, y in train_iter:
        # Compute gradients and update the parameters
        with tf.GradientTape() as tape:
            y_hat = net(X)
            # Keras built-in losses take (labels, predictions), unlike the
            # implementations in this book, which take (predictions, labels),
            # e.g. the cross-entropy implemented earlier
            if isinstance(loss, tf.keras.losses.Loss):
                l = loss(y, y_hat)
            else:
                l = loss(y_hat, y)
        if isinstance(updater, tf.keras.optimizers.Optimizer):
            params = net.trainable_variables
            grads = tape.gradient(l, params)
            updater.apply_gradients(zip(grads, params))
        else:
            updater(X.shape[0], tape.gradient(l, updater.params))
        # Keras losses return the mean loss over the batch by default
        l_sum = l * float(tf.size(y)) if isinstance(
            loss, tf.keras.losses.Loss) else tf.reduce_sum(l)
        metric.add(l_sum, accuracy(y_hat, y), tf.size(y))
    # Return the training loss and training accuracy
    return metric[0] / metric[2], metric[1] / metric[2]
```

```python
def train_ch3(net, train_iter, test_iter, loss, num_epochs, updater):
    """Train the model (defined in Chapter 3)."""
    for epoch in range(num_epochs):
        train_metrics = train_epoch_ch3(net, train_iter, loss, updater)
        test_acc = evaluate_accuracy(net, test_iter)
        train_loss, train_acc = train_metrics
        print("Epoch %s/%s:" % (epoch, num_epochs) + " train_loss: " + str(train_loss)
              + " train_acc: " + str(train_acc) + " test_acc: " + str(test_acc))

train_ch3(net, train_iter, test_iter, loss, num_epochs, trainer)
```

```python
def predict_ch3(net, test_iter):
    """Predict labels (defined in Chapter 3)."""
    # Take only the first batch; with batch_size=256, X and y hold 256 examples
    for X, y in test_iter:
        break
    # True labels
    trues = d2l.get_fashion_mnist_labels(y)
    # Predicted labels
    preds = d2l.get_fashion_mnist_labels(tf.argmax(net(X), axis=1))
    # Print the first 15 results
    print(trues[0:15])
    print(preds[0:15])

predict_ch3(net, test_iter)
```